The digital content creation landscape is undergoing a notable transformation, and the introduction of Sora, OpenAI's pioneering text-to-video model, marks a major step forward on this journey. This generative model redefines video generation, delivering capabilities that promise to transform the way we create and interact with visual content. Building on advances in the DALL·E and GPT models, Sora shows the potential of AI to simulate the real world with striking precision and creativity.

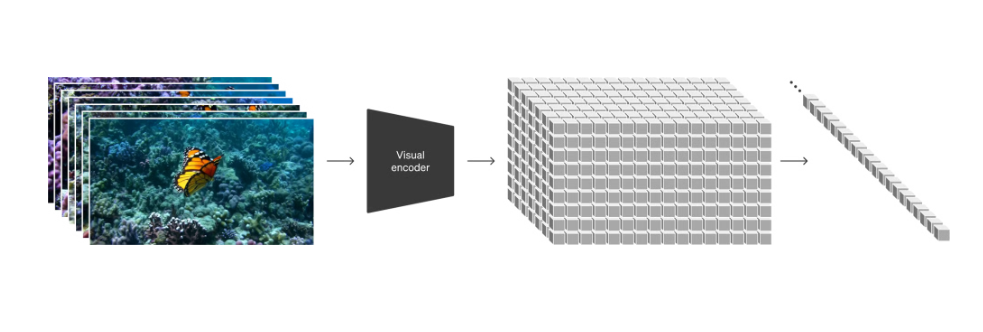

The core of Sora lies in its ability to generate videos from a starting point that resembles static noise, transforming it into clear and coherent visual narratives over many steps. This process is not just about creating videos from scratch; Sora can also extend existing videos to make them longer, or animate still images into dynamic scenes. The model's architecture, built on a transformer foundation similar to that of the GPT models, allows it to scale performance in a way not previously seen in video generation.
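The idea of starting from noise and refining it over many steps can be illustrated with a toy loop. This is only a sketch under strong assumptions, not Sora's method: a real diffusion model uses a trained network to predict the clean signal (or the noise), whereas the hypothetical `toy_denoise` function below cheats by using a known `target` as its "prediction". The function name, the `target` list, and the step schedule are all illustrative.

```python
import random


def toy_denoise(target, steps=50, seed=0):
    """Refine Gaussian noise toward `target` over `steps` iterations.

    Assumption: the known `target` stands in for the output of a learned
    denoiser. This is a toy, not an actual diffusion sampler.
    """
    rng = random.Random(seed)
    # Start from pure noise, one sample per element of the target signal.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    errors = []
    for step in range(steps):
        remaining = steps - step
        # Close 1/remaining of the gap to the predicted clean signal,
        # so the final step (remaining == 1) lands on the prediction.
        x = [xi + (ti - xi) / remaining for xi, ti in zip(x, target)]
        # Track the largest remaining deviation after this step.
        errors.append(max(abs(xi - ti) for xi, ti in zip(x, target)))
    return x, errors
```

Calling `toy_denoise([0.0, 0.5, 1.0, -1.0], steps=20)` returns the refined signal together with a per-step error list that shrinks toward zero, mirroring how the noise is removed gradually rather than in a single pass.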

What sets Sora apart is its use of space-time patches: small units of data that represent videos and images. This approach mirrors the use of tokens in language models such as GPT, allowing the model to handle visual data of different durations, resolutions, and aspect ratios. By converting videos into a sequence of these patches, Sora can train on diverse visual content, from short clips to minute-long high-definition videos, without the limitations of traditional models.
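To make the patch idea concrete, the sketch below counts how many space-time patches a clip would yield and lists where each patch starts. The patch sizes (4 frames by 16×16 pixels) and both function names are assumptions chosen for illustration; the article does not state Sora's actual patch dimensions, and this sketch works on raw frame dimensions for simplicity.

```python
from math import ceil


def count_spacetime_patches(frames, height, width,
                            patch_t=4, patch_h=16, patch_w=16):
    """Number of patches needed to cover a clip (edge patches are padded)."""
    return (ceil(frames / patch_t)
            * ceil(height / patch_h)
            * ceil(width / patch_w))


def spacetime_patch_origins(frames, height, width,
                            patch_t=4, patch_h=16, patch_w=16):
    """(t, y, x) start coordinate of every patch, in sequence order."""
    return [(t, y, x)
            for t in range(0, frames, patch_t)
            for y in range(0, height, patch_h)
            for x in range(0, width, patch_w)]


# Widescreen and vertical clips of the same size give equally long sequences.
wide = count_spacetime_patches(frames=16, height=1080, width=1920)   # 32640
tall = count_spacetime_patches(frames=16, height=1920, width=1080)   # 32640
# A still image is simply a one-frame video.
still = count_spacetime_patches(frames=1, height=2048, width=2048)   # 16384
```

Because every input reduces to a flat sequence of patches, clips with different durations, resolutions, and aspect ratios can share one model without being cropped or resized to a fixed shape first.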

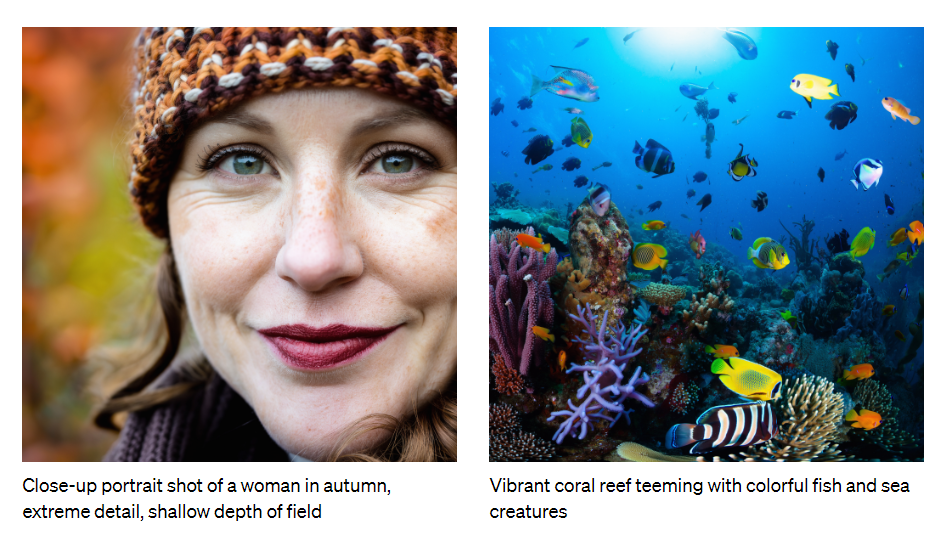

Sora's capabilities go far beyond simple video generation. The model can animate images in notable detail, extend videos, and even fill in missing frames. Its application of the recaptioning technique, first introduced in DALL·E 3, enables the generation of videos that closely follow the user's instructions, providing high fidelity and adherence to the creative intent.

The implications of Sora's technology are immense. Content creators can now produce videos tailored to specific aspect ratios and resolutions, catering to various platforms without compromising quality. The model's understanding of framing and composition, enhanced by training on videos in their native aspect ratios, results in visually engaging content that captures the essence of the creator's vision.

Sora's capabilities represent a significant advancement, delivering nuanced, dynamic, high-fidelity video generation. The following key points highlight Sora's performance:

- High-quality video generation: Sora can generate videos of remarkable quality, taking inputs that resemble static noise and transforming them into clear, detailed, and coherent videos. This process removes noise over many steps to reveal the final video, which can be up to one minute long in high definition.

- Versatility in content creation: Sora can generate images of variable sizes, up to an impressive 2048×2048 resolution. It can also create videos in different aspect ratios, including widescreen formats such as 1920×1080, vertical formats such as 1080×1920, and everything in between.

- Advanced animation capabilities: Sora can animate still images, bringing them to life with impressive attention to detail. This capability extends to creating seamlessly looping videos and extending videos forward or backward in time, showing the model's ability to understand and manipulate temporal dynamics.

- Consistency and coherence: One of Sora's most notable features is its ability to maintain subject consistency and temporal coherence, even when subjects are temporarily out of sight. This is achieved by giving the model foresight of many frames at once, so that characters and objects remain consistent throughout the video.

- Simulation of real-world dynamics: Sora exhibits emergent capabilities in simulating aspects of the real and digital worlds, including 3D consistency, object permanence, and interactions that affect the state of the world.

- Scalability: By leveraging a transformer architecture, Sora demonstrates superior scaling performance, generating increasingly higher-quality videos as training compute increases.

- Fidelity to text and image prompts: By applying the DALL·E 3 recaptioning technique, Sora follows the user's textual instructions with high fidelity, allowing precise control over the generated content. Additionally, the model can create videos based on existing images or videos, showing its ability to understand and extend the visual context provided.

- Emergent properties: Sora has shown several emergent properties, such as the ability to simulate actions with real-world effects (e.g., a painter adding strokes to a canvas) and render digital environments (e.g., video game simulations). These properties highlight the potential of the model to create complex and interactive scenes.

Despite its impressive capabilities, Sora, like any advanced model, has limitations, including challenges in modeling certain physical interactions accurately and maintaining consistency over long periods. However, the model's current performance and possibilities for future improvements make it an important milestone in creating highly capable simulators of the physical and digital worlds.

Sora is not just a tool for creating captivating videos; it represents a fundamental step towards achieving AGI. By simulating aspects of the physical and digital worlds, including 3D consistency, long-range coherence, and even simple interactions that affect the state of the world, Sora shows the potential of AI to understand and recreate complex real-world dynamics.

Sora is at the forefront of AI-powered video generation and offers a vision of the future of content creation. With its ability to generate, extend, and animate videos and images, Sora enhances the creative process and paves the way for developing more sophisticated reality simulators. As we continue to explore the capabilities of models like Sora, we get closer to unlocking the full potential of AI to create and understand the world around us.


Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and I want to create new products that make a difference.
