Language models are titans that leverage the vast expanse of human language to power many applications. These models have revolutionized the way machines understand and generate text, enabling translation, content creation, and advances in conversational AI. Their enormous size is both the source of their prowess and a formidable challenge. The computational weight required to operate these behemoths restricts their usefulness to those with access to significant resources. It also raises concerns about their environmental footprint, given the substantial energy consumption and associated carbon emissions.

The key to improving the efficiency of language models is striking a delicate balance between model size and performance. Earlier models have been engineering marvels, capable of understanding and generating human-like text. However, their operational demands have made them less accessible and raised questions about their long-term viability and environmental impact. This tension has prompted researchers to develop techniques aimed at slimming these models down without diluting their capabilities.

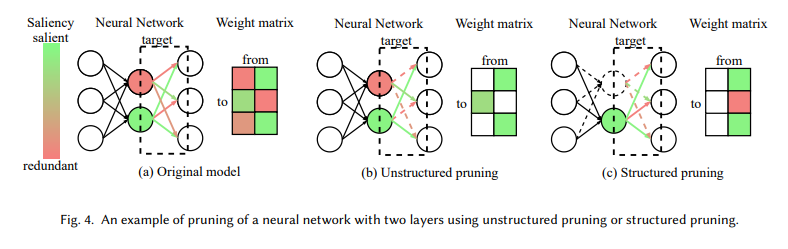

Pruning and quantization emerge as key techniques in this effort. Pruning involves identifying and removing parts of the model that contribute little to its performance. This surgical approach reduces not only the size of the model but also its complexity, leading to gains in efficiency. Quantization lowers the numerical precision used to store the model's values, compressing its size while maintaining its essential characteristics. Together, these techniques form a powerful toolkit for building more manageable and environmentally friendly language models.
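To make the two ideas concrete, here is a minimal sketch in plain Python. It is not taken from the survey: it assumes magnitude-based pruning (dropping the weights with the smallest absolute value) and symmetric 8-bit quantization, two simple and widely used variants, and applies them to a toy list of weights. The function names `magnitude_prune`, `quantize_int8`, and `dequantize` are hypothetical and chosen only for illustration.

```python
import random


def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Indices sorted by absolute value; the first k are the least important
    # under the (assumed) magnitude criterion.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the 8-bit range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the integers back to approximate floating-point weights."""
    return [v * scale for v in q]


random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(16)]  # toy "layer"

pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)

num_pruned = sum(1 for w in pruned if w == 0.0)
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
print(num_pruned, "of", len(pruned), "weights pruned")
print("max round-trip error:", max_error)
```

In this sketch each surviving weight is stored as a small integer plus one shared `scale`, so the round-trip error per weight is bounded by about half a quantization step. Real systems typically prune structured groups (heads, neurons, layers) and quantize per channel or per group, often with calibration data; those refinements are outside what this toy example shows.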

The survey, conducted by researchers at Seoul National University, examines these optimization techniques in depth, covering methods that range from high-cost, high-accuracy approaches to low-cost compression algorithms. The latter are particularly noteworthy and offer hope of making large language models more accessible. By significantly reducing the size and computational demands of these models, low-cost compression algorithms promise to democratize access to advanced AI capabilities. The survey analyzes and compares these methods based on their potential to reshape the landscape of language model optimization.

One notable finding of the study is the surprising effectiveness of low-cost compression algorithms in improving model efficiency. These previously underexplored methods have shown great promise in reducing the footprint of large language models without a corresponding drop in performance. The study's in-depth analysis of these techniques illuminates their unique contributions and underscores their potential as a focal point for future research. By highlighting the advantages and limitations of different approaches, the survey offers valuable insights into the path forward for optimizing language models.

The implications of this research are profound and extend far beyond the immediate benefits of reduced model size and increased efficiency. By paving the way toward more accessible and sustainable language models, these optimization techniques have the potential to catalyze new innovations in AI. They promise a future in which advanced language processing capabilities are available to a broader range of users, fostering inclusion and driving progress in diverse applications.

In summary, the path toward optimizing language models is marked by a relentless search for balance between size and performance, and between accessibility and capability. This research calls for continued focus on developing compression techniques that can unlock the full potential of language models. As we stand on the edge of this new frontier, the possibilities are as vast as the digital universe. The search for more efficient, accessible, and sustainable language models is both a technical challenge and a gateway to a future in which AI is woven into our daily lives, improving our capabilities and enriching our understanding of the world.

Check out the paper. All credit for this research goes to the researchers of this project.


Sana Hassan, a consulting intern at Marktechpost and a dual degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.
