In an era where digital privacy has become paramount, the ability of artificial intelligence (ai) systems to forget specific data when requested is not just a technical challenge but a social imperative. Researchers have embarked on an innovative journey to address this problem, particularly within image-to-image (I2I) generative models. These models, known for their prowess in creating detailed images from given inputs, have presented unique challenges for data removal, primarily due to their deep learning nature, which inherently remembers training data.

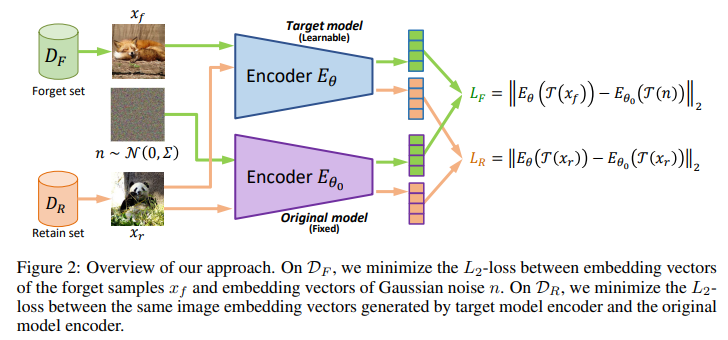

The crux of the research lies in the development of a machine unlearning framework designed specifically for I2I generative models. Unlike previous attempts focused on classification tasks, this framework aims to efficiently remove unwanted data (called forgotten samples) while preserving the quality and integrity of the desired data or retaining samples. This effort is not trivial; Generative models, by design, excel at memorizing and reproducing input data, making selective forgetting a complex task.

Researchers at the University of Texas at Austin and JPMorgan proposed an algorithm based on a unique optimization problem to address this problem. Through theoretical analysis, they established a solution that effectively removes forgotten samples with minimal impact on retained samples. This balance is crucial to comply with privacy regulations without sacrificing overall model performance. The effectiveness of the algorithm was demonstrated through rigorous empirical studies on two major datasets, ImageNet1K and Places-365, showing its ability to comply with data retention policies without requiring direct access to retained samples.

This pioneering work marks a significant advance in machine unlearning of generative models. It offers a viable solution to a problem that has as much to do with ethics and legality as it does with technology. The framework's ability to efficiently delete specific data sets from memory without complete model retraining represents a major step forward in the development of privacy-compliant ai systems. By ensuring that the integrity of retained data remains intact while removing information from forgotten samples, the research provides a solid foundation for the responsible use and management of ai technologies.

At its core, the research conducted by the team at the University of Texas at Austin and JPMorgan Chase is a testament to the changing landscape of ai, where technological innovation meets growing demands for privacy and data protection. The contributions of the study can be summarized as follows:

- He pioneered a framework for machine unlearning within I2I generative models, addressing a gap in the current research landscape.

- Through a novel algorithm, it achieves the dual goals of preserving data integrity and completely eliminating forgotten samples, balancing performance with privacy compliance.

- Empirical validation of the research on large-scale data sets confirms the effectiveness of the framework, setting a new standard for privacy-aware ai development.

As ai grows, the need for models that respect user privacy and comply with legal standards has never been more critical. This research not only addresses this need, but also opens new avenues for future exploration in the area of machine unlearning, marking a significant step towards the development of powerful and privacy-aware ai technologies.

Review the Paper and GitHub. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on Twitter and Google news. Join our 36k+ ML SubReddit, 41k+ Facebook community, Discord channeland LinkedIn Grabove.

If you like our work, you will love our Newsletter..

Don't forget to join our Telegram channel

![]()

Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a double degree from the Indian Institute of technology, Kharagpur. I am passionate about technology and I want to create new products that make a difference.

<!– ai CONTENT END 2 –>