Improving how users discover new content is critical to increasing user engagement and satisfaction on media platforms. Keyword search alone struggles to capture semantics and user intent, so results often lack relevant context; for example, when a user searches for date-night or Christmas-themed movies. This can lead to lower retention rates if users can't reliably find the content they're looking for. Large language models (LLMs), however, offer an opportunity to solve these semantic and user intent challenges. By combining embeddings that capture semantics with a technique called Retrieval Augmented Generation (RAG), you can generate more relevant responses based on the context retrieved from your own data sources.

In this post, we show you how to securely build a movie chatbot by implementing RAG with your own data using Knowledge Bases for Amazon Bedrock. We use the IMDb and Box Office Mojo dataset to simulate a catalog for media and entertainment customers and show how you can create your own RAG solution in just a few steps.

Solution Overview

The licensable IMDb and Box Office Mojo Movies/TV/OTT data package provides a wide range of entertainment metadata, including more than 1.6 billion user ratings; credits for more than 13 million cast and crew members; 10 million movie, television, and entertainment titles; and global box office reporting data from more than 60 countries. Many AWS media and entertainment customers license IMDb data through AWS Data Exchange to improve content discovery and increase customer engagement and retention.

Introduction to Amazon Bedrock Knowledge Bases

To equip an LLM with up-to-date proprietary information, organizations use RAG, a technique that retrieves data from enterprise data sources and enriches the prompt with that data to produce more relevant and accurate answers. Knowledge Bases for Amazon Bedrock provides a fully managed RAG capability that lets you customize LLM responses with contextual, business-relevant data. Knowledge bases automate the end-to-end RAG workflow, including ingestion, retrieval, prompt augmentation, and citations, eliminating the need to write custom code to integrate data sources and manage queries. Knowledge Bases for Amazon Bedrock also supports multi-turn conversations, so the LLM can answer complex user queries accurately.
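To make the retrieval and prompt augmentation steps concrete, here is a minimal, self-contained sketch of the idea. It is not how Knowledge Bases for Amazon Bedrock is implemented: the `embed` function is a toy bag-of-words stand-in for a real embedding model (so it matches shared words, not true semantics), and the documents, function names, and prompt wording are all hypothetical.

```python
import math
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def augment_prompt(query, passages):
    """Build the prompt that would be sent to the LLM (the augmentation step)."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# Hypothetical one-line movie documents.
DOCS = [
    "Home Alone is a Christmas comedy about a boy defending his house.",
    "Heat is a crime thriller about a detective chasing a thief.",
    "Elf is a Christmas comedy about a human raised by elves.",
]

passages = retrieve("christmas comedy", DOCS, k=2)
prompt = augment_prompt("christmas comedy", passages)
```

In a managed setup, the embedding model, vector store, and prompt template replace these toy functions, but the flow (embed, retrieve the closest passages, place them in the prompt) is the same.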

We use the following services as part of this solution:

We go through the following high-level steps:

- Preprocess IMDb data to create documents from each movie record and upload the data to an Amazon Simple Storage Service (Amazon S3) bucket.

- Create a knowledge base.

- Synchronize your knowledge base with your data source.

- Use the knowledge base to answer semantic queries about the movie catalog.

Prerequisites

The IMDb data used in this post requires a commercial content license and a paid subscription to the IMDb and Box Office Mojo Movies/TV/OTT licensing package on AWS Data Exchange. To inquire about a license and access sample data, visit developer.imdb.com. To access the dataset, see Power Recommendation and Search Using a Knowledge Graph from IMDb – Part 1 and follow the instructions in the Access the IMDb data section.

Preprocess IMDb data

Before creating a knowledge base, we need to preprocess the IMDb dataset into text files and upload them to an S3 bucket. In this post, we simulate a customer catalog using the IMDb dataset. We took 10,000 popular movies from the IMDb dataset for the catalog and created the dataset.

Use the following notebook to create the dataset with additional information such as the names of actors, directors, and producers. We use the following code to create a single file per movie, with all of the information stored as unstructured text that LLMs can understand:
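As a minimal sketch of this step (not the notebook's actual code), the following assumes each movie record is a Python dictionary. The field names (`id`, `title`, `year`, `genres`, `rating`, `directors`, `producers`, `actors`, `plot`) are hypothetical and would need to be mapped to the columns in your licensed IMDb data.

```python
from pathlib import Path

def movie_to_text(movie):
    """Render one movie record as plain text that an LLM can read."""
    def names(key):
        # Join list-valued fields; fall back to "unknown" when missing or empty.
        return ", ".join(movie.get(key, [])) or "unknown"

    return "\n".join([
        f"Title: {movie['title']}",
        f"Year: {movie.get('year', 'unknown')}",
        f"Genres: {names('genres')}",
        f"Rating: {movie.get('rating', 'unknown')}",
        f"Directors: {names('directors')}",
        f"Producers: {names('producers')}",
        f"Actors: {names('actors')}",
        f"Plot: {movie.get('plot', 'unknown')}",
    ])

def write_movie_files(movies, out_dir):
    """Write one .txt file per movie, named after its (hypothetical) id field."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for movie in movies:
        path = out / f"{movie['id']}.txt"
        path.write_text(movie_to_text(movie), encoding="utf-8")
        paths.append(path)
    return paths
```

Writing one file per movie keeps each document small and self-contained, which matters later when the knowledge base is configured not to split documents further.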

Once you have the data in .txt format, you can upload it to Amazon S3 using the following command:
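One way to do the upload programmatically is sketched below with the boto3 S3 client's `upload_file` call. The function name, the local directory, and the bucket and prefix values are assumptions for illustration; the client is passed in as a parameter so the function can be exercised without AWS credentials.

```python
from pathlib import Path

def upload_text_files(s3_client, local_dir, bucket, prefix=""):
    """Upload every .txt file in local_dir to s3://bucket/prefix and return the keys."""
    uploaded = []
    for path in sorted(Path(local_dir).glob("*.txt")):
        key = f"{prefix}{path.name}"
        # upload_file(Filename, Bucket, Key) is the standard boto3 S3 client call.
        s3_client.upload_file(str(path), bucket, key)
        uploaded.append(key)
    return uploaded
```

For example, with a real client you might call `upload_text_files(boto3.client("s3"), "movies", "<your-bucket>", "imdb/")`, where the directory, bucket, and prefix are placeholders for your own values.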

Create the IMDb knowledge base

Complete the following steps to create your knowledge base:

- In the Amazon Bedrock console, choose Knowledge base in the navigation pane.

- Choose Create knowledge base.

- For Knowledge base name, enter imdb.

- For Knowledge base description, enter an optional description, such as Knowledge base for ingesting and storing IMDb data.

- For IAM permissions, select Create and use a new service role, and then enter a name for your new service role.

- Choose Next.

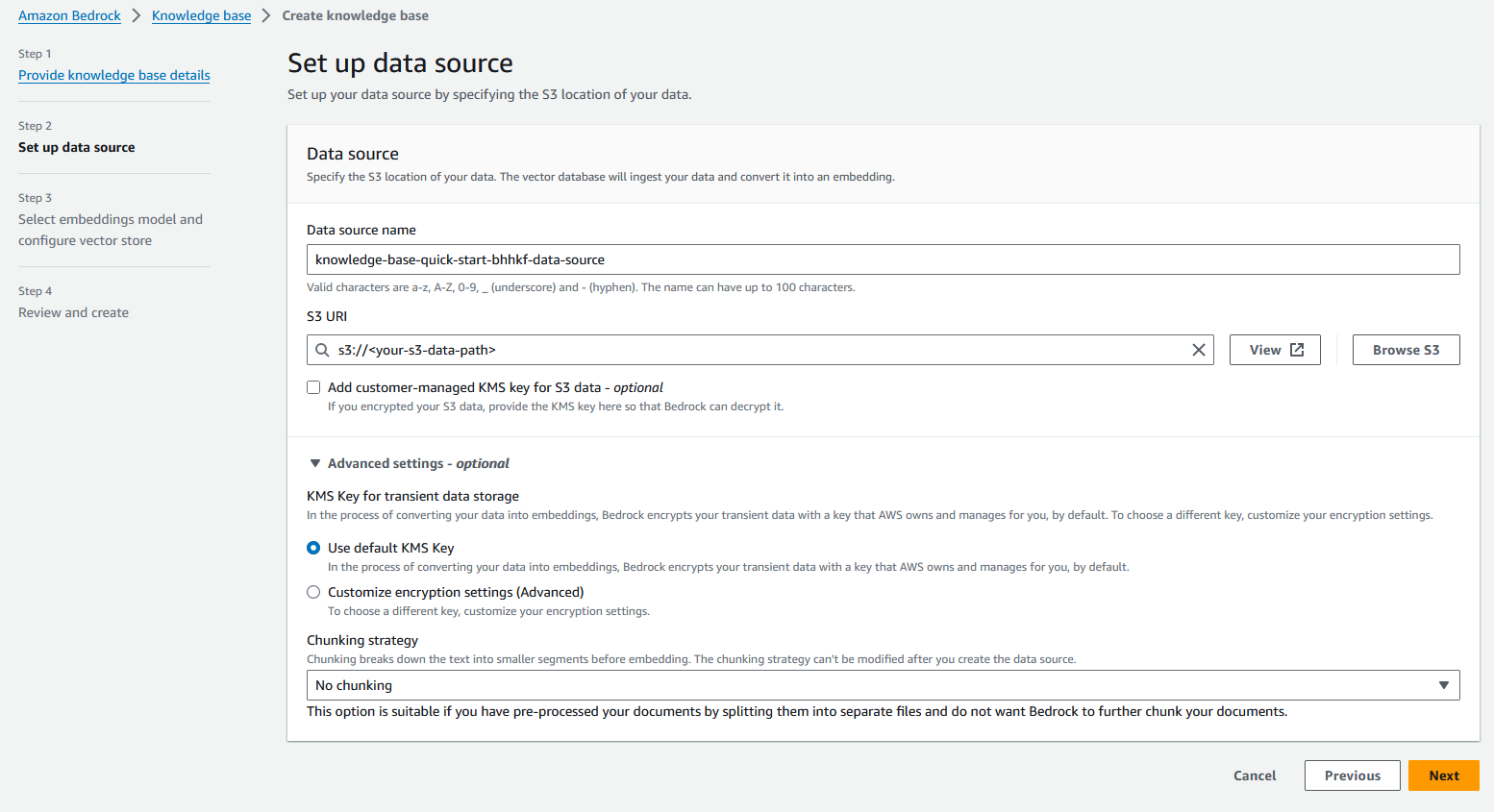

- For Data source name, enter imdb-s3.

- For S3 URI, enter the S3 URI you uploaded the data to.

- In the Advanced settings – optional section, for Chunking strategy, choose No chunking.

- Choose Next.

Knowledge bases let you chunk your documents into smaller segments, which makes large documents easier to process. In our case, the data is already split into small documents (one per movie), so no further chunking is needed.

- In the Vector database section, select Quick create a new vector store.

Amazon Bedrock automatically creates a fully managed OpenSearch Serverless vector search collection and configures the settings for embedding your data sources using your chosen Titan Embeddings G1 - Text embedding model.

![knowledge base vector store page](https://technicalterrence.com/wp-content/uploads/2024/01/1706735472_698_Build-a-Movie-Chatbot-for-TVOTT-Platforms-Using-Retrieval-Augmented.png)

- Choose Next.

- Review your settings and choose Create knowledge base.

Synchronize your data with the knowledge base

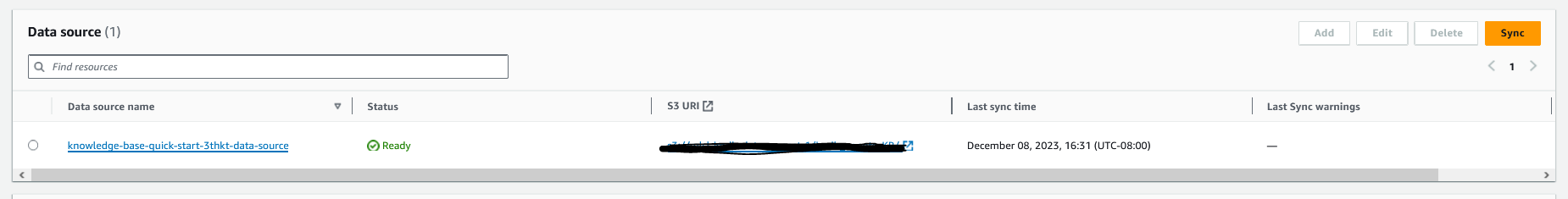

Now that you've created your knowledge base, you can sync it with your data.

- In the Amazon Bedrock console, navigate to your knowledge base.

- In the Data source section, choose Sync.

Once the data source is synchronized, you are ready to query the data.
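The same sync can also be triggered from code, because syncing a data source corresponds to starting an ingestion job. The following is a minimal sketch assuming a boto3 `bedrock-agent` client and placeholder knowledge base and data source IDs; the function name and polling interval are hypothetical choices.

```python
import time

def sync_data_source(agent_client, knowledge_base_id, data_source_id, poll_seconds=10):
    """Start an ingestion job and poll until it leaves the in-flight states."""
    job = agent_client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )["ingestionJob"]

    # Keep polling while the job is still starting or running.
    while job["status"] in ("STARTING", "IN_PROGRESS"):
        time.sleep(poll_seconds)
        job = agent_client.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id,
            dataSourceId=data_source_id,
            ingestionJobId=job["ingestionJobId"],
        )["ingestionJob"]
    return job["status"]
```

With a real client, this would be called as `sync_data_source(boto3.client("bedrock-agent"), "<knowledge-base-id>", "<data-source-id>")`. Check the returned status before querying, because a failed job means the documents were not indexed.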

Improve search using semantic results

Complete the following steps to test the solution and improve your search using semantic results:

- In the Amazon Bedrock console, navigate to your knowledge base.

- Select your knowledge base and choose Test knowledge base.

- Choose Select model, and then choose Anthropic Claude v2.1.

- Choose Apply.

You are now ready to query the data.

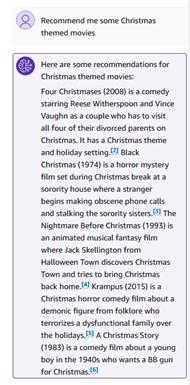

We can ask some semantic questions, like “Recommend me some Christmas-themed movies.”

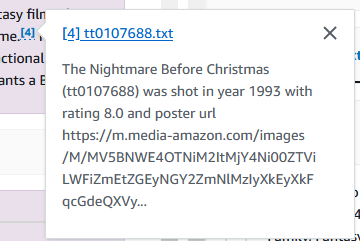

The responses from the knowledge base contain citations that you can explore to verify that the answers are correct and factual.

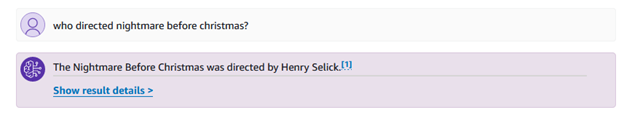

You can also drill down on any information you need about these movies. In the following example, we ask “Who directed The Nightmare Before Christmas?”

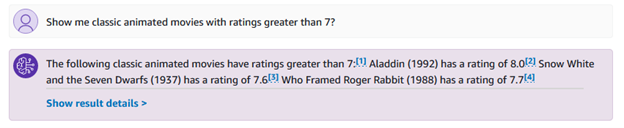

You can also ask more specific questions related to genres and ratings, such as “Show me classic animated movies with ratings greater than 7.”
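The same kinds of questions can be sent from an application instead of the console. The sketch below assumes a boto3 `bedrock-agent-runtime` client and its `retrieve_and_generate` call; the function name is hypothetical, and the knowledge base ID and model ARN are placeholders you would supply for your own account and Region.

```python
def ask_knowledge_base(runtime_client, knowledge_base_id, model_arn, question, session_id=None):
    """Query a knowledge base and return (answer text, cited S3 URIs, session ID)."""
    request = {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }
    # Passing a previous session ID continues a multi-turn conversation.
    if session_id:
        request["sessionId"] = session_id

    response = runtime_client.retrieve_and_generate(**request)

    # Collect the distinct source documents behind the citations.
    sources = []
    for citation in response.get("citations", []):
        for ref in citation.get("retrievedReferences", []):
            uri = ref.get("location", {}).get("s3Location", {}).get("uri")
            if uri and uri not in sources:
                sources.append(uri)
    return response["output"]["text"], sources, response.get("sessionId")
```

Returning the session ID lets a follow-up question such as “Who directed it?” be asked in the same conversation, and the list of source URIs gives you the same citation trail the console shows.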

Augment your knowledge base with agents

Agents for Amazon Bedrock help you automate complex tasks. Agents can break a user's query into smaller tasks and call custom APIs or knowledge bases to supplement the information needed to execute actions. With Agents for Amazon Bedrock, developers can integrate intelligent agents into their applications, accelerating the delivery of AI-powered applications and saving weeks of development time. With agents, you can augment your knowledge base by adding more functionality, such as Amazon Personalize recommendations for user-specific suggestions, or by performing actions such as filtering movies based on user needs.

Conclusion

In this post, we showed how to build a conversational movie chatbot with Amazon Bedrock in a few steps, delivering semantic search and conversational experiences based on your own data and the licensed IMDb and Box Office Mojo Movies/TV/OTT dataset. In the next post, we go through the process of adding more functionality to your solution using Agents for Amazon Bedrock. To get started with knowledge bases on Amazon Bedrock, see Knowledge Bases for Amazon Bedrock.

About the authors

Gaurav Rele is a Senior Data Scientist at the Generative AI Innovation Center, where he works with AWS customers across verticals to accelerate their use of generative AI and AWS cloud services to solve their business challenges.

Divya Bhargavi is a Senior Applied Scientist at the Generative AI Innovation Center, where she solves high-value business problems for AWS customers using generative AI methods. She works on image and video understanding and retrieval, large language models augmented with knowledge graphs, and personalized advertising use cases.

Suren Gunturu is a Data Scientist at the Generative AI Innovation Center, where he works with various AWS customers to solve high-value business problems. He specializes in building machine learning pipelines using large language models, primarily through Amazon Bedrock and other AWS cloud services.

Vidya Sagar Ravipati is a Science Manager at the Generative AI Innovation Center, where he leverages his extensive experience in large-scale distributed systems and his passion for machine learning to help AWS customers across industry verticals accelerate their adoption of AI and the cloud.
