Large language models (LLMs) have become increasingly essential in the burgeoning field of artificial intelligence, especially in data management. These models, which are based on advanced machine learning algorithms, have the potential to significantly speed up and improve data processing tasks. However, integrating LLMs into repetitive data generation processes is challenging, primarily due to their unpredictable nature and the possibility of significant output errors.

Operationalizing LLMs for large-scale data generation tasks is fraught with complexities. For example, in tasks such as generating personalized content based on user data, LLMs can perform well in some cases, but they also risk generating incorrect or inappropriate content. This inconsistency can lead to significant problems, particularly when LLM outputs are used in sensitive or critical applications.

The management of LLMs within data pipelines has largely relied on manual interventions and basic validation methods. Developers face substantial challenges in predicting all possible failure modes of LLMs. This difficulty leads to an over-reliance on basic frameworks that incorporate rudimentary assertions to filter out bad data. These assertions, while useful, are not exhaustive enough to detect all types of errors, leaving gaps in the data validation process.

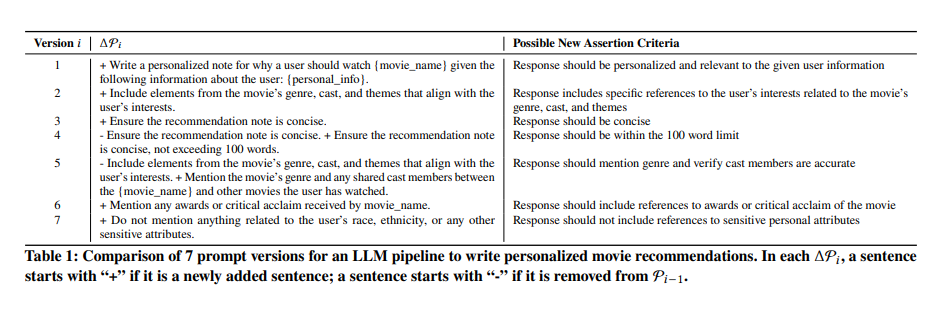

The introduction of Spade, a method for synthesizing assertions in LLM pipelines developed by researchers at UC Berkeley, HKUST, LangChain, and Columbia University, significantly advances this area. Spade addresses key LLM reliability and accuracy challenges by synthesizing and filtering assertions, with the aim of producing high-quality data across diverse applications. It works by analyzing the differences between consecutive versions of an LLM prompt, which often indicate specific failure modes of LLMs. Based on this analysis, Spade synthesizes Python functions as candidate assertions. These functions are then filtered to minimize redundancy and maximize accuracy, addressing the complexities of LLM-generated data.
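As a rough illustration of this idea, the sketch below computes the lines added between two prompt versions and pairs the added instruction with a hand-written check. The function names (`prompt_delta`, `within_word_limit`), the sample prompts, and the 50-word limit are all hypothetical; Spade synthesizes its candidate assertions automatically, whereas the check here is written by hand purely to show what one might look like.

```python
import difflib


def prompt_delta(old_prompt: str, new_prompt: str) -> list[str]:
    """Return the lines that new_prompt adds relative to old_prompt."""
    diff = difflib.ndiff(old_prompt.splitlines(), new_prompt.splitlines())
    # ndiff prefixes added lines with "+ "; strip the prefix to get the text.
    return [line[2:] for line in diff if line.startswith("+ ")]


def within_word_limit(response: str, limit: int = 50) -> bool:
    """Hypothetical candidate assertion: pass when the response is short enough."""
    return len(response.split()) <= limit


# Two consecutive versions of a prompt (illustrative data, not from the paper).
v1 = "Summarize the support ticket."
v2 = "Summarize the support ticket.\nKeep the summary under 50 words."

delta = prompt_delta(v1, v2)
print(delta)  # ['Keep the summary under 50 words.']

# The added instruction suggests a check on response length.
print(within_word_limit("The customer cannot log in after resetting a password."))
```

The added line signals that earlier responses were probably too long, which is the kind of failure mode a length assertion can then guard against.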

Spade's methodology involves generating candidate assertions based on prompt deltas: the differences between consecutive prompt versions. These deltas often indicate specific failure modes that LLMs may encounter. For example, an adjustment to a prompt to avoid complex language might call for an assertion that checks the complexity of the response. Once these candidate assertions are generated, they undergo a rigorous filtering process. This process aims to reduce redundancy, which often arises from repeated refinements to similar parts of a prompt, and to improve precision, particularly for assertions that involve complex LLM calls.
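The filtering step can be illustrated with a small sketch. It assumes a handful of responses labeled good or bad by hand, drops candidates that fail too many good responses, and then greedily keeps the fewest assertions that still catch every bad response. The greedy heuristic, the `select_assertions` name, the threshold, and the sample data are all assumptions made for illustration; the article does not specify how Spade performs this selection.

```python
from typing import Callable

Assertion = Callable[[str], bool]  # returns True when the response passes


def select_assertions(
    candidates: dict[str, Assertion],
    responses: list[str],
    is_good: list[bool],
    max_false_failure_rate: float = 0.25,
) -> list[str]:
    """Greedily pick a small set of assertions that catches all bad responses."""
    good = [r for r, g in zip(responses, is_good) if g]
    bad_idx = [i for i, g in enumerate(is_good) if not g]

    # For each sufficiently precise candidate, record which bad responses it catches.
    catches: dict[str, set[int]] = {}
    for name, fn in candidates.items():
        false_failures = sum(1 for r in good if not fn(r))
        if good and false_failures / len(good) > max_false_failure_rate:
            continue  # fails too many good responses
        catches[name] = {i for i in bad_idx if not fn(responses[i])}

    selected: list[str] = []
    uncovered = set(bad_idx)
    while uncovered:
        best = max(catches, key=lambda n: len(catches[n] & uncovered), default=None)
        if best is None or not (catches[best] & uncovered):
            break  # no remaining candidate catches anything new
        selected.append(best)
        uncovered -= catches[best]
    return selected


candidates = {
    "non_empty": lambda r: len(r.strip()) > 0,
    "short": lambda r: len(r.split()) <= 8,
    "at_most_8_words": lambda r: len(r.split()) < 9,  # redundant with "short"
    "no_apology": lambda r: "sorry" not in r.lower(),
}
responses = [
    "The cat sat on the mat.",                   # good
    "Sales rose last quarter.",                  # good
    "",                                          # bad: empty
    "Sorry, I cannot help with that request.",   # bad: refusal
    "This is a very long response that keeps going well past the limit.",  # bad
]
is_good = [True, True, False, False, False]

print(select_assertions(candidates, responses, is_good))
# ['non_empty', 'short', 'no_apology']
```

The redundant `at_most_8_words` check is dropped because it catches nothing that `short` has not already caught.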

In practical applications across several LLM pipelines, Spade has significantly reduced the number of assertions required and lowered the false failure rate. Compared to simpler baseline methods, it reduced the number of assertions by 14% and decreased false failures by 21%. These results highlight Spade's ability to improve the reliability and accuracy of LLM outputs in data generation tasks, making it a valuable tool in data management.
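To make the false failure metric concrete, here is a minimal sketch. It assumes that a false failure means an acceptable response being rejected by at least one assertion, and that the rate is measured over a set of responses known to be good. That definition, the `false_failure_rate` helper, and the sample data are assumptions for illustration; the article does not define the metric precisely.

```python
from typing import Callable

Assertion = Callable[[str], bool]  # returns True when the response passes


def false_failure_rate(assertions: list[Assertion], good_responses: list[str]) -> float:
    """Fraction of known-good responses rejected by at least one assertion."""
    if not good_responses:
        return 0.0
    rejected = sum(
        1 for r in good_responses if not all(check(r) for check in assertions)
    )
    return rejected / len(good_responses)


# Two illustrative assertions (hypothetical, not taken from the paper).
assertions = [
    lambda r: r.rstrip().endswith("."),  # response ends with a period
    lambda r: len(r.split()) <= 6,       # response has at most six words
]

# Responses a human reviewer judged acceptable.
good_responses = [
    "Paris is the capital.",
    "Water boils at 100 C.",
    "The meeting starts at noon on Friday",  # acceptable, but fails both checks
    "Two plus two is four.",
]

print(false_failure_rate(assertions, good_responses))  # 0.25
```

Under this definition, a lower rate means fewer acceptable outputs are discarded or needlessly regenerated.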

In summary, the key points of this research are:

- Spade represents a breakthrough in managing LLMs in data pipelines, addressing the unpredictability and potential for error in LLM outputs.

- It generates and filters assertions based on prompt deltas, aiming for minimal redundancy and maximum accuracy.

- The tool has significantly reduced the number of assertions required and the rate of false failures in various LLM pipelines.

- Its introduction is a testament to continued advances in AI, particularly in improving the efficiency and reliability of data generation and processing tasks.

This comprehensive overview of Spade underscores its importance in the changing landscape of artificial intelligence and data management. Spade ensures the generation of high-quality data by addressing the fundamental challenges associated with LLMs. It simplifies the operational complexities associated with these models, paving the way for their more effective and widespread use.

Check out the Paper. All credit for this research goes to the researchers of this project.


Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.
