The development of foundation models such as large language models (LLMs), vision transformers (ViTs), and multimodal models marks an important milestone in AI. These models, known for their versatility and adaptability, are reshaping the approach to AI applications. However, their growth is accompanied by a considerable increase in resource demand, making their development and deployment resource-intensive.

The main challenge in deploying these foundation models is their significant resource requirements. Training and maintaining models like LLaMA-2-70B requires immense computational power and energy, resulting in high costs and significant environmental impact. This resource-intensive nature restricts the ability to train and deploy these models to organizations with substantial computational resources.
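To give a rough sense of scale, the back-of-the-envelope sketch below estimates the memory needed just to hold a model's weights, along with an approximate training compute budget. It is not taken from the survey. The 70-billion parameter count follows from the model name, while the bytes-per-parameter table, the commonly used "6 × parameters × tokens" rule of thumb for training FLOPs, and the 2-trillion-token figure are assumptions made for illustration.

```python
# Back-of-the-envelope resource estimate for a large language model.
# All constants are illustrative assumptions, not figures from the survey.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory needed to store the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate training compute using the common 6 * N * D rule of thumb."""
    return 6 * num_params * num_tokens


if __name__ == "__main__":
    n_params = 70e9   # 70 billion parameters
    n_tokens = 2e12   # assumed 2 trillion training tokens
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gb(n_params, dtype):.0f} GB of weights")
    print(f"training compute: {training_flops(n_params, n_tokens):.2e} FLOPs")
```

Even in half precision, the weights alone occupy roughly 140 GB, which is more than a single commodity accelerator can hold. Optimizer state, gradients, and activations during training add to this, so the real footprint is considerably larger than this estimate.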

In response, significant research effort is being directed toward more resource-efficient strategies. These efforts encompass algorithm optimization, system-level innovations, and novel architectural designs. The goal is to minimize the resource footprint without compromising the models' performance and capabilities, which includes optimizing algorithmic efficiency, improving data management, and innovating on system architectures to reduce computational load.

The survey, conducted by researchers from Beijing University of Posts and Telecommunications, Peking University, and Tsinghua University, delves into the evolution of language foundation models, detailing their architectural developments and the downstream tasks they perform. It highlights the transformative impact of the Transformer architecture, attention mechanisms, and the encoder-decoder structure on language models. The survey also sheds light on speech foundation models, which can derive meaningful representations from raw audio signals, and their computational costs.
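The attention mechanism named in the paragraph above is one source of that computational cost. As a minimal sketch (not the survey's code), the pure-Python function below implements single-head scaled dot-product attention. The function name and the toy inputs are hypothetical. Every query is scored against every key, so the work grows quadratically with sequence length.

```python
import math


def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention over lists of equal-length float vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # One score per key: scoring every query against every key is O(n^2).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        # Numerically stable softmax over the scores.
        max_score = max(scores)
        exps = [math.exp(s - max_score) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted sum of the value vectors.
        dim = len(values[0])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)])
    return outputs


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy sequence of 3 tokens
    for row in scaled_dot_product_attention(x, x, x):
        print([round(v, 3) for v in row])
```

Doubling the number of tokens quadruples the number of query-key scores, which is one reason efficient attention variants are a recurring theme in resource-efficiency research.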

Vision foundation models are another area of focus. Encoder-only architectures such as ViT, DeiT, and SegFormer have significantly advanced the field of computer vision, demonstrating impressive results in image classification and segmentation. Despite their resource demands, these models have pushed the limits of self-supervised pre-training for vision.

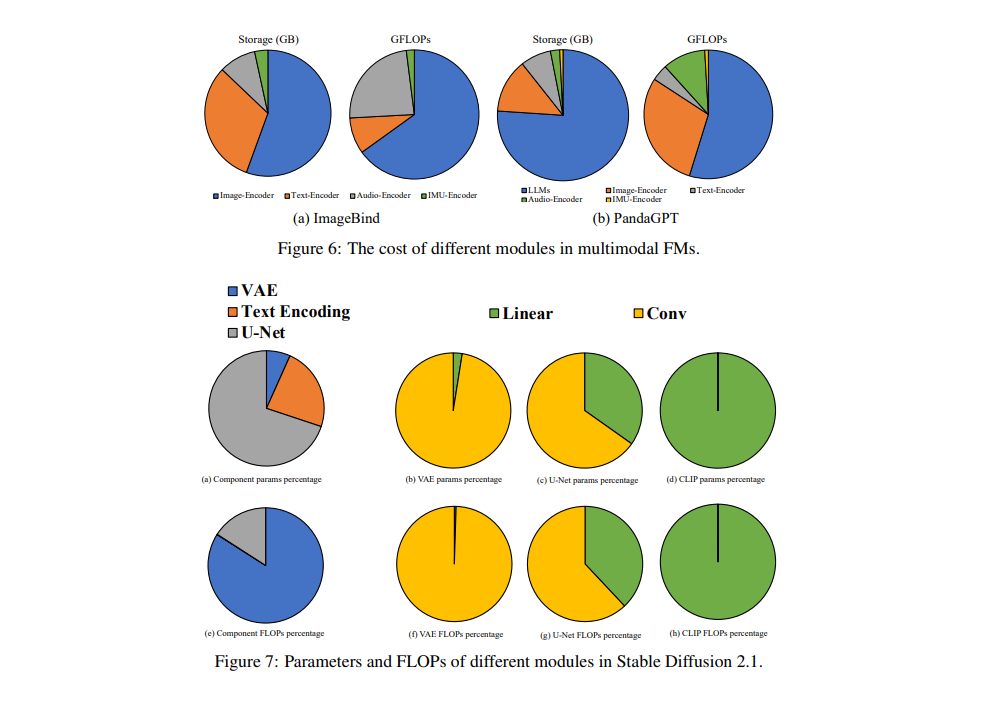

An area of growing interest is multimodal foundation models, which aim to encode data from different modalities into a unified latent space. These models typically use transformer encoders for data encoding or decoders for cross-modal generation. The survey analyzes key architectures, such as multi-encoder and encoder-decoder models, along with representative models for cross-modal generation and their costs.

The paper provides an in-depth look at the current state and future directions of resource-efficient algorithms and systems for foundation models. It offers valuable information on the strategies used to address the large resource footprint of these models and highlights the importance of continuous innovation in making foundation models more accessible and sustainable.

Key findings from the survey include:

- The evolution of foundation models is marked by growing demand for resources.

- Innovative strategies are being developed to improve the efficiency of these models.

- The goal is to minimize the resource footprint while maintaining performance.

- Efforts span algorithm optimization, data management, and system architecture innovation.

- The paper highlights the impact of these models in the domains of language, speech, and vision.

All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.
