To advance the development of superhuman agents, future models will need superior feedback to provide an effective training signal. Current methods typically derive reward models from human preferences, so the process is bounded by human performance. Relying on a fixed reward model also prevents the reward signal itself from improving during large language model (LLM) training. Overcoming these challenges is crucial for building agents whose capabilities surpass human performance.

Recent studies have shown that training on human preference data significantly improves LLMs' ability to follow instructions. Traditional reinforcement learning from human feedback (RLHF) learns a reward model from human preferences, freezes it, and then uses it to train the LLM with methods such as proximal policy optimization (PPO). An emerging alternative, direct preference optimization (DPO), skips the reward model training step and uses human preferences to train the LLM directly. However, both approaches are limited by the scale and quality of the available human preference data, and RLHF is further limited by the quality of the frozen reward model.
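
To make the DPO step more concrete, here is a minimal pure-Python sketch of the standard per-pair DPO loss. It assumes the summed token log-probabilities of the chosen and rejected responses have already been computed under both the policy being trained and a frozen reference model; the function names and the `beta=0.1` default are illustrative choices, not taken from the paper.

```python
import math
from typing import Iterable, Tuple


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # illustrative default; controls deviation from the reference
) -> float:
    """Per-pair DPO loss: -log sigmoid(beta * margin).

    The margin measures how much more the policy (relative to the reference
    model) prefers the chosen response over the rejected one.
    """
    margin = (policy_chosen_logp - ref_chosen_logp) - (
        policy_rejected_logp - ref_rejected_logp
    )
    # -log(sigmoid(z)) == softplus(-z), computed stably for large |z|
    return softplus(-beta * margin)


def mean_dpo_loss(
    pairs: Iterable[Tuple[float, float, float, float]], beta: float = 0.1
) -> float:
    """Average the DPO loss over a batch of log-probability tuples."""
    losses = [dpo_loss(*p, beta=beta) for p in pairs]
    return sum(losses) / len(losses)
```

When the policy and reference agree exactly, the margin is zero and the loss is log 2; raising the policy's log-probability of the chosen response relative to the reference pushes the loss below log 2. No separate reward model appears anywhere in the computation, which is the step DPO removes.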

Researchers from Meta and New York University have proposed a new approach called Self-Rewarding Language Models to overcome these obstacles. Instead of a frozen reward model, their process trains a self-improving reward model that is continually updated during LLM alignment. By integrating instruction following and reward modeling into a single system, the model generates and evaluates its own examples, refining both its instruction-following and reward-modeling skills.
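
Because the same model acts as its own judge, its textual evaluation has to be turned into a numeric reward. The sketch below shows one way to do this under stated assumptions: it assumes the judge ends its evaluation with a line of the form `Score: <points>` on a 0 to 5 scale, and that several judgments of the same response are averaged. The regex, scale, and helper names are hypothetical illustrations, not the authors' code.

```python
import re
from statistics import mean
from typing import Iterable, Optional

# Assumed output convention: the judge concludes with "Score: <points>".
SCORE_PATTERN = re.compile(r"Score:\s*([0-9]+(?:\.[0-9]+)?)")


def extract_score(judgment: str, max_score: float = 5.0) -> Optional[float]:
    """Return the last 'Score: N' value in a judgment, or None if absent/invalid."""
    matches = SCORE_PATTERN.findall(judgment)
    if not matches:
        return None  # judge did not follow the expected format
    score = float(matches[-1])
    if not 0.0 <= score <= max_score:
        return None  # out-of-range scores are treated as unusable
    return score


def reward_from_judgments(
    judgments: Iterable[str], max_score: float = 5.0
) -> Optional[float]:
    """Average the valid scores from several sampled judgments of one response."""
    scores = [s for s in (extract_score(j, max_score) for j in judgments) if s is not None]
    return mean(scores) if scores else None
```

For example, `reward_from_judgments(["... Score: 4", "... Score: 5", "unparseable"])` ignores the malformed judgment and averages the other two. Averaging several samples is one simple way to reduce the variance of a single noisy self-evaluation.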

Self-rewarding language models start with a pre-trained language model and a limited set of human-annotated data. The model is designed to excel simultaneously at two key skills: i) following instructions and ii) self-instruction creation, that is, generating and evaluating new instruction-following examples to add to its own training set. The model evaluates its own responses through LLM-as-a-Judge prompting, eliminating the need for an external reward model. The iterative self-alignment process involves generating new prompts, generating candidate responses, evaluating them, and updating the model using AI Feedback Training (AIFT). Unlike traditional fixed reward models, this approach improves both the model's instruction following and its reward-modeling ability over successive iterations.
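
The iterative loop described above can be sketched as follows. This is a simplified illustration, not the authors' implementation: it assumes several candidate responses are sampled per prompt, each is scored by the model acting as judge, and the highest- and lowest-scoring candidates form a (chosen, rejected) pair for DPO, with ties skipped because they carry no preference signal. The `generate`, `judge`, and `train_dpo` callables and the `n_candidates=4` default are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class PreferencePair:
    prompt: str
    chosen: str
    rejected: str


def build_preference_pairs(
    scored: Dict[str, List[Tuple[str, float]]]
) -> List[PreferencePair]:
    """Turn per-prompt (response, score) lists into best-vs-worst preference pairs."""
    pairs: List[PreferencePair] = []
    for prompt, candidates in scored.items():
        if len(candidates) < 2:
            continue  # need at least two responses to compare
        best = max(candidates, key=lambda c: c[1])
        worst = min(candidates, key=lambda c: c[1])
        if best[1] == worst[1]:
            continue  # judge could not distinguish the responses
        pairs.append(PreferencePair(prompt, best[0], worst[0]))
    return pairs


def self_rewarding_iteration(
    model: Any,
    prompts: Sequence[str],
    generate: Callable[[Any, str], str],       # hypothetical: sample one response
    judge: Callable[[Any, str, str], float],   # hypothetical: model scores a response
    train_dpo: Callable[[Any, List[PreferencePair]], Any],  # hypothetical trainer
    n_candidates: int = 4,
) -> Any:
    """One iteration: generate candidates, self-judge, build pairs, train with DPO."""
    scored: Dict[str, List[Tuple[str, float]]] = {}
    for prompt in prompts:
        responses = [generate(model, prompt) for _ in range(n_candidates)]
        # The current model judges its own responses, acting as its own reward model.
        scored[prompt] = [(r, judge(model, prompt, r)) for r in responses]
    pairs = build_preference_pairs(scored)
    # The returned model is used for generation and judging in the next iteration.
    return train_dpo(model, pairs)
```

Because the model returned by each call both generates and judges in the next call, improvements in reward modeling feed directly into the quality of the next round of training data, which is the property a frozen reward model cannot provide.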

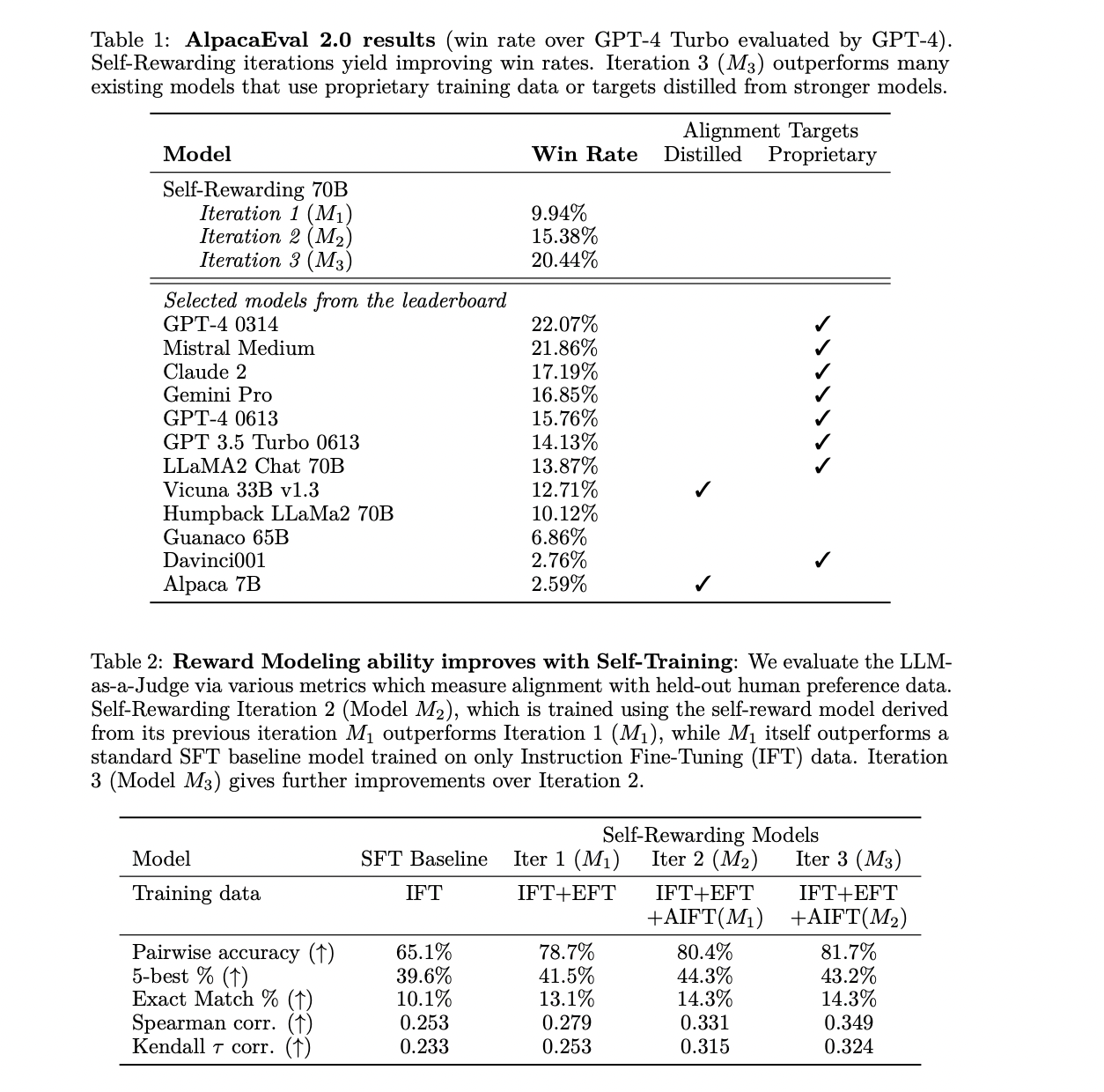

Self-rewarding language models show significant improvements in both instruction following and reward modeling. Each training iteration improves substantially on the previous one and outperforms the baseline models. The self-rewarded models perform competitively on the AlpacaEval 2.0 leaderboard, outperforming existing models such as Claude 2, Gemini Pro, and GPT-4, which rely on proprietary alignment data. The method's effectiveness comes from iteratively improving instruction following and reward modeling together, offering a promising avenue for self-improvement in language models. Training on preference pairs was also shown to outperform an alternative approach that relies solely on positive examples.

Researchers at Meta and New York University introduced self-rewarding language models capable of iterative self-alignment by generating and judging their own training data. The model assigns rewards to its own generations through LLM-as-a-Judge prompting and is trained with iterative DPO, improving both instruction following and reward modeling across iterations. While the authors acknowledge the preliminary nature of the study, the approach opens an interesting avenue of research, suggesting that models may continue to improve beyond the limits of reward models trained on human preferences.

Check out the Paper. All credit for this research goes to the researchers of this project.

Asjad is an intern consultant at Marktechpost. He is pursuing a B.Tech in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching applications of machine learning in healthcare.

<!– ai CONTENT END 2 –>