The development of multimodal large language models (MLLMs) represents an important advance in AI. These systems, which integrate language and visual processing, have wide applications, from image captioning to visual question answering. However, a major challenge has been the high computational cost these models typically carry. Existing models, while powerful, require substantial resources for training and operation, which limits their practical usefulness and adaptability.

Researchers have made notable progress with models like LLaVA and MiniGPT-4, which demonstrate impressive capabilities in tasks such as image captioning, visual question answering, and referring expression comprehension. Despite these achievements, the models remain computationally expensive. They require significant resources during both training and inference, which poses a considerable barrier to widespread use, particularly where compute is limited.

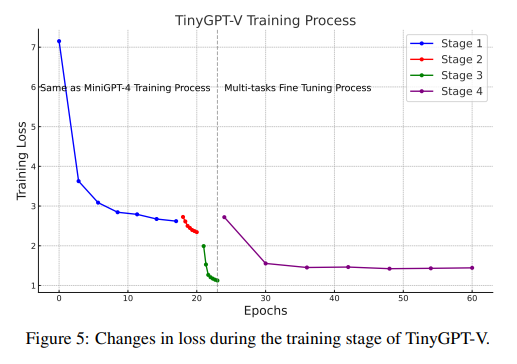

To address these limitations, researchers from Anhui Polytechnic University, Nanyang Technological University, and Lehigh University have introduced TinyGPT-V, a model designed to combine strong performance with reduced computational demands. TinyGPT-V requires only a 24 GB GPU for training and an 8 GB GPU or a CPU for inference. It achieves this efficiency by using the Phi-2 model as the language backbone together with pretrained vision modules from BLIP-2 or CLIP. Phi-2, known for its state-of-the-art performance among base language models with fewer than 13 billion parameters, provides a solid foundation for TinyGPT-V. This combination allows TinyGPT-V to maintain high performance while significantly reducing the computational resources required.
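The memory figures above can be made concrete with some back-of-the-envelope arithmetic. The sketch below estimates only the memory needed to hold the language backbone's weights at different numeric precisions. It assumes Phi-2's publicly reported size of about 2.7 billion parameters; the function name and the precision table are illustrative, and the estimate ignores activations, optimizer state, and the vision encoder, so real requirements will be higher.

```python
# Rough weight-memory estimate for the language backbone only.
# Assumption: Phi-2 has about 2.7 billion parameters (publicly reported size).
PHI2_PARAMS = 2.7e9

# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the memory needed to store the weights, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_memory_gb(PHI2_PARAMS, precision):.1f} GB")
```

Under these assumptions, half-precision weights take roughly 5.4 GB and 8-bit weights roughly 2.7 GB, which suggests why a backbone of this size can plausibly fit on an 8 GB device when stored at reduced precision.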

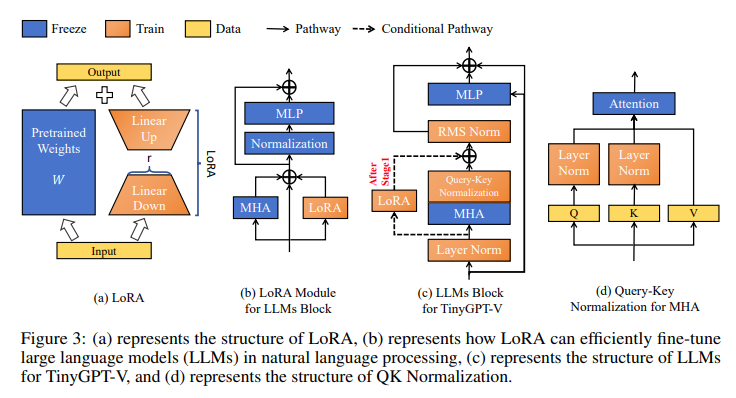

TinyGPT-V's architecture includes a quantization process that makes it suitable for inference and local deployment on devices with 8 GB of memory. This is particularly useful for practical applications where deploying large-scale models is not feasible. The model also includes linear projection layers that map visual features into the language model's input space, so the language model can process image-based information. These projection layers are initialized from a Gaussian distribution and bridge the gap between the visual and linguistic modalities.
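The projection step described above can be illustrated with a minimal sketch. This is not the authors' implementation: the dimensions, the standard deviation of 0.02, and the function names `init_projection` and `project` are all hypothetical, chosen only to show how a Gaussian-initialized linear layer maps visual feature vectors to the width a language model expects. A real model would use a deep learning framework rather than plain Python lists.

```python
import random


def init_projection(in_dim, out_dim, std=0.02, seed=0):
    """Create an (out_dim x in_dim) weight matrix with Gaussian entries and a zero bias.

    The std value is an illustrative assumption, not a figure from the paper.
    """
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, std) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weights, bias


def project(visual_tokens, weights, bias):
    """Apply the linear layer to each visual feature vector."""
    return [
        [sum(w * x for w, x in zip(row, token)) + b for row, b in zip(weights, bias)]
        for token in visual_tokens
    ]


# Hypothetical toy sizes: 4 visual tokens of width 8, projected to width 6.
VISION_DIM, LM_DIM, NUM_TOKENS = 8, 6, 4
weights, bias = init_projection(VISION_DIM, LM_DIM)
visual_tokens = [[1.0] * VISION_DIM for _ in range(NUM_TOKENS)]
projected = project(visual_tokens, weights, bias)
print(len(projected), len(projected[0]))  # 4 6
```

After projection, each visual token has the same width as a text token embedding, so the two can be concatenated into a single input sequence for the language model.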

TinyGPT-V has achieved strong results on multiple benchmarks, showing that it can compete with much larger models. In the Visual-Spatial Reasoning (VSR) zero-shot task, TinyGPT-V achieved the highest score, outperforming counterparts with many more parameters. Its performance on other benchmarks, such as GQA, IconVQA, VizWiz, and the Hateful Memes dataset, further underlines its ability to handle complex multimodal tasks efficiently. These results highlight TinyGPT-V's balance of performance and computational efficiency, making it a viable option for various real-world applications.

In conclusion, the development of TinyGPT-V marks a significant advance in MLLMs. By balancing high performance with manageable computational demands, it opens new possibilities for applying these models where resource constraints are critical. It addresses the challenges of deploying MLLMs and paves the way for their broader use, making them more accessible and cost-effective.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and a dual degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.