The Transformer has become the foundation model of choice that follows the scaling law, after achieving great success in natural language processing and computer vision. Encouraged by that success, Transformers are now emerging in time series forecasting, where their ability to extract multi-level representations from sequences and to model pairwise relationships is attractive. However, researchers have recently questioned the validity of Transformer-based forecasters, which typically embed the multiple variates recorded at the same timestamp into indistinguishable channels and apply attention to these temporal tokens to capture temporal dependencies.

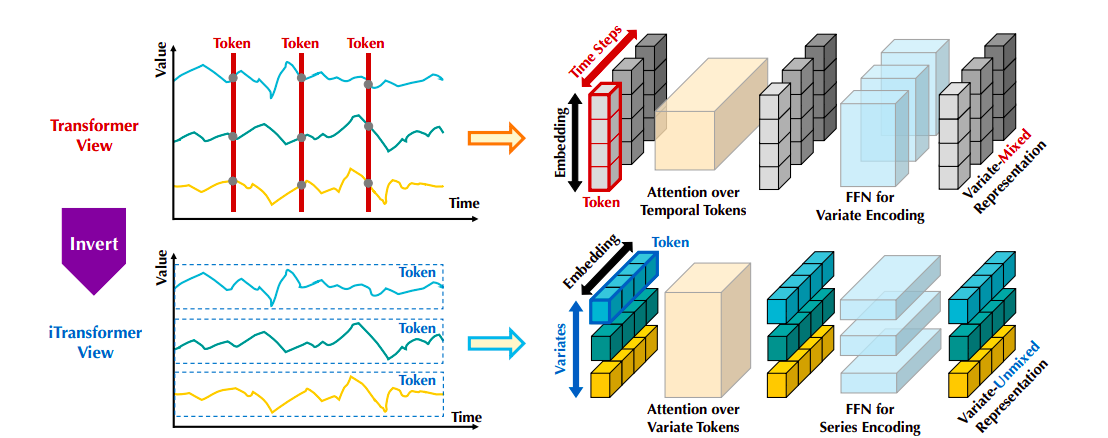

The researchers note that the existing structure of Transformer-based forecasters may be a poor fit for multivariate time series forecasting. As the upper part of Figure 2 shows, points from the same time step, which reflect fundamentally different physical meanings recorded by inconsistent measurements, are embedded into a single token, wiping out the multivariate correlations. Moreover, a token formed from a single time step can struggle to reveal useful information, because its receptive field is excessively local and because simultaneous time points may represent events that are not actually aligned in time. Finally, although the order of a sequence can strongly affect how a series varies, permutation-invariant attention mechanisms are applied along the temporal dimension, which is inappropriate.
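The point about attention ignoring order can be checked numerically. The following is a minimal NumPy sketch, not code from the paper: it assumes a single attention head, random untrained weights, and no positional encoding, and the sizes `T` and `D` are hypothetical. Strictly speaking, the attention outputs are permuted together with the inputs, so any order-agnostic readout (mean pooling here) cannot tell a shuffled series from the original.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention, no positional encoding."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    return softmax(scores, axis=-1) @ v

rng = np.random.default_rng(0)
T, D = 6, 4  # hypothetical sizes: 6 time steps, 4-dimensional temporal tokens
tokens = rng.normal(size=(T, D))
w_q, w_k, w_v = (rng.normal(size=(D, D)) for _ in range(3))

perm = rng.permutation(T)  # shuffle the order of the time steps
out = self_attention(tokens, w_q, w_k, w_v)
out_shuffled = self_attention(tokens[perm], w_q, w_k, w_v)

# Shuffling the inputs only shuffles the outputs in the same way.
order_blind = np.allclose(out[perm], out_shuffled)
# An order-agnostic readout such as mean pooling is therefore unchanged.
pooled_same = np.allclose(out.mean(axis=0), out_shuffled.mean(axis=0))
print(order_blind, pooled_same)  # True True
```

In practice, Transformer-based forecasters add positional encodings to inject order information; the sketch only isolates the behavior of the attention operation itself.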

As a result, the Transformer loses much of its ability to capture essential series representations and to portray multivariate correlations, which limits its capacity and generalization across diverse time series data. In response to the irrationality of embedding the multivariate points of each time step as a token, the researchers take an inverted view of time series and embed the whole series of each variate independently into a token, the extreme case of patching that enlarges the local receptive field. Through this inversion, each embedded token aggregates a global representation of its series, which is more variate-centric and can be better exploited by attention mechanisms to model multivariate correlations.
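The difference between the two tokenizations comes down to which axis of the input is embedded. Below is a small NumPy sketch under stated assumptions: the sizes `T`, `N`, and `D` are hypothetical, and a single random linear map stands in for the learned embedding layer (the paper's actual embedding may differ).

```python
import numpy as np

rng = np.random.default_rng(0)
T, N, D = 96, 7, 16  # hypothetical: lookback length, number of variates, token width

x = rng.normal(size=(T, N))  # one multivariate lookback window; rows are time steps

# Conventional view: each time step (a row of N variate values) becomes one token.
w_temporal = rng.normal(size=(N, D))
temporal_tokens = x @ w_temporal      # shape (T, D): T temporal tokens

# Inverted view: each variate's whole series (a column of T values) becomes one token.
w_variate = rng.normal(size=(T, D))
variate_tokens = x.T @ w_variate      # shape (N, D): N variate tokens

# A variate token depends only on its own series: perturbing variate 0
# changes token 0 and leaves every other token untouched.
x_changed = x.copy()
x_changed[:, 0] += 1.0
changed_tokens = x_changed.T @ w_variate
only_first_changed = (
    np.allclose(changed_tokens[1:], variate_tokens[1:])
    and not np.allclose(changed_tokens[0], variate_tokens[0])
)
print(temporal_tokens.shape, variate_tokens.shape)  # (96, 16) (7, 16)
```

With temporal tokens, by contrast, the same perturbation would alter all `T` tokens, because every token mixes the values of all variates at its time step.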

Figure 1: Performance of iTransformer. Average results (MSE) are reported following TimesNet.

Meanwhile, the feed-forward network can be trained to learn sufficiently generalized representations for distinct variates, which are encoded from any lookback series and then decoded to predict the future series. For these reasons, the researchers argue that the Transformer is not ineffective for time series forecasting but has been used improperly. In this study they revisit the Transformer architecture and propose iTransformer as a fundamental backbone for time series forecasting. Technically, they embed each time series as a variate token, use attention to capture multivariate correlations, and use the feed-forward network for series encoding. Experimentally, iTransformer achieves state-of-the-art performance on the real-world forecasting benchmarks shown in Figure 1 and, perhaps surprisingly, addresses the shortcomings of Transformer-based forecasters.
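To make the division of labor concrete, here is a rough NumPy sketch of one forward pass through a single inverted block. It is an illustration under assumptions, not the authors' implementation: the helper names (`init_params`, `forecast`), the single attention head, the post-norm residual layout, the ReLU activation, the absence of biases and learnable normalization parameters, and all sizes are choices made for this example, and the weights are random rather than trained.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(h, eps=1e-5):
    # Normalizes each variate token over its feature dimension
    # (learnable scale and shift omitted in this sketch).
    mean = h.mean(axis=-1, keepdims=True)
    var = h.var(axis=-1, keepdims=True)
    return (h - mean) / np.sqrt(var + eps)

def init_params(rng, T, D, S):
    # Hypothetical parameter set with small random (untrained) weights.
    shapes = {"w_embed": (T, D), "w_q": (D, D), "w_k": (D, D), "w_v": (D, D),
              "w_1": (D, 4 * D), "w_2": (4 * D, D), "w_out": (D, S)}
    return {name: 0.1 * rng.normal(size=shape) for name, shape in shapes.items()}

def forecast(x, p):
    """x: lookback window of shape (T, N). Returns a (S, N) forecast and (N, N) attention."""
    tokens = x.T @ p["w_embed"]                     # (N, D): one token per variate
    q, k, v = tokens @ p["w_q"], tokens @ p["w_k"], tokens @ p["w_v"]
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (N, N): variate-to-variate weights
    h = layer_norm(tokens + attn @ v)               # attention mixes information across variates
    ffn = np.maximum(h @ p["w_1"], 0.0) @ p["w_2"]  # feed-forward acts on each token separately
    h = layer_norm(h + ffn)
    return (h @ p["w_out"]).T, attn                 # project each token to S future steps

rng = np.random.default_rng(0)
T, N, D, S = 96, 7, 16, 24  # hypothetical: lookback, variates, token width, horizon
x = rng.normal(size=(T, N))
y_hat, attn = forecast(x, init_params(rng, T, D, S))
print(y_hat.shape, attn.shape)  # (24, 7) (7, 7)
```

Because the tokens are variates, the attention map is `N` by `N` and can be read as a table of variate-to-variate weights, while the feed-forward layers and the final projection operate along each series. The forecast values themselves are meaningless here since nothing has been trained.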

Figure 2: Comparison of the vanilla Transformer (top) and the proposed iTransformer (bottom). Whereas the Transformer embeds each time step into a temporal token, iTransformer embeds the whole series of each variate independently into a variate token. As a result, the attention mechanism can depict multivariate correlations and the feed-forward network encodes series representations.

Their contributions are threefold:

• Researchers at Tsinghua University propose iTransformer, which treats independent time series as tokens in order to capture multivariate correlations through self-attention. It uses layer normalization and feed-forward network modules to learn better global series representations for forecasting.

• They reflect on the Transformer architecture and refine the view that the capabilities of native Transformer components on time series remain underexplored.

• In experiments, iTransformer consistently achieves state-of-the-art results on real-world forecasting benchmarks. Their extensive analysis of the inverted modules and architectural choices points to a promising direction for future Transformer-based forecasters.

Review the Paper and GitHub. All credit for this research goes to the researchers of this project.


Aneesh Tickoo is a consulting intern at MarktechPost. She is currently pursuing her bachelor’s degree in Data Science and Artificial Intelligence at the Indian Institute of Technology (IIT), Bhilai. She spends most of her time working on projects aimed at harnessing the power of machine learning. Her research interest is image processing, and she is passionate about building solutions around it. She loves connecting with people and collaborating on interesting projects.
