Introduction

Large Language Models (LLMs) and Generative ai represent a transformative breakthrough in artificial intelligence and Natural Language Processing. They can understand and generate human language and produce content like text, imagery, audio, and synthetic data, making them highly versatile in various applications. Generative ai holds immense importance in real-world applications by automating and enhancing content creation, personalizing user experiences, streamlining workflows, and fostering creativity. In this read, we will focus on how Enterprises can integrate with Open LLMs by grounding the prompts effectively using Enterprise Knowledge Graphs.

Learning Objectives

- Acquire knowledge on Grounding and Prompt building while interacting with LLMs/Gen-ai systems.

- Understanding the Enterprise relevance of Grounding, the business value out of integration with open Gen-ai systems with an example.

- Analyzing two major grounding contending solutions knowledge graphs and Vector stores on various fronts and understanding which suits when.

- Study a sample enterprise design of grounding and prompt building, leveraging knowledge graphs, learning data modeling, and graph modeling in JAVA for a personalized recommendation customer scenario.

This article was published as a part of the Data Science Blogathon.

What are Large Language Models?

A Large Language Model is an advanced ai model trained using deep learning techniques on massive amounts of text|unstructured data. These models are capable of interacting with human language, generating human-like text, images, and audio, and performing various natural language processing tasks.

In contrast, the definition of a language model refers to assigning probabilities to sequences of words based on the analysis of text corpora. A language model can vary from simple n-gram models to more sophisticated neural network models. However, the term “large language model” usually refers to models that use deep learning techniques and have a large number of parameters, which can range from millions to billions. These models can capture complex patterns in language and produce text often indistinguishable from that written by humans.

What is a Prompt?

A prompt to any LLM or a similar chatbot ai system is a text-based input or message you provide to initiate a conversation or interaction with the ai. LLMs are versatile, trained with a wide variety of big data, and can be used for various tasks; hence, the context, scope, quality, and clarity of your prompt significantly influence the responses you receive from the LLM systems.

What is Grounding/RAG?

Grounding, AKA Retrieval-Augmented Generation(RAG), in the context of natural language LLM processing, refers to enriching the prompt with context, additional metadata, and scope we provide to LLMs to improve and retrieve more tailored and accurate responses. This connection helps ai systems understand and interpret the data in a way that aligns with the required scope and context. Research on LLMs shows that the quality of their response depends on the quality of the prompt.

It is a fundamental concept in ai, as it bridges the gap between raw data and ai’s ability to process and interpret that data in a way consistent with human understanding and scoped context. It enhances the quality and reliability of ai systems and their ability to deliver accurate and useful information or responses.

What are the Drawbacks with LLMs?

Large Language Models (LLMs), like GPT-3, have gained significant attention and use in various applications, but they also come with several cons or drawbacks. Some of the main cons of LLMs include:

1. Bias and Fairness: LLMs often inherit biases from the training data. This can result in the generation of biased or discriminatory content, which can reinforce harmful stereotypes and perpetuate existing biases.

2. Hallucinations: LLMs do not truly understand the content they generate; they generate text based on patterns in the training data. This means they can produce factually incorrect or nonsensical information, making them unsuitable for critical applications like medical diagnosis or legal advice.

3. Computational Resources: Training and running LLMs require enormous computational resources, including specialized hardware like GPUs and TPUs. This makes them expensive to develop and maintain.

4. Data Privacy and Security: LLMs can generate convincing fake content, including text, images, and audio. This risks data privacy and security, as they can be exploited to create fraudulent content or impersonate individuals.

5. Ethical Concerns: Using LLMs in various applications, such as deepfakes or automated content generation, raises ethical questions about their potential for misuse and impact on society.

6. Regulatory Challenges: The rapid development of LLM technology has outpaced regulatory frameworks, making it challenging to establish appropriate guidelines and regulations to address the potential risks and challenges associated with LLMs.

It’s important to note that many of these cons are not inherent to LLMs but rather reflect how they are developed, deployed, and used. Efforts are ongoing to mitigate these drawbacks and make LLMs more responsible and beneficial for society. Here is where grounding and masking can be leveraged and be of huge advantage to the Enterprises.

Enterprise Relevance of Grounding

Enterprises thrive to induce Large Language Models (LLMs) into their mission-critical applications. They understand the potential value that LLMs could benefit across various domains. Building LLMs, pre-training, and fine-tuning them is quite expensive and cumbersome for them. Rather, they could use the open ai systems available in the industry with grounding and masking the prompts around enterprise use cases.

Hence, Grounding is a leading consideration for enterprises and is more relevant and helpful for them both in improving the quality of responses as well as overcoming the concern of hallucinations, Data security, and compliance, as it can drive amazing business value out of the open LLMs available in the market for numerous use cases that they have a challenge automating today.

Benefits to Enterprises

There are several benefits for Enterprises to implementing grounding with LLMs:

1. Enhanced Credibility: By ensuring that the information and content generated by LLMs are grounded in verified data sources, enterprises can enhance the credibility of their communications, reports, and content. This can help build trust with customers, clients, and stakeholders.

2. Improved Decision-Making: In enterprise applications, especially those related to data analysis and decision support, using LLMs with data grounding can provide more reliable insights. This can lead to better-informed decision-making, which is crucial for strategic planning and business growth.

3. Regulatory Compliance: Many industries are subject to regulatory requirements for data accuracy and compliance. Data grounding with LLMs can assist in meeting these compliance standards, reducing the risk of legal or regulatory issues.

4. Quality Content Generation: LLMs are often used in content creation, such as for marketing, customer support, and product descriptions. Data grounding ensures that the generated content is factually accurate, reducing the risk of disseminating false or misleading information or hallucinations.

5. Reduction in Misinformation: In an era of fake news and misinformation, data grounding can help enterprises combat the spread of false information by ensuring that the content they generate or share is based on validated data sources.

6. Customer Satisfaction: Providing customers with accurate and reliable information can enhance their satisfaction and trust in an enterprise’s products or services.

7. Risk Mitigation: Data grounding can help reduce the risk of making decisions based on inaccurate or incomplete information, which could lead to financial or reputational harm.

Example: A Customer Product Recommendation Scenario

Let’s see how data grounding could help for an enterprise use case using openAI chatGPT

Basic prompts

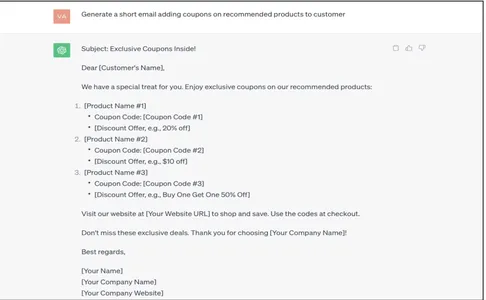

Generate a short email adding coupons on recommended products to customer

The response generated by ChatGPT is very generic, non-contextualized, and raw. This needs to be manually updated/mapped with the right enterprise customer data, which is expensive. Let’s see how this could be automated with data grounding techniques.

Say, suppose the enterprise already holds the enterprise customer data and an intelligent recommendation system that can generate coupons and recommendations for the customers; we could very well ground the above prompt by enriching it with the right metadata so that the generated email text from chatGPT would be exactly same as how we want it to be and can very well be automated to sending email to the customer without manual intervention.

Let’s assume our grounding engine will obtain the right enrichment metadata from customer data and update the prompt below. Let’s see how the ChatGPT response for the grounded prompt would be.

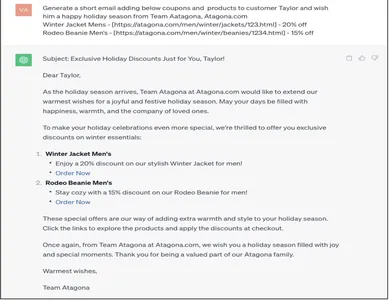

Grounded Prompt

Generate a short email adding below coupons and products to customer Taylor and wish him a

Happy holiday season from Team Aatagona, Atagona.com

Winter Jacket Mens - (https://atagona.com/men/winter/jackets/123.html) - 20% off

Rodeo Beanie Men’s - (https://atagona.com/men/winter/beanies/1234.html) - 15% off

The response generated with the ground prompt is exactly how the enterprise would want the customer to be notified. The enriched customer data embedding into an email response from Gen ai is an automation that would be remarkable to scale up and sustain enterprises.

Enterprise LLM Grounding Solutions for Software Systems

There are multiple ways to ground the data in enterprise systems, and a combination of these techniques could be used for effective data grounding and prompt generation specific to the use case. The two primary contenders as potential solutions for implementing retrieval augmented generation(grounding) are

- Application Data|Knowledge graphs

- Vector embeddings and semantic search

Usage of these solutions would depend on the use case and the grounding you want to apply. For example, vector stores provided responses can be inaccurate and vague, whereas knowledge graphs would return precise, accurate, and stored in a human-readable format.

A few other strategies that could be blended on top of the above could be

- Linking to External APIs, Search engines

- Data Masking and compliance adherence systems

- Integrating with internal data stores, systems

- Realtime Unifying data from multiple sources

In this blog, let’s look at a sample software design on how you can achieve with enterprise application data graphs.

Enterprise Knowledge Graphs

A knowledge graph can represent semantic information of various entities and relationships among them. In the Enterprise world, they store knowledge about customers, products, and beyond. Enterprise customer graphs would be a powerful tool to ground data effectively and generate enriched prompts. Knowledge graphs enable graph-based search, allowing users to explore information through linked concepts and entities, which can lead to more precise and diverse search results.

Comparison with Vector Databases

Choosing the grounding solution would be use-case-specific. However, there are multiple advantages with graphs over vectors like

| Criteria | Graph grounding | Vector grounding |

| Analytical Queries | Data graphs are suitable for structured data and analytical queries, providing accurate results due to their abstract graph layout. | Vector data stores may not perform as well with analytical queries as they mostly operate on unstructured data, semantic search with vector embeddings, and rely on similarity scoring. |

| Accuracy and Credibility | knowledge graphs use nodes and relationships to store data, returning only the information present. They avoid incomplete or irrelevant results. | Vector databases may provide incomplete or irrelevant results, mainly due to their reliance on similarity scoring and predefined result limits. |

| Correcting Hallucinations | Knowledge graphs are transparent with a human-readable representation of data. They help identify and correct misinformation, trace back the pathway of the query, and make corrections to it, improving LLM (Large Language Model) accuracy. | Vector databases are often seen as black boxes not stored in readable format and may not facilitate easy identification and correction of misinformation. |

| Security and Governance | Knowledge graphs offer better control over data generation, governance, and compliance adherence, including regulations like GDPR. | Vector databases may face challenges in imposing restrictions and governance due to their nontransparent nature. |

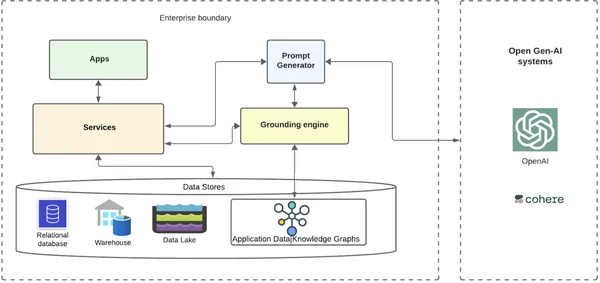

High-Level Design

Let us see on a very high level how the system can look for an enterprise that uses knowledge graphs and open LLMs for grounding.

The base layer is where enterprise customer data and metadata are stored across various databases, data warehouses, and data lakes. There can be a service building the data knowledge graphs out of this data and storing it in a graph db. There can be numerous enterprise services|micros services in a distributed cloud native world that would interact with these data stores. Above these services could be various applications that would leverage the underlying infra.

Applications can have numerous use cases to embed ai into their scenarios or intelligent automated customer flows, which requires interacting with internal and external ai systems. In the case of generative ai scenarios, let’s take a simple example of a workflow where an enterprise wants to target customers via an email offering a few discounts on personalized recommended products during a holiday season. They can achieve this with first-class automation, leveraging ai more effectively.

The Workflow

- Workflow that wants to send an email can take the help of open Gen-ai systems by sending a grounded prompt with customer contextualized data.

- The workflow application would send a request to its backend service to obtain the email text leveraging GenAI systems.

- Backend service would route the service to a prompt generator service, which routes to a grounding engine.

- The grounding engine grabs all the customer metadata from one of its services and retrieves the customer data knowledge graph.

- The grounding engine traverses the graph across the nodes and relevant relationships extracts the ultimate information required, and sends it back to the prompt generator.

- The prompt generator adds the grounded data with a pre-existing template for the use case and sends the grounded prompt to the open ai systems the enterprise chooses to integrate with(e.g., OpenAI/Cohere).

- Open GenAI systems return a much more relevant and contextualized response to the enterprise, sent to the customer via email.

Let’s break this into two parts and understand in detail:

1. Generating Customer Knowledge graphs

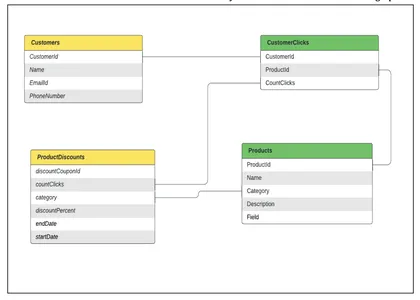

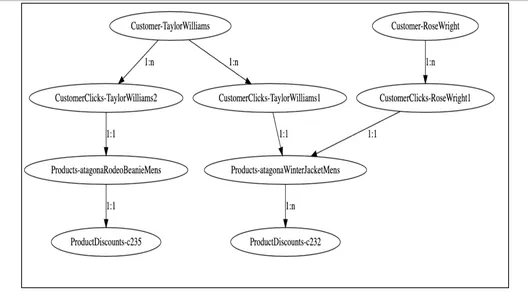

The below design suits the above example, modeling can be done in various ways according to the requirement.

Data Modeling: Assume we have various tables modeled as nodes in a graph and join between tables as relationships between nodes. For the above example, we need

- a table that holds the Customer’s data,

- a table that holds the product data,

- a table that holds the CustomerInterests(Clicks) data for personalized recommendations

- a table that holds the ProductDiscounts data

It’s the enterprise’s responsibility to have all of this data ingested from multiple data sources and updated regularly to reach customers effectively.

Let’s see how these tables can be modeled and how they can be transformed into a customer graph.

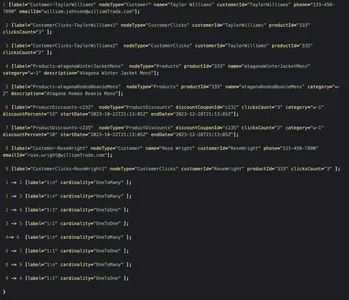

2. Graph Modeling

From the above graph visualizer, we can see how customer nodes are related to various products based on their clicks engagement data and further to the discounts nodes. It’s easy for the grounding service to query these customer graphs, traverse these nodes through relationships, and obtain the required information around discounts eligible to respective customers.

A sample graph node and relationship JAVA POJOs for the above could look similar to the below

public class KnowledgeGraphNode implements Serializable {

private final GraphNodeType graphNodeType;

private final GraphNode nodeMetadata;

}

public interface GraphNode {

}

public class CustomerGraphNode implements GraphNode {

private final String name;

private final String customerId;

private final String phone;

private final String emailId;

}

public class ClicksGraphNode implements GraphNode {

private final String customerId;

private final int clicksCount;

}

public class ProductGraphNode implements GraphNode {

private final String productId;

private final String name;

private final String category;

private final String description;

private final int price;

}

public class ProductDiscountNode implements GraphNode {

private final String discountCouponId;

private final int clicksCount;

private final String category;

private final int discountPercent;

private final DateTime startDate;

private final DateTime endDate;

}

public class KnowledgeGraphRelationship implements Serializable {

private final RelationshipCardinality Cardinality;

}

public enum RelationshipCardinality {

ONE_TO_ONE,

ONE_TO_MANY

}A sample raw graph in this scenario could look like below

Traversing through the graph from customer node ‘Taylor Williams’ would solve the problem for us and fetch the right product recommendations and eligible discounts.

3. Popular Graph stores in industry

There are numerous graph stores available in the market that can suit enterprise architectures. Neo4j, TigerGraph, Amazon Neptune, and OrientDB are widely adopted as graph databases.

We introduce the new paradigm of Graph Data Lakes, which enables graph queries on tabular data (structured data in lakes, warehouses, and lakehouses). This is achieved with new solutions listed below, without the need to hydrate or persist data in graph data stores, leveraging Zero-ETL.

- PuppyGraph(Graph Data Lake)

- Timbr.ai

Compliance and Ethical Considerations

Data Protection: Enterprises must be responsible for storing and using customer data adhering to GDPR and other PII compliance. Data stored needs to be governed and cleansed before processing and reusing for insights or applying ai.

Hallucinations & Reconciliation: Enterprises can also add reconciling services that would identify misinformation in data, trace back the pathway of the query, and make corrections to it, which can help improve LLM accuracy. With knowledge graphs, since the data stored is transparent and human-readable, this should be relatively easy to achieve.

Restrictive Retention policies: To adhere to data protection and prevent misuse of customer data while interacting with open LLM systems, it is very important to have zero retention policies so the external systems enterprises interact with would not hold the requested prompt data for any further analytical, or business purposes.

Conclusion

In conclusion, Large Language Models (LLMs) represent a remarkable advancement in artificial intelligence and natural language processing. They can transform various industries and applications, from natural language understanding and generation to assisting with complex tasks. However, the success and responsible use of LLMs require a strong foundation and grounding in various key areas.

Key Takeaways

- Enterprises can benefit hugely from effective grounding and prompting while using LLMs for various scenarios.

- Knowledge graphs and Vector stores are popular Grounding solutions, and choosing one would depend on the purpose of the solution.

- Knowledge graphs can have more accurate and reliable information over vector stores, which gives an edge for Enterprise use cases without having to add additional security and compliance layers.

- Transform the traditional data modeling with entities and relationships into Knowledge graphs with nodes and edges.

- Integrate the enterprise knowledge Graphs with various data sources with existing big data storage enterprises.

- Knowledge graphs are ideal for analytical queries. Graph data lakes enable tabular data to be queried as graphs in enterprise data storage.

Frequently Asked Questions

A. LLM is an ai algorithm that uses DL techniques and massively large data sets to understand, summarize, generate, and predict new content.

A. An application data graph is a data structure storing data in the form of nodes and edges. Model them as the relationships between different data nodes.

A. A vector database stores and manages unstructured data like text, audio, and video. It excels in quick indexing and retrieval for applications like recommendation engines, machine learning, and Gen-ai.

A. In a vector store, embeddings are numerical representations of objects, words, or data points in a high-dimensional vector space. These embeddings capture semantic relationships and similarities between items, enabling efficient data analysis, similarity searches, and machine-learning tasks.

A. Structured data is well-organized with defined tables and schema. Unstructured data, like text, images, audio, or video, is harder to analyze due to its lack of format.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

NEWSLETTER

NEWSLETTER