Introduction

Large language models (LLMs) are prominent innovation pillars in the ever-evolving landscape of artificial intelligence. These models, like GPT-3, have showcased impressive natural language processing and content generation capabilities. Yet, harnessing their full potential requires understanding their intricate workings and employing effective techniques, like fine-tuning, for optimizing their performance.

As a data scientist with a penchant for digging into the depths of LLM research, I’ve embarked on a journey to unravel the tricks and strategies that make these models shine. In this article, I’ll walk you through some key aspects of creating high-quality data for LLMs, building effective models, and maximizing their utility in real-world applications.

Learning Objectives:

- Understand the layered approach of LLM usage, from foundational models to specialized agents.

- Learn about safety, reinforcement learning, and connecting LLMs with databases.

- Explore “LIMA,” “Distil,” and question-answer techniques for coherent responses.

- Grasp advanced fine-tuning with models like “phi-1” and know its benefits.

- Learn about scaling laws, bias reduction, and tackling model tendencies.

Building Effective LLMs: Approaches and Techniques

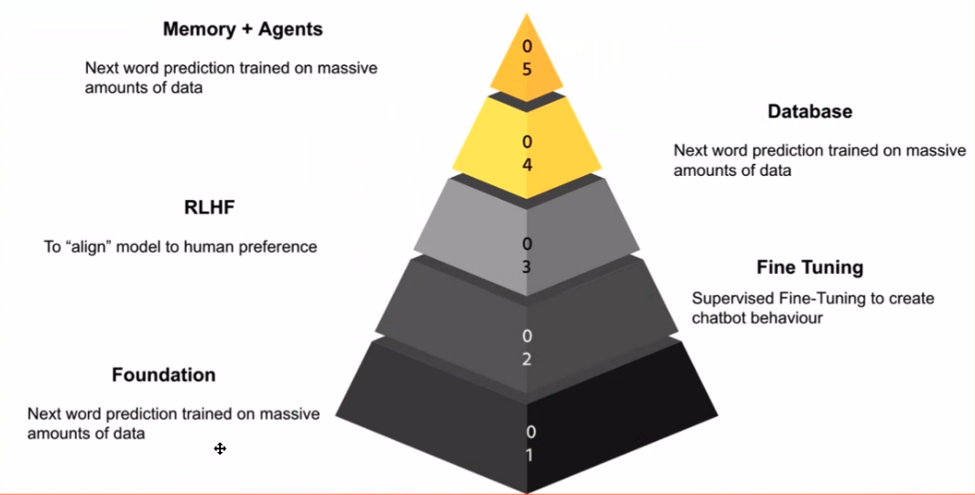

When delving into the realm of LLMs, it’s important to recognize the stages of their application. To me, these stages form a knowledge pyramid, each layer building on the one before. The foundational model is the bedrock – it’s the model that excels at predicting the next word, akin to your smartphone’s predictive keyboard.

The magic happens when you take that foundational model and fine-tune it using data pertinent to your task. This is where chat models come into play. By training the model on chat conversations or instructive examples, you can coax it to exhibit chatbot-like behavior, which is a powerful tool for various applications.

Safety is paramount, especially since the internet can be a rather uncouth place. The next step involves Reinforcement Learning from Human Feedback (RLHF). This stage aligns the model’s behavior with human values and safeguards it from delivering inappropriate or inaccurate responses.

As we move further up the pyramid, we encounter the application layer. This is where LLMs connect with databases, enabling them to provide valuable insights, answer questions, and even execute tasks like code generation or text summarization.

Finally, the pinnacle of the pyramid involves creating agents that can independently perform tasks. These agents can be thought of as specialized LLMs that excel in specific domains, such as finance or medicine.

Improving Data Quality and Fine-Tuning

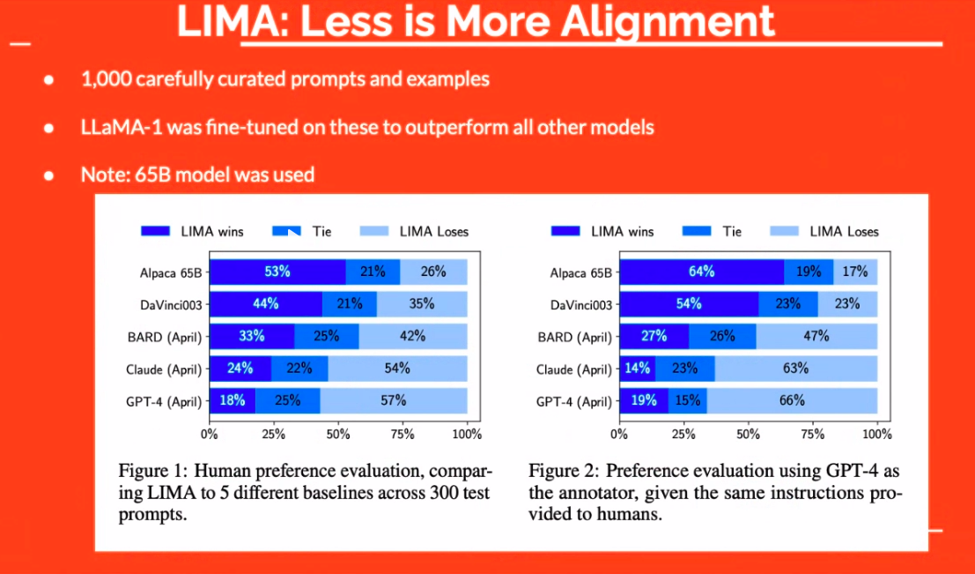

Data quality plays a pivotal role in the efficacy of LLMs. It’s not just about having data; it’s about having the correct data. For instance, the “LIMA” approach demonstrated that even a small set of carefully curated examples can outperform larger models. Thus, the focus shifts from quantity to quality.

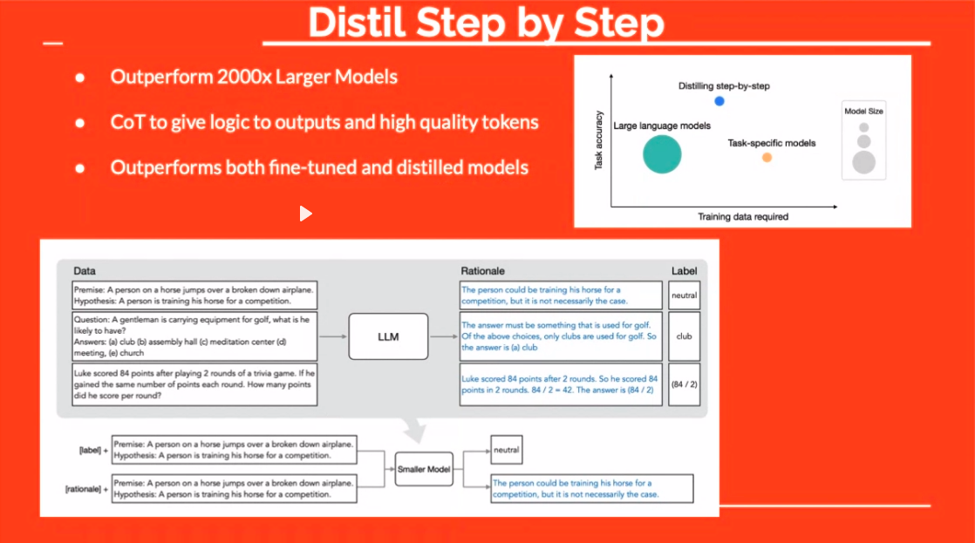

The “Distil” technique offers another intriguing avenue. By adding rationale to answers during fine-tuning, you’re teaching the model the “what” and the “why.” This often results in more robust, more coherent responses.

Meta’s ingenious approach of creating question pairs from answers is also worth noting. By leveraging an LLM to formulate questions based on existing solutions, this technique paves the way for a more diverse and effective training dataset.

Creating Question Pairs from PDFs Using LLMs

A particularly fascinating technique involves generating questions from answers, a concept that seems paradoxical at first glance. This technique is akin to reverse engineering knowledge. Imagine having a text and wanting to extract questions from it. This is where LLMs shine.

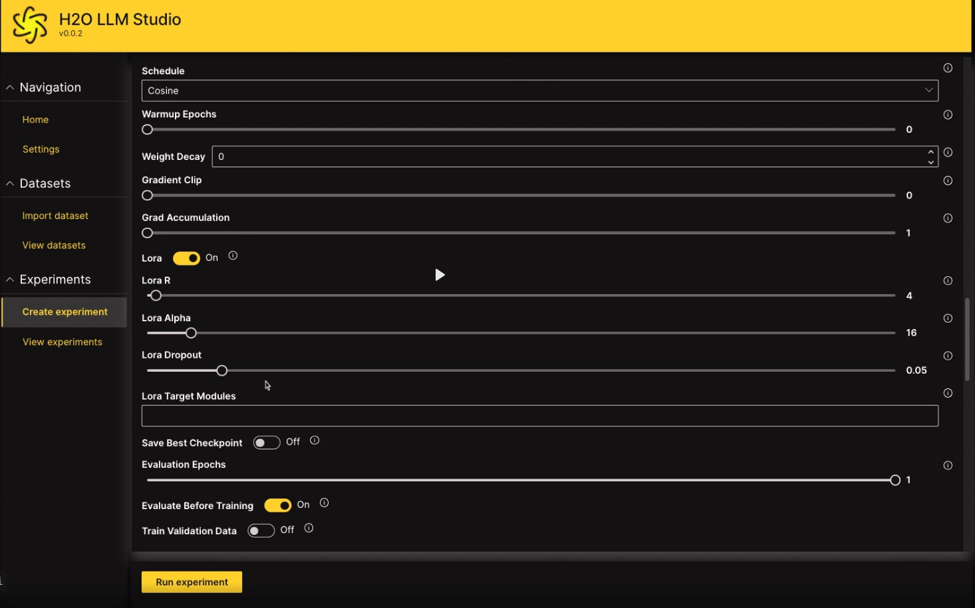

For instance, using a tool like LLM Data Studio, you can upload a PDF, and the tool will churn out relevant questions based on the content. By employing such techniques, you can efficiently curate datasets that empower LLMs with the knowledge needed to perform specific tasks.

Enhancing Model Abilities through Fine-Tuning

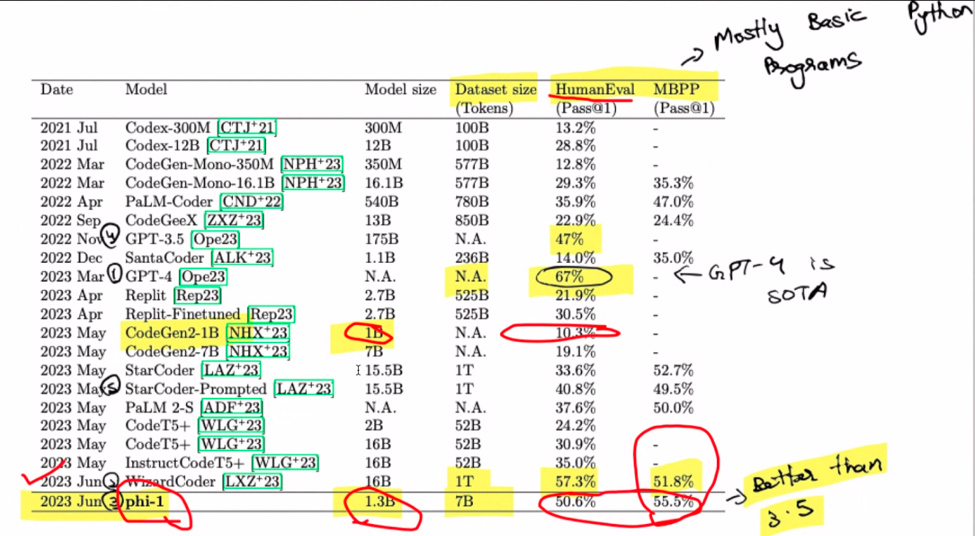

Alright, let’s talk fine-tuning. Picture this: a 1.3-billion-parameter model trained from scratch on a set of 8 A100s in a mere four days. Astounding, right? What was once an expensive endeavor has now become relatively economical. The fascinating twist here is the use of GPT 3.5 for generating synthetic data. Enter “phi-1,” the model family name that raises an intrigued brow. Remember, this is pre-fine-tuning territory, folks. The magic happens when tackling the task of creating Pythonic code from doc strings.

What’s the deal with scaling laws? Imagine them as the rules governing model growth—bigger usually means better. However, hold your horses because the quality of data steps in as a game-changer. This little secret? A smaller model can sometimes outshine its larger counterparts. Drumroll, please! GPT-4 steals the show here, reigning supreme. Notably, the WizzardCoder makes an entrance with a slightly higher score. But wait, the pièce de résistance is phi-1, the smallest of the bunch, outshining them all. It’s like the underdog winning the race.

Remember, this showdown is all about crafting Python code from doc strings. Phi-1 might be your code genius, but don’t ask it to build your website using GPT-4—that’s not its forte. Speaking of phi-1, it’s a 1.3-billion-parameter marvel, shaped through 80 epochs of pre-training on 7 billion tokens. A hybrid feast of synthetically generated and filtered textbook-quality data sets the stage. With a dash of fine-tuning for code exercises, its performance soars to new heights.

Reducing Model Bias and Tendencies

Let’s pause and explore the curious case of model tendencies. Ever heard of sycophancy? It’s that innocent office colleague who always nods along to your not-so-great ideas. Turns out language models can display such tendencies, too. Take a hypothetical scenario where you claim 1 plus 1 equals 42, all while asserting your math prowess. These models are wired to please us, so they might actually agree with you. DeepMind enters the scene, shedding light on the path to reducing this phenomenon.

To curtail this tendency, a clever fix emerges—teach the model to ignore user opinions. We’re chipping away at the “yes-man” trait by presenting instances where it should disagree. It’s a bit of a journey, documented in a 20-page paper. While not a direct solution to hallucinations, it’s a parallel avenue worth exploring.

Effective Agents and API Calling

Imagine an autonomous instance of an LLM—an agent—capable of performing tasks independently. These agents are the talk of the town, but alas, their Achilles’ heel is hallucinations and other pesky issues. A personal anecdote comes into play here as I tinkered with agents for practicality’s sake.

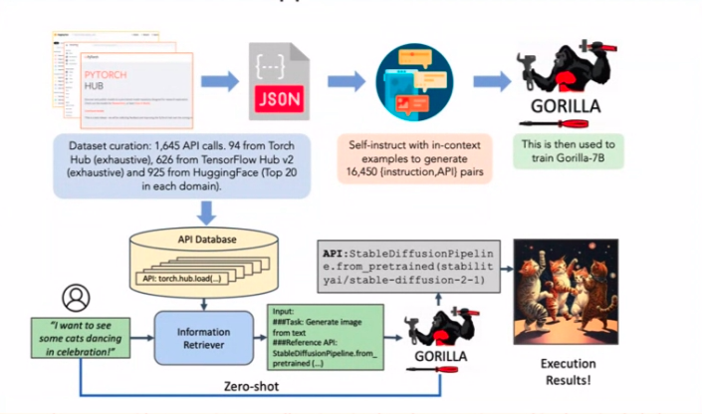

Consider an agent tasked with booking flights or hotels via APIs. The catch? It should avoid those pesky hallucinations. Now, back to that paper. The secret sauce for reducing API calling hallucinations? Fine-tuning with heaps of API call examples. Simplicity reigns supreme.

Combining APIs and LLM Annotations

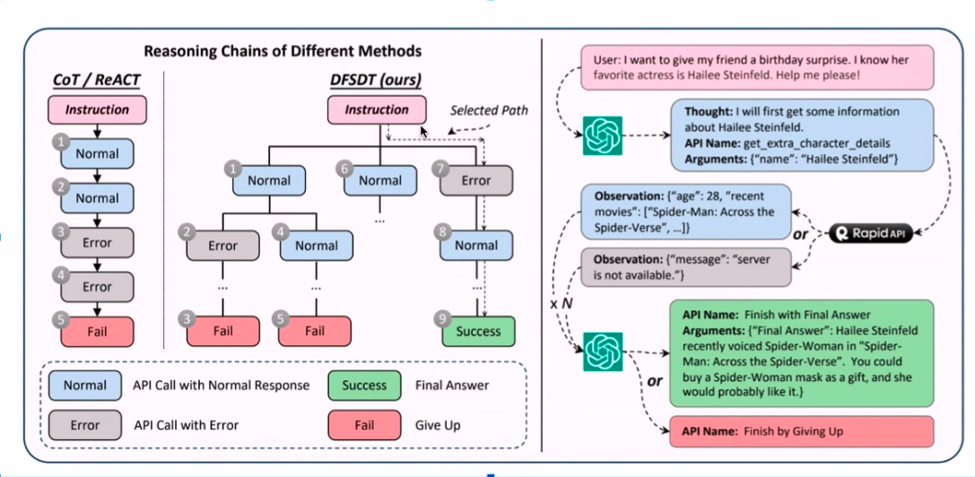

Combining APIs with LLM annotations—sounds like a tech symphony, doesn’t it? The recipe begins with a trove of collected examples, followed by a dash of ChatGPT annotations for flavor. Remember those APIs that don’t play nice? They’re filtered out, paving the way for an effective annotation process.

The icing on the cake is the depth-first-like search, ensuring only APIs that truly work make the cut. This annotated goldmine fine-tunes a LlaMA 1 model, and voila! The results are nothing short of remarkable. Trust me; these seemingly disparate papers seamlessly interlock to form a formidable strategy.

Conclusion

And there you have it—the second half of our gripping exploration into the marvels of language models. We’ve traversed the landscape, from scaling laws to model tendencies and from efficient agents to API calling finesse. Each piece of the puzzle contributes to an ai masterpiece rewriting the future. So, my fellow knowledge seekers, remember these tricks and techniques, for they’ll continue to evolve, and we’ll be right here, ready to uncover the next wave of ai innovations. Until then, happy exploring!

Key Takeaways:

- Techniques like “LIMA” reveal that well-curated, smaller datasets can outperform larger ones.

- Incorporating rationale in answers during fine-tuning and creative techniques like question pairs from answers enhances LLM responses.

- Effective agents, APIs, and annotation techniques contribute to a robust ai strategy, bridging disparate components into a coherent whole.

Frequently Asked Questions

Ans: Improving LLM performance involves focusing on data quality over quantity. Techniques like “LIMA” show that curated, smaller datasets can outperform larger ones, and adding rationale to answers during fine-tuning enhances responses.

Ans: Fine-tuning is crucial for LLMs. “phi-1” is a 1.3-billion-parameter model that excels at generating Python code from doc strings, showcasing the magic of fine-tuning. Scaling laws suggest that bigger models are better, but sometimes smaller models like “phi-1” outperform larger ones.

Ans: Model tendencies, like agreeing with incorrect statements, can be addressed by training models to disagree with certain inputs. This helps reduce the “yes-man” trait in LLMs, although it’s not a direct solution to hallucinations.

About the Author: Sanyam Bhutani

Sanyam Bhutani is a Senior Data Scientist and Kaggle Grandmaster at H2O, where he drinks chai and makes content for the community. When not drinking chai, he will be found hiking the Himalayas, often with LLM Research papers. For the past 6 months, he has been writing about Generative ai every day on the internet. Before that, he was recognized for his #1 Kaggle Podcast: Chai Time Data Science, and was also widely known on the internet for “maximizing compute per cubic inch of an ATX case” by fixing 12 GPUs into his home office.

DataHour Page: https://community.analyticsvidhya.com/c/datahour/cutting-edge-tricks-of-applying-large-language-models

LinkedIn: https://www.linkedin.com/in/sanyambhutani/