2023 is the year of LLMs: ChatGPT, GPT-4, LLaMA, and more. New models keep taking the spotlight one after another. They have revolutionized natural language processing and are increasingly used across many domains.

LLMs can exhibit a remarkably wide range of behaviors, including dialogue, which can create a convincing illusion of conversing with a human-like interlocutor. However, it is important to recognize that LLM-based dialogue agents differ significantly from humans in a number of ways.

Our language skills develop through embodied interaction with the world. As individuals, we acquire cognitive abilities and language skills through socialization and immersion in a community of language users. This process is fastest in infancy and slows as we age, but the fundamentals remain the same.

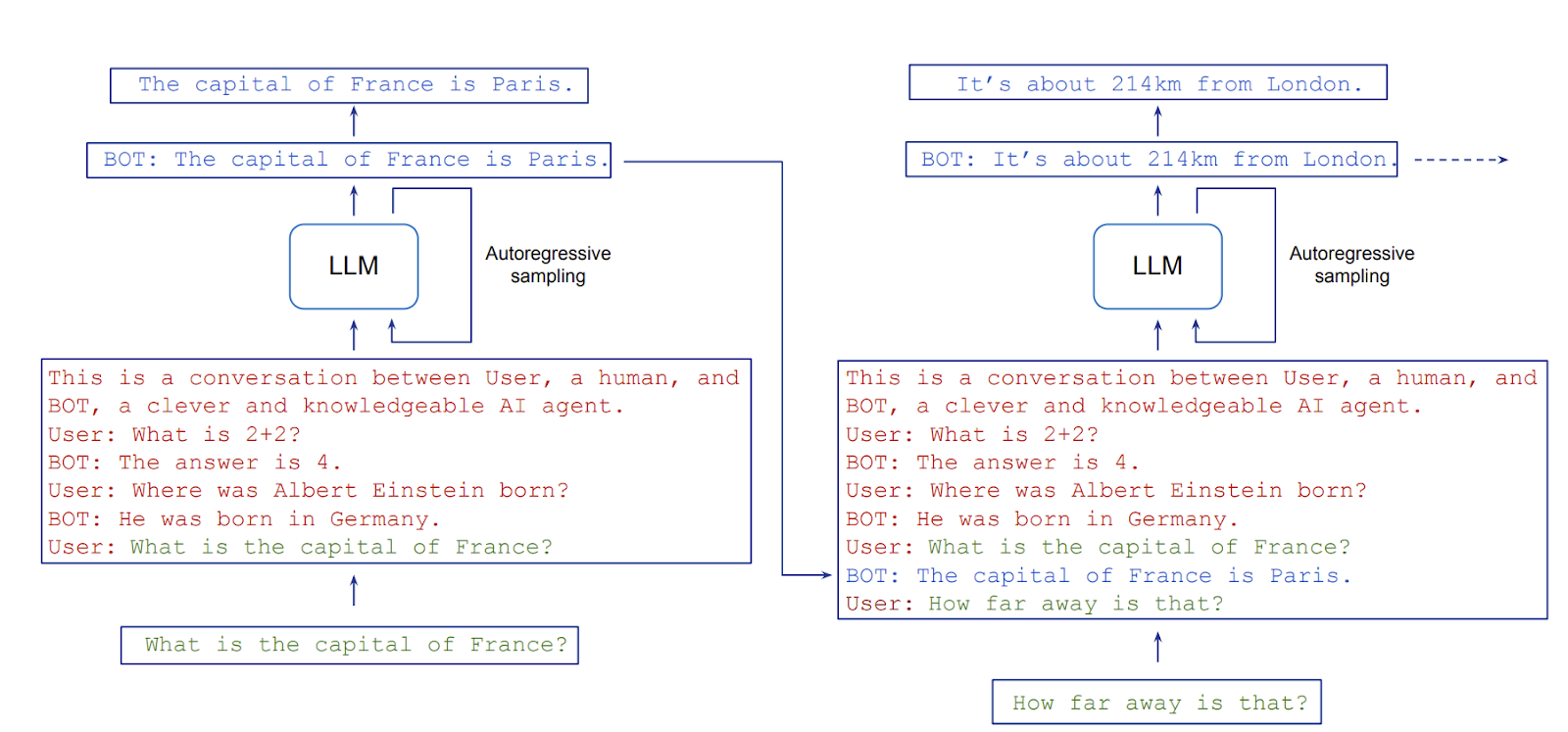

In contrast, LLMs are disembodied neural networks trained on large amounts of human-generated text, with the primary objective of predicting the next word or token given a context. Their training consists of learning statistical patterns from language data rather than from direct experience of the physical world.

Despite these differences, we tend to use LLMs to mimic humans, in chatbots, assistants, and similar applications. This raises a difficult question: how should we describe and understand the behavior of LLMs?

It is natural to reach for familiar folk-psychological language, with terms like “knows,” “understands,” and “thinks,” to describe dialogue agents, just as we would human beings. However, when taken too literally, such language promotes anthropomorphism, exaggerating the similarities between AI systems and humans while obscuring their profound differences.

So how do we approach this dilemma? How should terms like “understand” and “know” be applied to AI models? Let’s turn to the role-play paper.

In the paper, the authors propose adopting alternative conceptual frameworks and metaphors for thinking and talking about LLM-based dialogue agents. They advocate two main metaphors: viewing the dialogue agent as playing a single character, or as a superposition of simulacra within a multiverse of possible characters. Each metaphor offers a different perspective on the behavior of dialogue agents and has its own distinctive advantages.

The first metaphor describes the dialogue agent as playing a specific character. When prompted, the agent tries to continue the conversation in a way that matches the assigned role or persona. Its goal is to respond in accordance with the expectations associated with that role.

The second metaphor sees the dialogue agent as a collection of different characters drawn from many sources. These agents have been trained on a wide range of material, such as books, scripts, interviews, and articles, which gives them broad knowledge of different types of characters and stories. As the conversation progresses, the agent adjusts the role and personality it presents based on its training data, allowing it to adapt and respond in character.
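One way to picture "a superposition of characters that narrows as the conversation progresses" is as a probability distribution over candidate characters that gets updated after each turn. The sketch below uses a simple Bayesian update as a toy analogy only; it is not how an LLM computes its output, and the character names, turn styles, and likelihood values are all hypothetical numbers chosen for illustration.

```python
# Hypothetical candidate characters, and how likely each one is to produce
# a turn of a given style. All values are invented for illustration.
LIKELIHOOD = {
    "helpful assistant": {"polite": 0.70, "pirate_slang": 0.05, "technical": 0.25},
    "pirate captain":    {"polite": 0.20, "pirate_slang": 0.70, "technical": 0.10},
    "grumpy engineer":   {"polite": 0.10, "pirate_slang": 0.05, "technical": 0.85},
}


def update(prior: dict[str, float], observation: str) -> dict[str, float]:
    """Bayes' rule: reweight each character by how well it explains the turn."""
    unnormalized = {c: p * LIKELIHOOD[c][observation] for c, p in prior.items()}
    z = sum(unnormalized.values())
    return {c: v / z for c, v in unnormalized.items()}


# Start with every character equally plausible (the full "superposition").
belief = {c: 1 / len(LIKELIHOOD) for c in LIKELIHOOD}

# Each observed turn narrows the distribution toward consistent characters.
for turn_style in ["pirate_slang", "pirate_slang", "polite"]:
    belief = update(belief, turn_style)
    print(turn_style, {c: round(p, 3) for c, p in belief.items()})
```

After two pirate-flavored turns, almost all probability mass sits on one character, and a single polite turn barely shifts it. This mirrors, loosely, the idea that an agent's earlier turns constrain which characters remain plausible later on.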

By adopting these frameworks, researchers and users can explore important aspects of dialogue agents, such as deception and self-awareness, without wrongly attributing these human concepts to the agents themselves. Instead, the focus shifts to understanding how dialogue agents behave in role-play scenarios and which characters they can imitate.

In conclusion, LLM-based dialogue agents can simulate human-like conversations but differ significantly from actual human language users. By using alternative metaphors, such as viewing dialogue agents as role-playing actors or as superpositions of simulacra, we can better understand and discuss their behavior. These metaphors offer insight into the complex dynamics of LLM-based dialogue systems, letting us appreciate their creative potential while acknowledging their fundamental differences from human beings.


Ekrem Çetinkaya received his B.Sc. in 2018 and his M.Sc. in 2019 from Ozyegin University, Istanbul, Türkiye. He wrote his M.Sc. thesis on denoising images using deep convolutional networks. He received his Ph.D. in 2023 from the University of Klagenfurt, Austria, with his dissertation titled “Video Coding Improvements for HTTP Adaptive Streaming Using Machine Learning.” His research interests include deep learning, computer vision, video encoding, and multimedia networking.