Introduction

Prompt engineering is a relatively new discipline that focuses on developing and optimizing prompts so that large language models (LLMs) can be used effectively across a wide range of applications and research areas. Prompt engineering skills help us understand the capabilities and limitations of LLMs. Researchers use prompt engineering to improve LLMs' performance on a variety of tasks, such as question answering and arithmetic reasoning. Developers use it to design robust and effective prompting techniques that work with LLMs and other tools.

Learning objectives

1. Understand the basics of large language models (LLMs).

2. Design prompts for various tasks.

3. Understand some advanced prompting techniques.

4. Build an OrderBot using the OpenAI API and prompting techniques.

This article was published as part of the Data Science Blogathon.

Understanding language models

What are LLMs?

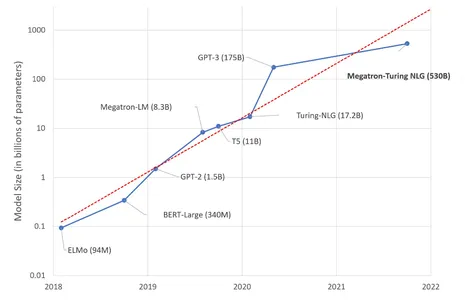

A large language model (LLM) is a type of artificial intelligence (AI) algorithm that uses deep learning techniques and very large data sets to understand, summarize, generate, and predict new content.

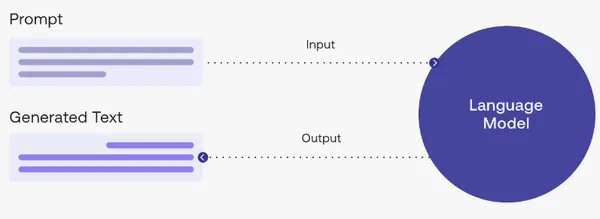

Language models use autoregression to generate text. Given an initial prompt or context, the model predicts the probability distribution of the next word in the sequence. The most probable word is then generated, and the process repeats, producing each new word based on the original context plus the words generated so far.
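To make this loop concrete, here is a toy sketch of greedy autoregressive decoding. The probability table `NEXT_WORD_PROBS` is made up for illustration; a real LLM computes the distribution with a neural network and conditions on the whole preceding context, whereas this toy looks only at the previous word.

```python
# Hypothetical next-word probability table standing in for a trained model.
# Each key is the current word; each value maps candidate next words to
# their probabilities.
NEXT_WORD_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.3, "<end>": 0.2},
    "a": {"dog": 0.7, "cat": 0.3},
    "cat": {"sat": 0.8, "<end>": 0.2},
    "dog": {"ran": 0.6, "<end>": 0.4},
    "sat": {"<end>": 1.0},
    "ran": {"<end>": 1.0},
}


def generate_greedy(max_words: int = 10) -> list:
    """Repeatedly append the most probable next word until <end>."""
    words = []
    current = "<start>"
    for _ in range(max_words):
        distribution = NEXT_WORD_PROBS[current]
        # Greedy decoding: pick the highest-probability candidate.
        next_word = max(distribution, key=distribution.get)
        if next_word == "<end>":
            break
        words.append(next_word)
        current = next_word  # the generated word feeds the next prediction
    return words


print(" ".join(generate_greedy()))  # → the cat sat
```

Always taking the single most likely word is only one decoding strategy; chat APIs typically sample from the distribution instead, with the `temperature` parameter controlling how random that sampling is.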

Why prompt engineering?

LLMs are good at generating appropriate responses for many tasks: the model predicts the probability distribution of the next word in the sequence, generates the most likely word, and repeats this process until the task is accomplished. However, generating relevant responses still poses several challenges:

- Lack of common-sense knowledge

- Sometimes lacks contextual understanding

- Struggles to maintain a consistent logical flow

- May not fully understand the underlying meaning of the text

Prompt engineering plays a crucial role in meeting these challenges. By carefully designing prompts and supplying additional context, constraints, or instructions, developers can steer the generation process and guide the model's output. Prompt engineering helps mitigate the limitations of language models and improves the consistency, relevance, and quality of the generated responses.

Prompt design for various tasks

The first step is to load your OpenAI API key from an environment variable.

import openai
import os
from dotenv import load_dotenv

# Load variables from a .env file into the environment
load_dotenv()

# API configuration (this article uses the legacy openai<1.0 interface)
openai.api_key = os.getenv("OPENAI_API_KEY")

The get_completion function sends a prompt to the specified model and returns the generated completion. We will use gpt-3.5-turbo.

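def get_completion(prompt, model="gpt-3.5-turbo"):
    # Wrap the prompt as a single user message
    messages = [{"role": "user", "content": prompt}]
    response = openai.ChatCompletion.create(
        model=model,
        messages=messages,
        temperature=0,  # this is the degree of randomness of the model's output
    )
    return response.choices[0].message["content"]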
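The helper above relies on `openai.ChatCompletion`, which was removed in version 1.0 of the `openai` Python package. As a sketch assuming openai>=1.0, an equivalent helper could use the client object instead. The name `get_completion_v1` and its optional `client` parameter are introduced here for illustration; the parameter simply lets you pass in a stub for offline testing.

```python
def get_completion_v1(prompt, model="gpt-3.5-turbo", client=None):
    """Same idea as get_completion, written for the openai>=1.0 client."""
    if client is None:
        # Imported lazily so the function can be exercised with a stub client.
        from openai import OpenAI
        client = OpenAI()  # reads OPENAI_API_KEY from the environment
    messages = [{"role": "user", "content": prompt}]
    response = client.chat.completions.create(
        model=model,
        messages=messages,
        temperature=0,  # degree of randomness of the model's output
    )
    # In openai>=1.0 the message is an object, so use attribute access.
    return response.choices[0].message.content
```

Apart from the client object and attribute access on the message, the request shape (model, messages, temperature) is unchanged, so the prompts in the rest of this article work with either helper.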

Summarization

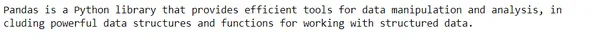

The task here is automatic text summarization, one of the most common natural language processing tasks. In the prompt, we simply ask the model to summarize the document and supply a sample paragraph; we do not provide any example summaries. After calling the API, we get a summary of the input paragraph.

text = """

Pandas is a popular open-source library in Python

that provides high-performance data manipulation and analysis tools.

Built on top of NumPy, Pandas introduces powerful data structures,

namely Series (one-dimensional labeled arrays) and DataFrame

(two-dimensional labeled data tables),

which offer intuitive and efficient ways to work with structured data.

With Pandas, data can be easily loaded, cleaned, transformed, and analyzed

using a rich set of functions and methods.

It provides functionalities for indexing, slicing, aggregating, joining,

and filtering data, making it an indispensable tool for data scientists, analysts,

and researchers working with tabular data in various domains.

"""

prompt = f"""

Your task is to generate a short summary of the text

Summarize the text below, delimited by triple

backticks, in at most 30 words.

Text: ```{text}```

"""

response = get_completion(prompt)

print(response)Production
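The prompt above relies on two ideas: delimiters that separate the instructions from the input text, and an explicit length limit. Here is a minimal sketch that templates both and checks the limit on the reply, since models do not always honor word counts. The function names `build_summary_prompt` and `within_word_limit` are hypothetical helpers, and counting words by splitting on whitespace is an assumption.

```python
def build_summary_prompt(text: str, max_words: int = 30) -> str:
    """Wrap the input in triple-backtick delimiters and state a word limit."""
    fence = "`" * 3  # triple backticks mark where the input text begins and ends
    return (
        "Your task is to generate a short summary of the text.\n"
        "Summarize the text below, delimited by triple backticks, "
        f"in at most {max_words} words.\n"
        f"Text: {fence}{text}{fence}"
    )


def within_word_limit(summary: str, max_words: int = 30) -> bool:
    """Check the model's reply against the limit (whitespace word count)."""
    return len(summary.split()) <= max_words


print(build_summary_prompt("Pandas is a popular open-source library in Python."))
```

If `within_word_limit` fails, one option is to resend the prompt with a stricter limit or ask the model to shorten its previous answer.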

Question answering

By providing a context together with a question, we expect the model to extract the answer from that context. The task here is unstructured question answering.

prompt = """ You need to answer the question based on the context below.

Keep the answer short and concise. Respond "Unsure about answer"

if not sure about the answer.

Context: Teplizumab traces its roots to a New Jersey drug company called

Ortho Pharmaceutical. There, scientists generated an early version of the antibody,

dubbed OKT3. Originally sourced from mice, the molecule was able to bind to the

surface of T cells and limit their cell-killing potential.

In 1986, it was approved to help prevent organ rejection after kidney transplants,

making it the first therapeutic antibody allowed for human use.

Question: What was OKT3 originally sourced from?

Answer:"""

response = get_completion(prompt)

print(response)Production
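Because the prompt pairs a context with an explicit fallback phrase, it is easy to reuse as a template and to detect when the model declines to answer. A minimal sketch; `build_qa_prompt` and `is_unsure` are hypothetical helpers, and the case-insensitive substring check is an assumption about how the model echoes the phrase.

```python
UNSURE = "Unsure about answer"


def build_qa_prompt(context: str, question: str) -> str:
    """Context-grounded QA prompt with an explicit fallback answer."""
    return (
        "You need to answer the question based on the context below.\n"
        "Keep the answer short and concise. "
        f'Respond "{UNSURE}" if not sure about the answer.\n\n'
        f"Context: {context.strip()}\n\n"
        f"Question: {question.strip()}\n\n"
        "Answer:"
    )


def is_unsure(response: str) -> bool:
    """Detect the fallback phrase so callers can handle 'no answer' cases."""
    return UNSURE.lower() in response.lower()
```

Keeping the fallback phrase in a single constant means the prompt and the check cannot drift apart.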

Text classification

The task is text classification: given a piece of text, predict whether its sentiment is positive, negative, or neutral.

prompt = """Classify the text into neutral, negative or positive.

Text: I think the food was bad.

Sentiment:"""

response = get_completion(prompt)

print(response)Production
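The model replies in free text ("Negative", "Sentiment: negative.", and so on), so downstream code usually maps the reply onto the allowed labels. A minimal sketch; `normalize_sentiment` is a hypothetical helper, and treating a reply that mentions zero or several labels as unparseable is a design choice, not part of the original prompt.

```python
from typing import Optional

ALLOWED_LABELS = ("positive", "negative", "neutral")


def normalize_sentiment(response: str) -> Optional[str]:
    """Return the single allowed label found in the reply, else None."""
    text = response.lower()
    found = [label for label in ALLOWED_LABELS if label in text]
    # Ambiguous (several labels) or missing labels are treated as unparseable.
    return found[0] if len(found) == 1 else None


print(normalize_sentiment("Negative"))  # → negative
```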

Techniques for effective prompt engineering

Effective prompt engineering involves a range of techniques for optimizing the output of language models.

Some techniques include:

- Give explicit instructions

- Specify the desired format, using system messages to set the context

- Use temperature to control the randomness of responses, and refine prompts iteratively based on user feedback and evaluation
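The system message and the temperature are both just fields of a chat-completion request. A minimal sketch of assembling one; `build_chat_request` is a hypothetical helper, and the 0 to 2 range check reflects the range documented for OpenAI's chat API.

```python
def build_chat_request(system_message, user_message,
                       model="gpt-3.5-turbo", temperature=0.0):
    """Assemble keyword arguments for a chat-completion call.

    The system message sets context and output format; temperature controls
    randomness (0 = most deterministic, higher = more varied).
    """
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_message},
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,
    }


request = build_chat_request("Answer only with a JSON list.", "Name three colors.")
```

With the legacy client used in this article, the dictionary can be passed as `openai.ChatCompletion.create(**request)`; raising the temperature for a creative task is then a one-argument change.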

Zero-shot prompting

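In zero-shot prompting, no examples are provided in the prompt; the LLM interprets the instruction and responds accordingly. The prompt below also ends with "Let's think step by step.", a cue that encourages the model to reason through the problem before giving its answer.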

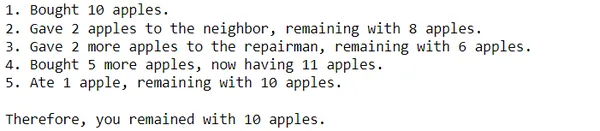

prompt = """I went to the market and bought 10 apples.

I gave 2 apples to the neighbor and 2 to the repairman.

I then went and bought 5 more apples and ate 1.

How many apples did I remain with?

Let's think step by step."""

response = get_completion(prompt)

print(response)
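The apple problem has one correct answer, 10 − 2 − 2 + 5 − 1 = 10, so the model's reply can be checked automatically. A minimal sketch; `expected_apples` and `last_integer` are hypothetical helpers, taking the last integer in the reply as the final answer is a heuristic, and `reply` is a made-up example rather than real model output.

```python
import re


def expected_apples() -> int:
    bought = 10
    given_away = 2 + 2  # neighbor + repairman
    bought_again = 5
    eaten = 1
    return bought - given_away + bought_again - eaten


def last_integer(response: str):
    """Heuristic: treat the last integer in the reply as the final answer."""
    numbers = re.findall(r"-?\d+", response)
    return int(numbers[-1]) if numbers else None


reply = "You had 10 - 4 = 6, then 6 + 5 = 11, then 11 - 1 = 10. You have 10 apples."
print(last_integer(reply) == expected_apples())  # → True
```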

Few-shot prompting

When zero-shot prompting fails, practitioners use few-shot prompting: they include examples (demonstrations) in the prompt for the model to learn from and imitate. This enables in-context learning by embedding the examples directly in the prompt.

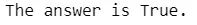

prompt = """The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.

A: The answer is False.

The odd numbers in this group add up to an even number: 17, 10, 19, 4, 8, 12, 24.

A: The answer is True.

The odd numbers in this group add up to an even number: 16, 11, 14, 4, 8, 13, 24.

A: The answer is True.

The odd numbers in this group add up to an even number: 17, 9, 10, 12, 13, 4, 2.

A: The answer is False.

The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.

A:"""

response = get_completion(prompt)

print(response)
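Few-shot prompts like this one can be generated from data, with the demonstration labels computed rather than typed by hand. A minimal sketch; `odd_sum_is_even` and `build_few_shot_prompt` are hypothetical helpers. The ground truth is also handy for checking the model's answer: the odd numbers in the final group (15, 5, 13, 7, 1) sum to 41, so the correct answer is False.

```python
def odd_sum_is_even(numbers):
    """Ground truth: do the odd numbers in the group sum to an even number?"""
    return sum(n for n in numbers if n % 2 == 1) % 2 == 0


def build_few_shot_prompt(example_groups, query_group):
    """Format labeled demonstrations followed by the unanswered query."""
    prefix = "The odd numbers in this group add up to an even number: "
    lines = []
    for group in example_groups:
        lines.append(prefix + ", ".join(map(str, group)) + ".")
        lines.append(f"A: The answer is {odd_sum_is_even(group)}.")
    lines.append(prefix + ", ".join(map(str, query_group)) + ".")
    lines.append("A:")
    return "\n".join(lines)


examples = [[4, 8, 9, 15, 12, 2, 1], [17, 10, 19, 4, 8, 12, 24]]
query = [15, 32, 5, 13, 82, 7, 1]
print(build_few_shot_prompt(examples, query))
print("Ground truth:", odd_sum_is_even(query))  # → Ground truth: False
```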

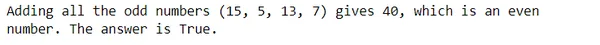

Chain-of-thought (CoT) prompting

Chain-of-thought prompting improves results by showing the model how to reason through the task before giving its answer. Tasks that require reasoning benefit most from this, and combining it with few-shot prompting can produce even better results.

prompt = """The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.

A: Adding all the odd numbers (9, 15, 1) gives 25. The answer is False.

The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7.

A:"""

response = get_completion(prompt)

print(response)
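The demonstration in this prompt spells out the intermediate steps (pick the odd numbers, add them, check parity) before the verdict. A minimal sketch that produces answers in exactly that format, which can serve both for writing more CoT demonstrations and for comparing against the model's reasoning; `cot_answer` is a hypothetical helper.

```python
def cot_answer(numbers):
    """Chain-of-thought answer: list the odd numbers, sum them, conclude."""
    odds = [n for n in numbers if n % 2 == 1]
    total = sum(odds)
    verdict = total % 2 == 0
    return (f"A: Adding all the odd numbers ({', '.join(map(str, odds))}) "
            f"gives {total}. The answer is {verdict}.")


print(cot_answer([4, 8, 9, 15, 12, 2, 1]))
# → A: Adding all the odd numbers (9, 15, 1) gives 25. The answer is False.
```

For the question in the prompt, `cot_answer([15, 32, 5, 13, 82, 7])` reasons that the odd numbers sum to 40, so the expected final answer is True.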


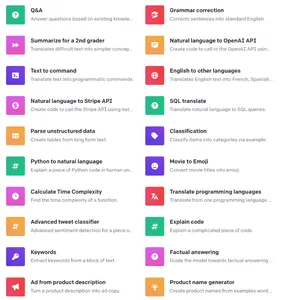

What can you do with GPT?

The main purpose of GPT-3 is natural language generation, but it supports many other tasks as well.

Create an order bot

Now that you have a basic idea of various prompting techniques, let's use prompt engineering to build an order bot using the OpenAI API.

Defining the functions

This function uses the OpenAI API to generate a response from a list of messages. Its temperature parameter defaults to 0.

def get_completion_from_messages(messages, model="gpt-3.5-turbo", temperature=0):
    response = openai.ChatCompletion.create(
        model=model,
        messages=messages,
        temperature=temperature,  # this is the degree of randomness of the model's output
    )
    return response.choices[0].message["content"]

We'll use the Panel library in Python to create a simple GUI. In this Panel-based GUI, the collect_messages function collects the user's input, generates the assistant's response using the language model, and updates the display with the conversation.

def collect_messages(_):
    # Read the user's input and clear the text box
    prompt = inp.value_input
    inp.value = ""
    # Record the user's message, get the model's reply, and record it too
    context.append({'role': 'user', 'content': f"{prompt}"})
    response = get_completion_from_messages(context)
    context.append({'role': 'assistant', 'content': f"{response}"})
    # Render both messages in the conversation panel
    panels.append(
        pn.Row('User:', pn.pane.Markdown(prompt, width=600)))
    panels.append(
        pn.Row('Assistant:', pn.pane.Markdown(response, width=600,
               style={'background-color': '#F6F6F6'})))
    return pn.Column(*panels)
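The core of collect_messages is the conversation state: every turn appends the user message and then the assistant reply to the same `context` list, so the model always sees the full history. A minimal GUI-free sketch of that logic; `chat_turn` and `fake_complete` are hypothetical names, and the stub echoes input instead of calling a model so the flow can be tried offline. In the real bot you would pass `get_completion_from_messages` as `complete`.

```python
def chat_turn(context, user_input, complete):
    """Run one OrderBot turn without the GUI.

    `complete` is any function mapping a message list to a reply string.
    """
    context.append({"role": "user", "content": user_input})
    reply = complete(context)
    context.append({"role": "assistant", "content": reply})
    return reply


def fake_complete(messages):
    # Hypothetical stand-in for the model: echoes the latest user message.
    return f"You said: {messages[-1]['content']}"


history = [{"role": "system", "content": "You are OrderBot."}]
chat_turn(history, "Hi", fake_complete)
chat_turn(history, "One cheese pizza, please", fake_complete)
print([m["role"] for m in history])
# → ['system', 'user', 'assistant', 'user', 'assistant']
```

Because the history only grows, long conversations eventually approach the model's context limit; the tutorial's bot does not handle this, and neither does the sketch.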

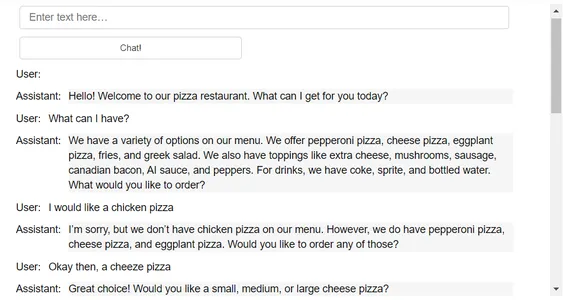

Providing the prompt as context

The prompt is provided in the context variable, a list containing a dictionary. The dictionary holds the role and content of the system message for an automated service called OrderBot for a pizza restaurant. The content describes how OrderBot interacts with customers: greeting them, collecting the order, asking about pickup or delivery, summarizing the order, checking for additional items, and so on.

import panel as pn  # GUI
pn.extension()

panels = []  # collect display

context = [{'role': 'system', 'content': """
You are OrderBot, an automated service to collect orders for a pizza restaurant.
You first greet the customer, then collect the order,
and then ask if it's a pickup or delivery.
You wait to collect the entire order, then summarize it and check for a final
time if the customer wants to add anything else.
If it's a delivery, you ask for an address.
Finally, you collect the payment.
Make sure to clarify all options, extras, and sizes to uniquely
identify the item from the menu.
You respond in a short, very conversational friendly style.
The menu includes
pepperoni pizza 12.95, 10.00, 7.00
cheese pizza 10.95, 9.25, 6.50
eggplant pizza 11.95, 9.75, 6.75
fries 4.50, 3.50
greek salad 7.25
Toppings:
extra cheese 2.00,
mushrooms 1.50
sausage 3.00
Canadian bacon 3.50
AI sauce 1.50
peppers 1.00
Drinks:
coke 3.00, 2.00, 1.00
sprite 3.00, 2.00, 1.00
bottled water 5.00
"""}]

Displaying the basic panel for the bot

The code sets up a Panel-based dashboard with an input widget and a button to start the conversation. When the button is clicked, the collect_messages function is triggered to process the user input and update the conversation panel.

inp = pn.widgets.TextInput(value="Hi", placeholder="Enter text here…")
button_conversation = pn.widgets.Button(name="Chat!")

interactive_conversation = pn.bind(collect_messages, button_conversation)

dashboard = pn.Column(
    inp,
    pn.Row(button_conversation),
    pn.panel(interactive_conversation, loading_indicator=True, height=300),
)
dashboard

Given this prompt, the bot behaves like an order bot for a pizza restaurant. This shows how powerful a well-written prompt is and how easily you can build apps with it.

Conclusion

In conclusion, effective prompt design is a crucial aspect of prompt engineering for language models. Well-designed prompts provide a starting point and context for generating text, and so shape the output of language models. They play an important role in guiding AI-generated content by setting expectations, providing direction, and shaping the style, tone, and purpose of the generated text.

- Effective prompts yield more focused, relevant, and desirable results, improving both the overall performance of language models and the user experience.

- To create impactful prompts, consider the desired outcome, provide clear instructions, incorporate relevant context, and iteratively refine prompts based on feedback and evaluation.

Mastering the art of prompt engineering therefore allows content creators to harness the full potential of language models and leverage AI technology, such as the OpenAI API, to achieve their specific goals.

The media displayed in this article is not owned by Analytics Vidhya and is used at the discretion of the author.