Text-to-image generation is a term we are all familiar with by now. The post-Stable Diffusion era has given image generation a new meaning, and subsequent advances have made it really hard to tell AI-generated images apart from real ones today. With MidJourney constantly improving and Stability AI releasing updated models, the quality of text-to-image models has reached an extremely high level.

We have also seen attempts to further customize these models. People have worked on models that can edit an image with the help of AI, such as replacing an object or changing the background, all guided by a text prompt. These advanced text-to-image capabilities have also given rise to a startup that lets you generate your own custom AI avatars, and it became a hit almost overnight.

Custom text-to-image generation has been a fascinating area of research: the goal is to generate new scenes or styles of a given concept while maintaining its identity. This challenging task involves learning from a set of images of the concept and then generating new images with different poses, backgrounds, object placements, clothing, lighting, and styles. While existing approaches have made significant progress, they often rely on test-time fine-tuning, which is time-consuming and limits scalability.

Proposed approaches to custom image synthesis have generally built on pretrained text-to-image models. These models are capable of generating images, but they must be fine-tuned to learn each new concept, which means storing a separate set of model weights per concept.

What if we had an alternative? What if we could build a custom text-to-image generation model that doesn't rely on test-time fine-tuning, so that it scales better and achieves customization in a short time? Time to meet InstantBooth.

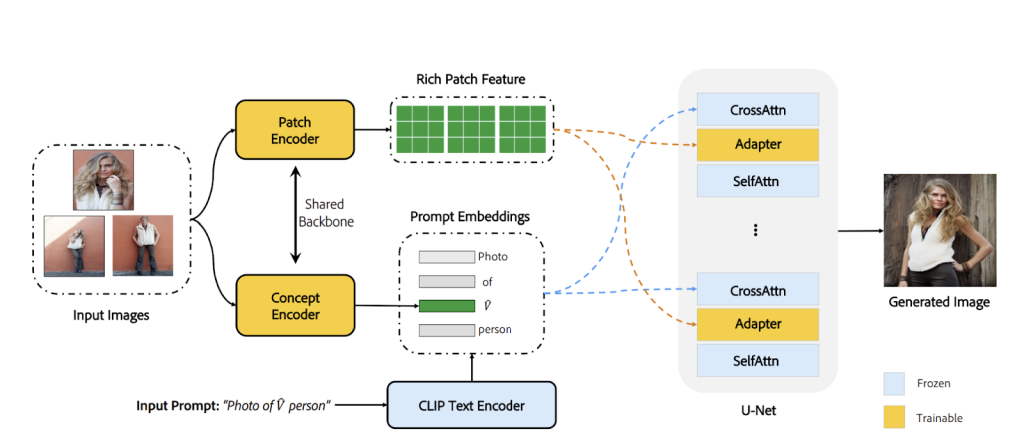

To address these limitations, InstantBooth proposes a novel architecture that learns the general concept of the input images using an image encoder. It then maps these images to a compact textual embedding, which helps the model generalize to unseen concepts.
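To make the idea more concrete, here is a toy Python sketch of how a set of concept images could be turned into a single pseudo-word inside the prompt. It is not InstantBooth's actual encoder: the mean pooling, the linear `weight` matrix standing in for the learned image encoder, and the `*` placeholder token are all assumptions made for illustration.

```python
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def mean_pool(features: List[Vector]) -> Vector:
    """Average the per-image feature vectors into one vector for the concept."""
    n = len(features)
    return [sum(f[i] for f in features) / n for i in range(len(features[0]))]


def project(vec: Vector, weight: Matrix) -> Vector:
    """Linear map; `weight` has shape (text_dim, image_dim)."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weight]


def encode_concept(image_features: List[Vector], weight: Matrix) -> Vector:
    """Hypothetical concept encoder: pool all input images, then project the
    result into the text-embedding space as one pseudo-word embedding."""
    return project(mean_pool(image_features), weight)


def build_prompt_embeddings(
    tokens: List[str],
    token_table: Dict[str, Vector],
    concept: Vector,
    placeholder: str = "*",  # hypothetical placeholder token for the concept
) -> List[Vector]:
    """Look up each prompt token, swapping the placeholder for the concept embedding."""
    return [concept if t == placeholder else token_table[t] for t in tokens]


# Usage: two input images with 3-d features, mapped into a 2-d text space.
feats = [[1.0, 0.0, 2.0], [3.0, 2.0, 0.0]]
W = [[1.0, 0.0, 0.0], [0.0, 0.5, 0.5]]
concept = encode_concept(feats, W)  # [2.0, 1.0]
table = {"a": [0.1, 0.1], "photo": [0.2, 0.3], "of": [0.0, 0.4]}
prompt = build_prompt_embeddings(["a", "photo", "of", "*"], table, concept)
```

Because the concept ends up in the same space as ordinary word embeddings, the surrounding words of the prompt can still steer the generation as usual.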

While the compact embedding captures the general idea, it does not include the fine-grained identity details needed to generate accurate images. To address this problem, InstantBooth introduces trainable adapter layers inspired by recent advances in language and vision pretraining. These adapter layers extract rich identity information from the input images and inject it into the frozen backbone of the pretrained model. This ingenious approach preserves the identity details of the input concept while retaining the generation ability and language controllability of the pretrained model.
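The adapter idea can be sketched in a similar spirit. The snippet below is a hypothetical, heavily simplified layer, not the paper's architecture: each backbone token attends to identity patch features from the input images, and the result is added back through a learnable tanh gate. Initializing the gate to zero is our assumption (a common trick for adapters), and it means the frozen model's behavior is untouched at the start of training.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, patches: List[Vector]) -> Vector:
    """Scaled dot-product attention of one backbone token over the identity
    patch features (patches double as keys and values to keep it short)."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([scale * sum(q * p for q, p in zip(query, patch)) for patch in patches])
    return [sum(w * patch[i] for w, patch in zip(weights, patches)) for i in range(len(query))]


class GatedAdapter:
    """Hypothetical adapter: h_out = h + tanh(gate) * attend(h, patches).

    The backbone that produced `h` stays frozen; only the adapter's own
    parameters (here just `gate`; in practice also attention projections)
    would be trained.
    """

    def __init__(self, gate: float = 0.0) -> None:
        self.gate = gate  # zero init => the adapter starts as an identity map

    def __call__(self, hidden: List[Vector], patches: List[Vector]) -> List[Vector]:
        g = math.tanh(self.gate)
        return [
            [h_i + g * a_i for h_i, a_i in zip(h, attend(h, patches))]
            for h in hidden
        ]


# Usage: two 2-d backbone tokens and one identity patch feature.
hidden = [[1.0, 0.0], [0.0, 1.0]]
patches = [[0.5, 0.5]]
frozen = GatedAdapter(gate=0.0)(hidden, patches)  # equals `hidden`: the gate is closed
mixed = GatedAdapter(gate=1.0)(hidden, patches)   # identity features mixed in
```

The residual form keeps the pretrained path intact, so the text prompt keeps its influence while the gate learns how much identity detail to let through.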

Moreover, InstantBooth eliminates the need for paired training data, which makes it more practical to train. Instead of relying on multiple images of the same concept, the model is trained on ordinary text-image pairs. This training strategy allows the model to generalize well to new concepts: when presented with images of a new concept, it can render the object with significant variations in pose and placement while satisfactorily preserving its identity and keeping the image aligned with the text.
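One way to read "no paired data" is that each training example can be built from a single captioned image, with the concept input cut from the very image the model learns to reconstruct. The sketch below follows that assumption; the random crop, the toy image format, and the `make_training_sample` helper are hypothetical and only illustrate the idea.

```python
import random
from typing import Dict, List

Image = List[List[int]]  # toy grayscale image: rows of pixel values


def random_crop(image: Image, size: int, rng: random.Random) -> Image:
    """Take a random size x size crop, standing in for whatever augmentation
    is applied to the concept image before it reaches the encoder."""
    top = rng.randint(0, len(image) - size)
    left = rng.randint(0, len(image[0]) - size)
    return [row[left:left + size] for row in image[top:top + size]]


def make_training_sample(
    image: Image,
    caption: str,
    concept_word: str,
    crop_size: int,
    rng: random.Random,
    placeholder: str = "*",
) -> Dict[str, object]:
    """Hypothetical helper: build one example from a single (image, caption)
    pair. No second photo of the same object is needed, because the concept
    input is cut from the target image itself."""
    prompt = " ".join(placeholder if w == concept_word else w for w in caption.split())
    return {
        "concept_input": random_crop(image, crop_size, rng),  # fed to the image encoder
        "prompt": prompt,  # text condition with the concept word replaced
        "target": image,  # what the generator learns to reconstruct
    }


# Usage with a 4x4 toy image and a fixed seed for reproducibility.
img = [[r * 4 + c for c in range(4)] for r in range(4)]
sample = make_training_sample(img, "a dog on the grass", "dog", 2, random.Random(0))
```

Here `sample["prompt"]` becomes `"a * on the grass"`, so at inference time a new concept can slot into the placeholder without any extra training.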

Overall, InstantBooth makes three key contributions to custom text-to-image generation. First, it no longer requires test-time fine-tuning. Second, it improves generalization to unseen concepts by turning input images into textual embeddings. Third, by injecting a rich representation of visual features into the pretrained model, it preserves identity without sacrificing language controllability. On top of that, InstantBooth achieves a 100x speedup while retaining visual quality similar to existing approaches.


Ekrem Çetinkaya received his B.Sc. in 2018 and M.Sc. in 2019 from Ozyegin University, Istanbul, Türkiye. He wrote his M.Sc. thesis on image denoising using deep convolutional networks. He is currently pursuing a Ph.D. at the University of Klagenfurt, Austria, and working as a researcher on the ATHENA project. His research interests include deep learning, computer vision, and multimedia networking.