People are excellent navigators of the physical world, due in part to their remarkable ability to build cognitive maps that form the basis of spatial memory. This ranges from localizing landmarks at varying ontological levels (such as a book on a shelf in the living room) to determining whether a layout permits navigation from point A to point B. Building robots that are proficient at navigation requires an interconnected understanding of (a) vision and natural language (to associate landmarks or follow instructions), and (b) spatial reasoning (to connect a map representing an environment to the true spatial distribution of objects). While there has been much recent progress in training joint visual-language models on Internet-scale data, figuring out how best to connect them to a spatial representation of the physical world that robots can use remains an open research question.

To explore this, we collaborated with researchers from the University of Freiburg and Nuremberg to develop Visual Language Maps (VLMaps), a map representation that directly fuses pre-trained visual-language embeddings into a 3D reconstruction of the environment. VLMaps, which is to appear at ICRA 2023, is a simple approach that allows robots to (1) index visual landmarks in the map using natural language descriptions, (2) employ Code as Policies to navigate to spatial goals, such as “get between the sofa and the TV” or “move three meters to the right of the chair”, and (3) generate open-vocabulary obstacle maps, allowing multiple robots with different morphologies (for example, mobile manipulators vs. drones) to use the same VLMap for path planning. VLMaps can be used out of the box without additional labeled data or model fine-tuning, and outperforms other zero-shot methods by over 17% on challenging object-goal and spatial-goal navigation tasks in Habitat and Matterport3D. We are also releasing the code used for our experiments along with an interactive simulated robot demo.

| VLMaps can be built by fusing pre-trained visual language embeddings into a 3D reconstruction of the environment. At runtime, a robot can query the VLMap to locate visual landmarks given natural language descriptions, or to build open vocabulary obstacle maps for route planning. |

Classic 3D maps with a modern multimodal twist

VLMaps combines the geometric structure of classic 3D reconstructions with the expressiveness of modern visual-language models pre-trained on Internet-scale data. As the robot moves around, VLMaps uses a pre-trained visual-language model to compute dense per-pixel embeddings from posed RGB camera views, and integrates them into a map-sized 3D tensor aligned with an existing 3D reconstruction of the physical world. This representation allows the system to localize landmarks given their natural language descriptions (such as “a book on a shelf in the living room”) by comparing their text embeddings to all locations in the tensor and finding the closest match. These target locations can then be used directly as goal coordinates for language-conditioned navigation, as primitive API function calls for Code as Policies to process spatial goals (for example, code-writing models interpret “in between” as arithmetic between two locations), or to sequence multiple navigation goals for long-horizon instructions.
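The fuse-then-query idea can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the released implementation: it assumes a pinhole camera with intrinsics `K`, a metric depth image, a 4×4 camera-to-world pose, and an `(H, W, D)` array of per-pixel embeddings from some visual-language encoder (not shown). It collapses the map to a top-down grid whose cells average the embeddings of the pixels that land in them, and it localizes a text query by cosine similarity. The names `backproject`, `fuse` and `query`, the averaging rule, and the choice of ground-plane axes are all illustrative.

```python
import numpy as np


def backproject(depth, K, T_world_cam):
    """Lift every pixel to a 3D world point using a pinhole camera model."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # column and row index of each pixel
    x = (u - K[0, 2]) * depth / K[0, 0]
    y = (v - K[1, 2]) * depth / K[1, 1]
    pts_cam = np.stack([x, y, depth, np.ones_like(depth)], axis=-1).reshape(-1, 4)
    return (pts_cam @ T_world_cam.T)[:, :3]


def fuse(grid_sum, grid_count, pixel_emb, depth, K, T_world_cam, cell_size):
    """Add each pixel's embedding to the top-down grid cell its 3D point falls in."""
    pts = backproject(depth, K, T_world_cam)
    emb = pixel_emb.reshape(-1, pixel_emb.shape[-1])
    # Assumption (hypothetical): world x and z span the ground plane; height is dropped.
    col = np.floor(pts[:, 0] / cell_size).astype(int)
    row = np.floor(pts[:, 2] / cell_size).astype(int)
    n_rows, n_cols = grid_count.shape
    ok = ((depth.reshape(-1) > 0) & (row >= 0) & (row < n_rows)
          & (col >= 0) & (col < n_cols))
    # np.add.at is unbuffered, so several pixels hitting one cell all accumulate.
    np.add.at(grid_sum, (row[ok], col[ok]), emb[ok])
    np.add.at(grid_count, (row[ok], col[ok]), 1)


def query(grid_sum, grid_count, text_emb):
    """Return the best-matching cell for a text embedding and the full score map."""
    mean = grid_sum / np.maximum(grid_count, 1)[..., None]  # average embedding per cell
    denom = np.linalg.norm(mean, axis=-1) * np.linalg.norm(text_emb)
    scores = (mean @ text_emb) / np.maximum(denom, 1e-8)     # cosine similarity
    scores[grid_count == 0] = -np.inf                        # ignore unobserved cells
    return np.unravel_index(np.argmax(scores), scores.shape), scores
```

In this sketch `fuse` would be called once per posed frame as the robot explores, while `query` only reads the accumulated grid, so its cost does not depend on how many frames were fused.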

```python
# move first to the left side of the counter, then move between the sink and the oven, then move back and forth to the sofa and the table twice.
robot.move_to_left('counter')
robot.move_in_between('sink', 'oven')
pos1 = robot.get_pos('sofa')
pos2 = robot.get_pos('table')
for i in range(2):
    robot.move_to(pos1)
    robot.move_to(pos2)
# move 2 meters north of the laptop, then move 3 meters rightward.
robot.move_north('laptop')
robot.face('laptop')
robot.turn(180)
robot.move_forward(2)
robot.turn(90)
robot.move_forward(3)
```

| VLMaps can be used to return the map coordinates of waypoints given natural language descriptions, which can be wrapped as a primitive API function call to Code as Policies to sequence multi-target long-horizon navigation instructions. |
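To illustrate how a VLMap query can become a primitive that Code as Policies calls, here is a hypothetical `SketchRobot` class. The landmark dictionary stands in for the VLMap lookup, `move_to` only records waypoints instead of planning a path, and only `get_pos`, `move_to` and `move_in_between` from the generated program are implemented. The class, its behavior and the landmark coordinates are assumptions for illustration, not the project's robot API.

```python
from dataclasses import dataclass, field

Point = tuple[float, float]


@dataclass
class SketchRobot:
    """Hypothetical stand-in for navigation primitives exposed to Code as Policies.

    `landmarks` replaces a real VLMap query: it maps a language label to the
    map coordinate such a query would return.
    """
    landmarks: dict[str, Point]
    waypoints: list[Point] = field(default_factory=list)

    def get_pos(self, name: str) -> Point:
        return self.landmarks[name]

    def move_to(self, pos: Point) -> None:
        # A real robot would plan and execute a path; the sketch only logs the goal.
        self.waypoints.append(pos)

    def move_in_between(self, a: str, b: str) -> None:
        # "In between" becomes arithmetic: go to the midpoint of the two landmarks.
        (xa, ya), (xb, yb) = self.get_pos(a), self.get_pos(b)
        self.move_to(((xa + xb) / 2, (ya + yb) / 2))


# Part of the generated program, run against invented landmark coordinates.
robot = SketchRobot({"sink": (0.0, 0.0), "oven": (4.0, 2.0),
                     "sofa": (1.0, 5.0), "table": (3.0, 5.0)})
robot.move_in_between("sink", "oven")
pos1 = robot.get_pos("sofa")
pos2 = robot.get_pos("table")
for _ in range(2):
    robot.move_to(pos1)
    robot.move_to(pos2)
print(robot.waypoints)
# [(2.0, 1.0), (1.0, 5.0), (3.0, 5.0), (1.0, 5.0), (3.0, 5.0)]
```

Keeping the primitives this small is what makes the approach work: the language model composes them with ordinary Python control flow (the `for` loop), while spatial phrases like “in between” reduce to arithmetic on returned coordinates.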

Results

We evaluated VLMaps on challenging zero-shot object-goal and spatial-goal navigation tasks in Habitat and Matterport3D, without additional training or fine-tuning. The robot is asked to navigate to four subgoals specified sequentially in natural language. We observe that VLMaps significantly outperforms strong baselines (including CoW and LM-Nav) by up to 17% due to its improved visuo-lingual grounding.

| Method | 1 subgoal in a row | 2 subgoals in a row | 3 subgoals in a row | 4 subgoals in a row | Independent subgoals |
|---|---|---|---|---|---|
| LM-Nav | 26 | 4 | 1 | 1 | 26 |
| CoW | 42 | 15 | 7 | 3 | 36 |
| CLIP Map | 33 | 8 | 2 | 0 | 30 |
| VLMaps (ours) | 59 | 34 | 22 | 15 | 59 |
| GT Map | 91 | 78 | 71 | 67 | 85 |

| The VLMaps approach performs favorably over alternative open-vocabulary baselines on multi-object navigation (success rate [%]) and specifically excels on longer-horizon tasks with multiple subgoals. |
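One way to read the two kinds of columns in the table is sketched below. The definitions are assumptions, not taken from the paper: a “k in a row” success counts an episode only if its first k subgoals were all reached, while the independent rate counts every reached subgoal regardless of order. The function names are hypothetical and the episodes are made-up toy data, not experimental results.

```python
def in_a_row_rate(episodes: list[list[bool]], k: int) -> float:
    """Percent of episodes whose first k subgoals were all reached (assumed definition)."""
    hits = sum(1 for ep in episodes if len(ep) >= k and all(ep[:k]))
    return 100.0 * hits / len(episodes)


def independent_rate(episodes: list[list[bool]]) -> float:
    """Percent of all subgoals reached, ignoring order (assumed definition)."""
    reached = sum(sum(ep) for ep in episodes)
    total = sum(len(ep) for ep in episodes)
    return 100.0 * reached / total


# Toy data: one list per episode, one bool per subgoal in the order given.
episodes = [
    [True, True, False, True],
    [True, False, True, True],
    [False, True, True, True],
    [True, True, True, True],
]
print([in_a_row_rate(episodes, k) for k in range(1, 5)])  # [75.0, 50.0, 25.0, 25.0]
print(independent_rate(episodes))                        # 81.25
```

Under these definitions one early failure removes an episode from every later column, so the in-a-row rates can only stay the same or fall as k grows, which matches the pattern for every method in the table.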

A key advantage of VLMaps is its ability to understand spatial goals, such as “get between the sofa and the television” or “move ten feet to the right of the chair.” Experiments on long-horizon spatial-goal navigation show an improvement of up to 29%. To gain more insight into the regions of the map that are activated by different language queries, we visualize the heatmaps for the object type “chair”.

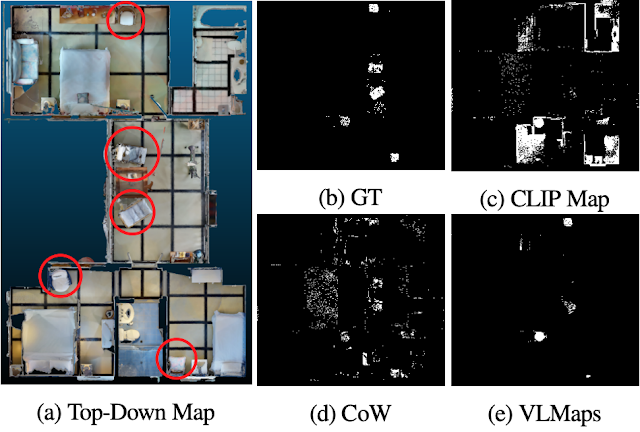
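A spatial goal such as “move three meters to the right of the chair” ultimately becomes a small geometric computation on the coordinates a VLMap returns. The sketch below assumes “right” means right as seen from the robot's current position looking at the object, in a 2D map frame with counter-clockwise-positive angles. The function name and this interpretation are hypothetical; the VLMaps code may resolve such phrases differently.

```python
import math


def right_of(robot_xy, object_xy, distance):
    """Point `distance` meters to the right of an object, as seen from the robot.

    Assumes a 2D map frame with counter-clockwise-positive angles, so rotating
    the viewing direction (fx, fy) by -90 degrees gives (fy, -fx).
    """
    fx = object_xy[0] - robot_xy[0]
    fy = object_xy[1] - robot_xy[1]
    norm = math.hypot(fx, fy)
    if norm == 0:
        raise ValueError("robot is on the object; 'right of' is undefined")
    rx, ry = fy / norm, -fx / norm  # unit vector pointing to the robot's right
    return (object_xy[0] + distance * rx, object_xy[1] + distance * ry)


# Robot at the origin facing a chair 5 m along +y; its right is then +x.
print(right_of((0.0, 0.0), (0.0, 5.0), 3.0))  # (3.0, 5.0)
```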

Open Vocabulary Obstacle Maps

A single VLMap of the same environment can also be used to build open-vocabulary obstacle maps for path planning. This is done by taking the union of binary-thresholded detection maps over a list of landmark categories that the robot can or cannot traverse (such as “tables”, “chairs”, “walls”, etc.). This is useful because robots with different morphologies may move around the same environment differently. For example, “tables” are obstacles for a large mobile robot, but may be traversable for a drone. We observe that using VLMaps to create multiple robot-specific obstacle maps improves navigation efficiency by up to 4% (measured in terms of task success rates weighted by path length) over using a single shared obstacle map for all robots. See the paper for more details.


| Experiments with a mobile robot (LoCoBot) and a drone in AI2THOR simulated environments. Left: Top-down view of an environment. Middle columns: Agents' observations during navigation. Right: Obstacle maps generated for different embodiments with the corresponding navigation paths. |
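The obstacle-map construction is a union of thresholded maps, which a few lines of NumPy can sketch. It assumes hypothetical per-category detection maps (for example, similarity scores like those behind the “chair” heatmap), a single hand-picked threshold, and a per-robot list of categories that robot cannot traverse. The function name, threshold and toy 3×3 scores are invented for illustration.

```python
import numpy as np


def obstacle_map(category_scores: dict[str, np.ndarray],
                 blocking: list[str], threshold: float) -> np.ndarray:
    """Union of binary-thresholded detection maps over categories one robot cannot traverse."""
    shape = next(iter(category_scores.values())).shape
    occupied = np.zeros(shape, dtype=bool)
    for name in blocking:
        occupied |= category_scores[name] > threshold  # threshold, then OR into the union
    return occupied


# Toy detection maps on a 3x3 grid (invented values).
table = np.zeros((3, 3))
table[1, 1] = 0.9          # a table in the center cell
wall = np.zeros((3, 3))
wall[:, 0] = 0.8           # a wall along the left column
scores = {"table": table, "wall": wall}

ground_robot = obstacle_map(scores, blocking=["table", "wall"], threshold=0.5)
drone = obstacle_map(scores, blocking=["wall"], threshold=0.5)  # can fly over the table
print(int(ground_robot.sum()), int(drone.sum()))  # 4 3
```

Both maps come from the same scores; only the per-robot `blocking` list differs, which is why one VLMap can serve several embodiments.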

Conclusion

VLMaps takes an initial step toward grounding pre-trained visual-language information into spatial map representations that robots can use for navigation. Experiments in simulated and real environments show that VLMaps can enable language-using robots to (i) index landmarks (or spatial locations relative to them) given their natural language descriptions, and (ii) generate open-vocabulary obstacle maps for path planning. Extending VLMaps to handle more dynamic environments (e.g., with moving people) is an interesting avenue for future work.

Open-source release

We have released the code needed to reproduce our experiments and an interactive simulated robot demo on the project website, which also contains additional videos and code to benchmark agents in simulation.

Acknowledgements

We would like to thank the co-authors of this research: Chenguang Huang and Wolfram Burgard.