Text-to-image generation is a new and exciting area of research in the field of artificial intelligence (AI), where the goal is to generate realistic images based on textual descriptions. The ability to generate images from text has a wide range of applications, from art to entertainment, where it can be used to create images for books, movies, and video games.

One specific application of text-to-image generation is texture visualization, which involves creating images that represent different types of textures, such as fabrics, surfaces, and materials. Texture images represent essential applications in computer graphics, animation, and virtual reality, where realistic textures can enhance the immersive user experience.

Another area of interest in AI research is 3D texture transfer, which involves the transfer of texture information from one object to another in a 3D environment. This process creates true-to-life 3D models by transferring texture information from a source to a destination object. This approach can be used in fields such as product visualization, where realistic 3D models are essential.

Deep learning techniques have revolutionized the field of text-to-image generation, allowing the creation of highly realistic and detailed images. Using deep neural networks, researchers can train models to generate images that closely match textual descriptions or transfer textures between 3D objects.

Recent work on language-driven models indirectly exploits the well-known Stable Diffusion generative text-to-image model for sheet music distillation. This technique consists of distilling the knowledge of a large network to a smaller one, which is trained to predict the scores assigned to the images of the first network.

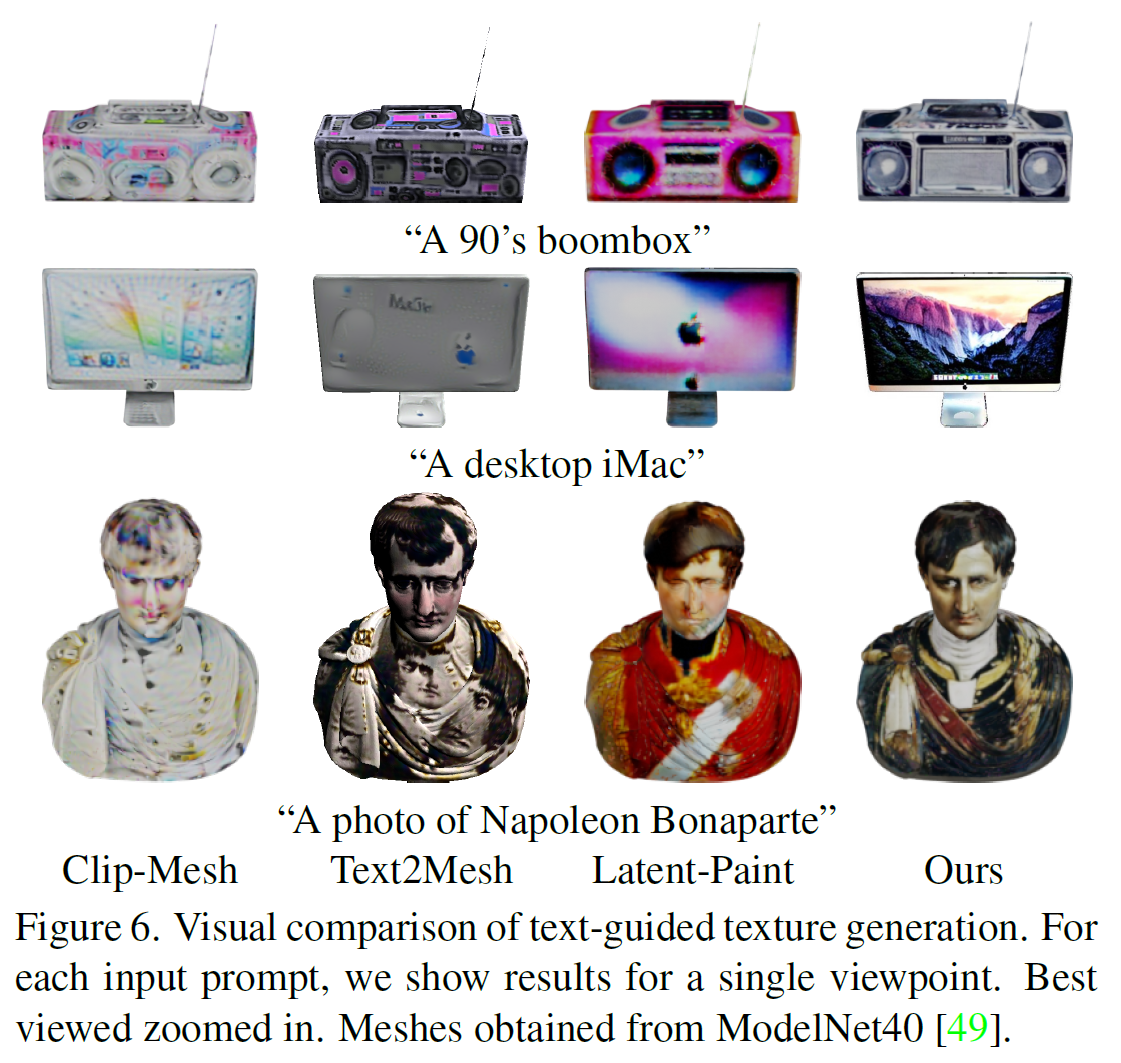

While representing a vast improvement over previously employed techniques, these models fall short in terms of the quality achieved for the 3D texture transfer process compared to their 2D counterparts.

To improve the accuracy of 3D texture transfer, a new AI framework called TEXTure has been proposed.

Below is an overview of the pipeline.

Unlike the approaches mentioned above, TEXTure applies a complete denoising process on the rendered images that takes advantage of a depth-conditioned diffusion model.

Given a 3D mesh to the texture, the core idea is to iteratively render from different viewpoints, apply a depth-based paint scheme, and project it back to an atlas.

However, the risk of applying this process naively is the generation of unrealistic or inconsistent textures due to the stochastic nature of the generation process.

To work around this problem, the selected 3D mesh is split into a clipping of “keep”, “refine” and “render” regions.

“Spawn” regions are parts of objects that need to be painted from the ground; “refine” refers to parts of objects that were textured from a different perspective and now need to be adjusted to a new point of view; “maintain” describes the act of preserving the painted texture.

According to the authors, the combination of these three techniques makes it possible to generate highly realistic results in a matter of minutes.

The results presented by the authors are reported below and compared with the most advanced approaches.

This was the brief for TEXTure, a new AI framework for texturing text-driven 3D meshes.

If you are interested or would like more information on this framework, you can find a link to the document and the project page.

review the Paper, Codeand project page. All credit for this research goes to the researchers of this project. Also, don’t forget to join our 16k+ ML SubReddit, discord channeland electronic newsletterwhere we share the latest AI research news, exciting AI projects, and more.

![]()

Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He currently works at the Christian Doppler ATHENA Laboratory and his research interests include adaptive video streaming, immersive media, machine learning and QoS / QoE evaluation.

NEWSLETTER

NEWSLETTER

Recommended reading: Leveraging TensorLeap for effective transfer learning: bridging domain gaps

Recommended reading: Leveraging TensorLeap for effective transfer learning: bridging domain gaps