Amazon SageMaker Ground Truth Plus is a managed data labeling service that makes it easy to label data for machine learning (ML) applications. A common use case is semantic segmentation, which is a computer vision ML technique that involves assigning class labels to individual pixels in an image. For example, in video frames captured by a moving vehicle, class labels can include vehicles, pedestrians, roads, traffic signs, buildings, or backgrounds. It provides a highly accurate understanding of the locations of different objects in the image and is often used to build perception systems for autonomous vehicles or robotics. To build an ML model for semantic segmentation, it is first necessary to label a large volume of data at the pixel level. This labeling process is complex. Requires skilled taggers and a lot of time – some images can take up to 2 hours or more to tag accurately!

In 2019, we released an ML-powered interactive labeling tool called Auto-segment for Ground Truth that allows you to quickly and easily create high-quality segmentation masks. For more information, see Automatic targeting tool. This feature works by allowing you to click the top, left, bottom, and right “endpoints” of an object. An ML model running in the background will incorporate this user input and return a high-quality segmentation mask that is immediately rendered in the Ground Truth tagging tool. However, this feature only allows you to place four clicks. In certain cases, the ML-generated mask can inadvertently miss certain parts of an image, such as around the edge of the object where edges are blurry or where color, saturation, or shadows blend with the surroundings.

Click on extreme points with a flexible number of corrective clicks

We have now enhanced the tool to allow additional clicks on the boundary points, which provides real-time feedback to the ML model. This allows you to create a more precise segmentation mask. In the following example, the initial segmentation result is not accurate due to weak boundaries near the shadow. It is important to note that this tool works in a mode that allows feedback in real time, it does not require you to specify all points at once. Instead, you can first do four mouse clicks, which will trigger the ML model to produce a segmentation mask. You can then inspect this mask, spot possible inaccuracies, and then click additional ones as appropriate to “nudge” the model toward the correct result.

Our previous labeling tool allowed you to place exactly four mouse clicks (red dots). The initial segmentation result (shaded red area) is not accurate due to weak boundaries near the shadow (bottom left of red mask).

With our improved tagging tool, the user again first makes four mouse clicks (red dots in the figure above). You then have the opportunity to inspect the resulting segmentation mask (red shaded area in the figure above). You can make additional mouse clicks (green dots in the figure below) to make the model refine the mask (red shaded area in the figure below).

Compared to the original version of the tool, the improved version provides an improved result when objects are deformable, non-convex, and vary in shape and appearance.

We simulate the performance of this improved tool on sample data by first running the benchmark tool (with just four extreme clicks) to generate a segmentation mask and evaluate its Mean Intercept Over Union (mIoU), a common measure of accuracy for segmentation masks. segmentation. We then apply simulated corrective clicks and assess the improvement in mIoU after each simulated click. The following table summarizes these results. The first row shows the mIoU and the second row shows the error (which is given by 100% minus the mIoU). With just five extra mouse clicks, we can reduce the error by 9% for this task!

| . | . | Number of corrective clicks | . | |||

| . | Base | 1 | 2 | 3 | 4 | 5 |

| meow | 72.72 | 76.56 | 77.62 | 78.89 | 80.57 | 81.73 |

| Mistake | 27% | 23% | 22% | twenty-one% | 19% | 18% |

Integration with Ground Truth and performance profiles

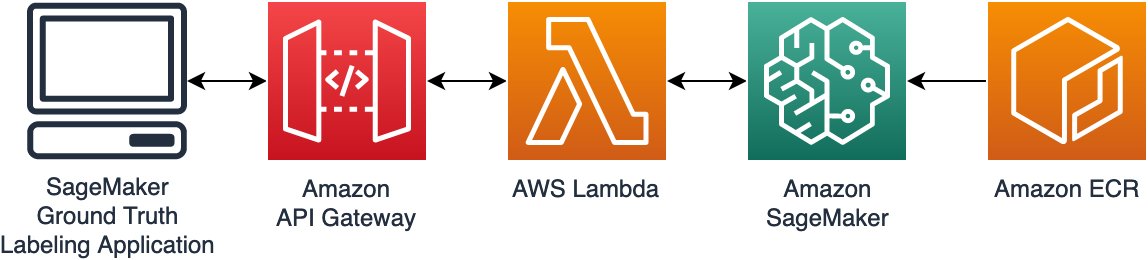

To integrate this model with Ground Truth, we follow a standard architecture pattern as shown in the following diagram. First, we build the ML model on a Docker image and deploy it to the Amazon Elastic Container Registry (Amazon ECR), a fully managed Docker container registry that makes it easy to store, share, and deploy container images. Using the SageMaker Inference Toolkit in building the Docker image allows us to easily use best practices for model serving and achieve low latency inference. We then create an Amazon SageMaker real-time endpoint to host the model. We introduced an AWS Lambda function as a proxy against the SageMaker endpoint to provide various types of data transformation. Finally, we use Amazon API Gateway as a way to integrate with our front end, the Ground Truth labeling application, to provide secure authentication to our back end.

You can follow this generic pattern for your own purpose-built machine learning tool use cases and integrate them with custom Ground Truth task UIs. For more information, see Create a Custom Data Labeling Workflow with Amazon SageMaker Ground Truth.

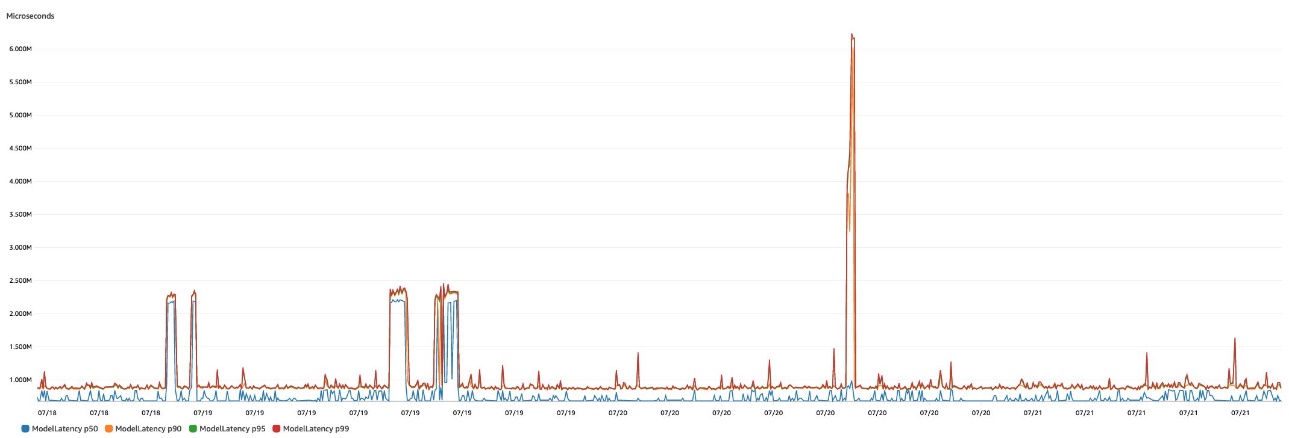

After provisioning this architecture and deploying our model using the AWS Cloud Development Kit (AWS CDK), we evaluated the latency characteristics of our model with different types of SageMaker instances. This is very easy to do because we use SageMaker real-time inference endpoints to serve our model. SageMaker real-time inference endpoints integrate seamlessly with Amazon CloudWatch and emit metrics such as memory utilization and model latency without configuration (see SageMaker Endpoint Invocation Metrics for more details). ).

In the following figure, we show the ModelLatency metric emitted natively by SageMaker real-time inference endpoints. We can easily use various metric math functions in CloudWatch to display latency percentiles, such as p50 or p90 latency.

The following table summarizes these results for our enhanced extreme click tool for semantic targeting for three instance types: p2.xlarge, p3.2xlarge, and g4dn.xlarge. Although the p3.2xlarge instance provides the lowest latency, the g4dn.xlarge instance provides the best cost-performance. The g4dn.xlarge instance is only 8% slower (35 milliseconds) than the p3.2xlarge instance, but is 81% less expensive per hour than the p3.2xlarge (see Amazon SageMaker Pricing for more details on types of SageMaker instances and prices).

| SageMaker instance type | p90 Latency (ms) | |

| 1 | p2.xlarge | 751 |

| 2 | p3.2xlarge | 424 |

| 3 | g4dn.xgrande | 459 |

Conclusion

In this post, we introduce an extension to Ground Truth’s auto-segment feature for semantic segmentation annotation tasks. While the original version of the tool allows you to make exactly four mouse clicks, which activates a model to provide a high-quality segmentation mask, the extension allows you to make corrective clicks, thereby updating and guiding the model. ML to make better predictions. We also present a basic architectural pattern that you can use to implement and integrate interactive tools into Ground Truth labeling UIs. Finally, we summarize model latency and show how using SageMaker real-time inference endpoints makes it easy to monitor model performance.

To learn more about how this tool can reduce the cost of labeling and increase accuracy, visit Amazon SageMaker Data Labeling to start a consultation today.

About the authors

jonathan dollar He is a software engineer at Amazon Web Services, working at the intersection of machine learning and distributed systems. His work involves producing machine learning models and developing novel machine learning-powered software applications to put the latest capabilities in the hands of clients.

jonathan dollar He is a software engineer at Amazon Web Services, working at the intersection of machine learning and distributed systems. His work involves producing machine learning models and developing novel machine learning-powered software applications to put the latest capabilities in the hands of clients.

Li Erran Li he is the manager of applied sciences at humain-in-the-loop services, AWS AI, Amazon. His research interests are 3D deep learning and language and vision representation learning. Previously, he was a Senior Scientist at Alexa AI, Head of Machine Learning at Scale AI, and Lead Scientist at Pony.ai. Prior to that, he was with the Uber ATG insight team and Uber’s machine learning platform team working on machine learning for autonomous driving, machine learning systems, and strategic AI initiatives. He began his career at Bell Labs and was an Adjunct Professor at Columbia University. He co-taught tutorials at ICML’17 and ICCV’19, and co-organized various workshops at NeurIPS, ICML, CVPR, ICCV on machine learning for autonomous driving, 3D vision and robotics, machine learning systems and adversarial machine learning. He has a PhD in computer science from Cornell University. He is a member of ACM and a member of IEEE.

Li Erran Li he is the manager of applied sciences at humain-in-the-loop services, AWS AI, Amazon. His research interests are 3D deep learning and language and vision representation learning. Previously, he was a Senior Scientist at Alexa AI, Head of Machine Learning at Scale AI, and Lead Scientist at Pony.ai. Prior to that, he was with the Uber ATG insight team and Uber’s machine learning platform team working on machine learning for autonomous driving, machine learning systems, and strategic AI initiatives. He began his career at Bell Labs and was an Adjunct Professor at Columbia University. He co-taught tutorials at ICML’17 and ICCV’19, and co-organized various workshops at NeurIPS, ICML, CVPR, ICCV on machine learning for autonomous driving, 3D vision and robotics, machine learning systems and adversarial machine learning. He has a PhD in computer science from Cornell University. He is a member of ACM and a member of IEEE.