Image blending is a primary method in computer vision, one of the best-known branches in the artificial intelligence component. The goal is to blend two or more images to produce a unique combination that incorporates the best aspects of each input image. This method is widely used in various application fields, including image editing, computer imaging, and medical imaging.

Image blending is frequently used in artificial intelligence activities such as image segmentation, object identification, and image super-resolution. It is critical for improving image clarity, which is essential for many uses, including robotics, automated driving, and surveillance.

Over the years, various image blending techniques have been created, which are mainly based on deformation of an image through a 2D affine transformation. However, these approaches do not take into account the discrepancy in 3D geometric features such as pose or shape. 3D alignment is much more difficult to achieve, as it requires inferring the 3D structure from a single view.

To address this problem, a 3D-compatible image blending method based on neurally generative radiation fields (NeRF) has been proposed.

The purpose of generative NeRFs is to learn a strategy for synthesizing 3D images using only 2D single-view image collections. Therefore, the authors project the input images to the volume density representation of the generative NeRFs. To reduce the dimensionality and complexity of data and operations, conscious 3D blending is then performed in the latent representation spaces of these NeRFs.

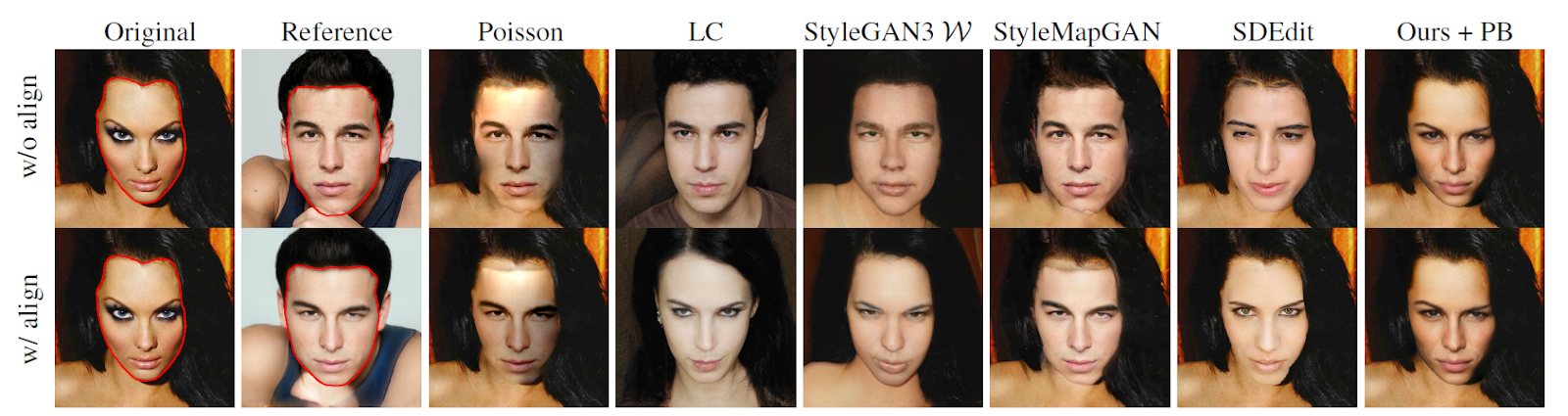

Specifically, the formulated optimization problem considers the impact of the latent code on the synthesis of the combined image. The goal is to edit the foreground based on the reference images and keep the background of the original image. For example, if the two images considered were faces, the framework should replace the facial characteristics and features of the original image with those of the reference image, keeping the rest unchanged (hair, neck, age, environment, etc.).

The following image provides an overview of the architecture compared to previous strategies.

The first method consists of the unique 2D combination of two 2D images without alignment. An improvement can be found in supporting this method of 2D blending with 3D-aware alignment with generative NeRFs. To further exploit the 3D information, the final architecture infers two images in the NeRF latent representation spaces instead of the 2D pixel space.

3D alignment is achieved by a CNN encoder, which infers the camera pose from each input image, and by the latent code of the image itself. Once the reference image is correctly rotated to reflect the original image, the NeRF representations of both images are computed. Finally, the 3D transformation matrix (scale, translation) is estimated from the original image and applied to the reference image to obtain a semantically accurate combination.

The results on unaligned images with different poses and scales are reported below.

According to the authors and their experiments, this method outperforms both classical and learning-based methods in terms of photorealism and fidelity to the input images. Additionally, by taking advantage of latent space representations, this method can untangle geometric and color changes during blending and create view-consistent results.

This was the brief for a new AI framework for the conscious combination of 3D with neurally generative radiation fields (NeRFs).

If you are interested or would like more information on this framework, you can find a link to the document and the project page below.

review the Paper, github, and Project. All credit for this research goes to the researchers of this project. Also, don’t forget to join our 15k+ ML SubReddit, discord channeland electronic newsletterwhere we share the latest AI research news, exciting AI projects, and more.

Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He currently works at the Christian Doppler ATHENA Laboratory and his research interests include adaptive video streaming, immersive media, machine learning and QoS / QoE evaluation.