To become an expert in GenAI Ops, knowing what to learn is not enough; you also need to know how to learn it and apply it effectively. The journey begins with a broad understanding of foundational concepts such as prompt engineering, Retrieval-Augmented Generation (RAG), and AI agents. Your focus should then gradually shift to the operational frameworks that sit around Large Language Models (LLMs) and AI agents: LLMOps and AgentOps. These disciplines will enable you to build, deploy, and maintain intelligent systems at scale.

Here’s a structured, week-by-week GenAI Ops Roadmap to mastering these domains, emphasizing how you will move from learning concepts to applying them practically.


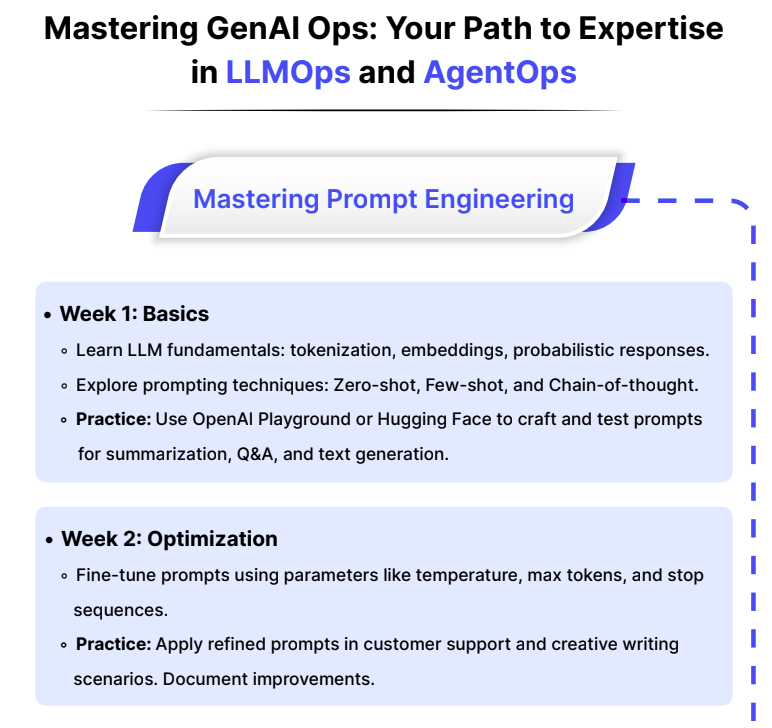

Week 1-2 of GenAI Ops Roadmap: Prompt Engineering Fundamentals

Establish a comprehensive understanding of how language models process prompts, interpret language, and generate precise and meaningful responses. These two weeks lay the foundation for communicating effectively with LLMs and harnessing their potential in various tasks.

Week 1: Learn the Basics of Prompting

Understanding LLMs

- Explore how LLMs, like GPT models, process input text to generate contextually relevant outputs.

- Learn the mechanics of:

- Tokenization: Breaking down input into manageable units (tokens).

- Contextual Embeddings: Representing each token as a vector that reflects its surrounding context.

- Probabilistic Responses: How LLMs predict the next token by assigning a probability to each candidate.
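To make tokenization and next-token probabilities concrete, here is a toy sketch. It assumes a whitespace tokenizer and simple bigram counts, which is far simpler than what real LLMs do (they use subword tokenizers and neural networks), but the idea of turning text into tokens and then assigning a probability to each possible next token is the same. The corpus and function names are made up for illustration.

```python
from collections import Counter, defaultdict


def tokenize(text):
    """Toy tokenizer: lowercase and split on whitespace."""
    return text.lower().split()


def next_token_probs(corpus, prev_token):
    """Estimate P(next token | previous token) from bigram counts."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = tokenize(sentence)
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    total = sum(counts[prev_token].values())
    if not total:
        return {}  # the token was never seen with a successor
    return {tok: c / total for tok, c in counts[prev_token].items()}


corpus = ["the cat sat", "the cat ran", "the dog sat"]
probs = next_token_probs(corpus, "the")
print(probs)  # "cat" is twice as likely as "dog" after "the"
```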

Prompting Techniques

- Zero-Shot Prompting: Directly ask the model a question or task without providing examples, relying entirely on the model’s pretraining knowledge.

- Few-Shot Prompting: Include examples within the prompt to guide the model toward a specific pattern or task.

- Chain-of-Thought Prompting: Use structured, step-by-step guidance in the prompt to encourage logical or multi-step outputs.
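The three techniques differ mainly in how the prompt string is assembled. The sketch below shows one possible layout for each; the function names and the exact wording of the templates are illustrative assumptions, not a standard format.

```python
def zero_shot(task, text):
    """Only the instruction and the input, no examples."""
    return f"{task}\n\nInput: {text}\nOutput:"


def few_shot(task, examples, text):
    """Instruction, a few worked input/output pairs, then the new input."""
    shots = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{task}\n\n{shots}\n\nInput: {text}\nOutput:"


def chain_of_thought(question):
    """Ask the model to reason step by step before answering."""
    return (
        f"Question: {question}\n"
        "Think through the problem step by step, then give the final "
        "answer on a line starting with 'Answer:'."
    )


examples = [("I love this phone", "positive"), ("Terrible battery", "negative")]
print(few_shot("Classify the sentiment.", examples, "Works as expected"))
```

You can paste the resulting strings into any playground and compare how the responses change from one technique to the next.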

Practical Step

- Use platforms like OpenAI Playground or Hugging Face to interact with LLMs.

- Craft and test prompts for tasks such as summarization, text generation, or question-answering.

- Experiment with phrasing, examples, or structure, and observe the effects on the model’s responses.

Week 2: Optimizing Prompts

Refining Prompts for Specific Tasks:

- Adjust wording, formatting, and structure to align responses with specific goals.

- Create concise yet descriptive prompts to reduce ambiguity in outputs.

Advanced Prompt Parameters:

- Temperature:

- Lower values: Make responses more deterministic and focused.

- Higher values: Add randomness and creativity.

- Max Tokens: Set output length limits to maintain brevity or encourage detail.

- Stop Sequences: Define patterns or keywords that signal the model to stop generating text, ensuring cleaner outputs.

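- Top-p (nucleus): The cumulative probability cutoff for token selection. The model samples only from the smallest set of top-ranked tokens whose probabilities add up to at least p, so lower values restrict sampling to fewer, higher-probability tokens.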

- Top-k: Sample from the k most likely next tokens at each step. Lower k focuses on higher probability tokens.
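The sampling parameters above can be illustrated on a toy distribution. This is a minimal sketch with made-up logits for four candidate tokens; hosted APIs apply these steps internally and may differ in detail (for example, in the order the filters are applied).

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn logits into probabilities; temperature must be greater than 0."""
    scaled = [value / temperature for value in logits.values()]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return {tok: e / total for tok, e in zip(logits, exps)}


def filter_top_k_top_p(probs, top_k=None, top_p=None):
    """Keep the k most likely tokens and/or the smallest nucleus reaching p."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    if top_p is not None:
        kept, cumulative = [], 0.0
        for tok, p in ranked:
            kept.append((tok, p))
            cumulative += p
            if cumulative >= top_p:
                break
        ranked = kept
    total = sum(p for _, p in ranked)
    return {tok: p / total for tok, p in ranked}  # renormalize survivors


def sample(probs, rng=random):
    """Draw one token according to its probability."""
    tokens, weights = zip(*probs.items())
    return rng.choices(tokens, weights=weights, k=1)[0]


logits = {"cat": 2.0, "dog": 1.0, "car": 0.5, "sky": -1.0}  # made-up values
cold = softmax(logits, temperature=0.2)  # sharply peaked on "cat"
hot = softmax(logits, temperature=2.0)   # flatter, more random
nucleus = filter_top_k_top_p(softmax(logits), top_p=0.8)
print(sample(nucleus))
```

Lowering the temperature concentrates probability on the top token, while top-k and top-p simply remove unlikely tokens before sampling.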

Here’s the detailed article: 7 LLM Parameters to Enhance Model Performance (With Practical Implementation)

Practical Step:

- Apply refined prompts to real-world scenarios:

- Customer Support: Generate accurate and empathetic responses to customer inquiries.

- FAQ Generation: Automate the creation of frequently asked questions and answers.

- Creative Writing: Brainstorm ideas or develop engaging narratives.

- Compare results of optimized prompts with initial versions. Document improvements in relevance, accuracy, and clarity.


Week 3-4 of GenAI Ops Roadmap: Exploring Retrieval-Augmented Generation (RAG)

Develop a deep understanding of how integrating retrieval mechanisms with generative models enhances accuracy and contextual relevance. These weeks focus on bridging generative AI capabilities with external knowledge bases, empowering models to provide informed and enriched responses.

Week 3: Introduction to RAG

What is RAG?

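- Definition: Retrieval-Augmented Generation (RAG) combines a retrieval mechanism, which fetches relevant information from an external knowledge source, with a generative model, which uses that information to produce the response.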

- Why Use RAG?

- Overcome limitations of generative models relying solely on pretraining data, which may be outdated or incomplete.

- Dynamically adapt responses based on real-time or domain-specific data.

Key Concepts

- Knowledge Bases: Structured or unstructured repositories (e.g., FAQs, wikis, datasets) serving as the source of truth.

- Relevance Ranking: Ensuring retrieved data is contextually appropriate before passing it to the LLM.

Practical Step: Initial Integration

- Set Up a Simple RAG System:

- Choose a knowledge source (e.g., FAQ file, product catalog, or domain-specific dataset).

- Implement basic retrieval using tools like vector search (e.g., FAISS) or keyword search.

- Combine retrieval with an LLM using frameworks like LangChain or custom scripts.

- Evaluation:

- Test the system with queries and compare model responses with and without retrieval augmentation.

- Analyze improvements in factual accuracy, relevance, and depth.

- Practical Example:

- Build a chatbot using a company FAQ file.

- Retrieve the most relevant FAQ entry for a user query and combine it with a generative model to craft a detailed, context-aware response.
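The FAQ chatbot idea can be sketched without any external service. The example below assumes a tiny hard-coded FAQ and uses bag-of-words cosine similarity as a stand-in for keyword search; the final LLM call is left out, so the output is the augmented prompt you would send to a model. All FAQ entries and function names are hypothetical.

```python
import math
import re
from collections import Counter

FAQ = [  # hypothetical entries
    ("How do I reset my password?", "Use the 'Forgot password' link on the login page."),
    ("What is your refund policy?", "Refunds are available within 30 days of purchase."),
    ("How can I contact support?", "Email support or use the in-app chat."),
]


def bag_of_words(text):
    """Lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, faq=FAQ):
    """Return the FAQ entry whose question is most similar to the query."""
    q = bag_of_words(query)
    return max(faq, key=lambda qa: cosine(q, bag_of_words(qa[0])))


def build_prompt(query, faq=FAQ):
    """Combine the retrieved entry with the user query for the LLM."""
    question, answer = retrieve(query, faq)
    return (
        "Answer the user using only the context below.\n"
        f"Context: Q: {question} A: {answer}\n"
        f"User: {query}\nAssistant:"
    )


print(build_prompt("I forgot my password, how do I reset it?"))
```

Keyword overlap breaks down on paraphrases, which is the motivation for the embedding-based retrieval covered in Week 4.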

Also read: A Guide to Evaluate RAG Pipelines with LlamaIndex and TruLens

Week 4: Advanced Integration of RAG

Dynamic Data Retrieval

- Design a system to fetch real-time or context-specific data dynamically (e.g., querying APIs, searching databases, or interacting with web services).

- Learn techniques to prioritize retrieval speed and accuracy for seamless integration.

Optimizing the Retrieval Process

- Use similarity search with embeddings (e.g., Sentence Transformers, OpenAI embeddings) to find contextually related information.

- Implement scalable retrieval pipelines using tools like Pinecone, Weaviate, or Elasticsearch.

Pipeline Design

- Develop a workflow where the retrieval module filters and ranks results before passing them to the LLM.

- Introduce feedback loops to refine retrieval accuracy based on user interactions.
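One way to picture the filter-and-rank step with a feedback loop is the sketch below. It assumes the retriever returns similarity scores between 0 and 1 and that you store a net thumbs-up count per document; the threshold, weight, and the `Hit` type are illustrative choices rather than recommended values.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    doc_id: str
    score: float  # similarity from the retriever, assumed to be in [0, 1]


def filter_and_rank(hits, feedback=None, min_score=0.3, top_k=3, weight=0.1):
    """Drop weak hits, nudge scores by net user feedback, keep the top_k."""
    feedback = feedback or {}
    adjusted = [
        Hit(h.doc_id, h.score + weight * feedback.get(h.doc_id, 0))
        for h in hits
        if h.score >= min_score
    ]
    return sorted(adjusted, key=lambda h: h.score, reverse=True)[:top_k]


hits = [Hit("a", 0.82), Hit("b", 0.78), Hit("c", 0.10), Hit("d", 0.55)]
feedback = {"b": 1, "a": -1}  # thumbs-up minus thumbs-down per document
ranked = filter_and_rank(hits, feedback, top_k=2)
print([h.doc_id for h in ranked])
```

Only the documents that survive this step are placed into the LLM prompt.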

Practical Step: Building a Prototype App

Create a functional app combining retrieval and generative capabilities for a practical application.

- Steps:

- Set up a document database or API as the knowledge source.

- Implement retrieval using tools like FAISS for vector similarity search or BM25 for keyword-based search.

- Connect the retrieval system to an LLM via APIs (e.g., OpenAI API).

- Design a simple user interface for querying the system (e.g., web or command-line app).

- Generate responses by combining retrieved data with the LLM’s generative outputs.

- Examples:

- Customer Support System: Fetch product details or troubleshooting steps from a database and combine them with generative explanations.

- Research Assistant: Retrieve academic papers or summaries and use an LLM to produce easy-to-understand explanations or comparisons.
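For the keyword-based option mentioned in the steps, BM25 is compact enough to write by hand. The sketch below follows the common Okapi BM25 formula with a non-negative IDF variant; the defaults `k1=1.5` and `b=0.75` are typical values, and the documents are invented. In a real prototype you would likely use an existing search library instead.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


class BM25:
    def __init__(self, docs, k1=1.5, b=0.75):
        self.k1, self.b = k1, b
        self.docs = [Counter(tokenize(d)) for d in docs]
        self.lengths = [sum(c.values()) for c in self.docs]
        self.avg_len = sum(self.lengths) / len(self.docs)
        # Number of documents that contain each term.
        self.doc_freq = Counter(term for c in self.docs for term in c)

    def idf(self, term):
        n, total = self.doc_freq[term], len(self.docs)
        return math.log((total - n + 0.5) / (n + 0.5) + 1)

    def score(self, query, index):
        counts, length = self.docs[index], self.lengths[index]
        result = 0.0
        for term in tokenize(query):
            tf = counts[term]
            denom = tf + self.k1 * (1 - self.b + self.b * length / self.avg_len)
            result += self.idf(term) * tf * (self.k1 + 1) / denom
        return result

    def search(self, query, top_k=2):
        """Return the indices of the top_k highest-scoring documents."""
        order = sorted(range(len(self.docs)), key=lambda i: self.score(query, i), reverse=True)
        return order[:top_k]


docs = [
    "reset the router by holding the power button",
    "the warranty covers battery replacement for two years",
    "battery drains quickly after the latest firmware update",
]
bm25 = BM25(docs)
print(bm25.search("battery warranty"))
```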


Week 5-6 of GenAI Ops Roadmap: Deep Dive into AI Agents

Leverage foundational skills from prompt engineering and retrieval-augmented generation (RAG) to design and build AI agents capable of performing tasks autonomously. These weeks focus on integrating multiple capabilities to create intelligent, action-driven systems.

Week 5: Understanding AI Agents

What are AI Agents?

AI agents are systems that autonomously combine language comprehension, reasoning, and action execution to perform tasks. They rely on:

- Language Understanding: Accurately interpreting user inputs or commands.

- Knowledge Integration: Using retrieval systems (RAG) for domain-specific or real-time data.

- Decision-Making: Determining the best course of action through logic, multi-step reasoning, or rule-based frameworks.

- Task Automation: Executing actions like responding to queries, summarizing content, or triggering workflows.

Use Cases of AI Agents

- Customer Support Chatbots: Retrieve and present product details.

- Virtual Assistants: Handle scheduling, task management, or data analysis.

- Research Assistants: Query databases and summarize findings.

Integration with Prompts and RAG

- Combining Prompt Engineering with RAG:

- Use refined prompts to guide query interpretation.

- Enhance responses with retrieval from external sources.

- Maintain consistency using structured templates and stop sequences.

- Multi-Step Decision-Making:

- Apply chain-of-thought prompting to simulate logical reasoning (e.g., breaking a query into subtasks).

- Use iterative prompting for refining responses through feedback cycles.

- Dynamic Interactions:

- Enable agents to ask clarifying questions to resolve ambiguity.

- Incorporate retrieval pipelines to improve contextual understanding during multi-step exchanges.

Week 6: Building and Refining AI Agents

Practical Step: Building a Basic AI Agent Prototype

1. Define the Scope

- Domain Examples: Choose a focus area like customer support, academic research, or financial analysis.

- Tasks: Identify core activities such as data retrieval, summarization, query answering, or decision support.

- Agent Relevance:

- Use planning agents for multi-step workflows.

- Employ tool-using agents for integration with external resources or APIs.

2. Make Use of Specialized Agent Types

- Planning Agents:

- Role: Break tasks into smaller, actionable steps and sequence them logically.

- Use Case: Automating workflows in a task-heavy domain like project management.

- Tool-Using Agents:

- Role: Interact with external tools (e.g., databases, APIs, or calculators) to complete tasks beyond text generation.

- Use Case: Financial analysis using APIs for real-time market data.

- Reflection Agents:

- Role: Evaluate past responses and refine future outputs based on user feedback or internal performance metrics.

- Use Case: Continuous learning systems in customer support applications.

- Multi-Agent Systems:

- Role: Collaborate with other agents, each specializing in a particular task or domain.

- Use Case: One agent handles reasoning, while another performs data retrieval or validation.
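To see what a tool-using agent looks like in miniature, consider the sketch below. It assumes two hypothetical tools (a calculator and a price lookup backed by made-up numbers) and replaces the LLM's tool-selection step with simple pattern matching, so that the dispatch logic can run on its own.

```python
import operator
import re

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}
PRICES = {"AAPL": 190.0, "MSFT": 410.0}  # made-up values standing in for a market-data API


def calculator(a, op, b):
    return OPS[op](float(a), float(b))


def lookup_price(ticker):
    return PRICES[ticker.upper()]


TOOLS = {"calculator": calculator, "lookup_price": lookup_price}


def choose_tool(query):
    """Stand-in for the LLM's tool-selection step: returns (tool_name, args)."""
    math_match = re.search(r"(-?\d+(?:\.\d+)?)\s*([-+*/])\s*(-?\d+(?:\.\d+)?)", query)
    if math_match:
        return "calculator", math_match.groups()
    ticker_match = re.search(r"price of (\w+)", query, re.IGNORECASE)
    if ticker_match:
        return "lookup_price", (ticker_match.group(1),)
    return None, ()


def run_agent(query):
    name, args = choose_tool(query)
    if name is None:
        return "No tool needed; answer directly with the LLM."
    return f"{name} -> {TOOLS[name](*args)}"


print(run_agent("What is 12 * 7?"))
print(run_agent("What is the price of msft?"))
```

In a real agent, the model would emit the tool name and arguments, and the surrounding code would only perform the lookup and call.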

3. Integrate Agent Patterns in the Framework

- Frameworks:

- Use tools like LangChain, Haystack, or OpenAI API for creating modular agent systems.

- Implementation of Patterns:

- Embed reflection loops for iterative improvement.

- Develop planning capabilities for dynamic task sequencing.

4. Advanced Prompt Design

- Align prompts with agent specialization:

- For Planning: “Generate a step-by-step plan to achieve the following goal…”

- For Tool Use: “Retrieve the required data from (API) and process it for user queries.”

- For Reflection: “Analyze the previous response and improve accuracy or clarity.”

5. Enable Retrieval and Multi-Step Reasoning

- Combine knowledge retrieval with chain-of-thought reasoning:

- Enable embedding-based retrieval for relevant data access.

- Use reasoning to guide agents through iterative problem-solving.

6. Multi-Agent Collaboration for Complex Scenarios

- Deploy multiple agents with defined roles:

- Planner Agent: Breaks the query into sub-tasks.

- Retriever Agent: Fetches external data.

- Reasoner Agent: Synthesizes data and generates an answer.

- Validator Agent: Cross-checks the final response for accuracy.
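The four roles above can be wired together as plain functions before any LLM is involved. This sketch assumes a tiny in-memory knowledge store and a naive planner that splits on the word "and"; each function marks the place where an LLM-backed agent would sit in a real system.

```python
KNOWLEDGE = {  # hypothetical knowledge store
    "capital of france": "Paris",
    "capital of japan": "Tokyo",
}


def planner(query):
    """Break a compound query into sub-tasks."""
    return [part.strip(" ?").lower() for part in query.split(" and ") if part.strip()]


def retriever(subtask):
    """Fetch a fact for one sub-task, or None if nothing is found."""
    return KNOWLEDGE.get(subtask)


def reasoner(subtasks, facts):
    """Synthesize the retrieved facts into one answer."""
    return "; ".join(f"{t}: {f}" for t, f in zip(subtasks, facts) if f is not None)


def validator(facts):
    """Accept the answer only if every sub-task was backed by a fact."""
    return all(f is not None for f in facts)


def run(query):
    subtasks = planner(query)
    facts = [retriever(t) for t in subtasks]
    return {"answer": reasoner(subtasks, facts), "validated": validator(facts)}


print(run("Capital of France and capital of Japan?"))
```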

7. Develop a Scalable Interface

- Build interfaces that support multi-agent outputs dynamically:

- Chatbots for user interaction.

- Dashboards for visualizing multi-agent workflows and outcomes.

Testing and Refinement

- Evaluate Performance: Test the agent across scenarios and compare query interpretation, data retrieval, and response generation.

- Iterate: Improve response accuracy, retrieval relevance, and interaction flow by updating prompt designs and retrieval pipelines.

Example Use Cases

- Customer Query Assistant:

- Retrieves details about orders, product specifications, or FAQs.

- Provides step-by-step troubleshooting guidance.

- Financial Data Analyst:

- Queries datasets for summaries or insights.

- Generates reports on specific metrics or trends.

- Research Assistant:

- Searches academic papers for topics.

- Summarizes findings with actionable insights.


Week 7 of GenAI Ops Roadmap: Introduction to LLMOps

Concepts to Learn

LLMOps (Large Language Model Operations) is a critical discipline for managing the lifecycle of large language models (LLMs), ensuring their effectiveness, reliability, and scalability in real-world applications. This week focuses on key concepts, challenges, and evaluation metrics, laying the groundwork for implementing robust LLMOps practices.

- Importance of LLMOps

- Ensures that deployed LLMs remain effective and reliable over time.

- Provides mechanisms to monitor, fine-tune, and adapt models in response to changing data and user needs.

- Integrates principles from MLOps (Machine Learning Operations) and ModelOps, tailored for the unique challenges of LLMs.

- Challenges in Managing LLMs

- Model Drift:

- Occurs when the model’s predictions become less accurate over time due to shifts in data distribution.

- Requires constant monitoring and retraining to maintain performance.

- Data Privacy:

- Ensures sensitive information is handled securely, especially when dealing with user-generated content or proprietary datasets.

- Involves techniques like differential privacy and federated learning.

- Performance Monitoring:

- Involves tracking latency, throughput, and accuracy metrics to ensure the system meets user expectations.

- Cost Management:

- Balancing computational costs with performance optimization, especially for inference at scale.
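One simple way to quantify a shift in data distribution is the Population Stability Index (PSI), a technique borrowed from classical model monitoring rather than anything LLM-specific. The sketch below assumes you track a numeric signal per request (here, hypothetical prompt lengths) and compares a recent window against a baseline. The 0.25 threshold is a commonly cited rule of thumb, not a fixed standard.

```python
import math


def psi(expected, actual, bins=5, eps=1e-6):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid a zero bin width

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps keeps empty bins from producing log(0)
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline_lengths = list(range(100))                 # hypothetical prompt lengths
recent_lengths = [v + 40 for v in baseline_lengths]  # users now write longer prompts
drift = psi(baseline_lengths, recent_lengths)
print(f"PSI = {drift:.2f}", "-> investigate" if drift > 0.25 else "-> stable")
```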


Tools & Technologies

- Monitoring and Evaluation

- Arize AI: Tracks LLM performance, including model drift, bias, and predictions in production.

- DeepEval (https://github.com/confident-ai/deepeval): A framework for evaluating the quality of responses from LLMs based on human and automated scoring.

- RAGAS: Evaluates RAG pipelines with metrics such as faithfulness, answer relevancy, context precision, and context recall.

- Retrieval and Optimization

- FAISS (https://ai.meta.com/tools/faiss/): A library for efficient similarity search and clustering of dense vectors, critical for embedding-based retrieval.

- Opik: An open-source platform for tracing and evaluating LLM applications, which helps when iterating on prompts and improving response quality for specific use cases.

- Experimentation and Deployment

- Weights & Biases: Enables tracking of experiments, data, and model metrics with detailed dashboards.

- LangChain: Simplifies the integration of LLMs with RAG workflows, chaining prompts, and external tool usage.

- Advanced LLMOps Platforms

- MLOps Suites: Comprehensive platforms like Seldon and MLflow for managing LLM lifecycles.

- ModelOps Tools: Tools like Cortex and BentoML for scalable model deployment across diverse environments.

Evaluation Metrics for LLMs and Retrieval-Augmented Generation (RAG) Systems

To measure the effectiveness of LLMs and RAG systems, you need to focus on both language generation metrics and retrieval-specific metrics:

- Language Generation Metrics

- Perplexity: Measures the uncertainty in the model’s predictions. Lower perplexity indicates better language modeling.

- BLEU (Bilingual Evaluation Understudy): Evaluates how closely generated text matches reference text. Commonly used for translation tasks.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): Compares overlap between generated and reference text, widely used for summarization.

- METEOR: Focuses on semantic alignment between generated and reference text, with higher sensitivity to synonyms and word order.

- Retrieval-Specific Metrics

- Precision@k: Measures the proportion of the top-k retrieved documents that are relevant.

- Recall@k: Determines how many of the relevant documents were retrieved out of all possible relevant documents.

- Mean Reciprocal Rank (MRR): Evaluates the rank of the first relevant document in a list of retrieved documents.

- Normalized Discounted Cumulative Gain (NDCG): Accounts for the relevance and ranking position of retrieved documents.

- Human Evaluation Metrics

- Relevance: How well the generated response aligns with the query or context.

- Fluency: Measures grammatical and linguistic correctness.

- Helpfulness: Determines whether the response adds value or resolves the user’s query effectively.

- Safety: Ensures generated content avoids harmful, biased, or inappropriate language.
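Several of the automated metrics above are short enough to compute by hand, which is a good way to build intuition before reaching for an evaluation library. The sketch below implements Precision@k, Recall@k, MRR, NDCG@k, and perplexity using their standard definitions; the document IDs and relevance grades are made up.

```python
import math


def precision_at_k(retrieved, relevant, k):
    """Fraction of the top-k results that are relevant."""
    return sum(1 for d in retrieved[:k] if d in relevant) / k


def recall_at_k(retrieved, relevant, k):
    """Fraction of all relevant documents found in the top-k results."""
    return sum(1 for d in retrieved[:k] if d in relevant) / len(relevant)


def reciprocal_rank(retrieved, relevant):
    """1 / rank of the first relevant result, or 0 if none is retrieved."""
    for rank, d in enumerate(retrieved, start=1):
        if d in relevant:
            return 1 / rank
    return 0.0


def mean_reciprocal_rank(runs):
    """Average reciprocal rank over (retrieved, relevant) pairs."""
    return sum(reciprocal_rank(r, rel) for r, rel in runs) / len(runs)


def ndcg_at_k(retrieved, gains, k):
    """DCG of the ranking divided by the DCG of the ideal ranking."""
    def dcg(values):
        return sum(g / math.log2(i + 2) for i, g in enumerate(values))

    actual = dcg([gains.get(d, 0) for d in retrieved[:k]])
    ideal = dcg(sorted(gains.values(), reverse=True)[:k])
    return actual / ideal if ideal else 0.0


def perplexity(token_probs):
    """exp of the average negative log-probability assigned to each token."""
    return math.exp(-sum(math.log(p) for p in token_probs) / len(token_probs))


retrieved = ["d3", "d1", "d7", "d2"]
relevant = {"d1", "d2", "d5"}
gains = {"d1": 3, "d2": 2, "d5": 1}  # graded relevance, higher is better

print(precision_at_k(retrieved, relevant, 3), recall_at_k(retrieved, relevant, 3))
print(reciprocal_rank(retrieved, relevant), ndcg_at_k(retrieved, gains, 4))
```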

Week 8 of GenAI Ops Roadmap: Deployment and Versioning

Concepts to Learn:

- Focus on how to deploy LLMs in production environments.

- Understand version control and model governance practices.

Tools & Technologies:

- vLLM: A high-throughput engine for serving large language models such as Llama. vLLM supports techniques such as FP8 quantization and pipeline parallelism, which help deploy very large models while managing GPU memory efficiently.

- SageMaker (https://aws.amazon.com/sagemaker/): Amazon SageMaker offers a fully managed environment for training, fine-tuning, and deploying machine learning models, including LLMs. It provides scalability, versioning, and integration with a range of AWS services, making it a popular choice for deploying models in production environments.

- llama.cpp: A high-performance C/C++ library for running Llama and other LLMs on CPUs and GPUs. It is known for its efficiency, particularly with quantized models, and is widely used to run models on hardware with limited computational resources.

- MLflow: A tool for managing the lifecycle of machine learning models, MLflow helps with versioning, deployment, and monitoring of LLMs in production. It integrates well with frameworks like Hugging Face Transformers and LangChain, making it a robust solution for model governance.

- Kubeflow: Kubeflow allows for the orchestration of machine learning workflows, including the deployment and monitoring of models in Kubernetes environments. It’s especially useful for scaling and managing models that are part of a larger ML pipeline.

Week 9 of GenAI Ops Roadmap: Monitoring and Observability

Concepts to Learn:

- LLM Response Monitoring: Understanding how LLMs perform in real-world applications is essential. Monitoring LLM responses involves tracking:

- Response Quality: Using metrics like accuracy, relevance, and latency.

- Model Drift: Evaluating if the model’s predictions change over time or diverge from expected outputs.

- User Feedback: Collecting feedback from users to continuously improve model performance.

- Retrieval Monitoring: Since many LLM systems rely on retrieval-augmented generation (RAG) techniques, it’s crucial to:

- Track Retrieval Effectiveness: Measure the relevance and accuracy of retrieved information.

- Evaluate Latency: Ensure that the retrieval systems (e.g., FAISS, Elasticsearch) are optimized for fast responses.

- Monitor Data Consistency: Ensure that the knowledge base is up-to-date and relevant to the queries being asked.

- Agent Monitoring: For systems with agents (whether they’re planning agents, tool-using agents, or multi-agent systems), monitoring is especially important:

- Task Completion Rate: Track how often agents successfully complete their tasks.

- Agent Coordination: Monitor how well agents work together, especially in multi-agent systems.

- Reflection and Feedback Loops: Ensure agents can learn from previous tasks and improve future performance.

- Real-Time Inference Monitoring: Real-time inference is critical in production environments. Monitoring these systems can help prevent issues before they impact users. This involves observing inference speed, model response time, and ensuring high availability.

- Experiment Tracking and A/B Testing: A/B testing allows you to compare different versions of your model to see which performs better in real-world scenarios. Monitoring helps in tracking:

- Conversion Rates: For example, which model version has a higher user engagement.

- Statistical Significance: Ensuring that your tests are meaningful and reliable.
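To check whether a difference between two model versions is statistically meaningful, a two-proportion z-test is one common choice. The sketch below assumes each request yields a binary outcome (for example, whether the user accepted the answer) and uses made-up counts. Other tests may suit your metric better, so treat this as a starting point.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for the difference in success rates."""
    p_a, p_b = success_a / total_a, success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value


# Hypothetical experiment: version A vs. version B, 1,000 requests each.
z, p_value = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the gap between versions is unlikely to be noise, although it says nothing about whether the improvement is large enough to matter.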

Tools & Technologies:

- Prometheus & Datadog: These are widely used for infrastructure monitoring. Prometheus tracks system metrics, while Datadog can offer end-to-end observability across the application, including response times, error rates, and service health.

- Arize AI: This tool specializes in AI observability, focusing on tracking performance metrics for machine learning models, including LLMs. It helps detect model drift, monitor relevance of generated outputs, and ensure models are producing accurate results over time.

- MLflow: MLflow offers model tracking, versioning, and performance monitoring. It integrates with models deployed in production, offering a centralized location for logging experiments, performance, and metadata, making it useful for continuous monitoring in the deployment pipeline.

- vLLM: As a serving engine, vLLM exposes operational metrics such as request latency and throughput (for example, through a Prometheus-compatible endpoint), which helps track how well large models scale under low-latency requirements. It does not detect model drift on its own, so pair it with an observability tool for that.

- SageMaker Model Monitor (https://aws.amazon.com/sagemaker/): AWS SageMaker offers built-in model monitoring tools to track data and model quality over time. It can alert users when performance degrades or when the data distribution changes, which is especially valuable for keeping models aligned with real-world data.

- LangChain: As a framework for building RAG-based systems and LLM-powered agents, LangChain provides callback hooks and integrates with its companion platform LangSmith for tracing, which help track agent performance and check that the retrieval pipeline and LLM generation are effective.

- RAGAS (Retrieval-Augmented Generation Assessment): RAGAS focuses on evaluating the link between retrieval and generation in RAG-based systems. It helps check the relevance of retrieved information and the accuracy of responses based on the retrieved data.

Week 10 of GenAI Ops Roadmap: Automating Retraining and Scaling

Concepts to Learn:

- Automated Retraining: Learn how to set up pipelines that continuously update LLMs with new data to maintain performance.

- Scaling: Understand horizontal (adding more nodes) and vertical (increasing resources of a single machine) scaling techniques in production environments to manage large models efficiently.
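Horizontal scaling usually comes down to a simple rule for how many replicas to run. The sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current replicas × current metric / target metric)) and clamps the result to a range. The metric, targets, and limits are made-up examples, and the real autoscaler adds tolerances and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule, clamped to [min_replicas, max_replicas]."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas each handling 90 requests/s against a target of 60 requests/s.
print(desired_replicas(4, current_metric=90, target_metric=60))
```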

Tools & Technologies:

- Apache Airflow: Automates workflows for model retraining.

- Kubernetes & Terraform: Manage infrastructure, enabling scalable deployments and horizontal scaling.

- Pipeline Parallelism: Split models across multiple stages or workers to optimize memory usage and compute efficiency. Techniques like GPipe and TeraPipe improve training scalability.

Week 11 of GenAI Ops Roadmap: Security and Ethics in LLMOps

Concepts to Learn:

- Understand the ethical considerations when deploying LLMs, such as bias, fairness, and safety.

- Study security practices in handling model data, including user privacy and compliance with regulations like GDPR.
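A small, practical privacy measure is to redact obvious personal data before prompts and responses are written to logs. The sketch below assumes two simple regular expressions for email addresses and phone numbers. They are deliberately rough and will miss many formats, so this illustrates the idea rather than providing a compliance solution.

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")  # rough pattern, not exhaustive


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(redact("Contact jane.doe@example.com or +1 (555) 123-4567."))
```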

Tools & Technologies:

- Explore tools for secure model deployment and privacy-preserving techniques.

- Study ethical frameworks for responsible AI development.

Week 12 of GenAI Ops Roadmap: Continuous Improvement and Feedback Loops

Concepts to Learn:

- Building Feedback Loops: Learn how to implement mechanisms to track and improve LLMs’ performance over time by capturing user feedback and real-world interactions.

- Model Performance Tracking: Study strategies for evaluating models over time, addressing issues like model drift, and refining the model based on continuous input.
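A feedback loop can start as a very small aggregation over logged interactions. The sketch below assumes each event records a prompt or model version and a thumbs-up flag, then flags versions whose satisfaction rate falls noticeably below a baseline. The event format and the 5-point tolerance are illustrative assumptions.

```python
from collections import defaultdict


def satisfaction_by_version(events):
    """Share of thumbs-up feedback per version."""
    totals = defaultdict(lambda: [0, 0])  # version -> [positive, total]
    for version, thumbs_up in events:
        totals[version][0] += int(thumbs_up)
        totals[version][1] += 1
    return {v: pos / n for v, (pos, n) in totals.items()}


def flag_regressions(rates, baseline, tolerance=0.05):
    """Versions whose rate is below the baseline by more than the tolerance."""
    return [v for v, r in rates.items() if v != baseline and r < rates[baseline] - tolerance]


events = [
    ("v1", True), ("v1", True), ("v1", False),
    ("v2", True), ("v2", False), ("v2", False),
]
rates = satisfaction_by_version(events)
print(rates, flag_regressions(rates, baseline="v1"))
```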

Tools & Technologies:

- Model Drift Detection: Use tools like Arize AI and Verta to detect model drift in real-time, ensuring that models adapt to changing patterns.

- MLflow and Kubeflow: These tools help in managing the model lifecycle, enabling continuous monitoring, versioning, and feedback integration. Kubeflow Pipelines can be used to automate feedback loops, while MLflow allows for experiment tracking and model management.

- Other Tools: Seldon and Weights & Biases offer advanced tracking and real-time monitoring features for continuous improvement, ensuring that LLMs remain aligned with business needs and real-world changes.

Week 13 of GenAI Ops Roadmap: Introduction to AgentOps

Concepts to Learn:

- Understand the principles behind AgentOps (https://docs.agentops.ai/v1/quickstart), including the management and orchestration of AI agents.

- Explore the role of agents in automating tasks, decision-making, and enhancing workflows in complex environments.

Tools & Technologies:

- Introduction to frameworks like LangChain and Haystack for building agents.

- Learn about agent orchestration using the OpenAI API and chaining techniques.

Week 14 of GenAI Ops Roadmap: Building Agents

Concepts to Learn:

- Study how to design intelligent agents capable of interacting with data sources and APIs.

- Explore the design patterns for autonomous agents and the management of their lifecycle.


Week 15 of GenAI Ops Roadmap: Advanced Agent Orchestration

Concepts to Learn:

- Dive deeper into multi-agent systems, where agents collaborate to solve tasks.

- Understand agent communication protocols and orchestration techniques.

Tools & Technologies:

- Study tools like Ray for large-scale agent coordination.

- Learn about OpenAI’s agent-building APIs for advanced automation.

Week 16 of GenAI Ops Roadmap: Performance Monitoring and Optimization

Concepts to Learn:

- Explore performance monitoring strategies for agent systems in production.

- Understand agent logging, failure handling, and optimization.
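Logging and failure handling for agents often start with a retry wrapper around tool calls. The sketch below retries a failing call with exponential backoff and logs each failed attempt. The `flaky_tool` function is a made-up stand-in for an unreliable API, and the `sleep` parameter is injectable only so that the example runs without waiting.

```python
import logging
import time

logger = logging.getLogger("agent")


def call_with_retries(tool, *args, retries=3, base_delay=0.5, sleep=time.sleep):
    """Call tool(*args), retrying with exponential backoff on any exception."""
    for attempt in range(1, retries + 1):
        try:
            return tool(*args)
        except Exception as exc:
            logger.warning("attempt %d/%d failed: %s", attempt, retries, exc)
            if attempt == retries:
                raise  # give up and surface the last error
            sleep(base_delay * 2 ** (attempt - 1))


calls = {"count": 0}


def flaky_tool(x):
    """Hypothetical tool that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("upstream timeout")
    return x * 2


delays = []
result = call_with_retries(flaky_tool, 21, sleep=delays.append)
print(result, delays)
```

In production you would typically retry only on errors known to be transient and add an upper bound on the total wait time.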

Tools & Technologies:

- Study frameworks like Datadog and Prometheus for tracking agent performance.

- Learn about optimization strategies using ModelOps principles for efficient agent operation.

Week 17 of GenAI Ops Roadmap: Security and Privacy in AgentOps

Concepts to Learn:

- Understand the security and privacy challenges specific to autonomous agents.

- Study techniques for securing agent communications and ensuring privacy during operations.

Tools & Technologies:

- Explore encryption tools and access controls for agent operations.

- Learn about API security practices for agents interacting with sensitive data.
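Access control for agents can be as simple as an allowlist that is checked before every tool call. The sketch below assumes two hypothetical agent roles and tool names; a real deployment would back this with your identity and secrets infrastructure rather than an in-memory dictionary.

```python
ROLE_PERMISSIONS = {  # hypothetical roles and tool names
    "support_agent": {"search_faq", "read_order"},
    "billing_agent": {"read_order", "issue_refund"},
}


class ToolAccessError(PermissionError):
    """Raised when an agent tries to call a tool it is not allowed to use."""


def authorize(role, tool_name):
    if tool_name not in ROLE_PERMISSIONS.get(role, set()):
        raise ToolAccessError(f"{role!r} may not call {tool_name!r}")


def guarded_call(role, tool_name, tools, *args):
    """Check the allowlist first, then run the tool."""
    authorize(role, tool_name)
    return tools[tool_name](*args)


tools = {
    "read_order": lambda order_id: {"id": order_id, "status": "shipped"},
    "issue_refund": lambda order_id: f"refunded {order_id}",
}

print(guarded_call("support_agent", "read_order", tools, "A1"))
```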

Week 18 of GenAI Ops Roadmap: Ethical Considerations in AgentOps

Concepts to Learn:

- Study the ethical implications of using agents in decision-making.

- Explore bias mitigation and fairness in agent operations.

Tools & Technologies:

- Use frameworks like Fairness Indicators for evaluating agent outputs.

- Learn about governance tools for responsible AI deployment in agent systems.

Week 19 of GenAI Ops Roadmap: Scaling and Continuous Learning for Agents

Concepts to Learn:

- Learn about scaling agents for large-scale operations.

- Study continuous learning mechanisms, where agents adapt to changing environments.


Week 20 of GenAI Ops Roadmap: Capstone Project

The final week is dedicated to applying everything you’ve learned in a comprehensive project. This capstone project should incorporate LLMOps, AgentOps, and advanced topics like multi-agent systems and security.

Create a Real-World Application

This project will allow you to combine various concepts from the course to design and build a complete system. The goal is to solve a real-world problem while integrating operational practices, AI agents, and LLMs.

Practical Step: Capstone Project

- Task: Develop a project that integrates multiple concepts, such as creating a personalized assistant, automating a business workflow, or designing an AI-powered recommendation system.

- Scenario: A personalized assistant could use LLMs to understand user preferences and agents to manage tasks, such as scheduling, reminders, and automated recommendations. This system would integrate external tools like calendar APIs, CRM systems, and external databases.

- Skills: System design, integration of multiple agents, external APIs, real-world problem-solving, and project management.


Conclusion

You’re now ready to explore the exciting world of AI agents with this GenAI Ops roadmap. With the skills you’ve learned, you can design smarter systems, automate tasks, and solve real-world problems. Keep practising and experimenting as you build your expertise.

Remember, learning is a journey. Each step brings you closer to achieving something great. Best of luck as you grow and create amazing AI solutions!