Multimodal large language models (MLLMs) are advancing rapidly, enabling machines to interpret and reason about textual and visual data together. These models have transformative applications in image analysis, visual question answering, and multimodal reasoning. By bridging the gap between vision and language, they play a crucial role in helping artificial intelligence understand and interact with the world more comprehensively.

Despite their promise, these systems face significant challenges. A primary limitation is their reliance on natural language supervision during training, which often yields suboptimal visual representations. Scaling up dataset size and compute has brought modest gains, but these models still need optimization aimed specifically at visual understanding if they are to perform well on vision-centric tasks. Current methods also struggle to balance computational efficiency against improved performance.

Existing techniques for training MLLMs typically use a visual encoder to extract features from images and feed them into the language model alongside natural language data. Some methods employ multiple visual encoders or cross-attention mechanisms to improve comprehension. However, these approaches require significantly more compute and data, which limits their scalability and practicality. This inefficiency underscores the need for a more efficient way to optimize MLLMs for visual understanding.

Researchers at SHI Labs at Georgia Tech and Microsoft Research introduced a novel approach called OLA-VLM to address these challenges. The method aims to improve MLLMs by distilling auxiliary visual information into the hidden layers of the language model during pre-training. Instead of making the visual encoder more complex, OLA-VLM uses embedding optimization to improve the alignment of visual and textual data. Because this optimization targets the language model's intermediate layers, it improves visual reasoning without adding computational overhead at inference.

OLA-VLM adds embedding loss functions that optimize the language model's representations against features from specialized visual encoders trained for image segmentation, depth estimation, and image generation. Using a predictive embedding optimization technique, these distilled features are mapped to specific layers of the language model. In addition, task-specific special tokens are added to the input sequence so that the model can incorporate the auxiliary visual information. This design integrates visual features into the MLLM's representations without disrupting the primary training objective of next-token prediction. The result is a model that learns more robust, vision-focused representations.
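The plain-Python sketch below illustrates the general shape of such an objective under loudly stated assumptions: the token names (`<depth>`, `<seg>`, `<gen>`), the layer chosen for each task, the linear projection heads, the mean-squared-error embedding loss, and the loss weights are all hypothetical placeholders, not OLA-VLM's actual configuration or code. It shows task tokens spliced between the visual and text tokens, and a weighted auxiliary embedding loss, read from the hidden state at each task token, added to the next-token prediction loss.

```python
import math

# Hypothetical special tokens, one per auxiliary task (illustrative names only).
TASK_TOKENS = {"depth": "<depth>", "seg": "<seg>", "gen": "<gen>"}


def build_sequence(image_tokens, text_tokens, tasks=("depth", "seg", "gen")):
    """Splice task-specific special tokens between the visual and text tokens."""
    return list(image_tokens) + [TASK_TOKENS[t] for t in tasks] + list(text_tokens)


def cross_entropy(logits, target):
    """Next-token loss for one position: -log softmax(logits)[target]."""
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[target]


def project(weight, x):
    """Hypothetical linear head mapping an LLM hidden state to a target-encoder feature."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight]


def mse(pred, target):
    """Mean squared error, used here only as a simple stand-in embedding loss."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def total_loss(logits, next_tokens, hidden_states, seq, heads, target_feats, weights):
    """Next-token prediction loss plus weighted auxiliary embedding losses.

    hidden_states: hidden_states[layer][position] -> hidden-state vector
    heads:         {task: (layer_index, projection weight matrix)}
    target_feats:  {task: feature vector from the frozen specialized encoder}
    weights:       {task: loss weight}
    """
    ntp = sum(cross_entropy(l, t) for l, t in zip(logits, next_tokens)) / len(next_tokens)
    aux = 0.0
    for task, (layer, weight) in heads.items():
        pos = seq.index(TASK_TOKENS[task])  # read the hidden state at the task token
        pred = project(weight, hidden_states[layer][pos])
        aux += weights[task] * mse(pred, target_feats[task])
    return ntp + aux


# Toy usage: two image tokens, a short question, and a single depth head on layer 1.
seq = build_sequence(["<img0>", "<img1>"], ["Which", "is", "closer?"])
hidden_states = [[[0.0, 0.0] for _ in seq] for _ in range(2)]
hidden_states[1][seq.index("<depth>")] = [0.5, -0.5]
loss = total_loss(
    logits=[[0.0, 0.0]],
    next_tokens=[0],
    hidden_states=hidden_states,
    seq=seq,
    heads={"depth": (1, [[1.0, 0.0], [0.0, 1.0]])},
    target_feats={"depth": [1.5, -0.5]},
    weights={"depth": 0.5},
)
```

In this sketch the projection heads feed only the training loss, so they could be dropped after training. A real implementation would work on batched tensors in a deep-learning framework and might use a different distance or contrastive objective.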

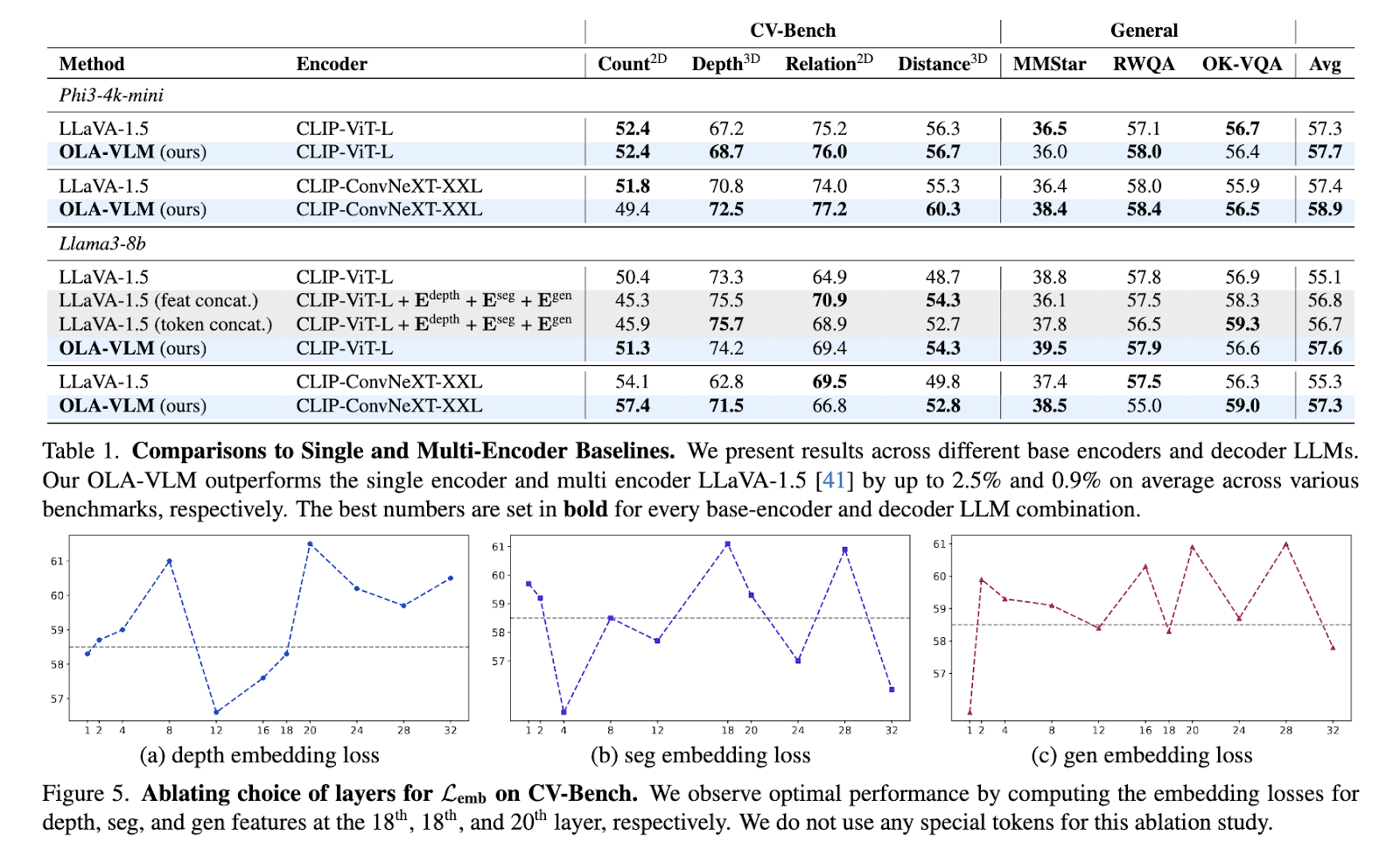

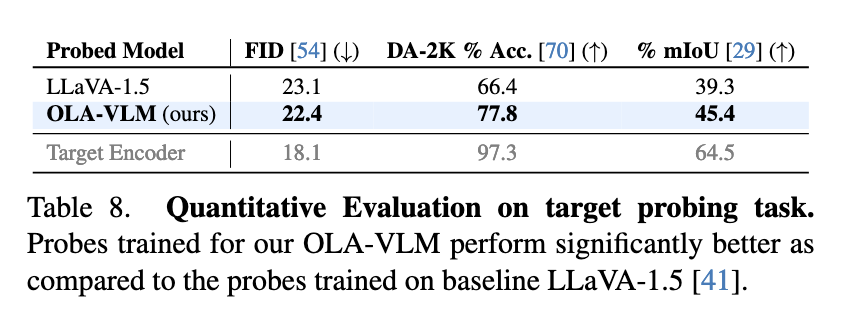

OLA-VLM was tested on several benchmarks and showed substantial improvements over existing single- and multi-encoder models. On CV-Bench, a vision-centric benchmark suite, OLA-VLM outperformed the LLaVA-1.5 baseline by up to 8.7% on depth estimation tasks, reaching an accuracy of 77.8%. For segmentation tasks, it achieved a mean Intersection over Union (mIoU) of 45.4%, a significant improvement over the baseline's 39.3%. The model also delivered consistent gains on 2D and 3D vision tasks, with average improvements of up to 2.5% on benchmarks such as distance and relation reasoning. OLA-VLM achieved these results using a single visual encoder at inference time, making it considerably more efficient than multi-encoder systems.
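For readers unfamiliar with the segmentation metric, the minimal sketch below computes mean Intersection over Union in its standard form: for each class, the overlap between predicted and ground-truth pixels divided by their union, averaged over classes. The flat label lists and the choice to skip classes absent from both maps are illustrative assumptions; the article does not describe the exact evaluation protocol behind the 45.4% figure. The last lines only restate the reported numbers as an absolute and a relative gain.

```python
def mean_iou(pred, truth, num_classes):
    """Mean IoU over classes that occur in either the prediction or the ground truth."""
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, truth) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, truth) if p == c or t == c)
        if union:  # skip classes absent from both label maps
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy 4-pixel example: class 0 has IoU 1/2, class 1 has IoU 2/3, so mIoU = 7/12.
toy = mean_iou(pred=[0, 0, 1, 1], truth=[0, 1, 1, 1], num_classes=2)

# Segmentation scores reported in the article (mIoU, in percent).
ola_vlm, baseline = 45.4, 39.3
gain_points = ola_vlm - baseline        # absolute gain in mIoU points
relative_gain = gain_points / baseline  # gain relative to the baseline score
print(f"{gain_points:.1f} points, {relative_gain:.1%} relative")  # 6.1 points, 15.5% relative
```

The 8.7% depth figure is deliberately left out: the article does not say whether it is an absolute or a relative gain, so the baseline accuracy cannot be recovered from it.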

To further validate its effectiveness, the researchers analyzed the representations learned by OLA-VLM. Probing experiments showed better visual alignment of the features in its intermediate layers, and this alignment translated into better downstream performance across tasks. For example, the researchers noted that adding task-specific special tokens during training helped optimize features for depth, segmentation, and image generation. These results underline the efficiency of the predictive embedding optimization approach and its ability to balance high-quality visual understanding with computational efficiency.
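One common way to quantify layer-wise alignment in a probing study is to compare a probe's output at each layer with the target encoder's features, for example by mean cosine similarity. The sketch below assumes the probes have already been trained and that their outputs are available per layer; the function names, the cosine metric, and the layer indices are illustrative assumptions, not the researchers' exact probing setup.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def layer_alignment(probe_outputs, targets):
    """Mean cosine similarity per layer between probe outputs and target features.

    probe_outputs: {layer_index: [probe output vector for each sample]}
    targets:       [target-encoder feature vector for each sample]
    """
    return {
        layer: sum(cosine(p, t) for p, t in zip(preds, targets)) / len(targets)
        for layer, preds in probe_outputs.items()
    }


# Toy data: the layer-8 probe matches the targets exactly, the layer-16 probe is orthogonal.
targets = [[1.0, 0.0], [0.0, 1.0]]
scores = layer_alignment({8: [[1.0, 0.0], [0.0, 1.0]], 16: [[0.0, 1.0], [1.0, 0.0]]}, targets)
best_layer = max(scores, key=scores.get)
```

In a comparison like the one described, the same scores would be computed layer by layer for both OLA-VLM and the baseline, with higher scores indicating better-aligned intermediate features.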

OLA-VLM sets a new standard for integrating visual information into MLLMs by optimizing embeddings during pre-training. The research addresses a gap in current training methods by introducing a vision-centric perspective to improve the quality of visual representations. The approach improves performance on vision and language tasks while using fewer computational resources than existing methods. OLA-VLM shows how targeted optimization during pre-training can substantially improve multimodal model performance.

In conclusion, the research by SHI Labs and Microsoft Research marks a groundbreaking advance in multimodal AI. By optimizing visual representations within MLLMs, OLA-VLM closes a critical gap in performance and efficiency. The method demonstrates how embedding optimization can effectively address challenges in vision-language alignment, paving the way for more robust and scalable multimodal systems.

Check out the Paper and GitHub page. All credit for this research goes to the researchers of this project.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
