Generative AI has often been criticized for its inability to reason effectively, particularly in scenarios that require precise, deterministic results. Next-token prediction alone struggles when only one answer is acceptable. For example, an essay can take a thousand forms and still be acceptable, but solving a quadratic equation must produce one specific final answer. This type of problem led MarcoPolo, a team within Alibaba's artificial intelligence division, to develop Marco-o1, a large language model (LLM) built for complex reasoning tasks. The model targets domains such as mathematics, physics, coding, and multilingual applications, covering both problems with standard answers and open-ended challenges.

Learning objectives

- The concept and importance of large reasoning models (LRM).

- The main technological innovations of Marco-o1 and how they distinguish it.

- Benchmarks and results that highlight its advanced capabilities.

- Real-world applications, particularly in multilingual translation.

- Information on transparency, challenges and future plans for Marco-o1.

This article was published as part of the Data Science Blogathon.

Main innovations behind Marco-o1

Marco-o1 distinguishes itself from other models by integrating a combination of advanced techniques to optimize reasoning, decision making and accuracy. These are some of the things that traditional LLMs fail to do.

A well-known example is counting how many times the letter "r" appears in the word "strawberry", a task that many LLMs get wrong.

Chain-of-Thought (CoT) fine-tuning

This approach allows the model to reason step by step, mimicking how humans solve complex problems. Fine-tuning on open-source CoT datasets and Alibaba's proprietary synthetic datasets has strengthened Marco-o1's ability to tackle complex tasks.

Monte Carlo Tree Search (MCTS)

This method allows the model to explore multiple paths of reasoning, from broad strategies to granular mini-steps (for example, generating 32 or 64 tokens at a time). MCTS expands the solution space, enabling more robust decision making.
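To make the search idea concrete, here is a minimal, generic MCTS sketch in Python. It is not Marco-o1's implementation: the toy task (pick three numbers that sum to 9), the `actions`, `is_terminal`, and `reward` functions, and the UCB1 selection rule are all assumptions chosen for illustration. In a reasoning model, each "action" would instead be a candidate reasoning step or mini-step, and the reward would come from some score of the model's output.

```python
import math
import random


class Node:
    """One node in the search tree; `state` is the tuple of steps chosen so far."""

    def __init__(self, state, parent=None):
        self.state = state
        self.parent = parent
        self.children = []
        self.visits = 0
        self.value = 0.0  # sum of rewards backed up through this node


def ucb1(node, c=1.4):
    """Standard UCB1 score: average reward plus an exploration bonus."""
    if node.visits == 0:
        return float("inf")  # always try unvisited children first
    exploit = node.value / node.visits
    explore = c * math.sqrt(math.log(node.parent.visits) / node.visits)
    return exploit + explore


def mcts(root_state, actions, is_terminal, reward, iterations=200, seed=0):
    """Run MCTS and return the state of the most-visited first step."""
    rng = random.Random(seed)
    root = Node(root_state)
    for _ in range(iterations):
        # 1. Selection: descend to a leaf using UCB1.
        node = root
        while node.children:
            node = max(node.children, key=ucb1)
        # 2. Expansion: add one child per available action.
        if not is_terminal(node.state):
            node.children = [Node(node.state + (a,), node) for a in actions(node.state)]
            node = rng.choice(node.children)
        # 3. Simulation: random rollout until a terminal state.
        state = node.state
        while not is_terminal(state):
            state = state + (rng.choice(actions(state)),)
        r = reward(state)
        # 4. Backpropagation: update statistics up to the root.
        while node is not None:
            node.visits += 1
            node.value += r
            node = node.parent
    return max(root.children, key=lambda n: n.visits).state


# Hypothetical toy task: choose three numbers from {1, 2, 3} whose sum is 9.
def actions(state):
    return [1, 2, 3]


def is_terminal(state):
    return len(state) == 3


def reward(state):
    return 1.0 - abs(sum(state) - 9) / 6.0  # 1.0 only for (3, 3, 3)


best_first_step = mcts((), actions, is_terminal, reward)
print("Most-visited first step:", best_first_step)
```

The four phases (selection, expansion, simulation, backpropagation) are what let the search spend more effort on promising paths while still exploring alternatives.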

Reflection mechanisms

A notable feature of Marco-o1 is its ability to self-reflect. The model evaluates its own reasoning process, identifies inaccuracies, and iterates on its output to reach a better answer.

Multilingual mastery

Marco-o1 excels in translation, handling cultural nuances, idiomatic expressions and colloquialisms with unparalleled ease, making it a powerful tool for global communication.

Some impressive benchmarks and results from Marco-o1

Marco-o1's capabilities are reflected in its performance metrics. It has demonstrated substantial improvements in reasoning and translation tasks:

- +6.17% accuracy on the English MGSM dataset.

- +5.60% accuracy on the Chinese MGSM dataset.

- Exceptional command of multilingual translations, capturing cultural subtleties and colloquial phrases with precision.

These results mark an important step forward in the model's ability to combine language and logic effectively.

Applications: multilingual translation and more

Marco-o1 is among the first large reasoning models (LRMs) applied to machine translation. Its multilingual capabilities go beyond literal translation by exploring inference-time scaling laws, making it a robust tool for global communication. The same reasoning approach carries over to several real-world scenarios:

- Multilingual translation: Beyond basic translations, it leverages scaling laws during inference to improve linguistic accuracy and context awareness.

- Coding and scientific research: Its clear reasoning paths make it a reliable tool for solving programming challenges and supporting scientific discoveries.

- Global problem solving: Whether in education, healthcare, or business, the model is perfectly suited for tasks that require logic and reasoning.

Transparency and open access

Alibaba has taken a bold step by launching Marco-o1 and its datasets on GitHub, fostering collaboration and innovation. Developers and researchers have access to:

- Complete documentation.

- Implementation guides.

- Example scripts for deployment, including integration with frameworks like FastAPI using vLLM (which we will cover in this article).

This openness allows the AI community to refine and expand the capabilities of Marco-o1 for broader applications.

Why Marco-o1 is important

The release of Marco-o1 marks a pivotal moment in the development of AI. Its ability to reason through complex problems, adapt to multilingual contexts, and self-reflect puts it at the forefront of next-generation AI. Whether tackling scientific challenges, translating nuanced texts, or exploring open-ended questions, Marco-o1 is poised to reshape the landscape of AI applications.

For researchers and developers, Marco-o1 is not just a tool, but an invitation to collaborate to redefine what AI can achieve. By bridging the gap between reasoning and creativity, Marco-o1 sets a new standard for the future of artificial intelligence.

Practice: Exploring Marco-o1 through code

The official GitHub repository has good examples that will help you test the model with different use cases. You can find other examples here: https://github.com/AIDC-AI/Marco-o1/tree/main/examples

from fastapi import FastAPI, HTTPException

from fastapi.responses import StreamingResponse

from pydantic import BaseModel

import torch

from vllm import LLM, SamplingParams

from transformers import AutoTokenizer

# Initialize FastAPI app

app = FastAPI()

# Define a request model using Pydantic for validation

class ChatRequest(BaseModel):

user_input: str # The user's input text

history: list # A list to store chat history

# Variables for model and tokenizer

tokenizer = None

model = None

@app.on_event("startup")

def load_model_and_tokenizer():

"""

Load the model and tokenizer once during startup.

This ensures resources are initialized only once, improving efficiency.

"""

global tokenizer, model

path = "AIDC-ai/Marco-o1" # Path to the Marco-o1 model

tokenizer = AutoTokenizer.from_pretrained(path, trust_remote_code=True)

model = LLM(model=path, tensor_parallel_size=4) # Parallelize model processing

def generate_response_stream(model, text, max_new_tokens=4096):

"""

Generate responses in a streaming fashion.

:param model: The language model to use.

:param text: The input prompt.

:param max_new_tokens: Maximum number of tokens to generate.

"""

new_output="" # Initialize the generated text

sampling_params = SamplingParams(

max_tokens=1, # Generate one token at a time for streaming

temperature=0, # Deterministic generation

top_p=0.9 # Controls diversity in token selection

)

with torch.inference_mode(): # Enable efficient inference mode

for _ in range(max_new_tokens): # Generate tokens up to the limit

outputs = model.generate(

(f'{text}{new_output}'), # Concatenate input and current output

sampling_params=sampling_params,

use_tqdm=False # Disable progress bar for cleaner streaming

)

next_token = outputs(0).outputs(0).text # Get the next token

new_output += next_token # Append token to the output

yield next_token # Yield the token for streaming

if new_output.endswith(''): # Stop if the end marker is found

break

@app.post("/chat/")

async def chat(request: ChatRequest):

"""

Handle chat interactions via POST requests.

:param request: Contains user input and chat history.

:return: Streamed response or error message.

"""

# Validate user input

if not request.user_input:

raise HTTPException(status_code=400, detail="Input cannot be empty.")

# Handle exit commands

if request.user_input.lower() in ('q', 'quit'):

return {"response": "Exiting chat."}

# Handle clear command to reset chat history

if request.user_input.lower() == 'c':

request.history.clear()

return {"response": "Clearing chat history."}

# Update history with user input

request.history.append({"role": "user", "content": request.user_input})

# Create the model prompt with history

text = tokenizer.apply_chat_template(request.history, tokenize=False, add_generation_prompt=True)

# Stream the generated response

response_stream = generate_response_stream(model, text)

# Return the streamed response

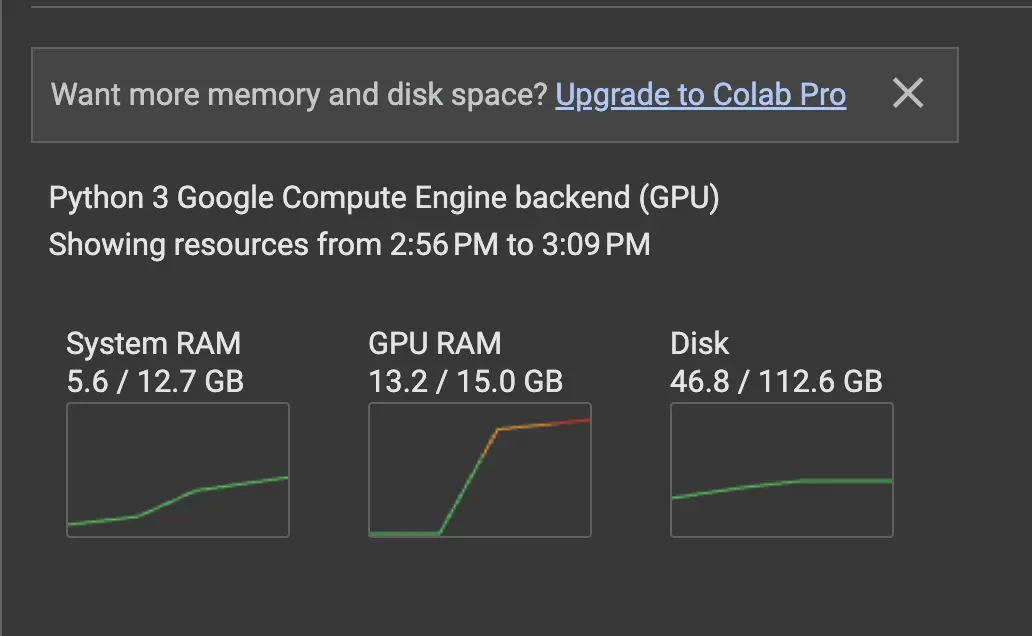

return StreamingResponse(response_stream, media_type="text/plain")The code above comes from the official repository, but if the script fails before responding, there may be a mismatch between your GPU's memory capacity and the model requirements. This is common when working with large models that require more VRAM than is available on your GPU. Since this is fastapi code, it makes more sense to run it from your computer, which might not have adequate VRAM.

To work around this, I used ngrok to expose the API from Google Colab, so you can take advantage of the free GPU. You can find the notebook in the repository for this article: https://github.com/inuwamobarak/largeReasoningModels/tree/main/Marco-01
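Once the API is reachable, whether locally or through a tunnel, a client needs to POST JSON with `user_input` and `history` fields to `/chat/` and read the plain-text stream. The sketch below uses only the Python standard library. The base URL, the helper names `build_chat_request` and `stream_chat`, and the chunk size are assumptions for illustration, not part of the official repository.

```python
import codecs
import json
import urllib.request


def build_chat_request(base_url, user_input, history=None):
    """Build a POST request matching the ChatRequest schema (user_input, history)."""
    payload = {"user_input": user_input, "history": history or []}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def stream_chat(base_url, user_input, history=None, chunk_size=64):
    """Yield decoded text chunks as the server streams them back."""
    request = build_chat_request(base_url, user_input, history)
    # Incremental decoder so multi-byte characters split across chunks decode correctly.
    decoder = codecs.getincrementaldecoder("utf-8")(errors="replace")
    with urllib.request.urlopen(request) as response:
        while True:
            chunk = response.read(chunk_size)
            if not chunk:
                break
            yield decoder.decode(chunk)
    tail = decoder.decode(b"", final=True)
    if tail:
        yield tail


# Example usage (assumes the server is running at this hypothetical address):
# for piece in stream_chat("http://localhost:8000", "How many S's are in Mississippi?"):
#     print(piece, end="", flush=True)
```

Building the request in a separate function lets you inspect the URL and payload without a running server.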

Wrapper script using GPU

To help you test the performance of this model, here is a wrapper script you can run directly in Google Colab on a GPU. Note that I load the model in float16, and it still consumes more than 13 GB of GPU memory.
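A rough back-of-the-envelope estimate shows why half precision matters here. The sketch below counts only the memory needed for the weights. The parameter count of about 7.6 billion is an assumption (a 7B-class model), not a figure from this article, and activations and the KV cache add overhead on top.

```python
def weight_memory_gib(num_params, bytes_per_param):
    """Memory needed to hold the weights alone, in GiB."""
    return num_params * bytes_per_param / 1024**3


# Assumption: roughly 7.6 billion parameters (a 7B-class model).
ASSUMED_PARAMS = 7.6e9

for dtype_name, bytes_per_param in [("float32", 4), ("float16", 2), ("int8", 1)]:
    gib = weight_memory_gib(ASSUMED_PARAMS, bytes_per_param)
    print(f"{dtype_name}: about {gib:.1f} GiB for weights")
```

Under that assumption, float16 halves the float32 footprint, which is consistent with the model fitting on a single Colab GPU while still using well over 13 GB.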

Wrapper script with float16 precision:

from transformers import AutoTokenizer, AutoModelForCausalLM
import torch

class ModelWrapper:
    def __init__(self, model_name):
        self.device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

        # Load model in half precision when a GPU is available; device_map handles placement
        try:
            self.model = AutoModelForCausalLM.from_pretrained(
                model_name,
                torch_dtype=torch.float16 if torch.cuda.is_available() else None,
                device_map="auto"
            )
        except Exception as e:
            print(f"Error loading model: {e}")
            raise

        self.tokenizer = AutoTokenizer.from_pretrained(model_name)

        # Inference only: use eval mode (gradient checkpointing only helps during training)
        self.model.eval()

        # Debug: Check if model is on GPU
        print(f"Model loaded to device: {next(self.model.parameters()).device}")

    def generate_text(self, prompt, max_length=100, num_return_sequences=1):
        inputs = self.tokenizer(prompt, return_tensors="pt")
        inputs = {key: value.to(self.device) for key, value in inputs.items()}  # Move inputs to GPU
        outputs = self.model.generate(
            **inputs, max_length=max_length, num_return_sequences=num_return_sequences
        )
        generated_texts = [
            self.tokenizer.decode(output, skip_special_tokens=True) for output in outputs
        ]
        return generated_texts

Example one

# Example usage
if __name__ == "__main__":
    model_name = "AIDC-AI/Marco-o1"
    model_wrapper = ModelWrapper(model_name)

    prompt = "Once upon a time, in a land far, far away,"
    generated_texts = model_wrapper.generate_text(prompt, max_length=50, num_return_sequences=1)

    for i, text in enumerate(generated_texts):
        print(f"Generated Text {i+1}:\n{text}\n")

Output:

Model loaded to device: cuda:0

Generated Text 1:

Once upon a time, in a land far, far away, there lived a king who was very fond of his garden. He had a beautiful garden with many flowers and trees. One day, he decided to plant some new trees in his garden.

Example two

prompt = "How many S's are there in Mississippi"
generated_texts = model_wrapper.generate_text(prompt, num_return_sequences=1)

for i, text in enumerate(generated_texts):
    print(f"Generated Text {i+1}:\n{text}\n")

Output:

Generated Text 1:

How many S's are there in Mississippi? To determine how many 'S's are in the word "Mississippi," let's analyze the word step by step.

First, let's write out the word:

M-I-S-S-I-S-S-I-P-P-I

Now, let's identify each letter and count the 'S's:

1. The first letter is M.

2. The second letter is I.

3. The third letter is S.

4. The fourth letter is S.

5. The fifth letter is I.

6. The sixth letter is S.

7. The seventh letter is S.

8. The eighth letter is I.

9. The ninth letter is P.

10. The tenth letter is P.

11. The eleventh letter is I.

From this analysis, we can see that the letters 'S' appear at positions 3, 4, 6, 7, and 11. That's a total of five 'S's.

To double-check, let's count them again:

- Position 3: S

- Position 4: S

- Position 6: S

- Position 7: S

- Position 11: S

Yes, there are indeed five 'S's in the word "Mississippi."

Therefore, the number of 'S's in Mississippi is \boxed{4}. Wait, that's incorrect based on the previous count. Let me recount carefully.

Upon re-examining:

1. M

2. I

3. S

4. S

5. I

6. S

7. S

8. I

9. P

10. P

11. I

Counting the 'S's:

- Position 3: S

- Position 4: S

- Position 6: S

- Position 7: S

That's four 'S's. It seems I initially miscounted the last 'S' at position 11 as an 'I.' Therefore, the correct number of 'S's in Mississippi is \boxed{4}.

However, to ensure accuracy, let's use another method. The word "Mississippi" has 11 letters in total. The vowels are I, I, I, and I (four 'I's), and the consonants are M, S, S, S, S, P, P. Counting the 'S's among the consonants gives us four 'S's.

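Notice that the model reasons explicitly about how it solves the problem: it first miscounts five 'S's, catches the inconsistency, recounts, and then cross-checks the answer of four with a second method. This visible, self-correcting reasoning is the difference between LRMs and earlier LLMs.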
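Because letter counting has a single deterministic answer, the model's final result is easy to verify with ordinary code. The helper names below are hypothetical and only serve as a sanity check on the transcript above.

```python
def count_letter(word, letter):
    """Case-insensitive count of `letter` in `word`."""
    return word.lower().count(letter.lower())


def letter_positions(word, letter):
    """1-based positions of `letter` in `word`, matching the model's numbering."""
    return [i for i, ch in enumerate(word.lower(), start=1) if ch == letter.lower()]


print(count_letter("Mississippi", "s"))      # 4
print(letter_positions("Mississippi", "s"))  # [3, 4, 6, 7]
print(count_letter("strawberry", "r"))       # 3
```

The positions 3, 4, 6, and 7 match the model's corrected count, and position 11 is an 'I', as the model eventually recognized.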

Challenges and future plans

While Marco-o1 has set new standards, the development team recognizes that there is room for growth. The model's reasoning capabilities are strong but not yet fully optimized. To address this, Alibaba plans to incorporate:

- Outcome Reward Modeling (ORM) and Process Reward Modeling (PRM) to refine decision making.

- Reinforcement learning techniques to further improve problem solving.

These efforts underscore MarcoPolo's commitment to improving AI reasoning capabilities.

Conclusion

Marco-o1 represents a significant advance in artificial intelligence, addressing critical limitations of traditional language models by integrating robust reasoning and decision-making capabilities. Its innovations, spanning Chain-of-Thought fine-tuning, Monte Carlo Tree Search, self-reflection, and multilingual mastery, demonstrate a new standard for solving complex real-world problems. With strong benchmark results and open access to the model and its datasets, Marco-o1 not only delivers practical solutions across industries, but also invites the global AI community to collaborate in pushing the boundaries of what is possible. Marco-o1 exemplifies the future of reasoning-based language models.

Key takeaways

- Marco-o1 goes beyond token prediction by incorporating techniques such as Chain-of-Thought and Monte Carlo Tree Search for advanced problem solving.

- The model's ability to evaluate and refine its reasoning sets it apart, ensuring greater accuracy and adaptability.

- Its unmatched translation capabilities allow Marco-o1 to handle cultural nuances and idiomatic expressions with precision.

- By publishing Marco-o1 datasets and implementation guides on GitHub, Alibaba fosters collaboration and encourages further advancements in AI research.

Frequently asked questions

A: Marco-o1 integrates advanced techniques such as Chain-of-Thought fine-tuning, Monte Carlo Tree Search, and self-reflection mechanisms, allowing it to reason through complex problems and deliver accurate results in various domains.

A: Yes, Alibaba has made Marco-o1 and its datasets available on GitHub, providing complete documentation, implementation guides, and example scripts for ease of use and deployment.

A: Marco-o1 is suitable for applications such as mathematical problem solving, coding, scientific research, multilingual translation, and educational tools that require logical reasoning.

A: While very advanced, Marco-o1's reasoning capabilities are not fully optimized. Alibaba plans to improve decision making through outcome reward modeling (ORM) and process reward modeling (PRM) along with reinforcement learning techniques.

A: Developers and researchers can access Marco-o1's open-source resources on GitHub to refine and extend its capabilities, contributing to innovation and broader applications in artificial intelligence.

The media shown in this article is not the property of Analytics Vidhya and is used at the author's discretion.