Sampling from complex probability distributions is important in many fields, including statistical modeling, machine learning, and physics. This involves generating representative data points from a target distribution to solve problems such as Bayesian inference, molecular simulations, and optimization in high-dimensional spaces. Unlike generative modeling, which uses preexisting data samples, sampling requires algorithms to explore high-probability regions of the distribution without direct access to those samples. This task becomes more complex in high-dimensional spaces, where accurately identifying and estimating regions of interest requires efficient exploration strategies and substantial computational resources.

A major challenge in this area arises from the need to sample from non-normalized densities, where the normalization constant is often unattainable. Without this constant, even evaluating the probability of a given point becomes difficult. The problem gets worse as the dimensionality of the distribution increases: the probability mass is often concentrated in narrow regions, making traditional methods computationally expensive and inefficient. Current methods often struggle to balance the trade-off between computational efficiency and sampling accuracy for high-dimensional problems with sharp, well-separated modes.
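To make the role of the normalization constant concrete, here is a minimal sketch on a toy one-dimensional density. The density `log_rho`, the Gaussian proposal `log_q`, and all parameter values are illustrative assumptions, not taken from the paper. The constant is known in closed form only because the example is this simple; in realistic problems it has to be estimated, as done here with plain importance sampling.

```python
import numpy as np

def log_rho(x):
    """Unnormalized log-density: two unit-variance Gaussian bumps at -3 and +3."""
    return np.logaddexp(-0.5 * (x - 3.0) ** 2, -0.5 * (x + 3.0) ** 2)

def log_q(x, scale=4.0):
    """Normalized log-density of the Gaussian proposal N(0, scale^2)."""
    return -0.5 * (x / scale) ** 2 - np.log(scale) - 0.5 * np.log(2.0 * np.pi)

def estimate_log_z(n=100_000, scale=4.0, seed=0):
    """Importance-sampling estimate of log Z, where Z is the integral of rho."""
    rng = np.random.default_rng(seed)
    x = rng.normal(0.0, scale, size=n)
    log_w = log_rho(x) - log_q(x, scale)  # log importance weights
    m = log_w.max()                       # subtract the max for numerical stability
    return m + np.log(np.mean(np.exp(log_w - m)))

# Closed form, available only for this toy case: Z = 2 * sqrt(2 * pi)
true_log_z = np.log(2.0) + 0.5 * np.log(2.0 * np.pi)
print(estimate_log_z(), true_log_z)
```

A wide proposal works here because the problem is one-dimensional. In high dimensions a fixed proposal rarely overlaps the narrow regions that carry the probability mass, which is the difficulty described above.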

Two main approaches address these challenges, each with limitations:

- Sequential Monte Carlo (SMC): SMC techniques work by gradually evolving particles from a simple initial prior distribution to a complex target distribution through a series of intermediate steps. These methods use tools such as Markov Chain Monte Carlo (MCMC) to refine particle positions and resampling to focus on more likely regions. However, SMC methods can suffer from slow convergence because they rely on predefined transitions that are not dynamically optimized for the target distribution.

- Diffusion-based methods: Diffusion-based methods learn the dynamics of stochastic differential equations (SDEs) to transport samples toward the target distribution. This adaptability allows them to overcome some limitations of SMC, but often at the cost of instability during training and susceptibility to problems such as mode collapse.
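The SMC recipe described above (reweight, resample, refine with MCMC) can be sketched on a toy problem. This is a generic textbook-style annealed SMC sampler, not the authors' code. The bimodal target, the geometric annealing path, the prior scale `S0`, the resampling threshold, and the random-walk Metropolis move are all assumptions chosen for illustration.

```python
import numpy as np

S0 = 3.0  # prior standard deviation (hypothetical choice)

def log_prior(x):
    return -0.5 * (x / S0) ** 2 - np.log(S0) - 0.5 * np.log(2.0 * np.pi)

def log_target(x):
    # Unnormalized bimodal target with modes at -3 and +3
    return np.logaddexp(-0.5 * (x - 3.0) ** 2, -0.5 * (x + 3.0) ** 2)

def log_annealed(x, beta):
    # Geometric interpolation between prior (beta=0) and target (beta=1)
    return (1.0 - beta) * log_prior(x) + beta * log_target(x)

def normalize(log_w):
    w = np.exp(log_w - log_w.max())
    return w / w.sum()

def smc(n=2000, steps=20, mcmc_moves=5, step_size=1.0, seed=0):
    rng = np.random.default_rng(seed)
    x = rng.normal(0.0, S0, size=n)  # particles drawn from the prior
    log_w = np.zeros(n)
    betas = np.linspace(0.0, 1.0, steps + 1)
    for b_prev, b in zip(betas[:-1], betas[1:]):
        # 1) Reweight: ratio of consecutive annealed densities at the current particles
        log_w += (b - b_prev) * (log_target(x) - log_prior(x))
        w = normalize(log_w)
        # 2) Resample when the effective sample size drops below n / 2
        if 1.0 / np.sum(w ** 2) < 0.5 * n:
            x = x[rng.choice(n, size=n, p=w)]
            log_w = np.zeros(n)
        # 3) Refine: random-walk Metropolis moves that leave the current density invariant
        for _ in range(mcmc_moves):
            prop = x + step_size * rng.normal(size=n)
            log_acc = log_annealed(prop, b) - log_annealed(x, b)
            accept = np.log(rng.uniform(size=n)) < log_acc
            x = np.where(accept, prop, x)
    return x, normalize(log_w)

particles, weights = smc()
print("weighted mass at x > 0:", weights[particles > 0].sum())
```

The transition in step 3 is fixed in advance. A diffusion-based sampler would replace it with a learned drift, which is the component SMC lacks.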

Researchers from the University of Cambridge, Zuse Institute Berlin, dida Datenschmiede GmbH, the California Institute of Technology, and the Karlsruhe Institute of Technology proposed a new sampling method called Sequential Controlled Langevin Diffusion (SCLD). This method combines the robustness of SMC with the adaptability of diffusion-based samplers. The researchers framed both methods within a continuous-time paradigm, allowing for seamless integration of learned stochastic transitions with SMC resampling strategies. In this way, the SCLD algorithm capitalizes on the strengths of both approaches while addressing their weaknesses.

The SCLD algorithm introduces a continuous-time framework in which particle trajectories are optimized using a combination of annealing and adaptive controls. Starting from a prior distribution, particles are guided toward the target distribution along a sequence of annealed densities, incorporating resampling and MCMC refinements to maintain diversity and precision. The algorithm uses a log-variance loss function, which promotes numerical stability and scales effectively in high dimensions. The SCLD framework enables end-to-end optimization, allowing direct training of its components to improve performance and efficiency. Using stochastic rather than deterministic transitions further improves the algorithm's ability to explore complex distributions without falling into local optima.
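The following is a simplified sketch of the two ingredients named above: a controlled Langevin transition along annealed densities, and a log-variance loss. It is not the authors' implementation. It omits the resampling and MCMC steps, uses a zero placeholder where a trained network would supply the control, and takes the log-variance loss to be the variance of the log importance weights between forward and backward path measures, which is how this loss is commonly defined. The function names, step size, and toy target are assumptions.

```python
import numpy as np

S0 = 3.0  # prior standard deviation (hypothetical choice)

def log_prior(x):   # normalized N(0, S0^2)
    return -0.5 * (x / S0) ** 2 - np.log(S0) - 0.5 * np.log(2.0 * np.pi)

def log_target(x):  # unnormalized, modes at -3 and +3
    return np.logaddexp(-0.5 * (x - 3.0) ** 2, -0.5 * (x + 3.0) ** 2)

def grad_log_annealed(x, beta):
    grad_prior = -x / S0 ** 2
    grad_target = -x + 3.0 * np.tanh(3.0 * x)  # analytic gradient of log_target
    return (1.0 - beta) * grad_prior + beta * grad_target

def control(x, beta):
    """Placeholder for the learned control; a trained network would go here."""
    return np.zeros_like(x)

def log_normal(x, mean, var):
    return -0.5 * (x - mean) ** 2 / var - 0.5 * np.log(2.0 * np.pi * var)

def simulate(n=2000, steps=100, dt=0.02, seed=0):
    """Euler-Maruyama simulation that accumulates log importance weights per path."""
    rng = np.random.default_rng(seed)
    betas = np.linspace(0.0, 1.0, steps + 1)
    x = rng.normal(0.0, S0, size=n)
    log_w = -log_prior(x)
    for beta in betas[1:]:
        # Forward step: annealed Langevin drift plus control
        mean_f = x + dt * (grad_log_annealed(x, beta) + control(x, beta))
        x_new = mean_f + np.sqrt(2.0 * dt) * rng.normal(size=n)
        # Backward kernel: same drift with the sign of the control flipped
        mean_b = x_new + dt * (grad_log_annealed(x_new, beta) - control(x_new, beta))
        log_w += log_normal(x, mean_b, 2.0 * dt) - log_normal(x_new, mean_f, 2.0 * dt)
        x = x_new
    return x, log_w + log_target(x)

def log_variance_loss(log_w):
    # Zero exactly when all paths carry the same weight
    return float(np.var(log_w))

samples, log_w = simulate()
print("log-variance loss with zero control:", log_variance_loss(log_w))
```

In a trainable version, `control` would be a neural network and the loss would be minimized with automatic differentiation, for example in JAX or PyTorch. A practical appeal of a variance-based objective is that it does not depend on the unknown normalization constant, since adding a constant to every log weight leaves the variance unchanged.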

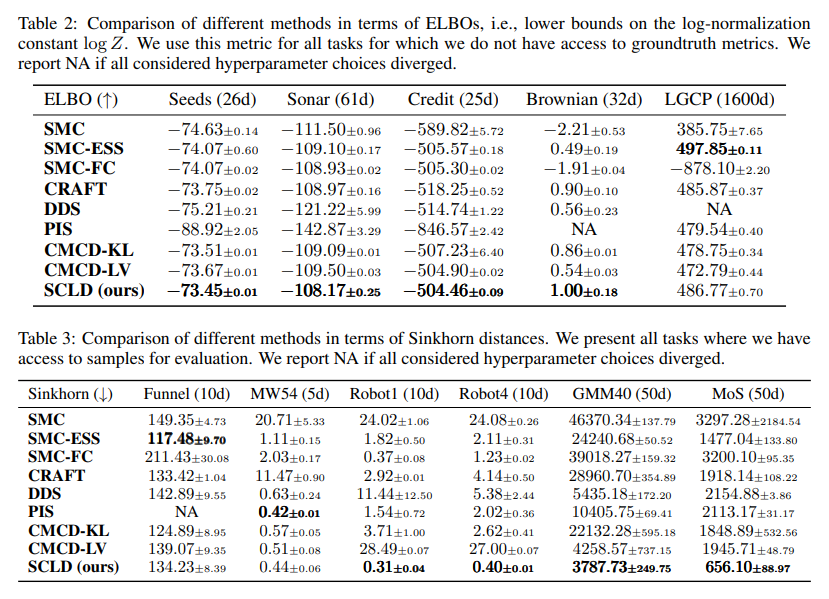

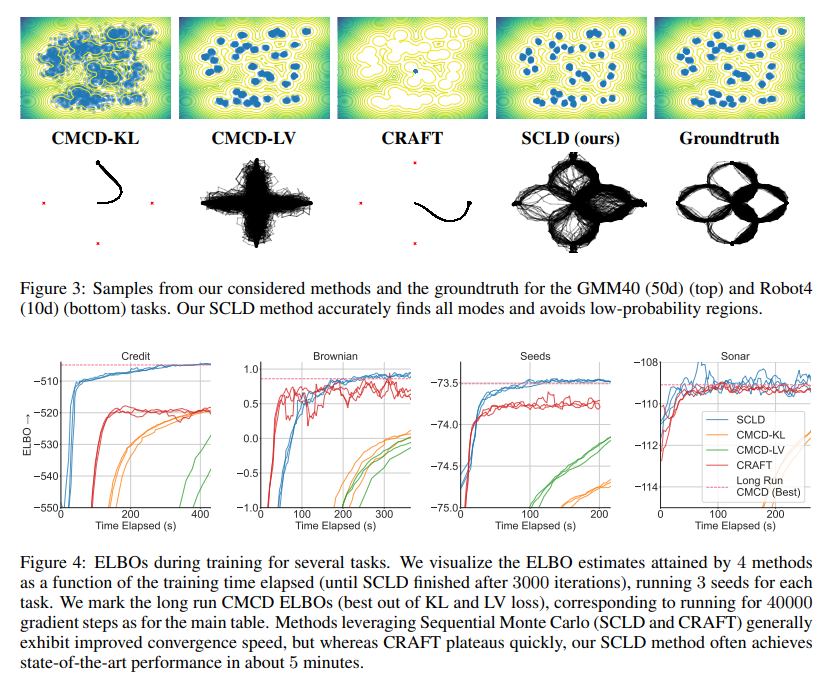

The researchers tested the SCLD algorithm on 11 benchmark tasks, covering a mix of synthetic and real-world examples. These included high-dimensional problems such as Gaussian mixture models with 40 modes in 50 dimensions (GMM40), robotic arm configurations with multiple well-separated modes, and practical tasks such as Bayesian inference for credit datasets and Brownian motion. Across these benchmarks, SCLD outperformed other methods, including traditional SMC, CRAFT, and Controlled Monte Carlo Diffusions (CMCD).
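For a sense of what a target like GMM40 looks like to a sampler, here is a sketch of an unnormalized log-density for an equally weighted Gaussian mixture with 40 components in 50 dimensions. The component means, their range, and the unit isotropic covariance are hypothetical choices for illustration. The exact benchmark specification is not given in this article.

```python
import numpy as np

def make_gmm_log_density(means):
    """Return log_rho(x) for an equal-weight mixture of unit-covariance Gaussians.

    The result is unnormalized: mixture weights and Gaussian constants are dropped.
    """
    means = np.asarray(means, dtype=float)  # shape (K, d)

    def log_rho(x):
        x = np.atleast_2d(x)                                          # shape (n, d)
        sq = np.sum((x[:, None, :] - means[None, :, :]) ** 2, axis=-1)  # shape (n, K)
        a = -0.5 * sq
        m = a.max(axis=1, keepdims=True)                              # stable log-sum-exp
        return (m + np.log(np.sum(np.exp(a - m), axis=1, keepdims=True)))[:, 0]

    return log_rho

rng = np.random.default_rng(0)
means = rng.uniform(-10.0, 10.0, size=(40, 50))  # hypothetical mode locations
log_rho = make_gmm_log_density(means)
print(log_rho(means[:3]))            # high values at the modes
print(log_rho(np.zeros((1, 50))))    # very low value away from every mode
```

With modes this far apart, a sampler that only follows local gradients tends to settle in a few components, which is the mode-collapse failure that multimodal benchmarks are designed to expose.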

The SCLD algorithm achieved state-of-the-art results on many benchmark tasks with only 10% of the training budget required by other diffusion-based methods. In the ELBO estimation tasks, SCLD achieved the best performance in all but one task, using only 3,000 gradient steps to outperform the results obtained by CMCD-KL and CMCD-LV after 40,000 steps. In multimodal tasks such as GMM40 and Robot4, SCLD avoided mode collapse and accurately sampled all target modes, unlike CMCD-KL, which collapsed into fewer modes, and CRAFT, which had problems with sampling diversity. Convergence analysis revealed that SCLD quickly outperformed competitors like CRAFT, with state-of-the-art results in five minutes and a 10x reduction in training and iteration time compared to CMCD.
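As a reference for how metrics such as the ELBO are typically computed from a sampler's output, the sketch below takes an array of log importance weights and returns the ELBO, an importance-weighted estimate of the log normalization constant, and the effective sample size. The function names are illustrative, and the paper's exact evaluation protocol may differ.

```python
import numpy as np

def elbo(log_w):
    """Mean log weight; by Jensen's inequality a lower bound on log Z in expectation."""
    return float(np.mean(log_w))

def log_z_estimate(log_w):
    """Log of the mean weight, computed stably by subtracting the maximum."""
    m = np.max(log_w)
    return float(m + np.log(np.mean(np.exp(log_w - m))))

def effective_sample_size(log_w):
    """(sum w)^2 / sum w^2; equals the number of samples when all weights agree."""
    w = np.exp(log_w - np.max(log_w))
    return float(w.sum() ** 2 / np.sum(w ** 2))

log_w = np.log(np.array([1.0, 1.0, 2.0, 2.0]))
print(elbo(log_w), log_z_estimate(log_w), effective_sample_size(log_w))
```

The gap between the log Z estimate and the ELBO shrinks as the weights become more uniform, so a higher ELBO on a fixed target indicates a sampler whose paths match the target more closely.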

Several key conclusions and insights emerge from this research:

- The hybrid approach combines the robustness of SMC resampling steps with the flexibility of learned diffusion transitions, offering a balanced and efficient sampling mechanism.

- By leveraging end-to-end optimization and a log-variance loss function, SCLD achieves high accuracy with minimal computational resources, often requiring only 10% of the training iterations needed by competing methods.

- The algorithm works robustly in high-dimensional spaces, such as 50-dimensional tasks, where traditional methods struggle with mode collapse or convergence problems.

- The method shows promise in various applications, including robotics, Bayesian inference, and molecular simulations, demonstrating its versatility and practical relevance.

In conclusion, the SCLD algorithm effectively addresses the limitations of Sequential Monte Carlo and diffusion-based methods. By integrating robust resampling with adaptive stochastic transitions, SCLD achieves greater efficiency and accuracy with minimal computational resources while delivering superior performance on high-dimensional and multimodal tasks. It applies to tasks ranging from robotics to Bayesian inference, and it sets a new benchmark for sampling algorithms and complex statistical computations.

Check out the Paper. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity among the public.
