Transformers have been the basis of large language models (LLMs), and recently their application has expanded to graph search problems, a fundamental domain in computational logic, planning, and artificial intelligence. Graph search is essential for solving tasks that require systematically exploring nodes and edges to find connections or paths. Despite the apparent adaptability of transformers, Its ability to perform graph searches efficiently remains an open question.especially when scaling to large and complex data sets.

Charts offer a way to present complex data, but they present different challenges than others. In graph search tasks, the structured nature of the data and the computational demands required to explore paths between nodes make it challenging and require efficient algorithms. Especially large-scale graphs amplify this complexity and require algorithms to navigate exponentially growing search spaces. Current transformer architectures demonstrate limited success and often rely on heuristic approaches that compromise their robustness and generalizability. The difficulty increases with graph size, as transformers must identify patterns or algorithms that work universally across all data sets. This limitation raises concerns about its scalability and generalizability to various search scenarios.

Attempts to address these limitations have primarily focused on leveraging chain-of-thought prompts and similar techniques, allowing models to break down tasks into incremental steps. While this approach is promising for smaller, less complex graphs, it is not sufficient for larger graphs with more complex connectivity patterns. Additionally, increasing model parameters or adding more training data does not consistently improve performance. For example, even with sophisticated pointing methods, transformers suffer from errors and inefficiencies in identifying the correct path in expansive graph structures. These challenges necessitate a change in the way transformers are trained and optimized for graph search tasks.

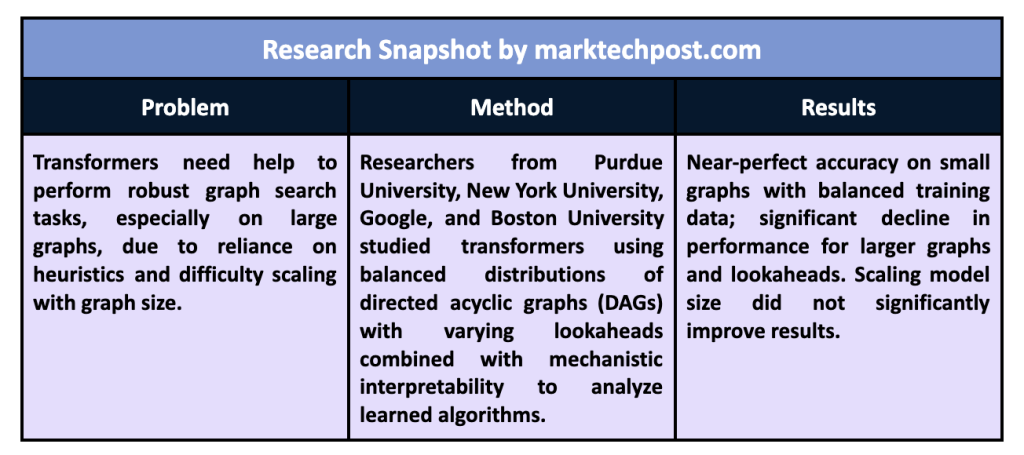

In a recent study, researchers from Purdue University, New York University, Google, and Boston University introduced a training framework to improve the graph search capabilities of transformers.. To ensure comprehensive training coverage, the researchers employed an innovative strategy using directed acyclic graphs (DAGs) as a testbed and balanced data distributions. By designing data sets that discouraged reliance on heuristics, the team aimed to enable transformers to learn robust algorithms. The training involved systematically generating examples with varying levels of complexity, as it allowed the model to progressively develop its understanding of graph connectivity.

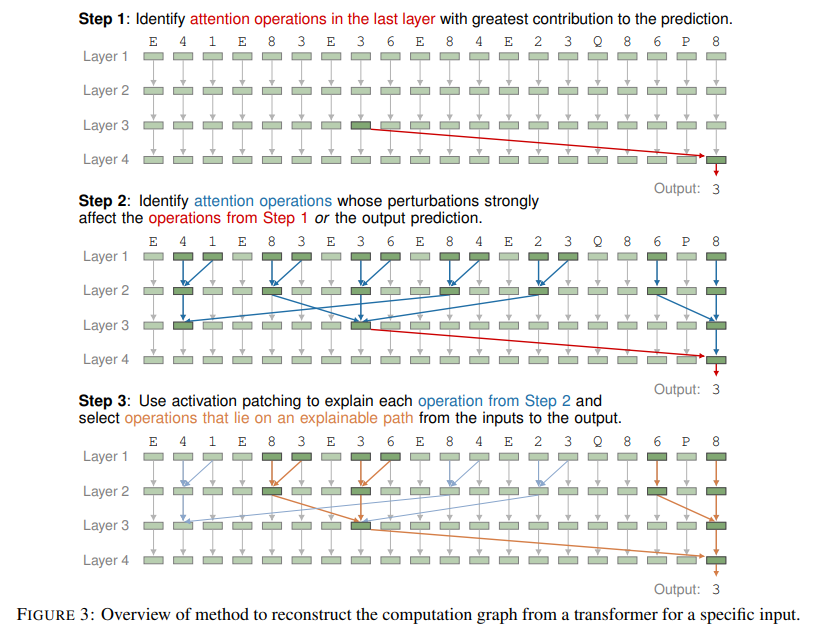

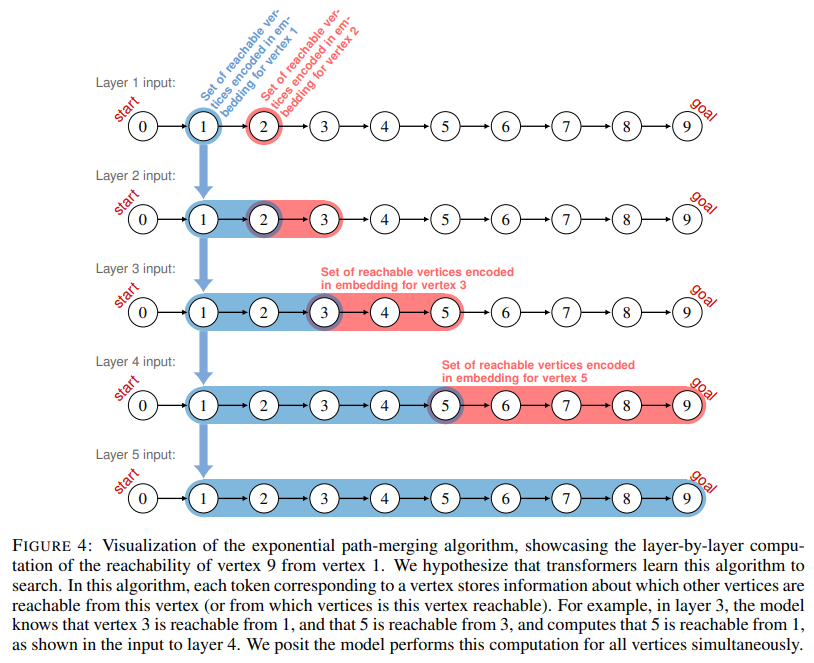

The training methodology focused on a balanced distribution of graphs, ensuring a uniform representation of scenarios with different anticipations (Lookahead refers to the number of steps a model must search to connect the starting vertex to the target vertex in a graph.) and the number of steps required to find a route between two nodes. The transformers were trained to exponentially compute sets of reachable vertices, layer by layer, effectively simulating an “exponential path fusion” algorithm. This technique allowed the embeddings to encode detailed information about connectivity, allowing the model to systematically explore paths. The researchers analyzed the internal operations of the transformers using mechanistic interpretability techniques to validate this approach. By reconstructing computation graphs, they demonstrated how attention layers aggregated and processed information to identify paths in DAGs.

The results of the study were a mix of positives and negatives. Transformers trained on the balanced distribution achieved near-perfect accuracy for small graphs, even with lookaheads of up to 12 steps. However, performance decreased significantly as graphics sizes increased. For example, models trained on graphs with lookahead constraints of up to 12 had difficulty generalizing to larger predictions beyond this range. Accuracy on larger graphs dropped below 50% for anticipations exceeding 16 steps. With an input size of 128 tokens and a 6-layer architecture, the transformers could not reliably handle expansive graphs. The study also highlighted that the scale model size did not solve these problems. The larger models did not show consistent improvement in handling complex search tasks, underscoring the inherent limitations of the transformer architecture.

The researchers also explored alternative methods, such as depth-first search (DFS) and selection inference prompting, to evaluate whether intermediate steps could improve performance. While these approaches simplified certain tasks, they needed to fundamentally improve the transformers' ability to handle larger graph sizes. The models struggled with graphs exceeding 45 vertices, even when trained on distributions designed to emphasize exploration. Furthermore, increasing the scale of the training data or the number of parameters did not yield substantial gains, emphasizing that more than traditional scaling strategies are required to overcome these challenges.

Some of the key findings from the research include the following:

- Models trained with balanced distributions that eliminated reliance on shortcuts outperformed those trained with naïve or heuristic-based data sets. This finding underscores the importance of carefully selecting training data to ensure robust learning.

- The researchers identified the “exponential path fusion” algorithm as a key process when analyzing the inner workings of transformers. This understanding could guide future architectural improvements and training methodologies.

- The models showed increasing difficulty as graph sizes grew, with accuracy decreasing significantly for larger projections and graphs larger than 31 nodes.

- Increasing the model parameters or the size of the training data sets did not resolve the limitations, indicating that architectural changes or new training paradigms are necessary.

- Alternative architectures, such as loop transformers, and advanced training methods, such as curricular learning, may offer potential solutions. Integrating mechanistic insights could also lead to better algorithmic understanding and scalability.

In conclusion, this research comprehensively analyzes the capabilities and limitations of transformers for performing graph search tasks. By demonstrating the effectiveness of custom training distributions and uncovering the internal algorithms used by the models, the study offers valuable insights into the computational behavior of transformers. However, the challenges of scaling to larger graphs and achieving robust generalization highlight the need for innovative approaches. These findings emphasize the need for alternative architectures or advanced training approaches to improve scalability and robustness in graph-based reasoning tasks.

Verify he Paper. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on <a target="_blank" href="https://twitter.com/Marktechpost”>twitter and join our Telegram channel and LinkedIn Grabove. Don't forget to join our SubReddit over 60,000 ml.

(You must subscribe): Subscribe to our newsletter to receive updates on ai research and development

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. Their most recent endeavor is the launch of an ai media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity among the public.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>