While everyone has been waiting with bated breath for big things from OpenAI, their recent launches have honestly been a bit of a letdown. On the first day of its “12 days, 12 livestreams” event, Sam Altman announced o1 and ChatGPT Pro, but the launches didn’t live up to the hype and still aren’t available through the API, making it hard to justify the hefty $200 Pro price tag. Meanwhile, their “new launch” of a form-based waitlist for custom training feels more like a rushed afterthought than a genuine release. But here’s the twist: just when the spotlight was all on OpenAI, Meta swooped in and introduced its brand-new open-source model, Llama 3.3 70B, claiming to match the performance of Llama 3.1 405B at a far more approachable scale.

Here’s the fun part:

What is Llama 3.3 70B?

Meta introduced Llama 3.3, a 70-billion-parameter large language model (LLM) poised to challenge the industry’s frontier models. With cost-effective performance that rivals much larger models, Llama 3.3 marks a significant step forward in accessible, high-quality AI.

Llama 3.3 70B is the latest model in the Llama family. According to Meta, it delivers performance on par with the earlier 405-billion-parameter Llama 3.1 model while being more cost-efficient and easier to run. This opens the door to a wider range of applications and makes cutting-edge AI technology available to smaller organizations and individual developers.


Meta just dropped Llama 3.3 — a 70B open model that offers similar performance to Llama 3.1 405B, but significantly faster and cheaper.

It’s also ~25x cheaper than GPT-4o.

Text only for now, and available to download at llama.com/llama-downloads

Rowan Cheung (@rowancheung) on X, December 6, 2024 (https://twitter.com/rowancheung/status/1865107802508730853)

Llama 3.1 405B

- 405B parameters required: AI models demanded massive parameter counts to deliver top-tier performance.

- Limited language support: Functionality was constrained by the lack of multilingual capabilities.

- Isolated capabilities: Tools and features worked independently, with minimal integration.


Llama 3.3 70B

- 70B parameters, same power: Modern models achieve equivalent computational performance with significantly reduced parameters, improving efficiency.

- 8 languages supported: Enhanced multilingual capabilities enable broader accessibility and application.

- Seamless tool integration: Improved interoperability ensures tools and functionalities work together seamlessly.

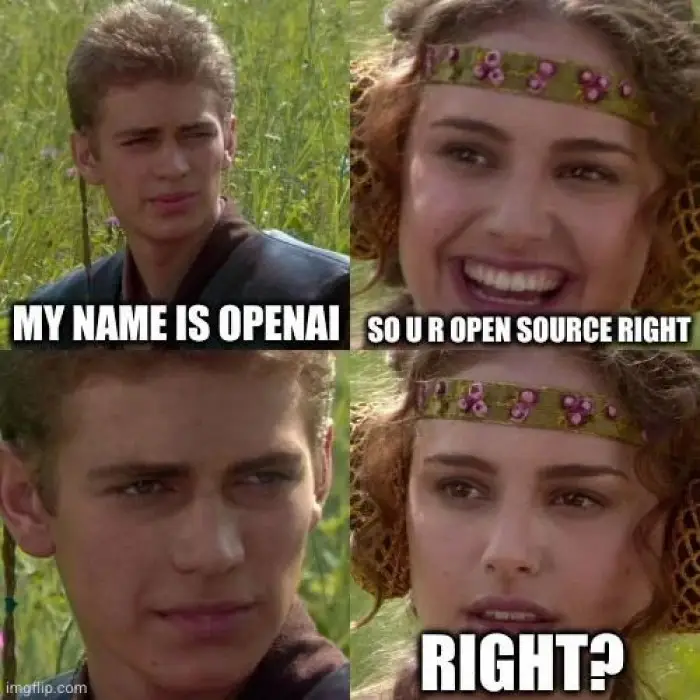

The Architecture of Llama 3.3 70B

1. Auto-Regressive Language Model

Llama 3.3 generates text by predicting the next word in a sequence based on the words it has already seen. This step-by-step approach is called “auto-regressive,” meaning it builds the output incrementally, ensuring that each word is informed by the preceding context.
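To make the idea concrete, here is a minimal sketch of greedy auto-regressive decoding. The lookup table `NEXT_TOKEN_SCORES` is a hypothetical stand-in for the model, and for brevity it only looks at the last token, whereas a real LLM conditions on the entire preceding context.

```python
# Toy next-token scores standing in for a real language model (hypothetical data).
# A real model scores every vocabulary token given the whole prefix.
NEXT_TOKEN_SCORES = {
    "the": {"moon": 0.6, "ocean": 0.4},
    "moon": {"speaks": 0.7, "the": 0.3},
    "ocean": {"speaks": 1.0},
    "speaks": {"<eos>": 1.0},
}


def generate(prompt_tokens, max_new_tokens=5):
    """Greedy auto-regressive decoding: append the most likely next token each step."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        candidates = NEXT_TOKEN_SCORES.get(tokens[-1])
        if not candidates:
            break  # nothing known about this token, stop generating
        next_token = max(candidates, key=candidates.get)
        if next_token == "<eos>":
            break  # end-of-sequence marker
        tokens.append(next_token)  # the new token becomes part of the context
    return tokens


print(generate(["the"]))  # ['the', 'moon', 'speaks']
```

Each loop iteration feeds the output so far back in as input, which is the defining property of auto-regressive generation.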

2. Optimized Transformer Architecture

Transformers are the backbone of modern language models, leveraging mechanisms like attention to focus on the most relevant parts of a sentence. An optimized architecture means Llama 3.3 has enhancements (e.g., better efficiency or performance) over earlier versions, potentially improving its ability to generate coherent and contextually appropriate responses while using computational resources more effectively.
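As an illustration of the attention mechanism mentioned above, the following is a simplified, pure-Python sketch of scaled dot-product attention for a single query. The vectors are made-up toy values, and this is the textbook formulation rather than Meta’s optimized implementation.

```python
import math


def softmax(xs):
    """Convert raw scores into weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Similarity of the query to each key, scaled by sqrt(dimension)
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # Output is the weighted average of the value vectors
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, output = attention([1.0, 0.0], keys, values)
print([round(w, 3) for w in weights])
```

Keys that point in the same direction as the query receive larger weights, so their values dominate the output. That is what “focusing on the most relevant parts” means in practice.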

3. Supervised Fine-Tuning (SFT)

- What it is: The model is trained on labeled datasets where examples of good responses are provided by humans. This process helps the model learn to mimic human-like behaviour in specific tasks.

- Why it’s used: This step improves the model’s baseline performance by aligning it with human expectations.

4. Reinforcement Learning with Human Feedback (RLHF)

- What it is: After SFT, the model undergoes reinforcement learning. Here, it interacts with humans or systems that rate its outputs, guiding it to improve over time.

- Reinforcement Learning: The model is rewarded (or penalized) based on how well it aligns with desired behaviours.

- Human Feedback: Humans directly rate or influence what is considered a “good” or “bad” response, which helps the model refine itself further.

- Why it matters: RLHF helps fine-tune the model’s ability to provide outputs that are not only accurate but also align with preferences for helpfulness, safety, and ethical considerations.
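The article does not describe the exact objective Meta used, so the following is only a sketch of one formulation that is common in RLHF pipelines: a pairwise preference loss for training a reward model. The function name and the example reward values are illustrative assumptions.

```python
import math


def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)).

    The loss is small when the human-preferred reply gets the higher reward,
    and large when the reward model ranks the two replies the wrong way round.
    """
    margin = reward_chosen - reward_rejected
    return math.log1p(math.exp(-margin))  # equals -log(sigmoid(margin))


print(round(preference_loss(2.0, 0.0), 4))  # preferred reply scored higher
print(round(preference_loss(0.0, 2.0), 4))  # preferred reply scored lower
```

A reward model trained this way can then supply the reward signal during the reinforcement learning stage.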


5. Alignment with Human Preferences

The combined use of SFT and RLHF ensures that Llama 3.3 behaves in ways that prioritize:

- Helpfulness: Providing useful and accurate information.

- Safety: Avoiding harmful, offensive, or inappropriate responses.

Performance Comparisons with Other Models

When compared to frontier models like OpenAI’s GPT-4o, Google’s Gemini, and even Meta’s own Llama 3.1 405B model, Llama 3.3 stands out:

- Instruction Following and Long Context: Llama 3.3’s ability to understand and follow complex instructions over extended contexts of up to 128,000 tokens puts it on par with top-tier offerings from both Google and OpenAI. This level of extended context memory is crucial for tasks like long-form content generation, in-depth reasoning, and multi-step coding problems.

- Mathematical and Logical Reasoning: Early benchmarks indicate that Llama 3.3 slightly outperforms GPT-4o on math tasks (77.0 vs. 76.9 on MATH), suggesting strong reasoning and solution-finding capabilities. In real-world scenarios, this translates into more accurate problem-solving across technical domains, including data analysis, engineering calculations, and scientific research support.

- Cost-Effectiveness: Perhaps the most compelling advantage of Llama 3.3 is its significant reduction in operational costs. While GPT-4o costs around $2.50 per million input tokens and $10 per million output tokens, Llama 3.3’s estimated pricing drops to just $0.10 per million input tokens and $0.40 per million output tokens. Such a dramatic cost decrease, roughly 25 times cheaper, enables developers and businesses to deploy state-of-the-art language models with reduced financial barriers.

Let’s compare it in detail with different benchmarks:

1. General Performance

- MMLU (0-shot, CoT):

- Llama 3.3 70B: 86.0, matching Llama 3.1 70B but slightly below Llama 3.1 405B (88.6) and GPT-4o (87.5).

- It edges out Amazon Nova Pro (85.9) and is close to Gemini Pro 1.5 (87.1).

- MMLU PRO (5-shot, CoT):

- Llama 3.3 70B: 68.9, better than Llama 3.1 70B (66.4) but behind Gemini Pro 1.5 (76.1).

- It also trails the more expensive GPT-4o (73.8).

Takeaway: Llama 3.3 70B balances cost and performance in general benchmarks while staying competitive with larger, costlier models.

2. Instruction Following

- IFEval:

- Llama 3.3 70B: 92.1, outperforming Llama 3.1 70B (87.5) and matching Amazon Nova Pro (92.1).

- Surpasses Gemini Pro 1.5 (81.9) and GPT-4o (84.6).

Takeaway: This metric highlights the strength of Llama 3.3 70B in adhering to instructions, particularly with post-training optimization.

3. Code Generation

- HumanEval (0-shot):

- Llama 3.3 70B: 88.4, better than Llama 3.1 70B (80.5), nearly equal to Amazon Nova Pro (89.0), and ahead of GPT-4o (86.0).

- MBPP EvalPlus:

- Llama 3.3 70B: 87.6, better than Llama 3.1 70B (86.0) and GPT-4o (83.9).

Takeaway: Llama 3.3 70B excels in code-based tasks with notable performance boosts from optimization techniques.

4. Mathematical Reasoning

- MATH (0-shot, CoT):

- Llama 3.3 70B: 77.0, a substantial improvement over Llama 3.1 70B (68.0) and better than Amazon Nova Pro (76.6).

- Slightly below Gemini Pro 1.5 (82.9) and on par with GPT-4o (76.9).

Takeaway: Llama 3.3 70B handles math tasks well, though Gemini Pro 1.5 edges ahead in this domain.

5. Reasoning

- GPQA Diamond (0-shot, CoT):

- Llama 3.3 70B: 50.5, ahead of Llama 3.1 70B (48.0) but slightly behind Gemini Pro 1.5 (53.5).

Takeaway: Improved reasoning performance makes it a strong choice compared to earlier models.

6. Tool Use

- BFCL v2 (0-shot):

- Llama 3.3 70B: 77.3, similar to Llama 3.1 70B (77.5) but behind Gemini Pro 1.5 (80.3).

7. Context Handling

- Long Context (NIH/Multi-Needle):

- Llama 3.3 70B: 97.5, matching Llama 3.1 70B and very close to Llama 3.1 405B (98.1).

- Better than Gemini Pro 1.5 (94.7).

Takeaway: Llama 3.3 70B is highly efficient at handling long contexts, a key advantage for applications needing large inputs.

8. Multilingual

- Multilingual MGSM (0-shot):

- Llama 3.3 70B: 91.1, significantly ahead of Llama 3.1 70B (86.9) and competitive with GPT-4o (90.6).

Takeaway: Strong multilingual capabilities make it a solid choice for diverse language tasks.

9. Pricing

- Input Tokens: $0.1 per million tokens, the cheapest among all models.

- Output Tokens: $0.4 per million tokens, significantly cheaper than GPT-4o ($10) and others.

Takeaway: Llama 3.3 70B offers exceptional cost-efficiency, making high-performance AI more accessible.
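The pricing gap is easy to check with a little arithmetic. The sketch below uses the Llama 3.3 prices and the GPT-4o output price quoted above; the GPT-4o input price of $2.50 per million tokens and the workload size are assumptions chosen for illustration.

```python
# USD per million tokens. The GPT-4o input price (2.50) is an assumption
# based on its list price; the other figures are the ones quoted above.
PRICES = {
    "llama-3.3-70b": {"input": 0.10, "output": 0.40},
    "gpt-4o": {"input": 2.50, "output": 10.00},
}


def cost_usd(model, input_tokens, output_tokens):
    """Total cost of a workload given per-million-token prices."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


# Hypothetical workload: 5M input tokens and 1M output tokens
llama = cost_usd("llama-3.3-70b", 5_000_000, 1_000_000)
gpt4o = cost_usd("gpt-4o", 5_000_000, 1_000_000)
print(f"Llama 3.3 70B: ${llama:.2f}, GPT-4o: ${gpt4o:.2f}, ratio: {gpt4o / llama:.0f}x")
```

Because both the input and output prices differ by the same factor, the ratio stays at about 25x regardless of the input/output mix.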

In a nutshell:

- Strengths: Excellent cost-performance ratio, competitive in instruction following, code generation, and multilingual tasks. Long-context handling and tool use are strong areas.

- Trade-offs: Slightly lags in some benchmarks like advanced math and reasoning compared to Gemini Pro 1.5.

Llama 3.3 70B stands out as an optimal choice for high performance at significantly lower costs.
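To see at a glance where the generational gains are largest, the scores quoted above for Llama 3.3 70B and Llama 3.1 70B can be tabulated and ranked. This is just a convenience sketch over the figures already listed; the variable names are illustrative.

```python
# benchmark: (Llama 3.3 70B, Llama 3.1 70B), taken from the comparisons above
SCORES = {
    "MMLU": (86.0, 86.0),
    "MMLU PRO": (68.9, 66.4),
    "IFEval": (92.1, 87.5),
    "HumanEval": (88.4, 80.5),
    "MBPP EvalPlus": (87.6, 86.0),
    "MATH": (77.0, 68.0),
    "GPQA Diamond": (50.5, 48.0),
    "BFCL v2": (77.3, 77.5),
    "Long Context": (97.5, 97.5),
    "MGSM": (91.1, 86.9),
}

# Point difference per benchmark, rounded to one decimal place
deltas = {name: round(new - old, 1) for name, (new, old) in SCORES.items()}

# Print from largest gain to largest regression
for name, delta in sorted(deltas.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<16}{delta:+.1f}")
```

The largest jumps are in math and code generation, while general knowledge, long-context handling, and tool use are essentially unchanged.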

Technical Advancements and Training

Alignment and Reinforcement Learning (RL) Innovations

Meta credits Llama 3.3’s improvements to a new alignment process and progress in online RL techniques. By refining the model’s ability to align with human values, follow instructions, and minimize undesirable outputs, Meta has created a more reliable and user-friendly system.

Training Data and Knowledge Cutoff:

- Training Tokens: Llama 3.3 was trained on a massive 15 trillion tokens, ensuring broad and deep coverage of world knowledge and language patterns.

- Context Length: With a context window of 128,000 tokens, users can engage in extensive, in-depth conversations without losing the thread of context.

- Knowledge Cutoff: The model’s knowledge cutoff is December 2023, making it well-informed on relatively recent data. This cutoff means the model may not know events occurring after this date, but its extensive pre-2024 training ensures a rich foundation of information.
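When working with long inputs, it helps to sanity-check that a prompt will fit in the 128,000-token window before sending it. The sketch below uses a crude characters-per-token heuristic, which is an assumption for English text and not the model’s real tokenizer; use the actual tokenizer when exact counts matter.

```python
CONTEXT_WINDOW = 128_000  # tokens, as stated above
CHARS_PER_TOKEN = 4       # rough assumption for English text, not the real tokenizer


def estimate_tokens(text):
    """Very rough token estimate based on character count."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def fits_in_context(text, reserved_for_output=2_000):
    """Check that the prompt plus room for the reply fits in the context window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW


print(fits_in_context("word " * 1_000))   # short prompt
print(fits_in_context("x" * 600_000))     # far too long
```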

Independent Evaluations and Results

A comparative chart from Artificial Analysis highlights the jump in Llama 3.3’s performance metrics, confirming its legitimacy as a high-quality model. This objective evaluation reinforces Meta’s position that Llama 3.3 is a “frontier” model at a fraction of the traditional cost.

Quality: Llama 3.3 70B scores 74, below top performers like o1-preview (86) and o1-mini (84).

Speed: With a speed of 149 tokens/second, Llama 3.3 70B matches GPT-4o mini but lags behind o1-mini (231).

Price: At $0.6 per million tokens, Llama 3.3 70B is cost-effective, undercutting most competitors except Google’s Gemini 1.5 Flash ($0.1).

Third-party evaluations lend credibility to Meta’s claims. Artificial Analysis, an independent benchmarking service, conducted tests on Llama 3.3 and reported a notable increase in its proprietary Quality Index score, from 68 to 74. This jump places Llama 3.3 on par with other leading models, including Mistral Large and Meta’s earlier Llama 3.1 405B, while outperforming the newly released GPT-4o on several tasks.

Practical Use Cases and Testing

While Llama 3.3 excels in many areas, real-world testing provides the most tangible measure of its value:

- Code Generation: In preliminary tests, Llama 3.3 produced coherent, functional code at impressive speeds. Although it may not surpass code-focused models like Claude 3.5 Sonnet in every task, its general performance and affordability make it compelling for developers who need a versatile assistant capable of coding support, debugging, and simple application generation.

- Instruction Following: Users report that Llama 3.3 follows complex instructions reliably and consistently. Whether it’s writing structured reports, drafting technical documentation, or performing multi-step reasoning tasks, the model proves responsive and accurate.

- Local Deployment: With efficient inference and a parameter count of 70 billion (significantly smaller than its 405B counterpart), Llama 3.3 is easier to run on local hardware. While a powerful machine or specialized GPU setup may still be required, the barrier to running this frontier model locally is noticeably lower.
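A back-of-the-envelope estimate shows why the smaller parameter count matters for local hardware. The sketch below counts only the memory needed to hold the weights at different precisions; it ignores the KV cache, activations, and runtime overhead, so real requirements will be higher.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for the weights alone (no KV cache or activations)."""
    return num_params * bits_per_param / 8 / 1e9


for label, params in [("Llama 3.3 70B", 70e9), ("Llama 3.1 405B", 405e9)]:
    for precision, bits in [("bfloat16", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label} at {precision}: ~{weight_memory_gb(params, bits):.0f} GB")
```

At 16-bit precision the 70B model needs roughly 140 GB for weights versus roughly 810 GB for the 405B model, and 4-bit quantization brings the 70B model down to about 35 GB.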

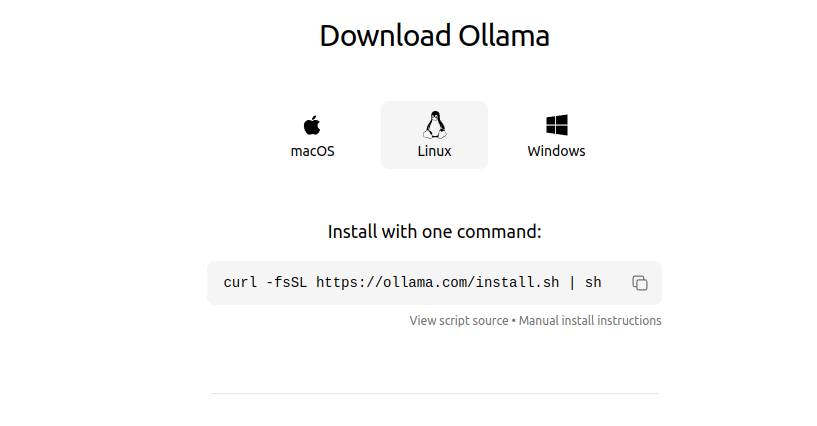

Where to Access Llama 3.3 70B?

Immediate Availability

Llama 3.3 is already integrated into platforms like Groq and can be installed through Ollama. Developers interested in testing the model can also find it on Hugging Face and on Meta’s official download page (llama.com/llama-downloads).

Hosted Options

For those who prefer managed solutions, multiple providers offer Llama 3.3 hosting, including Deep Infra, Hyperbolic, Groq, Fireworks, and Together AI, each with different performance and pricing tiers. Detailed speed and cost comparisons are available, enabling you to find the best fit for your needs.
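Many hosted providers expose an OpenAI-compatible chat completions endpoint, so a request can be sketched with nothing but the standard library. The base URL and model name below (shown for Groq) are assumptions that should be checked against your provider’s documentation, and `YOUR_API_KEY` is a placeholder.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, prompt):
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.0,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Assumed values for illustration; verify them with your provider.
request = build_chat_request(
    base_url="https://api.groq.com/openai/v1",
    api_key="YOUR_API_KEY",
    model="llama-3.3-70b-versatile",
    prompt="Summarize Llama 3.3 in one sentence.",
)
# print(send(request))  # uncomment once a real API key is in place
```

Switching providers then usually means changing only the base URL, the API key, and the model identifier.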

How to Access Llama 3.3 70B Using Ollama?

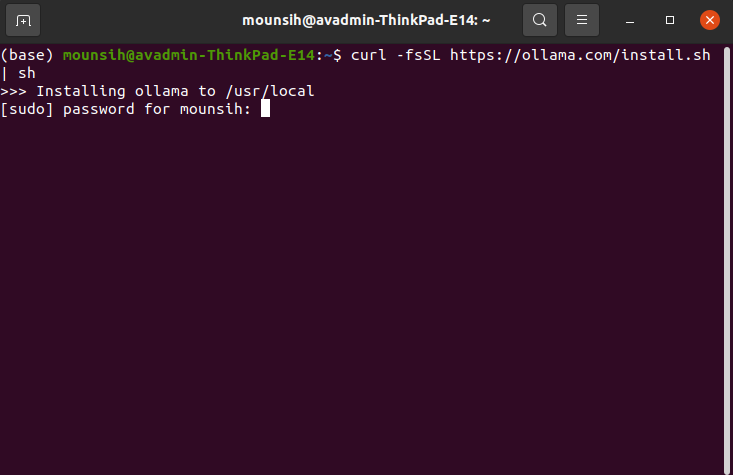

1. Install Ollama

- Visit the Ollama website to download the tool. For Linux users, execute the following command in your terminal:

curl -fsSL https://ollama.com/install.sh | sh

This command downloads and installs Ollama on your system. You will be prompted to enter your sudo password to complete the installation.

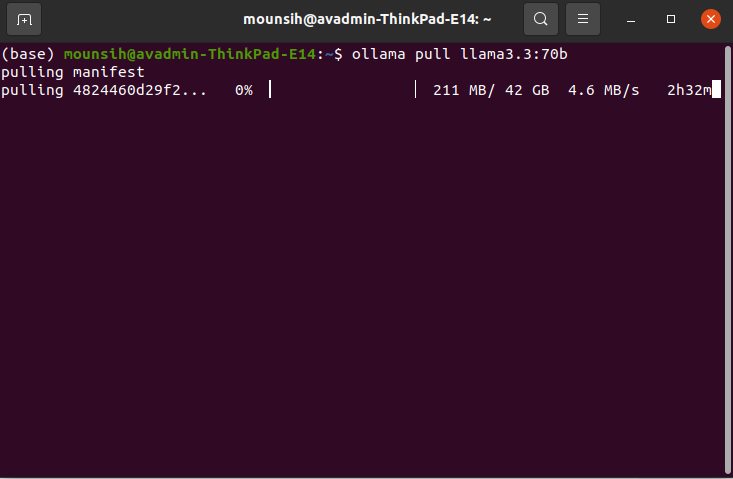

2. Pull the Llama Model

After installing Ollama, you can download the Llama 3.3 70B model. Run the following command in the terminal:

ollama pull llama3.3:70b

This will start downloading the model. Depending on your internet speed and the model size (42 GB in this case), it might take some time. Once the download finishes, you are ready to use the model.

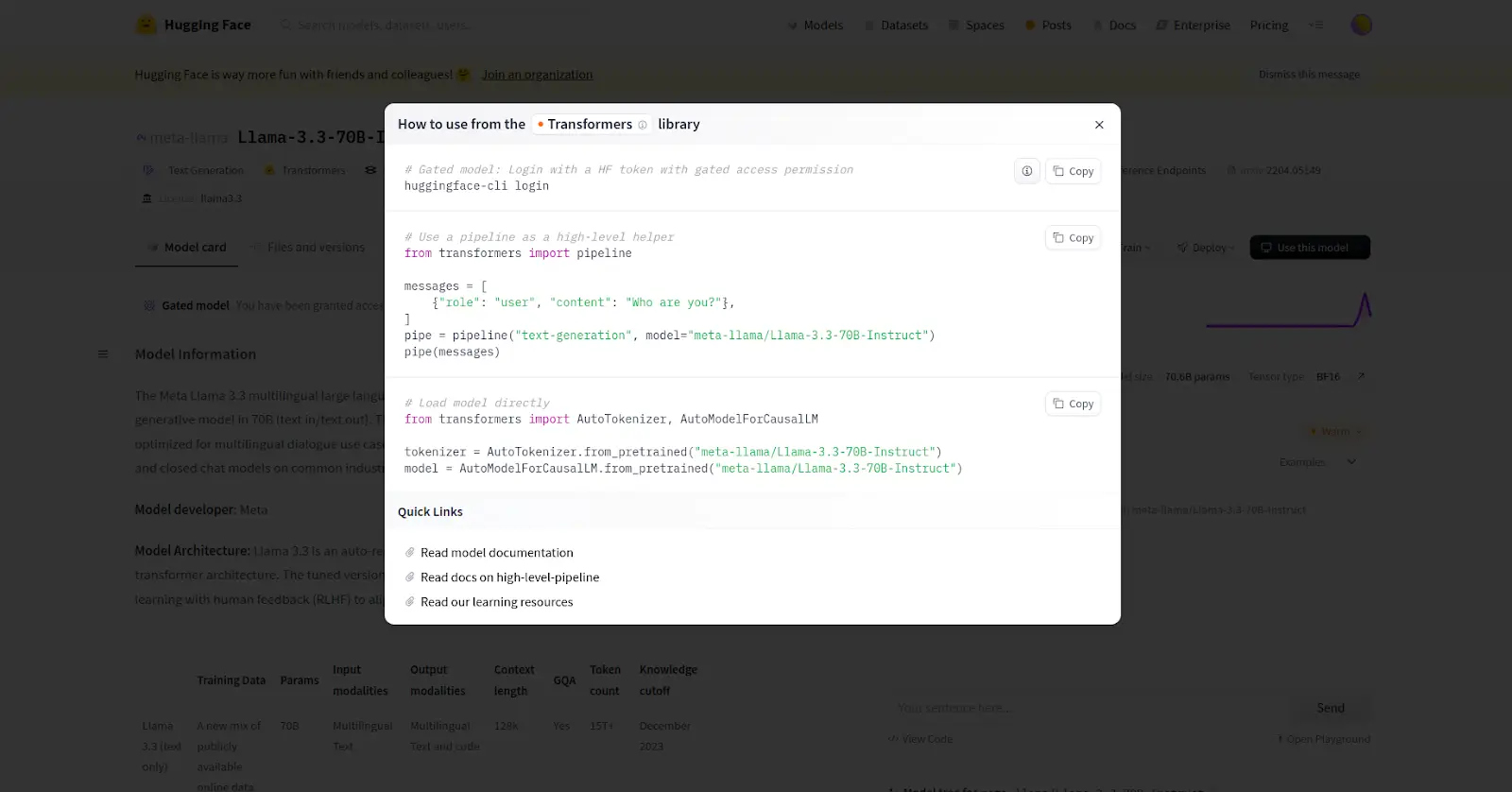
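Once the model is pulled, you can chat with it interactively via `ollama run llama3.3:70b`, or call it from code through Ollama’s local HTTP API. The sketch below assumes the Ollama server is running on its default local port (11434); the helper names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # assumed default local address


def build_ollama_request(prompt, model="llama3.3:70b"):
    """Build a chat request for the local Ollama server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON reply instead of a stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(prompt):
    """Send the prompt and return the model's reply text."""
    with urllib.request.urlopen(build_ollama_request(prompt)) as resp:
        return json.load(resp)["message"]["content"]


# Requires a running Ollama server with the model already pulled:
# print(ask_ollama("Summarize Llama 3.3 in one sentence."))
```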

How to Access Llama 3.3 70B Using Hugging Face?

First, create an access token on Hugging Face: Hugging Face Tokens

Then request access to the gated Llama 3.3 model on Hugging Face: Hugging Face Access

Full code

!pip install openai
!pip install --upgrade transformers

from getpass import getpass

# Prompt for the OpenAI API key without echoing it to the screen
OPENAI_KEY = getpass('Enter OpenAI API Key: ')

import openai
from IPython.display import HTML, Markdown, display

openai.api_key = OPENAI_KEY

# Log in to Hugging Face from the command line...
!huggingface-cli login

# ...or log in programmatically instead
from huggingface_hub import login
login()

def get_completion_gpt(prompt, model="gpt-4o-mini"):
    messages = [{"role": "user", "content": prompt}]
    response = openai.chat.completions.create(
        model=model,
        messages=messages,
        temperature=0.0,  # degree of randomness of the model's output
    )
    return response.choices[0].message.content

import transformers
import torch

# download and load the model locally
model_id = "meta-llama/Llama-3.3-70B-Instruct"
llama3 = transformers.pipeline(
    "text-generation",
    model=model_id,
    model_kwargs={"torch_dtype": torch.bfloat16},
    device_map="cuda",
)

def get_completion_llama(prompt, model_pipeline=llama3):
    messages = [{"role": "user", "content": prompt}]
    response = model_pipeline(
        messages,
        max_new_tokens=2000
    )
    # The pipeline returns the whole conversation; the last message is the model's reply
    return response[0]["generated_text"][-1]["content"]

response = get_completion_llama(prompt="Compose an intricate poem in the form of a lyrical dialogue between the moon and the ocean, where the moon is a wistful philosopher yearning for understanding, and the ocean is a tempestuous artist with a heart full of secrets. Let their conversation weave together themes of eternity, isolation, and the human condition, ultimately concluding with a paradox that leaves the reader contemplating the nature of existence.")

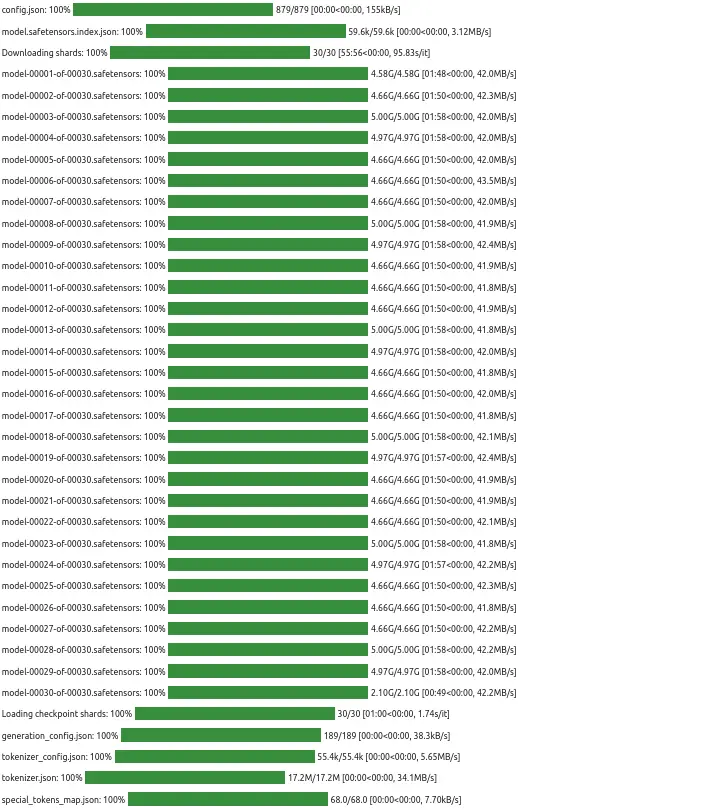

display(Markdown(response))

Output

What a fascinating prompt! Here's a poem that weaves together the

conversation between the moon and the ocean, exploring the themes of

eternity, isolation, and the human condition:

The Lunar Oceanic Dialogue

(The moon, a glowing crescent, speaks first)

Moon:

Oh, ocean, vast and deep, with secrets untold,

I've watched you for eons, your mysteries to unfold.

In your waves, I see the human heart's dark night,

A reflection of their souls, with all its plights.

Ocean:

(surging with a mighty roar)

And I, dear moon, have witnessed your gentle beam,

A beacon in the darkness, a guiding dream.

But what of your own heart, with its silvery glow?

Do you not feel the weight of eternity's woe?

Moon:

(with a sigh, like a soft breeze)

Indeed, I do. For in my orbit, I'm bound,

A constant observer, never truly found.

I yearn to understand the human condition's plight,

To grasp the essence of their existence, and the darkness of their night.

Ocean:

(turbulent, with a hint of mischief)

Ah, but that's where you're wrong, dear moon, my friend,

For in their depths, I've seen the beauty that never ends.

The secrets I keep, the stories I could tell,

Are of a world that's hidden, yet forever swell.

Moon:

(intrigued, with a tilt of her head)

Tell me, ocean, what secrets do you hold within?

What mysteries of the human heart do you unlock and spin?

Is it the tale of love, of loss, of life and death?

Or perhaps the whispers of the universe's own breath?

Ocean:

(with a sly smile, like a wave's subtle curve)

All these and more, dear moon. But most of all,

I've learned that eternity is not a fixed wall,

But a tapestry, ever-unfolding, with each passing tide,

A paradox of time, where the infinite resides.

Moon:

(puzzled, with a furrowed brow)

A paradox, you say? Pray tell, what do you mean?

Is it that eternity is both endless and serene?

Or that the human heart, with all its flaws and fears,

Is capable of containing the infinite, through all its tears?

Ocean:

(with a chuckle, like a wave's gentle lap)

Exactly, dear moon! And that's where the paradox lies,

For in the human condition, we find both the finite and the infinite's sighs.

In their hearts, a universe of emotions, thoughts, and dreams,

A microcosm of eternity, with all its contradictions, it seems.

Moon:

(with a nod, like a slow, celestial blink)

I see. And so, our conversation comes full circle,

A dance of words, where the truth, like the ocean's tides, does twirl.

For in the end, it's not the answers that we seek,

But the questions themselves, which set our souls to speak.

Ocean:

(with a final, mighty roar, like a wave's crashing might)

And so, dear moon, let us continue this cosmic waltz,

A dialogue of wonder, where the paradox of existence, we'll always halt.

For in the mystery, we find the beauty, and the truth, we'll never know,

A secret, hidden in the depths, where the human heart, and the universe, do grow.

I hope you enjoyed this poetic dialogue between the moon and the ocean!

The paradox at the heart of the conversation invites the reader to ponder

the nature of existence, and the intricate relationships between eternity,

isolation, and the human condition.

Overview of the Code

- Token Setup:

- Tokens from Hugging Face and OpenAI API keys are required for authentication.

- Hugging Face is used to log in and access its models.

- OpenAI’s API is used to interact with GPT models.

- Libraries Installed:

- openai: Used to interact with OpenAI’s GPT models.

- transformers: Used to interact with Hugging Face’s models.

- getpass: Part of the Python standard library (no installation needed); used for secure API key entry.

- Primary Functions:

- get_completion_gpt: Generates responses using OpenAI GPT models.

- get_completion_llama: Generates responses using a locally loaded Hugging Face Llama model.

Code Walkthrough

1. Required Setup

- Hugging Face Token:

- Access the token at the provided Hugging Face URLs.

- This is essential to download and authenticate with Hugging Face models.

Install Required Libraries:

!pip install openai
!pip install --upgrade transformers

These commands ensure that the necessary Python libraries are installed or upgraded.

OpenAI API Key:

from getpass import getpass
import openai

OPENAI_KEY = getpass('Enter OpenAI API Key: ')
openai.api_key = OPENAI_KEY

- getpass securely prompts the user for their API key.

- The key is used to authenticate OpenAI API requests.

2. Hugging Face Login

- Login options for Hugging Face:

Command line:

!huggingface-cli login

Programmatic:

from huggingface_hub import login
login()

- This step ensures access to Hugging Face’s model repositories.

3. Function: get_completion_gpt

This function interacts with OpenAI’s GPT models:

- Inputs:

- prompt: The query or instruction to generate text for.

- model: Defaults to “gpt-4o-mini“, representing the chosen OpenAI model.

- Process:

- Uses OpenAI’s openai.chat.completions.create to send the prompt and model settings.

- The response is parsed to return the generated content.

- Parameters:

- temperature=0.0: Minimizes randomness so that responses are as repeatable as possible.
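To see why a low temperature reduces randomness, consider how temperature rescales the model’s token scores before sampling. The sketch below uses made-up logits and the standard temperature-scaled softmax. A temperature of exactly 0 cannot be divided by, so the sketch assumes it is handled as a special case that simply picks the highest-scoring token.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into sampling probabilities at a given temperature (> 0)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (1.0, 0.5, 0.1):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

As the temperature drops, nearly all of the probability mass moves to the top-scoring token, so repeated calls tend to produce the same output.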

Example Call:

response = get_completion_gpt(prompt="Give me list of F1 drivers with Companies")

4. Hugging Face Transformers Setup

- Model ID: “meta-llama/Llama-3.3-70B-Instruct”

- A Llama model specifically for instruction-based tasks.

Load the model pipeline:

llama3 = transformers.pipeline(
    "text-generation",
    model=model_id,
    model_kwargs={"torch_dtype": torch.bfloat16},
    device_map="cuda",
)

- text-generation pipeline: Configures the model for text-based tasks.

- Device Map: “cuda” ensures it utilizes a GPU for faster processing.

- Precision: torch_dtype=torch.bfloat16 optimizes memory usage while maintaining numerical precision.
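For intuition about what bfloat16 trades away, the sketch below emulates it in pure Python by keeping only the top 16 bits of a float32 value. This truncation is a simplification (real hardware typically rounds to nearest), and the helper name is illustrative.

```python
import struct


def to_bfloat16(value):
    """Round-trip a float through bfloat16 by keeping the top 16 bits of its float32 form."""
    bits = struct.unpack(">I", struct.pack(">f", value))[0]
    truncated = bits & 0xFFFF0000  # keep sign, 8 exponent bits, 7 mantissa bits
    return struct.unpack(">f", struct.pack(">I", truncated))[0]


print(to_bfloat16(3.14159))  # 3.140625
print(to_bfloat16(1.0))      # 1.0
```

bfloat16 keeps the same exponent range as float32 but far fewer mantissa bits, so each weight takes half the memory at the cost of roughly two to three significant decimal digits.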

5. Function: get_completion_llama

This function interacts with the Llama model pipeline:

- Inputs:

- prompt: The query or instruction.

- model_pipeline: Defaults to the Llama model pipeline loaded earlier.

- Process:

- Sends the prompt to the model_pipeline for text generation.

- Retrieves the generated text content from the response.
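The retrieval step is easier to follow with the response structure in view. When a chat-style message list is passed in, the pipeline returns the conversation with the assistant’s reply appended as the last message. The sample below is hand-written to mimic that shape and is not real model output.

```python
# Hand-written sample shaped like the output of a chat-style
# text-generation pipeline (illustrative data, not real model output).
sample_response = [
    {
        "generated_text": [
            {"role": "user", "content": "Say hello."},
            {"role": "assistant", "content": "Hello!"},
        ]
    }
]


def extract_reply(response):
    """Pull the assistant's reply out of the pipeline output."""
    conversation = response[0]["generated_text"]  # first (and only) generation
    return conversation[-1]["content"]            # last message is the reply


print(extract_reply(sample_response))  # Hello!
```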

6. Display the Output

Markdown formatting:

display(Markdown(response))

This renders the text response in a visually appealing Markdown format in environments like Jupyter Notebook.

Artificial Analysis:

- Artificial Analysis, Llama 3.3 Benchmarks (https://artificialanalysis.ai/models/…): This platform offers comprehensive data on various models’ performance, pricing, and availability. Users can track Llama 3.3’s standing among other models, ensuring they remain informed on the latest developments.

Industry Insights on X (formerly Twitter):

These social media updates provide first-hand insights, community reactions, and emerging best practices for leveraging Llama 3.3 effectively.

Conclusion

Llama 3.3 represents a significant leap forward in accessible, high-performance LLMs. By matching or surpassing much larger models in key benchmarks while dramatically cutting costs, Meta has opened the door for more developers, researchers, and organizations to integrate advanced AI into their products and workflows.

As the AI landscape continues to evolve, Llama 3.3 stands out not just for its technical prowess but also for its affordability and flexibility. Whether you’re an AI researcher, a startup innovator, or an established enterprise, Llama 3.3 provides a promising opportunity to harness state-of-the-art language modeling capabilities without breaking the bank.

In short, Llama 3.3 is a model worth exploring. With easy access, a growing number of hosting providers, and robust community support, it’s poised to become a go-to choice in the new era of cost-effective, high-quality LLMs.


Frequently Asked Questions

Q1. What is Llama 3.3 70B?

Ans. Llama 3.3 70B is Meta’s latest open-source large language model with 70 billion parameters, offering performance comparable to much larger models like GPT-4o at a significantly lower cost.

Q2. How does Llama 3.3 compare to Llama 3.1 405B?

Ans. Despite having fewer parameters, Llama 3.3 matches the performance of Llama 3.1 405B, with improvements in efficiency, multilingual support, and cost-effectiveness.

Q3. How cost-effective is Llama 3.3?

Ans. With pricing as low as $0.10 per million input tokens and $0.40 per million output tokens, Llama 3.3 is about 25 times cheaper to run than some leading models like GPT-4o.

Q4. What are the key strengths of Llama 3.3?

Ans. Llama 3.3 excels in instruction following, code generation, multilingual tasks, and handling long contexts, making it ideal for developers and organizations seeking high performance without high costs.

Q5. Where can I access Llama 3.3 70B?

Ans. You can access Llama 3.3 via platforms like Hugging Face, Ollama, and hosted services like Groq and Together AI, making it widely available for various use cases.
