Large language models (LLMs) have transformed artificial intelligence applications, powering tasks such as language translation, virtual assistants, and code generation. These models rely on resource-intensive infrastructure, particularly GPUs with high-bandwidth memory, to handle their computational demands. However, providing high-quality service to numerous users simultaneously presents significant challenges. Efficient allocation of these limited resources is critical to meeting service level objectives (SLOs) for time-sensitive metrics, ensuring that the system can serve more users without compromising performance.

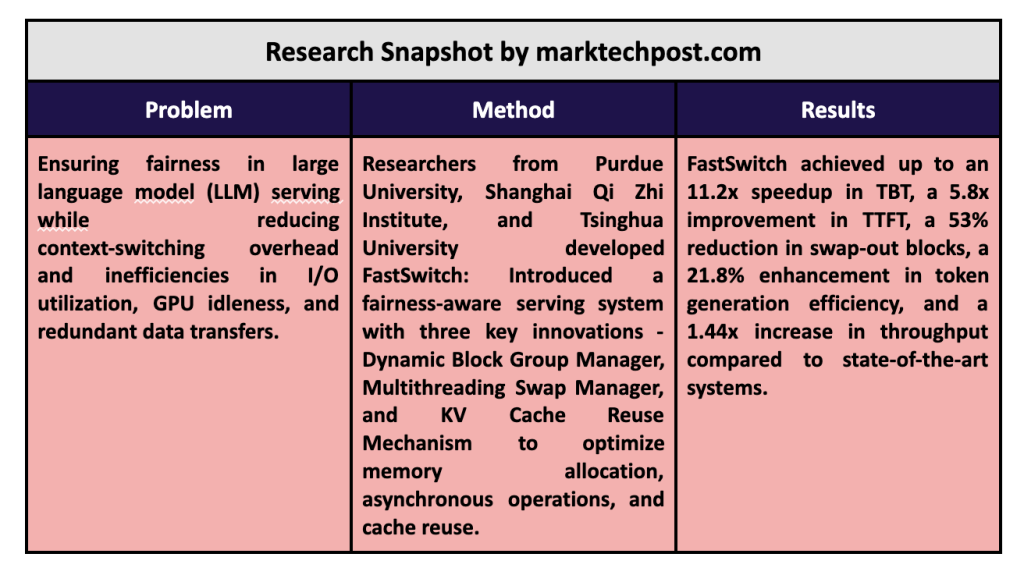

A persistent problem in LLM serving systems is distributing resources fairly while maintaining efficiency. Existing systems often prioritize performance and neglect fairness requirements such as balancing latency across users. Preemptive scheduling mechanisms, which dynamically adjust request priorities, address this problem. However, these mechanisms introduce context-switching overheads, such as GPU idleness and inefficient I/O utilization, which degrade key performance indicators such as time to first token (TTFT) and time between tokens (TBT). For example, the stall time caused by preemption in high-stress scenarios can reach up to 59.9% of P99 latency, significantly degrading the user experience.
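To make the two metrics concrete, here is a minimal sketch of how TTFT and TBT could be derived from per-token timestamps. The function names and the timestamps are hypothetical and chosen only for illustration; the large gap in the sample data stands in for a preemption stall.

```python
from typing import List

def ttft_ms(arrival_ms: int, token_ms: List[int]) -> int:
    """Time to first token: delay from request arrival to the first output token."""
    return token_ms[0] - arrival_ms

def tbt_ms(token_ms: List[int]) -> List[int]:
    """Time between tokens: gaps between consecutive output tokens."""
    return [later - earlier for earlier, later in zip(token_ms, token_ms[1:])]

# Hypothetical timestamps in milliseconds; the jump from 300 to 900
# stands in for a stall while the request was preempted.
arrival = 0
tokens = [200, 250, 300, 900, 950]

first_token_delay = ttft_ms(arrival, tokens)
gaps = tbt_ms(tokens)
worst_gap = max(gaps)
print(first_token_delay, gaps, worst_gap)
```

Tail metrics such as P99 TBT are percentiles over these gaps across many requests, which is why a small number of long stalls can dominate them.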

Current solutions, such as vLLM, rely on paging-based memory management to address GPU memory limitations by swapping data between GPU memory and CPU memory. While these approaches improve performance, they face limitations. Problems such as fragmented memory allocation, low I/O bandwidth utilization, and redundant data transfers during multi-turn conversations persist, undermining their effectiveness. For example, vLLM's fixed 16-token block size results in suboptimal transfer granularity, reducing PCIe bandwidth efficiency and increasing latency during preemptive context switching.
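The effect of transfer granularity on bandwidth can be illustrated with a simple cost model in which every copy pays a fixed setup cost plus a size-dependent cost. This is a rough sketch, not the paper's measurement methodology; the overhead and peak bandwidth values below are assumptions picked for illustration.

```python
def effective_bandwidth(transfer_bytes: int, overhead_s: float, peak_bytes_per_s: float) -> float:
    """Bytes per second actually achieved when each transfer pays a fixed setup cost."""
    return transfer_bytes / (overhead_s + transfer_bytes / peak_bytes_per_s)

# Illustrative assumptions, not measurements from the paper or from vLLM.
OVERHEAD_S = 10e-6   # assumed fixed cost per copy call, in seconds
PEAK = 16e9          # assumed peak link bandwidth, in bytes per second

small = effective_bandwidth(128 * 1024, OVERHEAD_S, PEAK)        # one small block per copy
large = effective_bandwidth(8 * 1024 * 1024, OVERHEAD_S, PEAK)   # many blocks per copy
print(f"small: {small / PEAK:.0%} of peak, large: {large / PEAK:.0%} of peak")
```

Under this model, small copies spend a large share of their time on fixed overhead, so moving the same data in fewer, larger transfers gets closer to the link's peak rate.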

Researchers from Purdue University, Shanghai Qi Zhi Institute, and Tsinghua University developed FastSwitch, a fairness-aware LLM serving system that addresses inefficiencies in context switching. FastSwitch features three main optimizations: a dynamic block group manager, a multi-threaded swap manager, and a KV cache reuse mechanism. These innovations combine to improve I/O utilization, reduce GPU idle time, and minimize redundant data transfers. The system design is based on vLLM but focuses on coarse-grained memory allocation and asynchronous operations to improve resource management.

FastSwitch's dynamic block group manager optimizes memory allocation by grouping contiguous blocks, increasing transfer granularity. This approach reduces context-switching latency by up to 3.11x compared to existing methods. The multi-threaded swap manager improves token generation efficiency by enabling asynchronous swapping, reducing GPU idle time. It incorporates fine-grained synchronization to avoid conflicts between new and ongoing requests, ensuring seamless operation during overlapping processes. Meanwhile, the KV cache reuse mechanism preserves partially valid data in CPU memory, reducing preemption latency by avoiding redundant KV cache transfers. These components collectively address key challenges and improve the overall performance of LLM serving systems.
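The benefit of grouping contiguous blocks can be sketched as follows: when a request's blocks sit next to each other, they can be described as a few (start, length) runs, and each run can be moved in one copy. The `coalesce` helper and the block IDs are hypothetical and are not taken from the FastSwitch or vLLM code.

```python
from typing import List, Tuple

def coalesce(block_ids: List[int]) -> List[Tuple[int, int]]:
    """Merge unique block IDs into (start, length) runs of contiguous blocks."""
    runs: List[Tuple[int, int]] = []
    for block in sorted(block_ids):
        if runs and block == runs[-1][0] + runs[-1][1]:
            start, length = runs[-1]
            runs[-1] = (start, length + 1)   # extend the current run
        else:
            runs.append((block, 1))          # start a new run
    return runs

scattered = [7, 3, 12, 9]            # fragmented allocation: one copy per block
grouped = [4, 5, 6, 7, 20, 21]       # group-based allocation: one copy per run

scattered_runs = coalesce(scattered)
grouped_runs = coalesce(grouped)
print(len(scattered_runs), len(grouped_runs))
```

In this toy example the fragmented layout needs four copies for four blocks, while the grouped layout moves six blocks in two copies.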

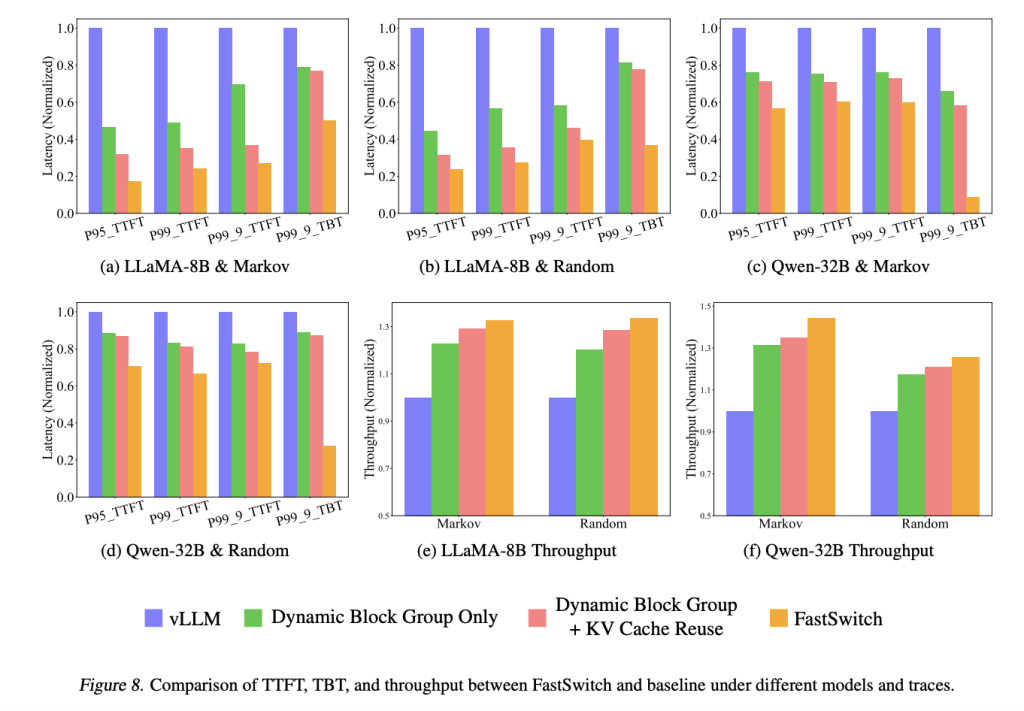

The researchers evaluated FastSwitch using the LLaMA-8B and Qwen-32B models on GPUs such as the NVIDIA A10 and A100. Test scenarios included high-frequency priority updates and multi-turn conversations derived from the ShareGPT dataset, which averages 5.5 turns per conversation. FastSwitch outperformed vLLM on several metrics. It achieved speedups of 4.3-5.8x on P95 TTFT and 3.6-11.2x on P99.9 TBT across different models and workloads. Additionally, FastSwitch improved throughput by up to 1.44x, demonstrating its ability to handle complex workloads efficiently. The system also substantially reduced context-switching overhead, improving I/O utilization by 1.3x and GPU utilization by 1.42x compared to vLLM.

FastSwitch's optimizations resulted in tangible benefits. For example, its KV cache reuse mechanism reduced the number of swapped blocks by 53%, significantly reducing latency. The multi-threaded swap manager improved token generation efficiency, achieving a 21.8% improvement in P99 latency compared to baseline systems. The dynamic block group manager maintained transfer granularity by allocating memory in larger chunks, balancing efficiency and utilization. These advancements highlight FastSwitch's ability to maintain fairness and efficiency in high-demand environments.
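A simplified way to see why KV cache reuse cuts swap volume: if the CPU copy made at an earlier preemption is kept, a later preemption only needs to copy the blocks added since then. The sketch below assumes the KV cache grows append-only within a conversation and that the CPU copy is never evicted; the `RequestKV` class is hypothetical, and the real system must also handle partially invalidated copies.

```python
from dataclasses import dataclass

@dataclass
class RequestKV:
    total_blocks: int = 0       # logical KV blocks currently held by the request
    cpu_valid_blocks: int = 0   # leading blocks whose CPU copy is still valid

    def append_blocks(self, count: int) -> None:
        """The conversation grew, so new KV blocks exist only on the GPU."""
        self.total_blocks += count

    def swap_out(self) -> int:
        """Return how many blocks must be copied to CPU, then mark all as backed up."""
        to_copy = self.total_blocks - self.cpu_valid_blocks
        self.cpu_valid_blocks = self.total_blocks
        return to_copy

kv = RequestKV()
kv.append_blocks(10)
first_swap = kv.swap_out()    # nothing is on the CPU yet, so all 10 blocks move
kv.append_blocks(4)           # next turn of the conversation adds 4 blocks
second_swap = kv.swap_out()   # only the 4 new blocks move
print(first_swap, second_swap)
```

Without reuse, the second preemption would copy all 14 blocks again; with the retained copy it moves only the 4 new ones.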

Key findings from the research include:

- Dynamic block group manager: Improved I/O bandwidth utilization through larger memory transfers, reducing context-switching latency by 3.11x.

- Multi-threaded swap manager: Improved token generation efficiency by 21.8% at P99 latency, minimizing GPU idle time through asynchronous operations.

- KV cache reuse mechanism: Reduced swap volume by 53%, enabling efficient reuse of cached data and reducing preemption latency.

- Performance metrics: FastSwitch achieved speedups of up to 11.2x in TBT and improved throughput by 1.44x under high-priority workloads.

- Scalability: Solid performance demonstrated on models such as LLaMA-8B and Qwen-32B, showing versatility in various operational scenarios.
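The asynchronous swapping summarized in the findings above can be sketched with a background worker thread: the swap is started, token generation continues while it is in flight, and the caller synchronizes only when it needs the result. Both `swap_out` and `decode_step` are hypothetical stand-ins that sleep or count rather than touch a GPU, so this shows the control flow only, not FastSwitch's implementation.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def swap_out(request_id: str, num_blocks: int) -> str:
    """Stand-in for a GPU-to-CPU copy; sleeps instead of moving data."""
    time.sleep(0.01 * num_blocks)
    return request_id

def decode_step(steps_so_far: int) -> int:
    """Stand-in for one token-generation iteration of the running batch."""
    return steps_so_far + 1

with ThreadPoolExecutor(max_workers=1) as pool:
    pending = pool.submit(swap_out, "req-A", 5)   # start the swap in the background
    steps_done = 0
    for _ in range(3):                            # generation continues without waiting
        steps_done = decode_step(steps_done)
    finished = pending.result()                   # synchronize before reusing the blocks

print(finished, steps_done)
```

The explicit `result()` call marks the synchronization point: blocks belonging to the swapped request should not be handed to another request until the copy has completed.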

In conclusion, FastSwitch addresses fundamental inefficiencies in LLM serving by introducing optimizations that balance fairness and efficiency. By reducing context-switching overhead and improving resource utilization, it supports high-quality, scalable service delivery in multi-user environments. These advancements make it a promising solution for modern LLM deployments.


Sana Hassan, a consulting intern at Marktechpost and a dual degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.
