The evolution of machine learning has brought significant advances in language models, which are essential for tasks such as text generation and question answering. Among them, transformers and state space models (SSMs) are central, but handling long sequences efficiently remains a challenge. Self-attention in standard transformers scales quadratically with sequence length, so computational and memory demands become prohibitive as sequences grow. To address these issues, researchers and organizations have explored alternative architectures, such as Mamba, a state space model with linear complexity that provides scalability and efficiency for long-context tasks.
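To make the scaling difference concrete, the following minimal sketch compares rough operation counts for one self-attention layer and one linear recurrent scan. The cost formulas are simplified assumptions that ignore constant factors, and the function names, the model width of 1024, and the state size of 16 are illustrative choices rather than values from the paper.

```python
def attention_cost(seq_len: int, d_model: int) -> int:
    """Rough multiply-add count for the score matrix and the weighted sum: 2 * L^2 * d."""
    return 2 * seq_len * seq_len * d_model


def ssm_scan_cost(seq_len: int, d_model: int, d_state: int = 16) -> int:
    """Rough cost of one recurrent state update per token: L * d * N."""
    return seq_len * d_model * d_state


# The ratio grows linearly with sequence length because attention is quadratic in L.
for seq_len in (1_024, 8_192, 65_536):
    ratio = attention_cost(seq_len, 1024) / ssm_scan_cost(seq_len, 1024)
    print(f"L={seq_len:>6}: attention/scan cost ratio is about {ratio:.0f}x")
```

Doubling the sequence length quadruples the attention estimate but only doubles the scan estimate, which is the gap that motivates architectures like Mamba.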

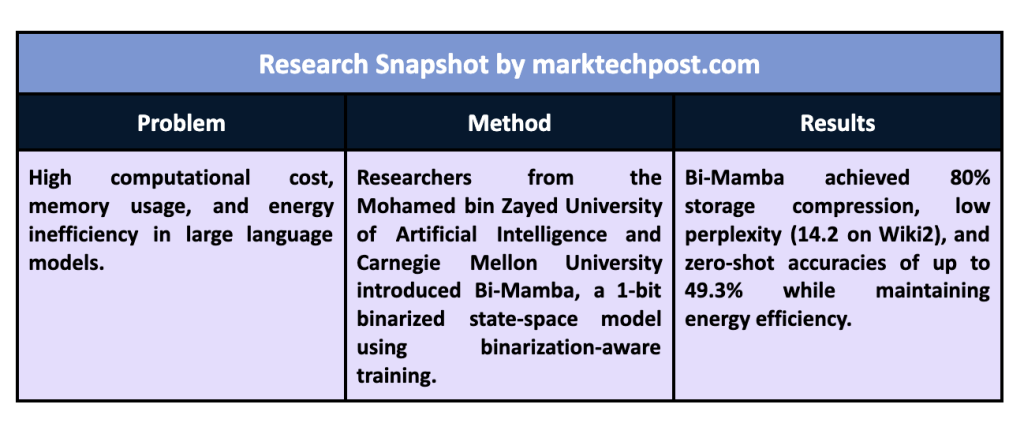

Large-scale language models often face challenges in managing computational costs, especially as they scale to billions of parameters. For example, while Mamba offers linear-complexity advantages, its growing size still results in significant energy consumption and training costs, making deployment difficult. These limitations are compounded by the high resource demands of models such as GPT-based architectures, which are traditionally trained and served at full precision (here meaning 16-bit formats such as FP16 or BF16). Furthermore, as the demand for efficient and scalable AI grows, exploring extreme quantization methods has become critical for practical deployment in resource-constrained environments.

Researchers have explored techniques such as pruning, low-bit quantization, and key-value cache optimizations to mitigate these challenges. Quantization, which reduces the bit width of model weights, has shown promising results in compressing models without substantial performance degradation. However, most of these efforts focus on transformer-based models. The behavior of SSMs, particularly Mamba, under extreme quantization remains largely unexplored, leaving a gap in the development of scalable and efficient state space models for real-world applications.
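As a rough illustration of what reducing the bit width means, the sketch below applies a generic symmetric round-to-nearest quantizer to a handful of weights. This is a textbook scheme used here as an assumption for illustration; it is not the method used by Bi-Mamba, and the function name `quantize_symmetric` is hypothetical.

```python
def quantize_symmetric(weights, bits):
    """Round weights to a signed integer grid with `bits` bits, then map back to floats."""
    if bits < 2:
        raise ValueError("1-bit weights need sign-based binarization instead")
    qmax = 2 ** (bits - 1) - 1                      # e.g. 7 for 4 bits, 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0  # one shared step size
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    dequantized = [c * scale for c in codes]
    return dequantized, codes, scale


weights = [0.5, -1.0, 0.25, 0.0]
for bits in (8, 4, 2):
    dequantized, codes, scale = quantize_symmetric(weights, bits)
    worst = max(abs(w - d) for w, d in zip(weights, dequantized))
    print(f"{bits}-bit codes {codes}, worst-case error {worst:.4f}")
```

Fewer bits mean a coarser grid and a larger rounding error, which is why pushing all the way to 1 bit usually calls for training the model with binarization in the loop rather than rounding after the fact.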

Researchers from the Mohamed bin Zayed University of Artificial Intelligence and Carnegie Mellon University presented Bi-Mamba, a scalable 1-bit Mamba architecture designed for high-efficiency, low-memory scenarios. The approach applies binarization-aware training to the Mamba state space framework, enabling extreme quantization while maintaining competitive performance. Bi-Mamba was developed at model sizes of 780 million, 1.3 billion, and 2.7 billion parameters and trained from scratch using an autoregressive distillation loss. Training is guided by high-precision teacher models, such as LLaMA2-7B, which supply the target distributions the binarized model learns to match.
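A minimal sketch of what an autoregressive distillation loss can look like: at every position, the student's next-token distribution is scored against the teacher's with cross-entropy, and the result is averaged over positions. The exact formulation in the paper may differ; the function names and the tiny three-token vocabulary are assumptions for illustration only.

```python
import math


def log_softmax(logits):
    """Numerically stable log-probabilities for one position."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def distillation_loss(teacher_logits, student_logits):
    """Mean over positions of the cross-entropy H(teacher, student)."""
    total = 0.0
    for t_row, s_row in zip(teacher_logits, student_logits):
        p_teacher = [math.exp(lp) for lp in log_softmax(t_row)]
        log_p_student = log_softmax(s_row)
        total -= sum(pt * ls for pt, ls in zip(p_teacher, log_p_student))
    return total / len(teacher_logits)


# Two positions, vocabulary of three tokens (toy values).
teacher = [[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]]
student = [[1.5, 0.7, -0.5], [0.0, 0.0, 2.0]]
print(f"distillation loss: {distillation_loss(teacher, student):.4f}")
```

The loss is smallest when the student reproduces the teacher's distribution exactly, so minimizing it pulls the 1-bit model toward the behavior of the high-precision teacher.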

The Bi-Mamba architecture selectively binarizes its linear modules while keeping other components at full precision to balance efficiency and performance. The input and output projections are binarized using FBI-Linear modules, which integrate learnable scale and offset factors so that the binary weights align closely with their full-precision counterparts. Training used 32 NVIDIA A100 GPUs to process large datasets, including 1.26 trillion tokens from sources such as RefinedWeb and StarCoder.
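The idea of a binarized linear layer with scale and offset factors can be sketched as follows. This is an illustrative approximation, not the authors' FBI-Linear implementation: each weight row is replaced by 1-bit signs plus one scale and one offset, and here those two factors are computed from row statistics, whereas in binarization-aware training they would be learnable parameters. The function names are hypothetical.

```python
def binarize_row(row):
    """Approximate a weight row as scale * sign(w - offset) + offset."""
    offset = sum(row) / len(row)                    # assumption: set to the row mean
    centered = [w - offset for w in row]
    scale = sum(abs(c) for c in centered) / len(centered)
    signs = [1 if c >= 0 else -1 for c in centered]
    return signs, scale, offset


def binary_linear(x, weight):
    """Compute y = W_hat @ x, where each row of W_hat is rebuilt from 1-bit signs."""
    x_sum = sum(x)
    out = []
    for row in weight:
        signs, scale, offset = binarize_row(row)
        # Only the sign dot product touches per-weight data; scale and offset are per row.
        sign_dot = sum(s * xi for s, xi in zip(signs, x))
        out.append(scale * sign_dot + offset * x_sum)
    return out


weight = [[0.5, -0.5, 0.5, -0.5], [1.0, 3.0, 1.0, 3.0]]
x = [1.0, 2.0, 3.0, 4.0]
print(binary_linear(x, weight))
```

A real implementation would binarize the weights once and store the signs packed as bits, rather than recomputing them on every forward pass as this sketch does for brevity.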

Extensive experiments demonstrated the competitive advantage of Bi-Mamba over existing models. On datasets such as Wiki2, PTB, and C4, Bi-Mamba achieved perplexity scores of 14.2, 34.4, and 15.0, respectively, significantly outperforming alternatives such as GPTQ and Bi-LLM, which exhibited up to 10 times higher perplexity. Additionally, Bi-Mamba achieved zero-shot accuracies of 44.5% for the 780M model, 46.7% for the 1.3B model, and 49.3% for the 2.7B model on downstream tasks such as BoolQ and HellaSwag. This demonstrated its robustness across various tasks and datasets while maintaining energy-efficient performance.
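For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-likelihood a model assigns to each token, so lower is better. The short sketch below uses made-up token probabilities, not figures from the paper.

```python
import math


def perplexity(token_log_probs):
    """exp of the mean negative log-likelihood (natural log) per token."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


# A model that gives every correct token probability 0.25 behaves like a
# uniform guess among four options.
uniform_guess = [math.log(0.25)] * 10
print(perplexity(uniform_guess))

# Toy values: more confident predictions give a lower perplexity.
confident = [math.log(p) for p in (0.9, 0.8, 0.7, 0.95)]
print(perplexity(confident))
```

Read this way, a perplexity of 14.2 means the model is, on average, about as uncertain as if it were choosing uniformly among roughly 14 tokens at each step.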

The study findings highlight several key conclusions:

- Efficiency gains: Bi-Mamba achieves more than 80% storage compression compared to full precision models, reducing storage size from 5.03GB to 0.55GB for the 2.7B model.

- Performance consistency: The model retains performance comparable to its full-precision counterparts with significantly reduced memory requirements.

- Scalability: The Bi-Mamba architecture allows for effective training on multiple model sizes, with competitive results for even the largest variants.

- Robustness in binarization: By selectively binarizing linear modules, Bi-Mamba avoids the performance degradation typically associated with naive binarization methods.
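The storage figures in the first point can be checked with simple arithmetic. The sketch below computes the reduction from the two reported sizes and, as a sanity check, estimates the full-precision size under the assumption of 16-bit weights, exactly 2.7 billion parameters, and binary gigabytes (2^30 bytes); those three assumptions are not stated in the article.

```python
def storage_reduction(full_gb: float, compressed_gb: float) -> float:
    """Fraction of storage saved relative to the full-precision model."""
    return 1.0 - compressed_gb / full_gb


def fp16_size_gib(num_params: float) -> float:
    """Size of a model stored at 2 bytes per parameter, in units of 2**30 bytes."""
    return num_params * 2 / 2**30


reduction = storage_reduction(5.03, 0.55)
print(f"storage saved: {reduction:.1%}")
print(f"compression ratio: {5.03 / 0.55:.1f}x")
print(f"estimated 16-bit size of a 2.7B model: {fp16_size_gib(2.7e9):.2f}")
```

Under these assumptions the reported sizes correspond to a saving of roughly 89%, consistent with the "more than 80%" claim, and the 16-bit estimate lands close to the reported 5.03GB.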

In conclusion, Bi-Mamba represents an important step forward in addressing the dual challenges of scalability and efficiency in large language models. By leveraging binarization-aware training and focusing on key architectural optimizations, the researchers demonstrated that state space models can achieve high performance under extreme quantization. This improves energy efficiency, reduces resource consumption, and lays the foundation for future developments in low-bit AI systems, opening avenues to deploy large-scale models in practical, resource-constrained environments. Bi-Mamba's strong results underscore its potential as an approach for more sustainable and efficient AI technologies.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity among the public.
