.toc-list {

position: relative;

}

.toc-list {

overflow: hidden;

list-style: none;

}

.gh-toc .is-active-link::before {

background-color: var(–ghost-accent-color); /* Defines TOC accent color based on Accent color set in Ghost Admin */

}

.gl-toc__header {

align-items: center;

color: var(–foreground);

cursor: pointer;

display: flex;

gap: 2rem;

justify-content: space-between;

padding: 1rem;

width: 100%;

}

.gh-toc-title {

font-size: 15px !important;

font-weight: 600 !important;

letter-spacing: .0075rem;

line-height: 1.2;

margin: 0;

text-transform: uppercase;

}

.gl-toc__icon {

transition: transform .2s ease-in-out;

}

.gh-toc li {

color: #404040;

font-size: 14px;

line-height: 1.3;

margin-bottom: .75rem;

}

.gh-toc {

display: none;

}

.gh-toc.active {

display: block;

}

.gl-toc__icon svg{

transition: transform 0.2s ease-in-out;

}

.gh-toc.active + .gl-toc__header .gl-toc__icon .rotated{

transform: rotate(180deg);

}

.gl-toc__icon .rotated{

transform: rotate(180deg);

}

.gh-toc-container-sidebar{

display: none;

}

.gh-toc-container-content{

display: block;

width: 100%;

}

a.toc-link{

background-image: none !important;

}

.gh-toc-container-content .toc-list-item{

margin-left: 0 !important;

}

.gh-toc-container-content .toc-list-item::marker{

content: none;

}

.gh-toc-container-content .toc-list{

padding: 0 !important;

margin: 0 !important;

}

@media only screen and (min-width: 1200px) {

.gh-sidebar-wrapper{

margin: 0;

position: sticky;

top: 6rem;

left: calc((( 100vw – 928px)/ 2 ) – 16.25rem – 60px);

z-index: 3;

}

.gh-sidebar {

align-self: flex-start;

background-color: transparent;

flex-direction: column;

grid-area: toc;

max-height: calc(100vh – 6rem);

width: 16.25rem;

z-index: 3;

position: sticky;

top: 80px;

}

.gh-sidebar:before {

-webkit-backdrop-filter: blur(30px);

backdrop-filter: blur(30px);

background-color:hsla(0, 0%, 100%, .5);;

border-radius: .5rem;

content: “”;

display: block;

height: 100%;

left: 0;

position: absolute;

top: 0;

width: 100%;

z-index: -1;

}

.gl-toc__header {

cursor: default;

flex-shrink: 0;

pointer-events: none;

}

.gl-toc__icon {

display: none;

}

.gh-toc {

display: block;

flex: 1;

overflow-y: auto;

}

.gh-toc-container-sidebar{

display: block;

}

.gh-toc-container-content{

display: none;

}

}

))>

What are LLMs?

A Large Language Model (LLM) is an advanced ai system designed to perform complex natural language processing (NLP) tasks like text generation, summarization, translation, and more. At its core, an LLM is built on a deep neural network architecture known as a transformer, which excels at capturing the intricate patterns and relationships in language. Some of the widely recognized LLMs include ChatGPT by OpenAI, LLaMa by Meta, Claude by Anthropic, Mistral by Mistral ai, Gemini by Google and a lot more.

The Power of LLMs in Today’s Generation:

- Understanding Human Language: LLMs have the ability to understand complex queries, analyze context, and respond in ways that sound human-like and nuanced.

- Knowledge Integration Across Domains: Due to training on vast, diverse data sources, LLMs can provide insights across fields from science to creative writing.

- Adaptability and Creativity: One of the most exciting aspects of LLMs is their adaptability. They’re capable of generating stories, writing poetry, solving puzzles, and even holding philosophical discussions.

Problem-Solving Potential: LLMs can handle reasoning tasks by identifying patterns, making inferences, and solving logical problems, demonstrating their capability in supporting complex, structured thought processes and decision-making.

For developers looking to streamline document workflows using ai, tools like the Nanonets PDF ai offer valuable integration options. Coupled with Ministral’s capabilities, these can significantly enhance tasks like document extraction, ensuring efficient data handling. Additionally, tools like Nanonets’ PDF Summarizer can further automate processes by summarizing lengthy documents, aligning well with Ministral’s privacy-first applications.

Automating Day-to-Day Tasks with LLMs:

LLMs can transform the way we handle everyday tasks, driving efficiency and freeing up valuable time. Here are some key applications:

- Email Composition: Generate personalized email drafts quickly, saving time and maintaining professional tone.

- Report Summarization: Condense lengthy documents and reports into concise summaries, highlighting key points for quick review.

- Customer Support Chatbots: Implement LLM-powered chatbots that can resolve common issues, process returns, and provide product recommendations based on user inquiries.

- Content Ideation: Assist in brainstorming and generating creative content ideas for blogs, articles, or marketing campaigns.

- Data Analysis: Automate the analysis of data sets, generating insights and visualizations without manual input.

- Social Media Management: Craft and schedule engaging posts, interact with comments, and analyze engagement metrics to refine content strategy.

- Language Translation: Provide real-time translation services to facilitate communication across language barriers, ideal for global teams.

To further enhance the capabilities of LLMs, we can leverage Retrieval-Augmented Generation (RAG). This approach allows LLMs to access and incorporate real-time information from external sources, enriching their responses with up-to-date, contextually relevant data for more informed decision-making and deeper insights.

One-Click LLM Bash Helper

We will explore an exciting way to utilize LLMs by developing a real time application called One-Click LLM Bash Helper. This tool uses a LLM to simplify bash terminal usage. Just describe what you want to do in plain language, and it will generate the correct bash command for you instantly. Whether you’re a beginner or an experienced user looking for quick solutions, this tool saves time and removes the guesswork, making command-line tasks more accessible than ever!

How it works:

- Open the Bash Terminal: Start by opening your Linux terminal where you want to execute the command.

- Describe the Command: Write a clear and concise description of the task you want to perform in the terminal. For example, “Create a file named abc.txt in this directory.”

- Select the Text: Highlight the task description you just wrote in the terminal to ensure it can be processed by the tool.

- Press Trigger Key: Hit the F6 key on your keyboard as default (can be changed as needed). This triggers the process, where the task description is copied, processed by the tool, and sent to the LLM for command generation.

- Get and Execute the Command: The LLM processes the description, generates the corresponding Linux command, and pastes it into the terminal. The command is then executed automatically, and the results are displayed for you to see.

Build On Your Own

Since the One-Click LLM Bash Helper will be interacting with text in a terminal of the system, it’s essential to run the application locally on the machine. This requirement arises from the need to access the clipboard and capture key presses across different applications, which is not supported in online environments like Google Colab or Kaggle.

To implement the One-Click LLM Bash Helper, we’ll need to set up a few libraries and dependencies that will enable the functionality outlined in the process. It is best to set up a new environment and then install the dependencies.

Steps to Create a New Conda Environment and Install Dependencies

- Open your terminal

- Create a new Conda environment. You can name the environment (e.g., llm_translation) and specify the Python version you want to use (e.g., Python 3.9):

conda create -n bash_helper python=3.9

- Activate the new environment:

conda activate bash_helper- Install the required libraries:

- Ollama: It is an open-source project that serves as a powerful and user-friendly platform for running LLMs on your local machine. It acts as a bridge between the complexities of LLM technology and the desire for an accessible and customizable ai experience. Install ollama by following the instructions at https://github.com/ollama/ollama/blob/main/docs/linux.md and also run:

pip install ollama- To start ollama and install LLaMa 3.1 8B as our LLM (one can use other models) using ollama, run the following commands after ollama is installed:

ollama serveRun this in a background terminal. And then execute the following code to install the llama3.1 using ollama:

ollama run llama3.1Here are some of the LLMs that Ollama supports – one can choose based on their requirements

| Model | Parameters | Size | Download |

|---|---|---|---|

| Llama 3.2 | 3B | 2.0GB | ollama run llama3.2 |

| Llama 3.2 | 1B | 1.3GB | ollama run llama3.2:1b |

| Llama 3.1 | 8B | 4.7GB | ollama run llama3.1 |

| Llama 3.1 | 70B | 40GB | ollama run llama3.1:70b |

| Llama 3.1 | 405B | 231GB | ollama run llama3.1:405b |

| Phi 3 Mini | 3.8B | 2.3GB | ollama run phi3 |

| Phi 3 Medium | 14B | 7.9GB | ollama run phi3:medium |

| Gemma 2 | 2B | 1.6GB | ollama run gemma2:2b |

| Gemma 2 | 9B | 5.5GB | ollama run gemma2 |

| Gemma 2 | 27B | 16GB | ollama run gemma2:27b |

| Mistral | 7B | 4.1GB | ollama run mistral |

| Moondream 2 | 1.4B | 829MB | ollama run moondream2 |

| Neural Chat | 7B | 4.1GB | ollama run neural-chat |

| Starling | 7B | 4.1GB | ollama run starling-lm |

| Code Llama | 7B | 3.8GB | ollama run codellama |

| Llama 2 Uncensored | 7B | 3.8GB | ollama run llama2-uncensored |

| LLAVA | 7B | 4.5GB | ollama run llava |

| Solar | 10.7B | 6.1GB | ollama run solar |

- Pyperclip: It is a Python library designed for cross-platform clipboard manipulation. It allows you to programmatically copy and paste text to and from the clipboard, making it easy to manage text selections.

pip install pyperclip- Pynput: Pynput is a Python library that provides a way to monitor and control input devices, such as keyboards and mice. It allows you to listen for specific key presses and execute functions in response.

pip install pynputCode sections:

Create a python file “helper.py” where all the following code will be added:

- Importing the Required Libraries: In the helper.py file, start by importing the necessary libraries:

import pyperclip

import subprocess

import threading

import ollama

from pynput import keyboard- Defining the CommandAssistant Class: The

CommandAssistantclass is the core of the application. When initialized, it starts a keyboard listener usingpynputto detect keypresses. The listener continuously monitors for the F6 key, which serves as the trigger for the assistant to process a task description. This setup ensures the application runs passively in the background until activated by the user.

class CommandAssistant:

def __init__(self):

# Start listening for key events

self.listener = keyboard.Listener(on_press=self.on_key_press)

self.listener.start()- Handling the F6 Keypress: The

on_key_pressmethod is executed whenever a key is pressed. It checks if the pressed key is F6. If so, it calls theprocess_task_descriptionmethod to start the workflow for generating a Linux command. Any invalid key presses are safely ignored, ensuring the program operates smoothly.

def on_key_press(self, key):

try:

if key == keyboard.Key.f6:

# Trigger command generation on F6

print("Processing task description...")

self.process_task_description()

except AttributeError:

pass

- Extracting Task Description: This method begins by simulating the “Ctrl+Shift+C” keypress using

xdotoolto copy selected text from the terminal. The copied text, assumed to be a task description, is then retrieved from the clipboard viapyperclip. A prompt is constructed to instruct the Llama model to generate a single Linux command for the given task. To keep the application responsive, the command generation is run in a separate thread, ensuring the main program remains non-blocking.

def process_task_description(self):

# Step 1: Copy the selected text using Ctrl+Shift+C

subprocess.run(('xdotool', 'key', '--clearmodifiers', 'ctrl+shift+c'))

# Get the selected text from clipboard

task_description = pyperclip.paste()

# Set up the command-generation prompt

prompt = (

"You are a Linux terminal assistant. Convert the following description of a task "

"into a single Linux command that accomplishes it. Provide only the command, "

"without any additional text or surrounding quotes:\n\n"

f"Task description: {task_description}"

)

# Step 2: Run command generation in a separate thread

threading.Thread(target=self.generate_command, args=(prompt,)).start()

- Generating the Command: The

generate_commandmethod sends the constructed prompt to the Llama model (llama3.1) via theollamalibrary. The model responds with a generated command, which is then cleaned to remove any unnecessary quotes or formatting. The sanitized command is passed to thereplace_with_commandmethod for pasting back into the terminal. Any errors during this process are caught and logged to ensure robustness.

def generate_command(self, prompt):

try:

# Query the Llama model for the command

response = ollama.generate(model="llama3.1", prompt=prompt)

generated_command = response('response').strip()

# Remove any surrounding quotes (if present)

if generated_command.startswith("'") and generated_command.endswith("'"):

generated_command = generated_command(1:-1)

elif generated_command.startswith('"') and generated_command.endswith('"'):

generated_command = generated_command(1:-1)

# Step 3: Replace the selected text with the generated command

self.replace_with_command(generated_command)

except Exception as e:

print(f"Command generation error: {str(e)}")

- Replacing Text in the Terminal: The

replace_with_commandmethod takes the generated command and copies it to the clipboard usingpyperclip. It then simulates keypresses to clear the terminal input using “Ctrl+C” and “Ctrl+L” and pastes the generated command back into the terminal with “Ctrl+Shift+V.” This automation ensures the user can immediately review or execute the suggested command without manual intervention.

def replace_with_command(self, command):

# Copy the generated command to the clipboard

pyperclip.copy(command)

# Step 4: Clear the current input using Ctrl+C

subprocess.run(('xdotool', 'key', '--clearmodifiers', 'ctrl+c'))

subprocess.run(('xdotool', 'key', '--clearmodifiers', 'ctrl+l'))

# Step 5: Paste the generated command using Ctrl+Shift+V

subprocess.run(('xdotool', 'key', '--clearmodifiers', 'ctrl+shift+v'))

- Running the Application: The script creates an instance of the

CommandAssistantclass and keeps it running in an infinite loop to continuously listen for the F6 key. The program terminates gracefully upon receiving a KeyboardInterrupt (e.g., when the user presses Ctrl+C), ensuring clean shutdown and freeing system resources.

if __name__ == "__main__":

app = CommandAssistant()

# Keep the script running to listen for key presses

try:

while True:

pass

except KeyboardInterrupt:

print("Exiting Command Assistant.")

Save all the above components as ‘helper.py’ file and run the application using the following command:

python helper.pyAnd that’s it! You’ve now built the One-Click LLM Bash Helper. Let’s walk through how to use it.

Workflow

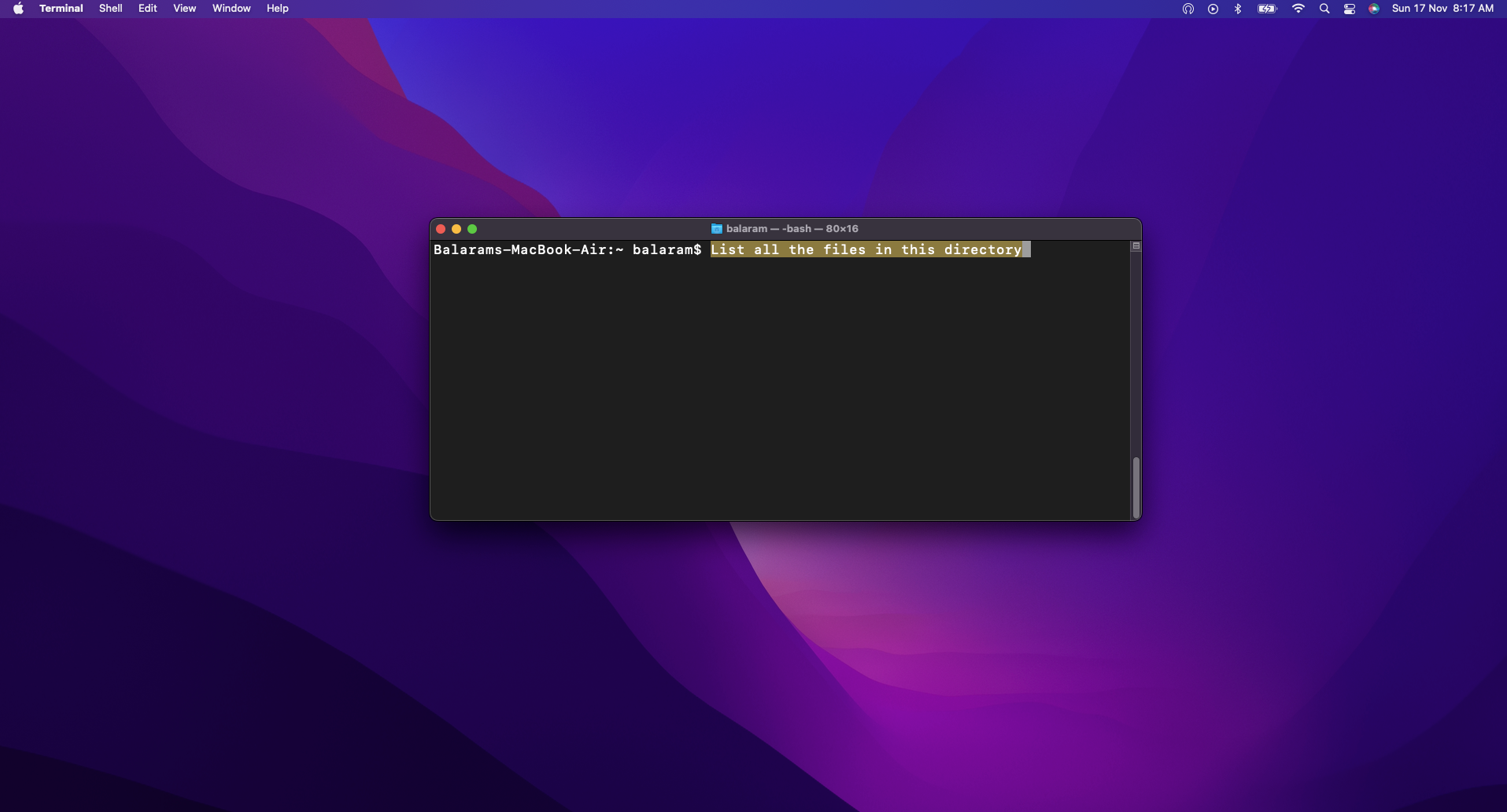

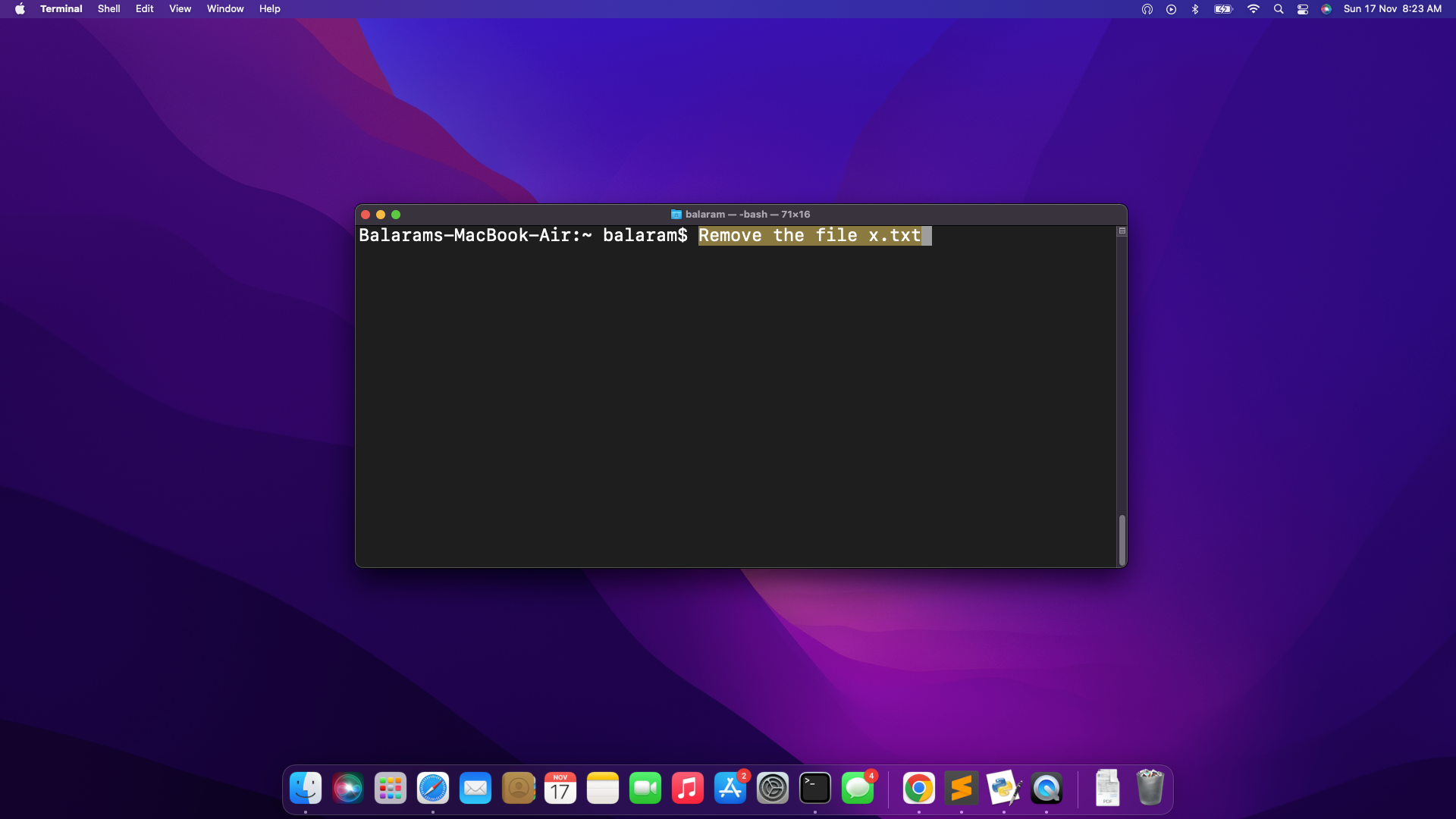

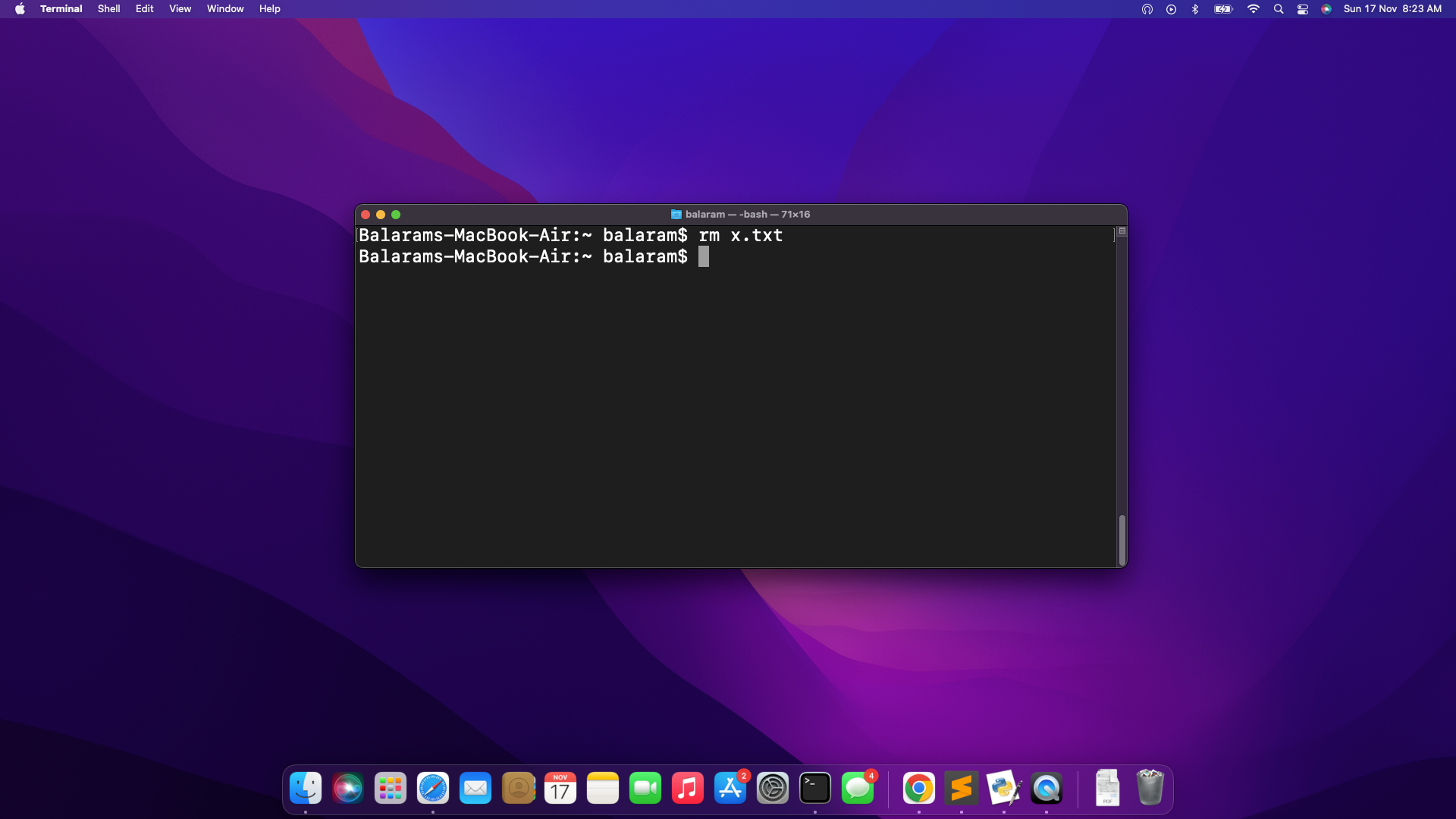

Open terminal and write the description of any command to perform. And then follow the below steps:

- Select Text: After writing the description of the command you need to perform in the terminal, select the text.

- Trigger Translation: Press the F6 key to initiate the process.

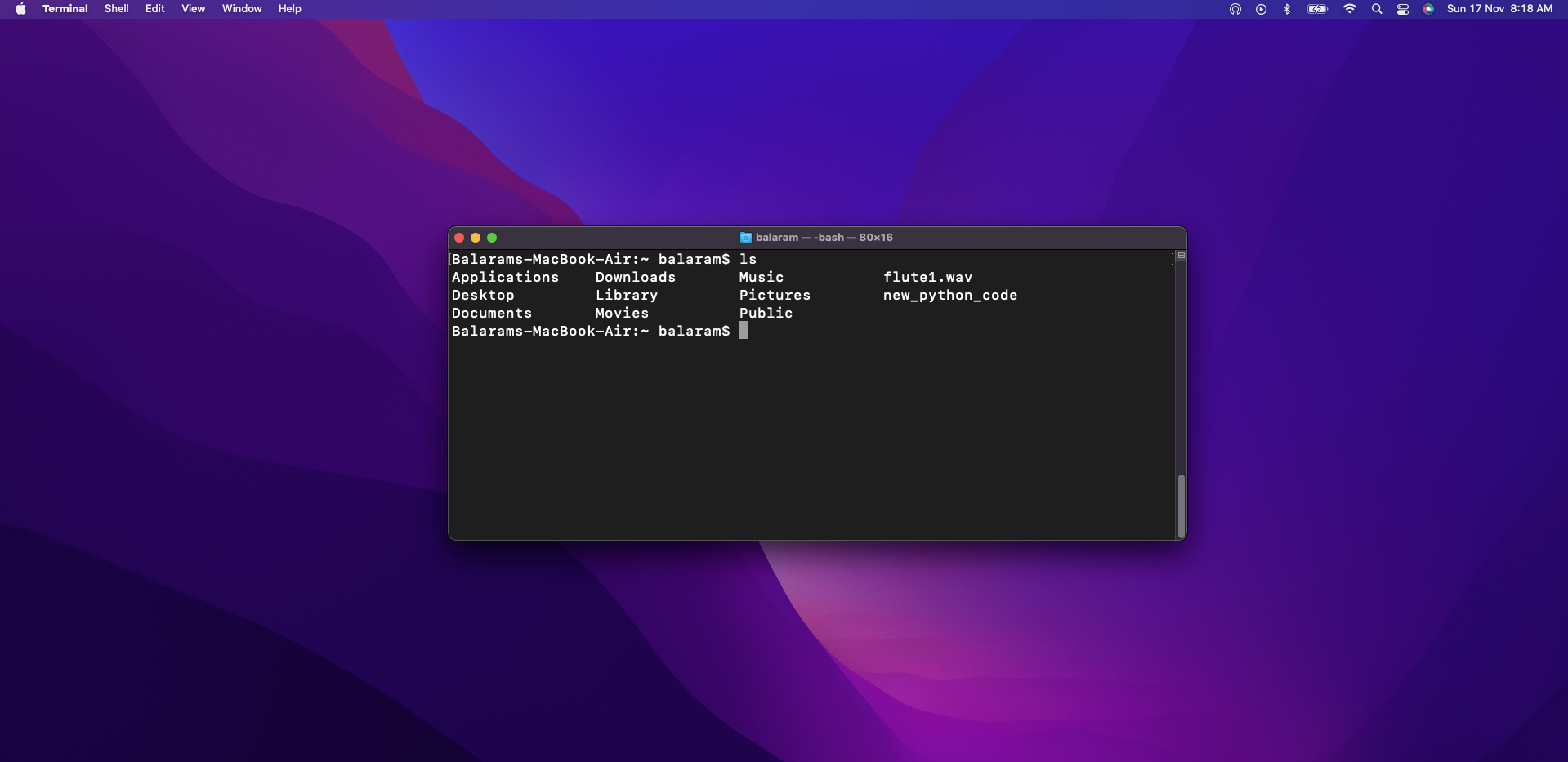

- View Result: The LLM finds the proper code to execute for the command description given by the user and replace the text in the bash terminal. Which is then automatically executed.

As in this case, for the description – “List all the files in this directory” the command given as output from the LLM was -“ls”.

For access to the complete code and further details, please visit this GitHub repo link.

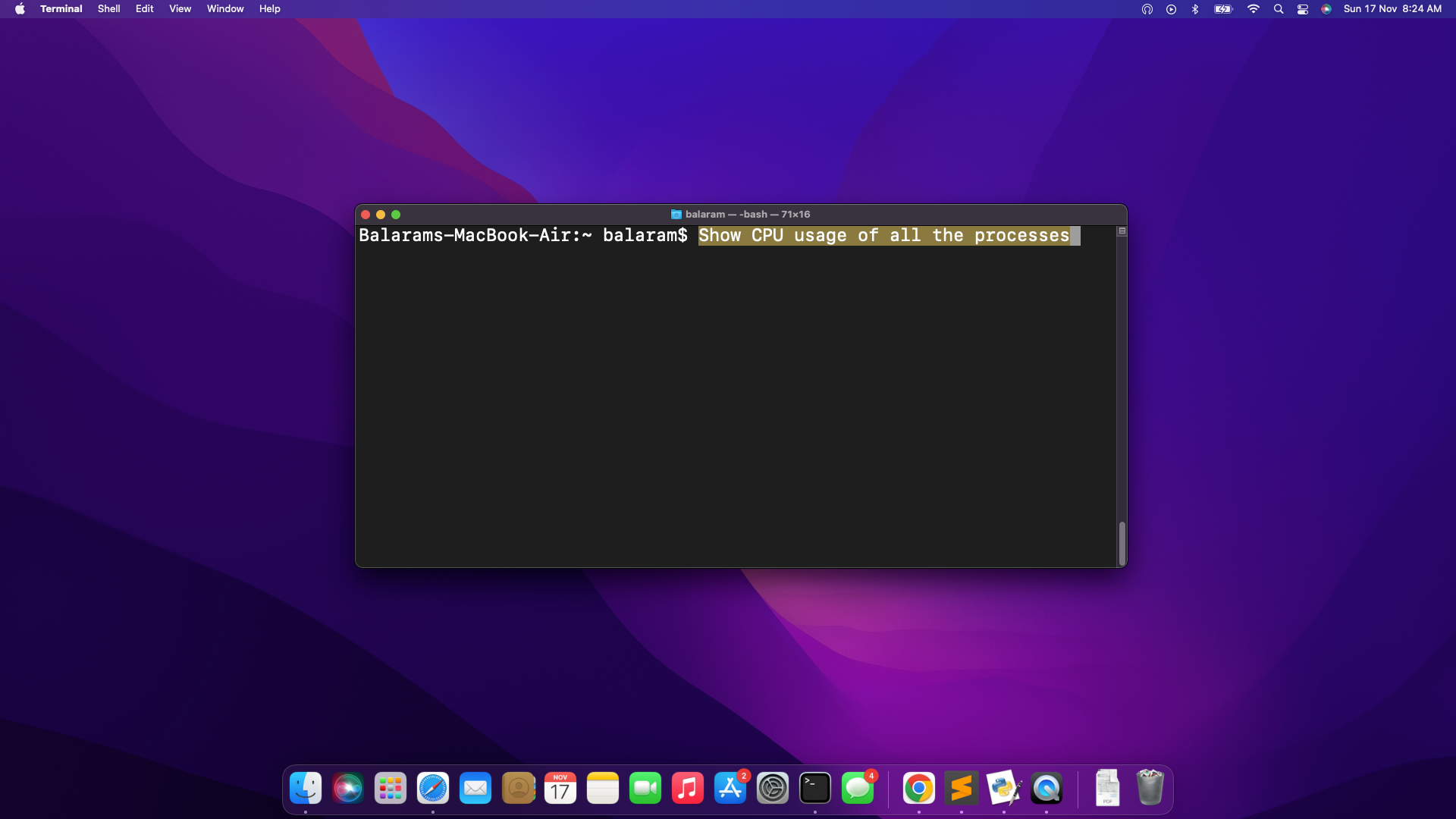

Here are a few more examples of the One-Click LLM Bash Helper in action:

It gave the code “top” upon pressing the trigger key (F6) and after execution it gave the following output:

- Deleting a file with filename

Tips for customizing the assistant

- Choosing the Right Model for Your System: Picking the right language model first.

Got a Powerful PC? (16GB+ RAM)

- Use llama2:70b or mixtral – They offer amazing quality code generation but need more compute power.

- Perfect for professional use or when accuracy is crucial

Running on a Mid-Range System? (8-16GB RAM)

- Use llama2:13b or mistral – They offer a great balance of performance and resource usage.

- Great for daily use and most generation needs

Working with Limited Resources? (4-8GB RAM)

- llama2:7b or phi are good in this range.

- They’re faster and lighter but still get the job done

Although these models are recommended, one can use other models according to their needs.

- Personalizing Keyboard Shortcut : Want to change the F6 key? One can change it to any key! For example to use ‘T’ for translate, or F2 because it’s easier to reach. It’s super easy to change – just modify the trigger key in the code, and it’s good to go.

- Customising the Assistant: Maybe instead of bash helper, one needs help with writing code in a certain programming language (Java, Python, C++). One just needs to modify the command generation prompt. Instead of linux terminal assistant change it to python code writer or to the programming language preferred.

Limitations

- Resource Constraints: Running large language models often requires substantial hardware. For example, at least 8 GB of RAM is required to run the 7B models, 16 GB to run the 13B models, and 32 GB to run the 33B models.

- Platform Restrictions: The use of

xdotooland specific key combinations makes the tool dependent on Linux systems and may not work on other operating systems without modifications. - Command Accuracy: The tool may occasionally produce incorrect or incomplete commands, especially for ambiguous or highly specific tasks. In such cases, using a more advanced LLM with better contextual understanding may be necessary.

- Limited Customization: Without specialized fine-tuning, generic LLMs might lack contextual adjustments for industry-specific terminology or user-specific preferences.

For tasks like extracting information from documents, tools such as Nanonets’ Chat with PDF have evaluated and used several LLMs like Ministral and can offer a reliable way to interact with content, ensuring accurate data extraction without risk of misrepresentation.