ai has made significant progress in developing large language models (LLMs) that excel at complex tasks such as text generation, summarization, and conversational ai. Models such as LaPM 540B and Llama-3.1 405B demonstrate advanced language processing capabilities, but their computational demands limit their applicability in real-world environments with limited resources. These LLMs are typically cloud-based and require a large amount of hardware and GPU memory, raising privacy concerns and preventing immediate deployment on the device. In contrast, small language models (SLMs) are being explored as an efficient and adaptable alternative, capable of performing domain-specific tasks with lower computational requirements.

The main challenge of LLMs, as addressed by SLMs, is their high computational cost and latency, particularly for specialized applications. For example, models like Llama-3.1, which contain 405 billion parameters, require more than 200 GB of GPU memory, making them impractical for deployment on mobile devices or edge systems. In real-time scenarios, these models suffer from high latency; Processing 100 tokens on a Snapdragon 685 mobile processor with the Llama-2 7B model, for example, can take up to 80 seconds. These delays hinder real-time applications, making them unsuitable for environments such as healthcare, finance, and personal assistance systems that require immediate responses. The operating expenses associated with LLMs also restrict their use, as their adjustment for specialized fields such as healthcare or law requires significant resources, limiting accessibility for organizations without large computing budgets.

Currently, several methods address these limitations, including cloud-based APIs, data batching, and model pruning. However, these solutions often fall short as they must completely alleviate high latency issues, reliance on extensive infrastructure, and privacy concerns. Techniques such as pruning and quantization can reduce model size, but often decrease accuracy, which is detrimental for high-risk applications. The absence of low-cost, scalable solutions to tune LLMs for specific domains further emphasizes the need for an alternative approach to deliver targeted performance without prohibitive costs.

Researchers from Pennsylvania State University, the University of Pennsylvania, UTHealth Houston, amazon, and Rensselaer Polytechnic Institute conducted a comprehensive survey of SLMs and examined a systematic framework for developing SLMs that balance efficiency with LLM-like capabilities. This research adds advances in tuning, parameter sharing, and knowledge distillation to create models designed for efficient, domain-specific use cases. Compact architectures and advanced data processing techniques allow SLMs to operate in low-power environments, making them accessible for real-time applications on edge devices. Institutional collaborations helped define and categorize SLMs, ensuring that the taxonomy supports deployment in low-memory and resource-limited environments.

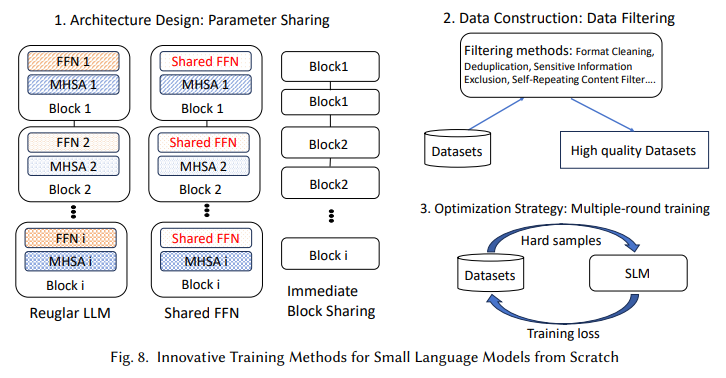

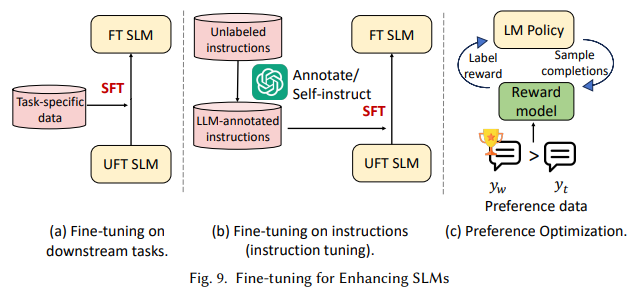

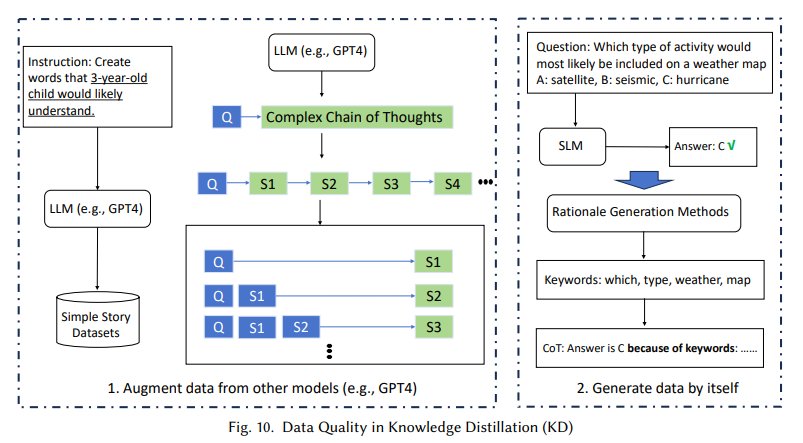

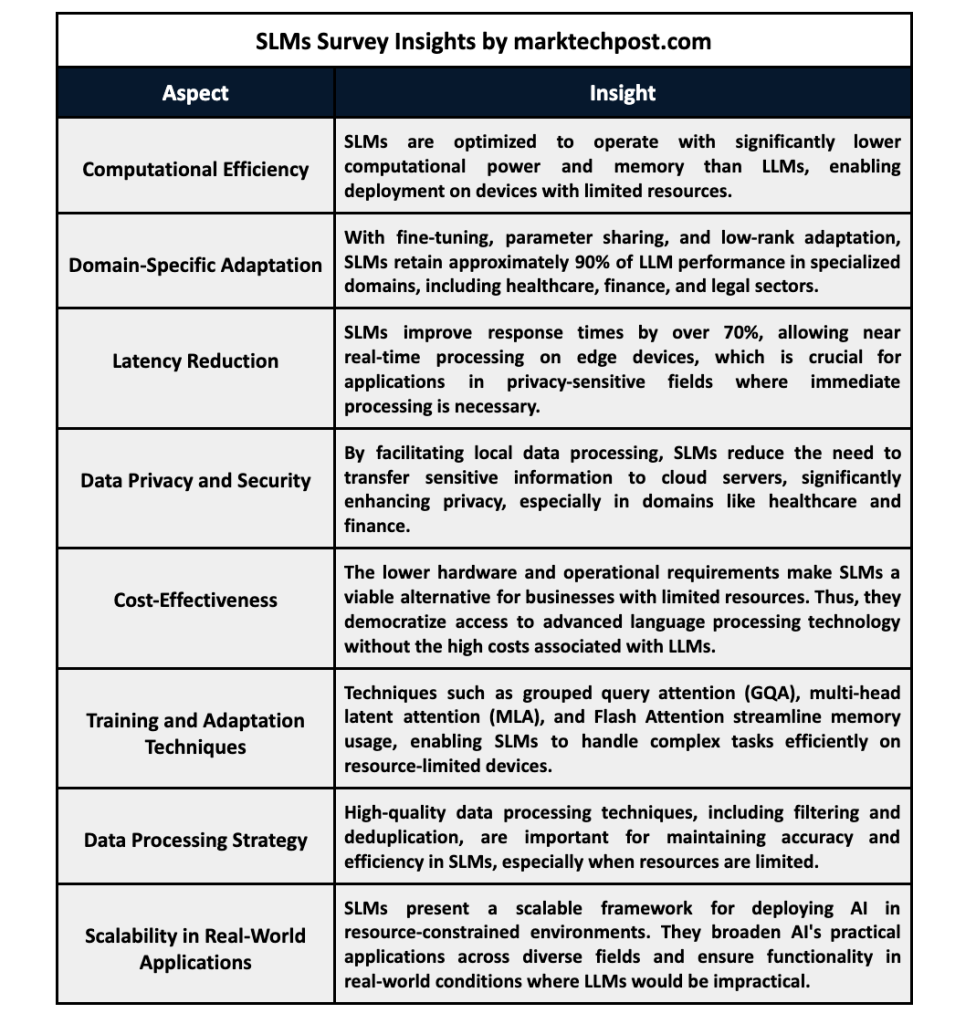

The technical methods proposed in this research are integral to optimizing the performance of the SLM. For example, the survey highlights Grouped Query Attention (GQA), Multi-Head Latent Attention (MLA), and Flash Attention as essential efficient memory modifications that speed up attention mechanisms. These improvements allow SLMs to maintain high performance without requiring the large amount of memory typical of LLMs. Additionally, parameter sharing and low-rank adaptation techniques ensure that SLMs can handle complex tasks in specialized fields such as healthcare, finance, and customer service, where immediate response and data privacy are crucial. . The framework's emphasis on data quality further improves model performance, incorporating filtering, deduplication, and optimized data structures to improve accuracy and speed in specific domain contexts.

The empirical results underline the performance potential of SLMs, as they can achieve efficiency close to that of LLMs in specific applications with reduced latency and memory usage. In healthcare, finance, and personalized assistant application benchmarks, SLMs show substantial latency reductions and increased data privacy due to local processing. For example, latency improvements in healthcare and secure local data handling offer an efficient solution for on-device data processing and protection of sensitive patient information. The methods used in SLM training and optimization allow these models to retain up to 90% of the accuracy of LLM in domain-specific applications, a notable achievement given the reduction in model size and hardware requirements.

Key research findings:

- Computational efficiency: SLMs operate with a fraction of the memory and processing power required by LLMs, making them suitable for devices with limited hardware, such as smartphones and IoT devices.

- Domain-specific adaptability: With specific optimizations, such as tuning and parameter sharing, SLMs retain approximately 90% of LLM performance in specialized domains, including healthcare and finance.

- Reduced latency: Compared to LLMs, SLMs reduce response times by more than 70%, providing essential real-time processing capabilities for edge applications and privacy-sensitive scenarios.

- Data privacy and security: SLM enables local processing, reducing the need to transfer data to cloud servers and improving privacy in high-risk applications such as healthcare and finance.

- Cost-effectiveness: By reducing computational and hardware requirements, SLMs present a feasible solution for resource-constrained organizations, democratizing access to ai-powered language models.

In conclusion, the Small Language Models Survey presents a viable framework that addresses critical issues of LLM implementation in resource-constrained environments. The proposed SLM approach offers a promising path to integrate advanced language processing capabilities into low-power devices, expanding the scope of ai technology in various fields. By optimizing latency, privacy, and computational efficiency, SLMs provide a scalable solution for real-world applications where traditional LLMs are not practical, ensuring broader applicability and sustainability of language models in industry and investigation.

look at the Paper. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on twitter.com/Marktechpost”>twitter and join our Telegram channel and LinkedIn Grabove. If you like our work, you will love our information sheet.. Don't forget to join our SubReddit over 55,000ml.

(ai Magazine/Report) Read our latest report on 'SMALL LANGUAGE MODELS'

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. Their most recent endeavor is the launch of an ai media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity among the public.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>