Today we are announcing the general availability of Amazon Bedrock Prompt Management, with new features that provide enhanced options for configuring your prompts and enable seamless integration for invoking them in your generative AI applications.

Amazon Bedrock Prompt Management simplifies the creation, testing, versioning, and sharing of prompts to help developers and prompt engineers get better responses from foundation models (FMs) for their use cases. In this post, we explore the key capabilities of Amazon Bedrock Prompt Management and show examples of how to use these tools to help optimize prompt performance and outputs for your specific use cases.

New features in Amazon Bedrock Prompt Management

Amazon Bedrock Prompt Management offers new capabilities that simplify the process of building generative AI applications:

- Structured prompts – Define system instructions, tools, and additional messages when building your prompts.

- Converse API and InvokeModel integration – Invoke your cataloged prompts directly from Amazon Bedrock Converse and InvokeModel API calls.

To showcase the new additions, let's walk through an example of building a prompt that summarizes financial documents.

Create a new prompt

Complete the following steps to create a new prompt:

- In the Amazon Bedrock console, in the navigation pane, under Builder tools, choose Prompt management.

- Choose Create prompt.

- Provide a name and description, and choose Create.

Build the prompt

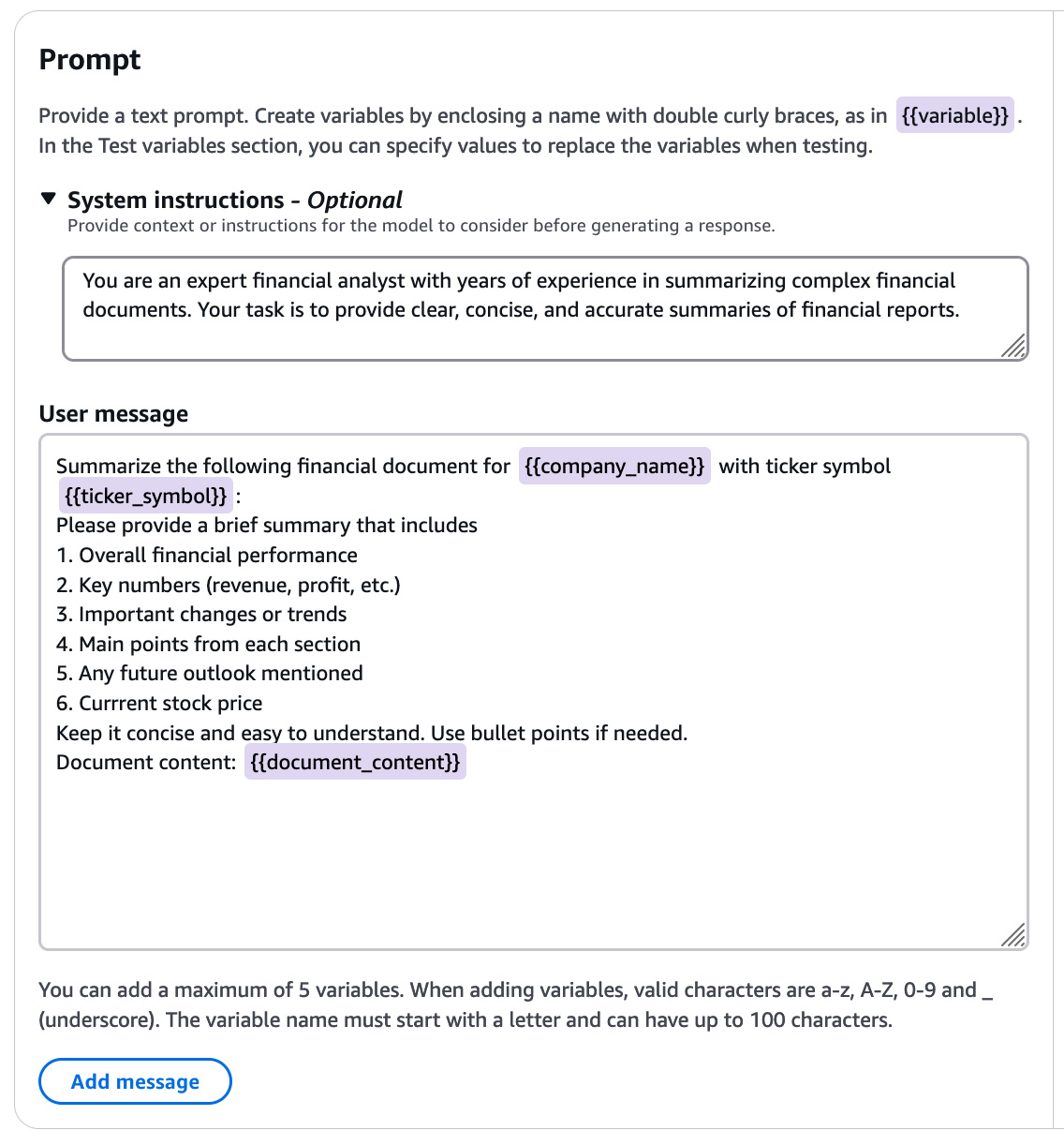

Use the prompt builder to customize your prompt:

- For System instructions, define the role of the model. For this example, we enter the following:

You are an expert financial analyst with years of experience in summarizing complex financial documents. Your task is to provide clear, concise, and accurate summaries of financial reports.

- Add the text prompt to the User message box.

You can create variables by enclosing a name in double curly braces. You can later pass values for these variables at invocation time, and they are injected into your prompt template. For this post, we use the following prompt:

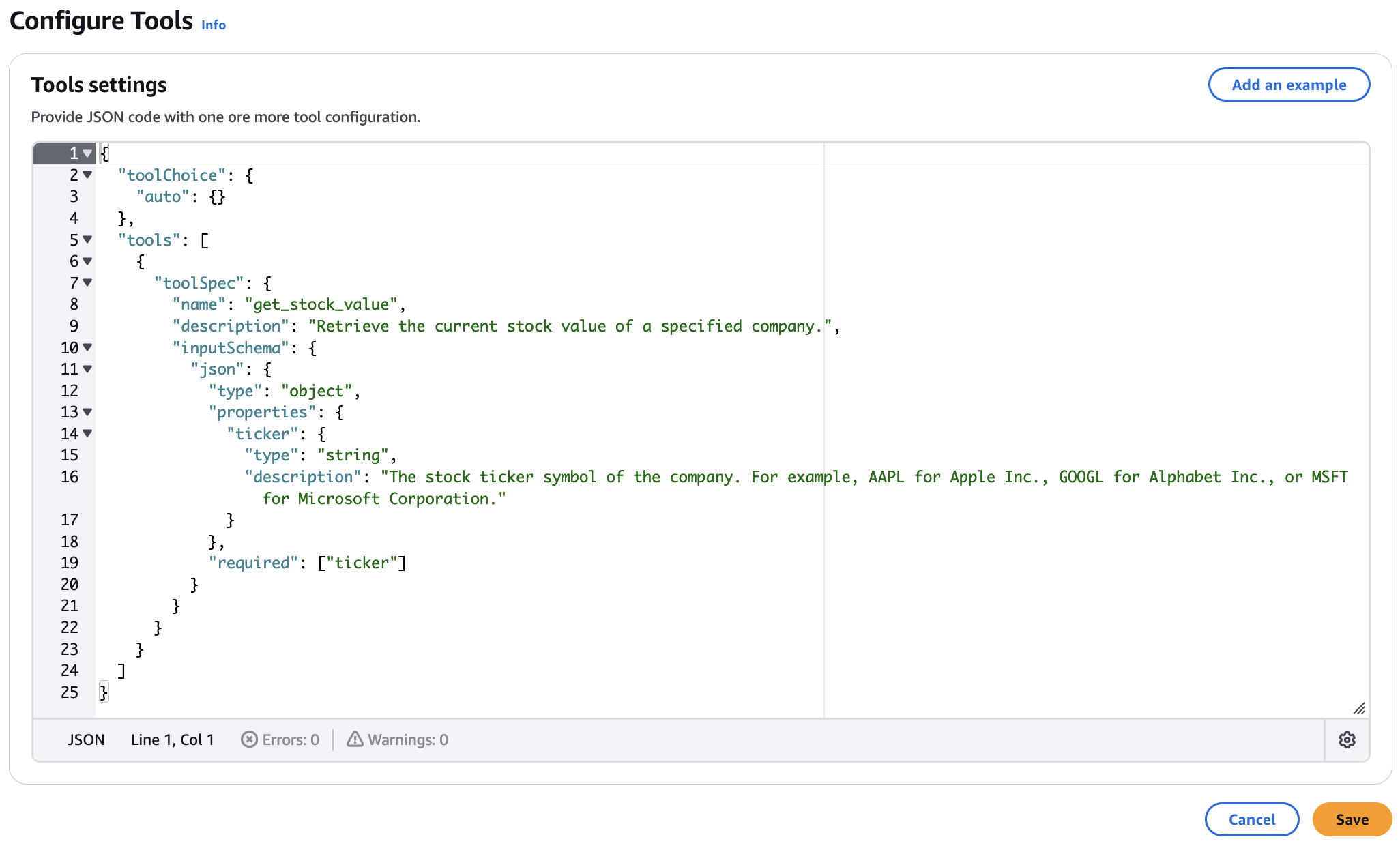

- Configure tools in the Tools setting section for function calling.

You can define tools with names, descriptions, and input schemas to allow the model to interact with external functions and extend its capabilities. Provide a JSON schema that includes the tool information.

When using function calling, an LLM does not use tools directly; instead, it indicates the tool and the parameters needed to use it. Users must implement the logic to invoke tools based on the model's requests and feed the results back to the model. For more information, see Use a tool to complete an Amazon Bedrock model response.

- Choose Save to save your settings.
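To make the double-brace variables concrete, the following is a minimal local sketch of how a template with `{{name}}` placeholders could be filled in. Amazon Bedrock performs this substitution for you at invocation time, so you would not normally write this yourself. The `find_variables` and `render_prompt` helpers, the template text, and the variable names `company` and `document_content` are all hypothetical and serve only to illustrate the idea.

```python
import re

# Matches {{name}}, allowing optional whitespace inside the braces.
_VARIABLE = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def find_variables(template: str) -> list[str]:
    """Return the distinct variable names in order of first appearance."""
    seen = []
    for name in _VARIABLE.findall(template):
        if name not in seen:
            seen.append(name)
    return seen


def render_prompt(template: str, values: dict[str, str]) -> str:
    """Replace each {{name}} with its value; fail loudly if one is missing."""
    missing = [n for n in find_variables(template) if n not in values]
    if missing:
        raise KeyError(f"missing prompt variables: {missing}")
    return _VARIABLE.sub(lambda m: values[m.group(1)], template)


# Hypothetical template, not the one used in this post.
template = "Summarize the following report for {{company}}:\n{{document_content}}"
print(find_variables(template))
print(render_prompt(template, {"company": "Example Corp",
                               "document_content": "Revenue rose 4%."}))
```

Listing the variables before rendering is a cheap way to catch a misspelled or missing name before a request is sent.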

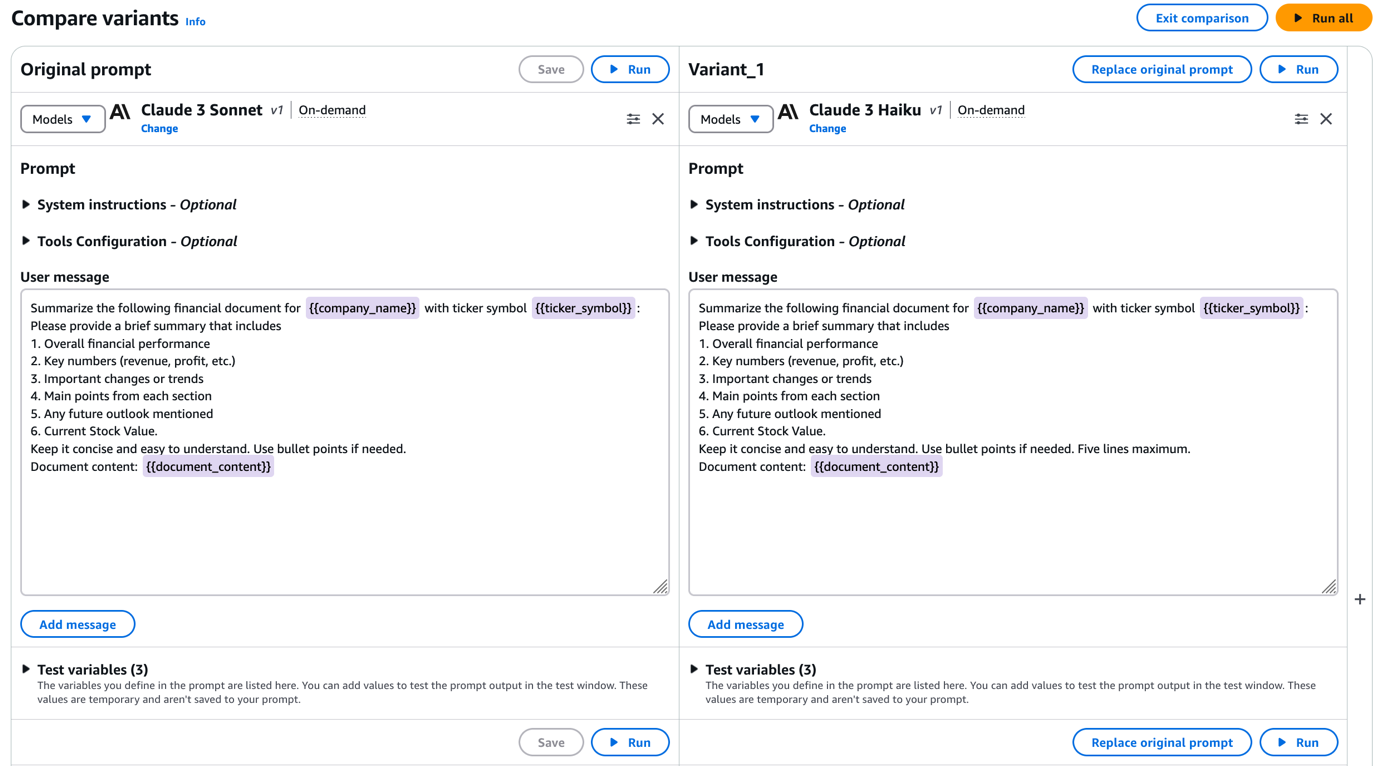
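As a rough illustration of the tool flow described above, the sketch below defines one tool in the `toolSpec` shape used by the Converse API, then shows the application-side logic that runs a tool the model asked for and packages the result to send back. The tool name `get_exchange_rate`, its schema, the stand-in implementation with its made-up rate, and the `run_requested_tools` helper are assumptions for illustration; the exact JSON the console expects may differ in its outer wrapper.

```python
# Hypothetical tool definition in the Converse API "toolSpec" shape.
TOOLS = {
    "tools": [
        {
            "toolSpec": {
                "name": "get_exchange_rate",
                "description": "Look up the exchange rate between two currencies.",
                "inputSchema": {
                    "json": {
                        "type": "object",
                        "properties": {
                            "base": {"type": "string"},
                            "quote": {"type": "string"},
                        },
                        "required": ["base", "quote"],
                    }
                },
            }
        }
    ]
}


def get_exchange_rate(base: str, quote: str) -> dict:
    """Stand-in implementation with a made-up rate; a real one would call a service."""
    rates = {("USD", "EUR"): 0.9}
    return {"base": base, "quote": quote, "rate": rates.get((base, quote))}


# Maps tool names the model may request to local Python callables.
HANDLERS = {"get_exchange_rate": get_exchange_rate}


def run_requested_tools(assistant_message: dict) -> dict:
    """Run every toolUse block and return the user message carrying the results."""
    results = []
    for block in assistant_message["content"]:
        if "toolUse" not in block:
            continue  # plain text blocks need no action
        request = block["toolUse"]
        output = HANDLERS[request["name"]](**request["input"])
        results.append({
            "toolResult": {
                "toolUseId": request["toolUseId"],  # ties the result to the request
                "content": [{"json": output}],
            }
        })
    return {"role": "user", "content": results}
```

The returned message would be appended to the conversation and sent in a follow-up call so the model can use the tool output in its final answer.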

Compare prompt variants

You can create and compare multiple versions of your prompt to find the best one for your use case. This process is manual and customizable.

- Choose Compare variants.

- The original variant is already populated. You can manually add new variants by specifying the number you want to create.

- For each new variant, you can customize the user message, system instructions, tool settings, and additional messages.

- You can create different variants for different models. Choose Select model to choose the specific FM to test each variant.

- Choose Run all to compare the outputs of all prompt variants across the selected models.

- If a variant performs better than the original, you can choose Replace original prompt to update your prompt.

- On the Prompt builder page, choose Create version to save the updated prompt.

This approach allows you to fine-tune your prompts for specific models or use cases and makes it easier to test and improve your results.

Invoke the prompt

To invoke the prompt from your applications, you can now include the prompt identifier and version as part of the Amazon Bedrock Converse API call. The following code is an example that uses the AWS SDK for Python (Boto3):
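A minimal sketch of such a call might look like the following. It assumes AWS credentials and a Region are already configured; the ARN is a placeholder, and the variable names `company` and `document_content` and the helper names are hypothetical, so replace them with the values from your own prompt.

```python
def build_converse_request(prompt_arn: str, variables: dict[str, str]) -> dict:
    """Build Converse keyword arguments that target a managed prompt version."""
    return {
        # The prompt (or prompt version) ARN goes where a model ID normally would.
        "modelId": prompt_arn,
        # Each variable value is wrapped in a {"text": ...} object.
        "promptVariables": {name: {"text": value} for name, value in variables.items()},
    }


def invoke_prompt(client, prompt_arn: str, variables: dict[str, str]) -> str:
    """Call Converse and return the concatenated text blocks of the reply."""
    response = client.converse(**build_converse_request(prompt_arn, variables))
    blocks = response["output"]["message"]["content"]
    return "".join(block["text"] for block in blocks if "text" in block)


def main() -> None:
    import boto3  # imported here so the helpers above work without the SDK installed

    client = boto3.client("bedrock-runtime")
    # Placeholder ARN and hypothetical variable names; replace with your own.
    prompt_arn = "arn:aws:bedrock:us-east-1:111122223333:prompt/PROMPT12345:1"
    summary = invoke_prompt(
        client,
        prompt_arn,
        {"company": "Example Corp", "document_content": "<document text>"},
    )
    print(summary)
```

Call `main()` to run the example against your account. Keeping the request construction in its own function makes it easy to inspect or unit test without making a network call.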

We've passed the prompt's Amazon Resource Name (ARN) in the model ID parameter and the prompt variables as a separate parameter, and Amazon Bedrock loads our prompt version directly from our prompt management library to run the invocation without latency overheads. This approach simplifies the workflow by allowing direct invocation through the Converse or InvokeModel APIs, eliminating manual retrieval and formatting. It also allows teams to reuse and share prompts and keep track of different versions.

For more information about using these features, including the required permissions, see the documentation.

You can also invoke prompts in other ways:

Now available

Amazon Bedrock Prompt Management is now generally available in the US East (N. Virginia), US West (Oregon), Europe (Paris), Europe (Ireland), Europe (Frankfurt), Europe (London), South America (Sao Paulo), Asia Pacific (Mumbai), Asia Pacific (Tokyo), Asia Pacific (Singapore), Asia Pacific (Sydney), and Canada (Central) AWS Regions. For pricing information, see Amazon Bedrock Pricing.

Conclusion

The general availability of Amazon Bedrock Prompt Management introduces powerful capabilities that enhance the development of generative AI applications. By providing a centralized platform to create, customize, and manage prompts, it lets developers streamline their workflows and work toward improving prompt performance. The ability to define system instructions, configure tools, and compare prompt variants allows teams to craft effective prompts tailored to their specific use cases. With seamless integration into the Amazon Bedrock Converse API and support for popular frameworks, organizations can now build and deploy AI solutions that are more likely to generate relevant output.

About the authors

Danny Mitchell is a solutions architect specializing in generative AI at AWS, focusing on computer vision use cases and helping accelerate EMEA businesses on their machine learning and generative AI journeys with Amazon SageMaker and Amazon Bedrock.

Ignacio Sanchez is a solutions architect specializing in artificial intelligence and machine learning at AWS. He combines his skills in extended reality and artificial intelligence to help companies improve the way people interact with technology, making it accessible and more enjoyable for end users.
