Video generation has quickly become a focal point of artificial intelligence research, especially the generation of high-fidelity, temporally consistent videos. The task involves creating video sequences that stay visually consistent between frames and preserve detail over time. Machine learning models, particularly diffusion transformers (DiTs), have become powerful tools for this task, surpassing earlier methods such as GANs and VAEs in quality. However, as these models grow more complex, the computational cost and latency of generating high-resolution videos have become a significant challenge. Researchers are now focusing on making these models more efficient to enable faster, real-time video generation while maintaining quality standards.

A pressing problem in video generation is the resource-intensive nature of current high-quality models. Generating complex, visually appealing videos requires significant processing power, especially with large models that handle longer, high-resolution video sequences. These demands slow down inference, making real-time generation difficult. Many video applications need models that process data quickly while delivering high fidelity across all frames. The core difficulty is balancing processing speed against output quality: faster methods often compromise detail, while high-quality methods tend to be computationally heavy and slow.

Over time, several methods have been introduced to optimize video generation models, with the aim of speeding up computation and reducing resource use. Traditional approaches such as step distillation, latent diffusion, and caching have contributed to this goal. Step distillation, for example, reduces the number of steps required to reach a given quality by condensing complex tasks into simpler forms. Latent diffusion techniques aim to improve the overall quality-latency trade-off. Caching techniques store previously computed results so that later steps can reuse them instead of repeating the calculation. However, these approaches have limitations, such as a lack of flexibility to adapt to the unique characteristics of each video sequence. This often leads to inefficiencies, particularly with videos that vary greatly in complexity, motion, and texture.
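To make the limitation of content-agnostic caching concrete, here is a minimal Python sketch of a fixed caching schedule. It is an illustration under stated assumptions, not code from any of the methods mentioned: `run_with_fixed_cache`, `toy_block`, and the `recompute_every` parameter are hypothetical names, and the "expensive block" is a stand-in for a transformer computation.

```python
from typing import Callable, List, Tuple


def run_with_fixed_cache(
    num_steps: int,
    recompute_every: int,
    expensive_block: Callable[[int], float],
) -> Tuple[List[float], int]:
    """Run num_steps steps, recomputing the block only every recompute_every steps.

    Returns the per-step outputs and the number of real computations performed.
    """
    outputs: List[float] = []
    cached = None
    n_computed = 0
    for step in range(num_steps):
        if cached is None or step % recompute_every == 0:
            cached = expensive_block(step)  # real computation
            n_computed += 1
        outputs.append(cached)  # otherwise reuse the stored result
    return outputs, n_computed


def toy_block(step: int) -> float:
    # Hypothetical stand-in for an expensive transformer computation.
    return 1.0 / (1 + step)


outputs, n_computed = run_with_fixed_cache(
    num_steps=12, recompute_every=3, expensive_block=toy_block
)
print(f"computed {n_computed} of {len(outputs)} steps")
```

The schedule here depends only on the step index, so a static scene and a fast-moving one receive exactly the same compute budget. That is the inflexibility described above.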

Researchers from Meta AI and Stony Brook University introduced a solution called Adaptive Caching (AdaCache), which accelerates video diffusion transformers without additional training. AdaCache is a training-free technique that can be integrated into various video DiT models to reduce processing time by dynamically caching computations. By adapting to the unique needs of each video, AdaCache allocates computational resources where they are most effective. It is designed to reduce latency while preserving video quality, making it a flexible plug-and-play solution for improving performance across different video generation models.

AdaCache operates by caching certain residual computations within the transformer architecture, allowing these computations to be reused across multiple steps. This approach is particularly efficient because it avoids redundant processing steps, a common bottleneck in video generation tasks. The method uses a caching schedule tailored to each video to determine the best points at which to recompute or reuse the residual data. This schedule is based on a metric that evaluates the rate of data change between frames. Additionally, the researchers incorporated a motion regularization mechanism (MoReg) into AdaCache, which allocates more computational resources to high-motion scenes that require greater attention to detail. Using a lightweight distance metric and a motion-based regularization factor, AdaCache balances the trade-off between speed and quality, adjusting the computation to the motion content of the video.
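The idea of a per-video schedule driven by a distance metric and a motion factor can be sketched as follows. This is a simplified illustration, not the authors' implementation: the codebook thresholds, the way the motion score scales the change rate, and all function names are assumptions made for the example. It also assumes the change rate is measured between residuals at consecutive recomputed steps, and it only plans which steps are recomputed (a real system would reuse the cached residual at the skipped steps).

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical codebook: (upper bound on change rate, steps to advance before
# the next recomputation). Small change: reuse the cache for longer.
CODEBOOK: Sequence[Tuple[float, int]] = ((0.05, 4), (0.15, 2), (float("inf"), 1))


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two residual vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def reuse_steps(change_rate: float, motion_score: float) -> int:
    """Map a change rate to a step interval; more motion means sooner recompute."""
    adjusted = change_rate * (1.0 + motion_score)  # assumed form of the motion factor
    for upper, steps in CODEBOOK:
        if adjusted < upper:
            return steps
    return 1


def adaptive_schedule(
    num_steps: int,
    residual_fn: Callable[[int], List[float]],
    motion_score: float,
) -> List[int]:
    """Return the step indices at which the residual is actually recomputed."""
    computed_at = [0]
    prev_step, prev_res = 0, residual_fn(0)
    step = 1  # step 1 is always computed to obtain a first change estimate
    while step < num_steps:
        res = residual_fn(step)
        computed_at.append(step)
        rate = l1_distance(res, prev_res) / (step - prev_step)
        prev_step, prev_res = step, res
        step += reuse_steps(rate, motion_score)
    return computed_at


def toy_residual(step: int) -> List[float]:
    # Hypothetical residual that changes quickly at first, then settles.
    return [math.exp(-0.3 * step)] * 4


low_motion = adaptive_schedule(20, toy_residual, motion_score=0.0)
high_motion = adaptive_schedule(20, toy_residual, motion_score=2.0)
print("low motion recomputes at:", low_motion)
print("high motion recomputes at:", high_motion)
```

In this toy setup the high-motion schedule recomputes at more steps than the low-motion one, which mirrors the stated goal of spending more computation where the content changes faster.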

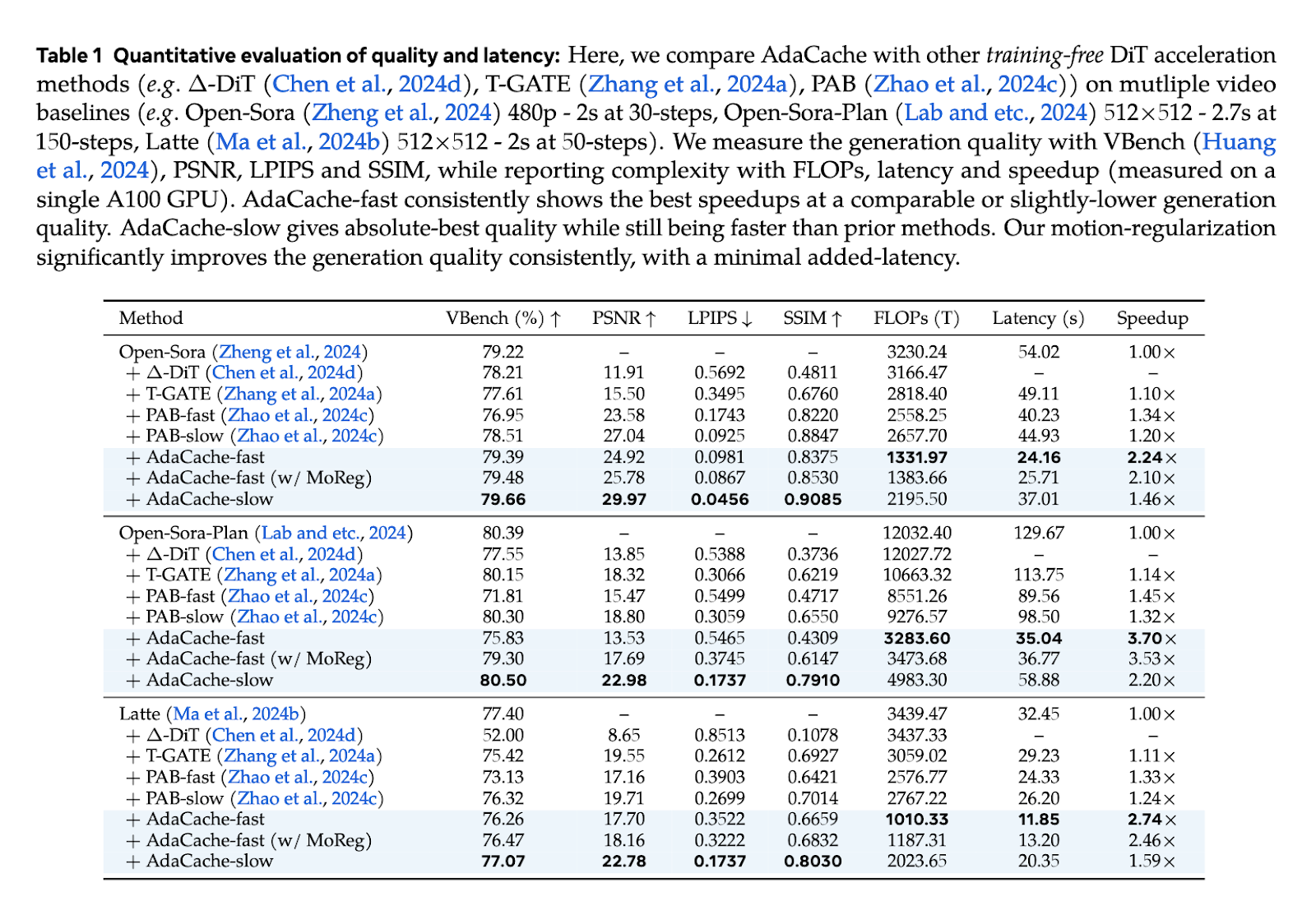

The research team conducted a series of tests to evaluate AdaCache. The results showed that AdaCache substantially improved processing speed while retaining quality across multiple video generation models. For example, in a test involving Open-Sora's 2-second 720p video generation, AdaCache achieved a speedup of up to 4.7x over previous methods while maintaining comparable video quality. Additionally, AdaCache variants such as “AdaCache-fast” and “AdaCache-slow” offer options based on speed or quality needs. With MoReg, AdaCache demonstrated improved quality, closely aligning with human preferences in visual evaluations and outperforming traditional caching methods. Speed tests on different DiT models also favored AdaCache, with speedups ranging from 1.46x to 4.7x depending on configuration and quality requirements.

In conclusion, AdaCache marks a significant advancement in video generation, providing a flexible solution to the long-standing problem of balancing latency and video quality. By employing adaptive caching and motion-based regularization, the researchers offer a method that is efficient and practical for a wide range of real-world applications in real-time, high-quality video production. The plug-and-play nature of AdaCache allows it to enhance existing video generation systems without requiring extensive retraining or customization, making it a promising tool for future video generation.

Check out the Paper, Code, and Project. All credit for this research goes to the researchers of this project.

Nikhil is an internal consultant at Marktechpost. He is pursuing an integrated double degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
