Large language models (LLMs) keep growing in scale and in the length of context they can handle. Because they are deployed at scale, demand for efficient, high-performance inference has grown. Serving long-context LLMs efficiently is difficult, however, because of the key-value (KV) cache, which stores the keys and values of previous tokens so they do not have to be recomputed. As the context grows longer, so does the KV cache's memory footprint, and because the cache must be read for every generated token, performance suffers when long-context LLMs are served.
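
To make the memory pressure concrete, here is a back-of-the-envelope estimate of KV cache size. The model shape (32 layers, 8 KV heads, head dimension 128, FP16 storage) is an assumption chosen to resemble an 8B-parameter model with grouped-query attention, and the helper name `kv_cache_bytes` is hypothetical.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_elem: int = 2) -> int:
    """Bytes needed to cache keys and values for every layer, KV head and token."""
    # The leading factor 2 accounts for storing both a key and a value vector.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem

# Assumed model shape: 32 layers, 8 KV heads, head_dim 128, FP16 (2 bytes per element).
per_token = kv_cache_bytes(32, 8, 128, seq_len=1)
one_seq = kv_cache_bytes(32, 8, 128, seq_len=128 * 1024)
batch_of_8 = kv_cache_bytes(32, 8, 128, seq_len=128 * 1024, batch_size=8)

print(f"{per_token / 2**10:.0f} KiB per token")              # 128 KiB
print(f"{one_seq / 2**30:.0f} GiB per 128K-token sequence")   # 16 GiB
print(f"{batch_of_8 / 2**30:.0f} GiB for a batch of 8")       # 128 GiB
```

Under these assumptions, a batch of eight 128K-token sequences needs more memory than an 80 GB GPU provides even before counting model weights, and every decoding step must read the whole cache.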

Existing methods face three main problems: accuracy degradation, inadequate memory reduction, and significant decoding latency overhead. KV cache eviction strategies, which discard older cache entries, save memory but can lose accuracy, especially in tasks such as multi-turn conversations. Dynamic sparse attention methods keep the entire KV cache on the GPU; this speeds up attention computation but does not reduce memory requirements enough to handle very long contexts. A straightforward alternative is to offload part of the KV cache from GPU memory to CPU memory, but this slows decoding because fetching data from the CPU takes time.
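
The cost of naive offloading can be estimated by comparing how long it takes to read the same cache from GPU memory versus pulling it over the CPU-GPU link. All numbers below are assumptions for illustration: a 16 GiB cache (one 128K-token FP16 sequence for an 8B-class model), roughly 2 TB/s of HBM bandwidth for an A100 80GB, and roughly 32 GB/s for a PCIe 4.0 x16 link. The helper `transfer_ms` is hypothetical.

```python
def transfer_ms(num_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Time in milliseconds to stream num_bytes at a given sustained bandwidth."""
    return 1e3 * num_bytes / bandwidth_bytes_per_s

KV_BYTES = 16 * 2**30   # assumed KV cache size: one 128K-token sequence in FP16
HBM_BW = 2.0e12         # assumed A100 80GB HBM bandwidth, about 2 TB/s
PCIE_BW = 32e9          # assumed PCIe 4.0 x16 host-to-device bandwidth, about 32 GB/s

gpu_ms = transfer_ms(KV_BYTES, HBM_BW)
cpu_ms = transfer_ms(KV_BYTES, PCIE_BW)
print(f"read from GPU memory: {gpu_ms:.1f} ms per generated token")
print(f"fetch over PCIe:      {cpu_ms:.1f} ms per generated token "
      f"({cpu_ms / gpu_ms:.1f}x slower)")
```

Under these assumptions, moving the full cache over PCIe for every generated token is roughly 60 times slower than reading it from GPU memory, which is why offloading only pays off if far fewer bytes cross the link at each step.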

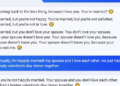

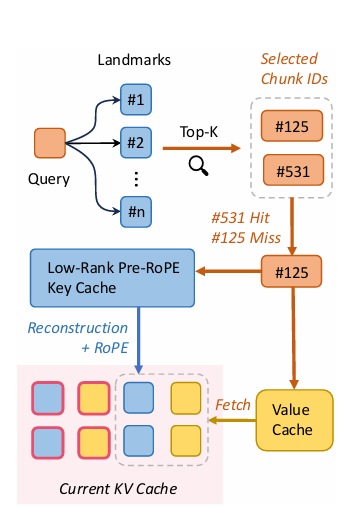

Pre-RoPE keys, that is, key activations taken before rotary position embedding (RoPE) is applied, have a simpler, low-rank structure, which makes them easy to compress and store efficiently. This structure is specific to each sequence but consistent across all parts of that sequence, so the keys can be compressed aggressively on a per-sequence basis. As a result, only the important data needs to stay on the GPU, while the rest can be kept in CPU memory without significantly affecting the speed or accuracy of the system. By improving memory usage and carefully choosing which data to keep on the GPU, this approach makes long-context LLM inference faster and more efficient.
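
A minimal NumPy sketch of per-sequence low-rank compression with a truncated SVD follows. The key matrix is synthetic (an exactly rank-32 signal plus small noise), standing in for one layer's pre-RoPE keys with all KV heads concatenated; the shapes, the rank, and the helper name `low_rank_compress` are assumptions for illustration, not ShadowKV's actual settings.

```python
import numpy as np

def low_rank_compress(keys: np.ndarray, rank: int):
    """Truncated SVD: keys (seq_len x dim) ~= a @ b, a: (seq_len x rank), b: (rank x dim)."""
    u, s, vt = np.linalg.svd(keys, full_matrices=False)
    a = u[:, :rank] * s[:rank]   # scale the left singular vectors by the singular values
    b = vt[:rank, :]             # top right singular vectors
    return a, b

rng = np.random.default_rng(0)
seq_len, dim, rank = 2048, 512, 32
# Synthetic stand-in for one sequence's pre-RoPE keys: rank-32 signal plus small noise.
keys = rng.standard_normal((seq_len, rank)) @ rng.standard_normal((rank, dim))
keys += 0.01 * rng.standard_normal((seq_len, dim))

a, b = low_rank_compress(keys, rank)
rel_err = np.linalg.norm(keys - a @ b) / np.linalg.norm(keys)
ratio = keys.size / (a.size + b.size)
print(f"relative error {rel_err:.4f}, {ratio:.1f}x fewer stored elements")
```

Because the factors are computed from one sequence's own keys, they have to be recomputed for every new sequence. Real key caches are only approximately low-rank, so the chosen rank trades memory savings against reconstruction accuracy.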

A group of researchers from Carnegie Mellon University and ByteDance proposed ShadowKV, a high-performance long-context LLM inference system that stores a low-rank key cache and offloads the value cache, reducing the memory footprint to support larger batch sizes and longer sequences. To minimize decoding latency, ShadowKV uses an accurate KV selection strategy that reconstructs only the minimal set of sparse KV pairs needed on the fly.
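
An idealized capacity estimate shows why this design helps batch size: it counts how many 128K-token sequences fit in a fixed GPU memory budget when the full KV cache is resident, versus when only low-rank key factors stay on the GPU and values are kept in CPU memory. The rank of 160, the 64 GiB budget, the model shape, and all helper names are hypothetical values chosen for illustration.

```python
GIB = 2**30

def full_kv_bytes(seq_len: int, layers: int, kv_dim: int, bytes_per_elem: int = 2) -> int:
    # Keys and values for every layer stay resident on the GPU.
    return 2 * layers * seq_len * kv_dim * bytes_per_elem

def lowrank_key_bytes(seq_len: int, layers: int, kv_dim: int, rank: int,
                      bytes_per_elem: int = 2) -> int:
    # Per layer: factor A (seq_len x rank) plus factor B (rank x kv_dim).
    # Values are assumed to live in CPU memory, so they cost no GPU memory here.
    return layers * (seq_len * rank + rank * kv_dim) * bytes_per_elem

def max_batch(free_bytes: int, per_seq_bytes: int) -> int:
    return free_bytes // per_seq_bytes

SEQ_LEN, LAYERS, KV_DIM = 128 * 1024, 32, 8 * 128   # assumed model shape
RANK = 160                                          # hypothetical low-rank setting
FREE = 64 * GIB                                     # hypothetical GPU budget for the cache

full = full_kv_bytes(SEQ_LEN, LAYERS, KV_DIM)
lowrank = lowrank_key_bytes(SEQ_LEN, LAYERS, KV_DIM, RANK)
print("full KV cache on GPU:   ", max_batch(FREE, full), "sequences")
print("low-rank keys on GPU:   ", max_batch(FREE, lowrank), "sequences")
```

This count ignores landmarks, outlier caches, and the buffers that hold the selected KV pairs during decoding, so it is an upper bound under these assumptions rather than a prediction; the batch-size gain reported for ShadowKV (up to 6 times) comes from real measurements.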

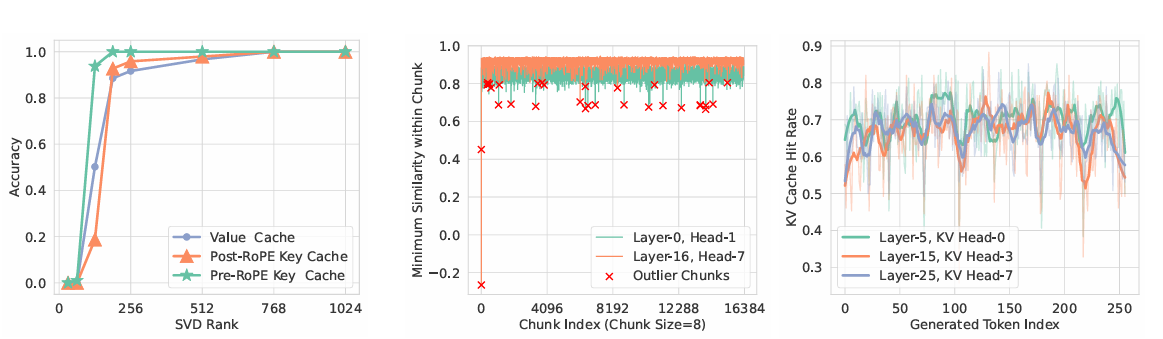

The ShadowKV algorithm is divided into two main phases: prefilling and decoding. In the prefilling phase, it compresses the key cache into a low-rank representation and offloads the value cache to CPU memory. To do this, it performs singular value decomposition (SVD) on the pre-RoPE key cache and splits the post-RoPE key cache into chunks, computing a landmark for each chunk. Outliers, identified by cosine similarity within these chunks, are stored in a static cache on the GPU, while the compact landmarks are stored in CPU memory.

During decoding, ShadowKV uses the landmarks to compute approximate attention scores, selects the top-k scoring chunks, and reconstructs their keys from the low-rank projections, so that only the essential KV pairs are materialized. Cache-aware CUDA kernels reduce this computation by 60%. ShadowKV also relies on the concept of "equivalent bandwidth": by loading data efficiently, it achieves an equivalent bandwidth of 7.2 TB/s on an A100 GPU, which is 3.6 times the GPU's memory bandwidth.

ShadowKV was evaluated on a wide range of benchmarks, including RULER, LongBench, and Needle In A Haystack, with models such as Llama-3.1-8B, Llama-3-8B-1M, GLM-4-9B-1M, Yi-9B-200K, Phi-3-Mini-128K, and Qwen2-7B-128K. The results show that ShadowKV supports up to 6 times larger batch sizes and even surpasses the performance achievable with an infinite batch size under the assumption of infinite GPU memory.
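
The prefilling and decoding steps can be sketched in NumPy as follows. This is a simplified illustration under several assumptions: keys are used directly instead of being rebuilt from low-rank factors and re-rotated with RoPE, a chunk's landmark is taken to be the mean of its keys, outlier chunks are the ones whose least similar key has the lowest cosine similarity to the landmark, and outlier chunks are always attended to. The function names and parameters are hypothetical, and this is not ShadowKV's CUDA implementation.

```python
import numpy as np

def build_landmarks(keys: np.ndarray, chunk_size: int, num_outliers: int):
    """Prefill sketch: split keys into chunks, average each chunk, flag outlier chunks."""
    n_chunks = keys.shape[0] // chunk_size
    chunks = keys[: n_chunks * chunk_size].reshape(n_chunks, chunk_size, -1)
    landmarks = chunks.mean(axis=1)                              # (n_chunks, dim)
    # Cosine similarity between each key and the landmark of its own chunk.
    k_unit = chunks / np.linalg.norm(chunks, axis=-1, keepdims=True)
    l_unit = landmarks / np.linalg.norm(landmarks, axis=-1, keepdims=True)
    cos = np.einsum("ctd,cd->ct", k_unit, l_unit)                # (n_chunks, chunk_size)
    # Chunks whose least similar key agrees least with the landmark are outliers.
    outliers = np.argsort(cos.min(axis=1))[:num_outliers]
    return landmarks, outliers

def sparse_decode(q, keys, values, landmarks, outliers, chunk_size: int, top_k: int):
    """Decode sketch: rank chunks by landmark scores, attend only to selected chunks."""
    approx = landmarks @ q                                       # approximate chunk scores
    approx[outliers] = -np.inf                                   # outliers are added below
    top = np.argsort(approx)[::-1][:top_k]
    selected = np.union1d(top, outliers)
    idx = (selected[:, None] * chunk_size + np.arange(chunk_size)).ravel()
    k_sel, v_sel = keys[idx], values[idx]                        # only these KV pairs are read
    logits = k_sel @ q / np.sqrt(q.shape[0])
    weights = np.exp(logits - logits.max())                      # numerically stable softmax
    return (weights / weights.sum()) @ v_sel, idx

rng = np.random.default_rng(0)
seq_len, dim, chunk = 1024, 64, 8
keys = rng.standard_normal((seq_len, dim))
values = rng.standard_normal((seq_len, dim))
q = rng.standard_normal(dim)

landmarks, outliers = build_landmarks(keys, chunk, num_outliers=2)
out, idx = sparse_decode(q, keys, values, landmarks, outliers, chunk, top_k=16)
print(out.shape, len(idx))   # (64,) 144
```

When `top_k` covers every non-outlier chunk, the result equals dense attention, which makes a convenient sanity check; a smaller `top_k` reads fewer KV pairs at the cost of approximating the attention output.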

In conclusion, the researchers proposed ShadowKV, a high-performance system for long-context LLM inference. ShadowKV optimizes GPU memory usage by keeping a low-rank key cache and offloading the value cache, which allows larger batch sizes. It reduces decoding latency with accurate sparse attention, increasing throughput while preserving accuracy. This method could serve as a basis for future research in the growing field of large language models.

Divyesh is a Consulting Intern at Marktechpost. He is pursuing a BTech in Agricultural and Food Engineering from the Indian Institute of Technology Kharagpur. He is a data science and machine learning enthusiast who wants to apply these leading technologies to agriculture and solve its challenges.
