Recent advances in large language models (LLMs) have demonstrated exceptional natural language generation and understanding capabilities. Research has also explored abilities of LLMs that go beyond their primary training task of text prediction. These models have shown promise in tasks that require calling software APIs, a direction supported by the release of GPT-4 plugin functions. Integrated tools include web browsers, translation systems, dialogue state tracking (DST), and robotics. While LLMs show promising results in general complex reasoning, they still struggle with mathematical problem solving and logical reasoning. To address this, researchers have proposed techniques such as function calling, which lets LLMs execute provided functions and use their results to complete various tasks. These functions range from basic tools, such as calculators that perform arithmetic operations, to more advanced methods. However, when a task uses only a small portion of the available APIs, relying solely on large models is inefficient, because they demand substantial computational power and cost for both training and inference. This calls for smaller, task-specific LLMs that keep the core functionality while reducing operational costs. Promising as it is, the trend toward smaller models introduces new challenges of its own.

Current methods rely on large-scale LLMs for reasoning tasks, which is resource-intensive and expensive. Because of their generalized nature, these models often struggle with specific logical and mathematical problems.

The proposed method introduces a novel framework for training smaller LLMs on function calling, focusing on specific reasoning tasks. The approach employs an agent that queries the LLM by injecting descriptions and examples of usable functions into the prompt, creating a dataset of correct and incorrect reasoning-chain completions.
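The injection step can be illustrated with a short sketch. It is not the authors' code: the `Function` record, `build_prompt`, the prompt wording, and the arithmetic tools `Add`, `Multiply`, and `Stop` are all hypothetical, chosen only to show how function descriptions and usage examples might be placed in front of a problem.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Function:
    """A tool the LLM may call (hypothetical structure, for illustration)."""
    name: str
    description: str
    example: str
    impl: Callable[..., object]


def build_prompt(problem: str, functions: List[Function]) -> str:
    """Inject each function's description and usage example ahead of the problem."""
    lines = ["You can solve the problem by calling these functions, one per step:"]
    for f in functions:
        lines.append(f"- {f.name}: {f.description} Example: {f.example}")
    lines.append("")
    lines.append(f"Problem: {problem}")
    return "\n".join(lines)


# Hypothetical tools for a math task; the article does not list the real ones.
FUNCTIONS = [
    Function("Add", "Returns a + b.", "Add(a=2, b=3)", lambda a, b: a + b),
    Function("Multiply", "Returns a * b.", "Multiply(a=2, b=3)", lambda a, b: a * b),
    Function("Stop", "Ends the chain with a final answer.", "Stop(answer=5)",
             lambda answer: answer),
]

if __name__ == "__main__":
    print(build_prompt("What is (2 + 3) * 4?", FUNCTIONS))
```

Keeping the description, example, and implementation in one record means the same registry can drive both the prompt text and the execution of calls.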

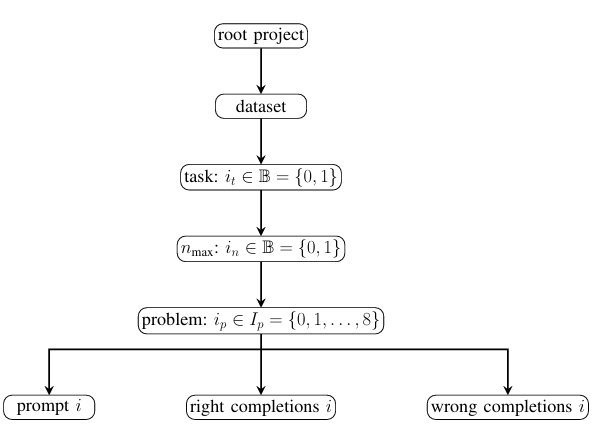

To address the drawbacks of large LLMs, which incur excessive training and inference costs, a group of researchers introduced a novel framework that uses the function-calling capabilities of large models to train smaller language models for specific logical and mathematical reasoning tasks. Given a problem and a set of functions useful for solving it, an agent queries a large-scale LLM by injecting function descriptions and examples into the prompt, and manages the function calls needed to reach the solution, all within a step-by-step chain of reasoning. This procedure produces a dataset of correct and incorrect completions. The dataset is then used to train a smaller model with Direct Preference Optimization (DPO), a preference-based alternative to reinforcement learning from human feedback (RLHF) that learns directly from pairs of preferred and rejected outputs. The methodology was tested on two reasoning tasks, first-order logic (FOL) and mathematics, using a custom set of FOL problems inspired by a HuggingFace dataset.
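The agent loop and the labelling of completions can be sketched as follows. This is an illustration under stated assumptions, not the Microchain API or the authors' implementation: the `TOOLS` table, the `Name(key=value)` call syntax, `run_chain`, `make_pair`, and the scripted stand-in for the large LLM are all hypothetical. A completion is treated as correct when its final `Stop` answer matches the ground truth, and one correct plus one incorrect completion form a chosen/rejected pair.

```python
import re
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tools; the article does not specify the real task functions.
TOOLS: Dict[str, Callable[..., float]] = {
    "Add": lambda a, b: a + b,
    "Multiply": lambda a, b: a * b,
}

CALL_RE = re.compile(r"^(\w+)\((.*)\)$")


def parse_call(text: str) -> Tuple[str, Dict[str, float]]:
    """Parse 'Add(a=2, b=3)' into ('Add', {'a': 2.0, 'b': 3.0})."""
    match = CALL_RE.match(text.strip())
    if match is None:
        raise ValueError(f"not a function call: {text!r}")
    name, arg_str = match.groups()
    kwargs: Dict[str, float] = {}
    for part in arg_str.split(","):
        if part.strip():
            key, value = part.split("=")
            kwargs[key.strip()] = float(value)
    return name, kwargs


def run_chain(llm: Callable[[str], str], prompt: str,
              max_steps: int = 10) -> Tuple[str, Optional[float]]:
    """Ask the LLM for one call per step, execute it, and feed the result back."""
    steps: List[str] = []
    for _ in range(max_steps):
        call = llm(prompt + "\n" + "\n".join(steps))
        name, kwargs = parse_call(call)
        if name == "Stop":
            steps.append(call)
            return "\n".join(steps), kwargs.get("answer")
        result = TOOLS[name](**kwargs)
        steps.append(f"{call} -> {result}")
    return "\n".join(steps), None  # the chain never called Stop


def make_pair(prompt: str, runs: List[Tuple[str, Optional[float]]],
              gold: float) -> Optional[Dict[str, str]]:
    """Label completions by final answer and keep one correct/incorrect pair."""
    chosen = [c for c, a in runs if a == gold]
    rejected = [c for c, a in runs if a != gold]
    if chosen and rejected:
        return {"prompt": prompt, "chosen": chosen[0], "rejected": rejected[0]}
    return None


def scripted_llm(calls: List[str]) -> Callable[[str], str]:
    """Stand-in for the large LLM: replays a fixed list of calls."""
    it = iter(calls)
    return lambda _context: next(it)


if __name__ == "__main__":
    prompt = "Problem: What is (2 + 3) * 4?"
    good = run_chain(scripted_llm(
        ["Add(a=2, b=3)", "Multiply(a=5, b=4)", "Stop(answer=20)"]), prompt)
    bad = run_chain(scripted_llm(
        ["Add(a=2, b=3)", "Add(a=5, b=4)", "Stop(answer=9)"]), prompt)
    print(make_pair(prompt, [good, bad], gold=20.0))
```

In a real pipeline, `scripted_llm` would be replaced by queries to the large model, and several completions would be sampled per problem so that both correct and incorrect chains are available for pairing.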

The framework comprises four stages. First, tasks and problems are defined to evaluate the abilities of LLMs across different reasoning domains. Next, task-specific functions are configured that let the LLM solve reasoning steps, manage the flow of the chain, and verify results. A pre-trained large-scale LLM is then used to generate a dataset of correct and incorrect completions with a chain-of-thought approach. Finally, a smaller LLM is fine-tuned on this dataset with the Direct Preference Optimization (DPO) algorithm. The experiments covered first-order logic (FOL) and mathematical problems, with completions generated using Microchain, an agent-based library that queries LLMs with predefined functions to build a chain-of-thought dataset.
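The final stage relies on the standard DPO objective: for each pair, the loss is -log sigmoid(β · [(log π(chosen) − log π_ref(chosen)) − (log π(rejected) − log π_ref(rejected))]), which rewards the fine-tuned policy for raising the correct completion's likelihood, relative to a frozen reference model, more than the incorrect one's. The sketch below computes that loss from precomputed sequence log-probabilities; β = 0.1 is an illustrative value, not a setting reported in the article, and in practice the log-probabilities would come from the small model and its frozen copy.

```python
import math
from typing import Sequence


def neg_log_sigmoid(x: float) -> float:
    """Numerically stable -log(sigmoid(x)) = log(1 + exp(-x))."""
    return max(-x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair.

    Each argument is the summed log-probability of a full completion under
    the trainable policy or the frozen reference model.
    """
    chosen_reward = beta * (policy_chosen - ref_chosen)
    rejected_reward = beta * (policy_rejected - ref_rejected)
    return neg_log_sigmoid(chosen_reward - rejected_reward)


def mean_dpo_loss(pairs: Sequence[Sequence[float]], beta: float = 0.1) -> float:
    """Average the per-pair loss over a batch of (pc, pr, rc, rr) tuples."""
    return sum(dpo_loss(*p, beta=beta) for p in pairs) / len(pairs)
```

When the policy still equals the reference, every pair contributes log 2; the loss falls below that only as the policy shifts probability toward the correct completions.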

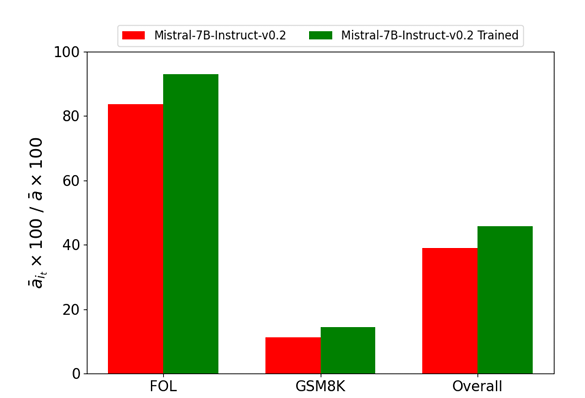

Data augmentation was performed to expand the dataset, and Mistral-7B was fine-tuned on a single GPU. Performance metrics showed improved accuracy on FOL tasks and moderate gains on mathematical tasks, with statistical significance confirmed by a Wilcoxon test.
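The article does not say which paired measurements entered the Wilcoxon test. As a sketch, assuming paired scores (for example per problem or per run) for the base and the fine-tuned model, the Wilcoxon signed-rank test can be computed as below. This version uses the large-sample normal approximation without tie or continuity correction, so its p-values for small samples will differ from exact implementations such as `scipy.stats.wilcoxon`.

```python
import math
from typing import List, Tuple


def average_ranks(values: List[float]) -> List[float]:
    """Rank values in ascending order (1-based), averaging ranks across ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def wilcoxon_signed_rank(before: List[float],
                         after: List[float]) -> Tuple[float, float]:
    """Return (W+, two-sided p) via the normal approximation."""
    diffs = [y - x for x, y in zip(before, after) if y != x]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))  # = 2 * (1 - Phi(|z|))
    return w_plus, p_two_sided
```

A signed-rank test suits this setting because it compares paired results without assuming the score differences are normally distributed.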

In conclusion, the researchers proposed a new framework to improve the function-calling capabilities of small-scale LLMs, focusing on specific logical and mathematical reasoning tasks. The method reduces the need for large models and improves performance on logic- and math-related tasks. The experimental results show significant improvements for the small-scale model on FOL tasks, with near-perfect accuracy in most cases. Future work could apply the framework to a broader range of reasoning tasks and function types.

Check out the Paper. All credit for this research goes to the researchers of this project.


Divyesh is a Consulting Intern at Marktechpost. He is pursuing a BTech in Agricultural and Food Engineering from the Indian Institute of Technology Kharagpur. He is a data science and machine learning enthusiast who wants to apply these leading technologies to solve challenges in agriculture.
