In recent years, text-to-speech (TTS) technology has made significant progress, but numerous challenges remain. Autoregressive (AR) systems, while offering diverse prosody, tend to suffer from robustness issues and slow inference speeds. On the other hand, non-autoregressive (NAR) models require explicit alignment between text and speech during training, which can lead to unnatural results. The new Masked Generative Codec Transformer (MaskGCT) addresses these issues by eliminating the need for explicit text-speech alignment and duration prediction at the phone level. This novel approach aims to simplify the process while maintaining or even improving the quality and expressiveness of the generated speech.

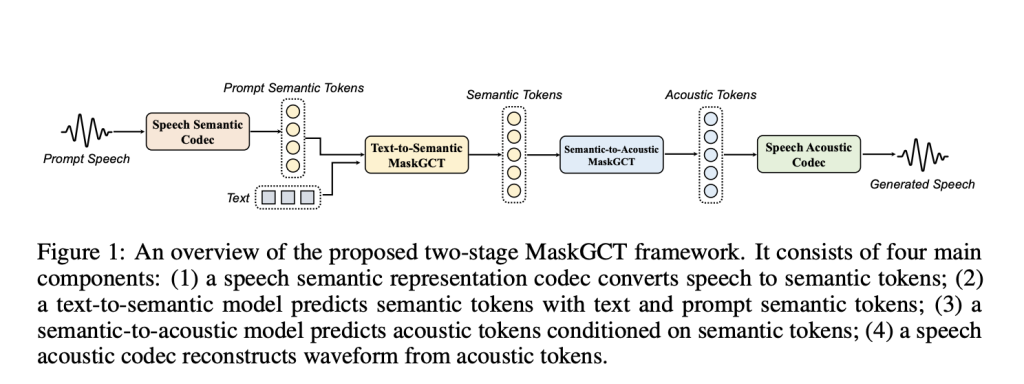

MaskGCT is a new state-of-the-art, open-source TTS model available on Hugging Face. It offers features such as zero-shot voice cloning and emotional TTS, and can synthesize speech in both English and Chinese. The model was trained on 100,000 hours of in-the-wild speech data, which allows it to generate long-form speech at a variable speaking rate. In particular, MaskGCT features a fully non-autoregressive architecture. This means that the model does not predict tokens one at a time from left to right, resulting in faster inference and a simpler synthesis process. Using a two-stage approach, MaskGCT first predicts semantic tokens from the text and subsequently generates acoustic tokens conditioned on those semantic tokens.

MaskGCT uses a two-stage framework that follows a “mask and predict” paradigm. In the first stage, the model predicts semantic tokens based on the input text. These semantic tokens are extracted from a speech self-supervised learning (SSL) model. In the second stage, the model predicts acoustic tokens conditioned on the previously generated semantic tokens. This architecture allows MaskGCT to completely bypass text-speech alignment and duration prediction at the phoneme level, distinguishing it from previous NAR models. Additionally, it employs a vector quantized variational autoencoder (VQ-VAE) to quantize speech representations, minimizing information loss. The architecture is highly flexible, enabling speech generation with controllable speed and duration, and supporting applications such as cross-lingual dubbing, voice conversion, and emotion control, all in a zero-shot setting.
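To make the “mask and predict” idea concrete, here is a minimal sketch of iterative parallel decoding. It is not MaskGCT's actual implementation: `MASK`, `toy_predictor`, `mask_predict_decode`, the cosine unmasking schedule, and the confidence-based commit rule are all assumptions chosen for illustration, and the random predictor stands in for a trained transformer. The point it shows is that the output length is fixed up front and every position is predicted in parallel, with only the most confident predictions kept at each step.

```python
import math
import random

MASK = -1  # hypothetical sentinel id marking a position that is still masked


def toy_predictor(tokens, rng, vocab_size=8):
    """Stand-in for a trained model (assumption, not the real network).

    Proposes a (token_id, confidence) pair for every masked position.
    """
    return {
        i: (rng.randrange(vocab_size), rng.random())
        for i, t in enumerate(tokens)
        if t == MASK
    }


def mask_predict_decode(length, steps, predictor, seed=0):
    """Fill `length` positions in `steps` parallel refinement passes."""
    rng = random.Random(seed)
    tokens = [MASK] * length  # start from a fully masked sequence
    for step in range(1, steps + 1):
        proposals = predictor(tokens, rng)
        # Cosine schedule (assumed): number of positions left masked after this step.
        keep_masked = int(length * math.cos(math.pi / 2 * step / steps))
        n_commit = max(0, len(proposals) - keep_masked)
        # Commit the most confident proposals; the rest stay masked for later steps.
        ranked = sorted(proposals.items(), key=lambda kv: kv[1][1], reverse=True)
        for pos, (tok, _conf) in ranked[:n_commit]:
            tokens[pos] = tok
    return tokens


if __name__ == "__main__":
    print(mask_predict_decode(length=10, steps=4, predictor=toy_predictor))
```

In a two-stage setup like the one described above, a loop of this shape would run once to produce semantic tokens from text and once more to produce acoustic tokens conditioned on them. Because `length` is an input rather than something the model predicts per phoneme, changing it is one plausible way to control the total duration of the output.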

MaskGCT represents a significant advancement in TTS technology due to its simplified process, non-autoregressive approach, and robust performance across multiple languages and emotional contexts. Its training on 100,000 hours of speech data, covering various speakers and contexts, gives it considerable versatility and naturalness in the generated speech. Experimental results demonstrate that MaskGCT achieves human-level naturalness and intelligibility, outperforming other state-of-the-art TTS models on key metrics. For example, MaskGCT achieved better scores on speaker similarity (SIM-O) and word error rate (WER) than other TTS models such as VALL-E, VoiceBox, and NaturalSpeech 3. These results, along with its high-quality prosody and flexibility, make MaskGCT well suited to applications that require precision and expressiveness in speech synthesis.
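For readers unfamiliar with these two metrics, the sketch below shows how they are commonly computed. It is an illustration, not the evaluation code used for MaskGCT. WER is the word-level edit distance between a reference transcript and a hypothesis, divided by the number of reference words (lower is better). In TTS evaluation, the hypothesis is typically an ASR transcript of the synthesized audio. Speaker similarity is usually a cosine similarity between speaker embeddings (higher is better). The embedding model is not included here, so the vectors passed to `cosine_similarity` are assumed inputs, and both function names are hypothetical.

```python
import math


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


def cosine_similarity(a, b) -> float:
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    # One dropped word out of six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
    print(cosine_similarity([1.0, 0.0], [1.0, 0.0]))
```

Note that the two metrics point in opposite directions: a model that "scores better" on both has a higher similarity and a lower error rate.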

MaskGCT pushes the boundaries of what is possible in text-to-speech technology. By removing dependencies on explicit text-speech alignment and duration prediction and instead using a fully non-autoregressive and masked generative approach, MaskGCT achieves a high level of naturalness, quality, and efficiency. Its flexibility to handle voice cloning, emotional context, and bilingual synthesis makes it a game-changer for a variety of applications, including AI assistants, voice-over, and accessibility tools. With its open availability on platforms like Hugging Face, MaskGCT is not only advancing the field of TTS but also making cutting-edge technology more accessible to developers and researchers around the world.

Check out the Paper and the Model on Hugging Face. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity among the public.
