
Python, R, and SQL are often cited as the most widely used languages for processing, modeling, and exploring data. While that may be true, there’s no reason others can’t be, or aren’t being, used to do this job.

Bash is a shell for Unix and Unix-like operating systems that combines a command interpreter with its own scripting language. Bash scripts are programs written in that language. The Bash interpreter executes them sequentially, and they can include the constructs found in other programming languages, such as conditional statements, loops, and variables.
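As a minimal sketch of those constructs, the snippet below combines a variable, a loop, and a conditional in one small function. The function name label_values and the sample numbers are hypothetical, chosen only for illustration.

```bash
#!/bin/bash

# Hypothetical helper: label each integer as "high" or "low"
# relative to a threshold passed as the first argument.
label_values() {
    local threshold="$1"    # variable holding the cutoff
    shift                   # the remaining arguments are the values
    local value
    for value in "$@"; do                   # loop over the values
        if (( value > threshold )); then    # conditional
            echo "$value high"
        else
            echo "$value low"
        fi
    done
}

label_values 10 3 12 10
```

Run as is, this prints "3 low", "12 high", and "10 low", one per line.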

Common uses of Bash scripts include:

- automation of system administration tasks

- backup and maintenance

- analyzing log files and other data

- creating command line tools and utilities

Bash scripting is also used to orchestrate the deployment and management of complex distributed systems, making it an incredibly useful skill in the areas of data engineering, cloud computing environments, and DevOps.

In this article, we’re going to take a look at five different data science-related scripting tasks, which should show just how flexible and useful Bash can be.

Clean and format raw data

Here is an example Bash script to clean up and format raw data files:

#!/bin/bash

# Set the input and output file paths

input_file="raw_data.csv"

output_file="clean_data.csv"

# Remove any leading or trailing whitespace from each line

sed 's/^[ \t]*//;s/[ \t]*$//' "$input_file" > "$output_file"

# Replace any commas within quoted fields with a placeholder (a semicolon)

sed -i -E ':a; s/^(([^"]*"[^"]*")*[^"]*"[^",]*),/\1;/; ta' "$output_file"

# Remove the quotes around each field

sed -i 's/"//g' "$output_file"

echo "Data cleaning and formatting complete. Output file: $output_file"

This script:

- assumes that your raw data is in a CSV file named raw_data.csv

- saves the cleaned data as clean_data.csv

- uses the sed command (GNU sed is assumed) to:

  - remove leading and trailing whitespace from each line

  - replace commas within quoted fields with a placeholder, so that they are not mistaken for field separators

  - remove the quotes around each field

- prints a message indicating that data cleanup and formatting is complete, along with the location of the output file

Because sed works line by line, quoted fields that contain newlines or escaped quotes are not handled by this script.
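After a cleaning pass like this, one quick sanity check is to confirm that every row ends up with the same number of comma-separated fields. The sketch below is one possible way to do that with awk; the function name field_count_report is hypothetical, and it assumes the cleaned file no longer contains quoted fields.

```bash
#!/bin/bash

# Hypothetical helper: print how many rows have each field count,
# so that rows with a stray or missing comma stand out.
field_count_report() {
    local file="$1"
    awk -F',' '
        { counts[NF]++ }    # tally rows by number of fields
        END { for (n in counts) print n " fields: " counts[n] " rows" }
    ' "$file" | sort -n     # order the report by field count
}

# Example call (assumes clean_data.csv exists in the current directory):
# field_count_report clean_data.csv
```

If the report lists more than one field count, some rows probably need a closer look before the file is loaded elsewhere.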

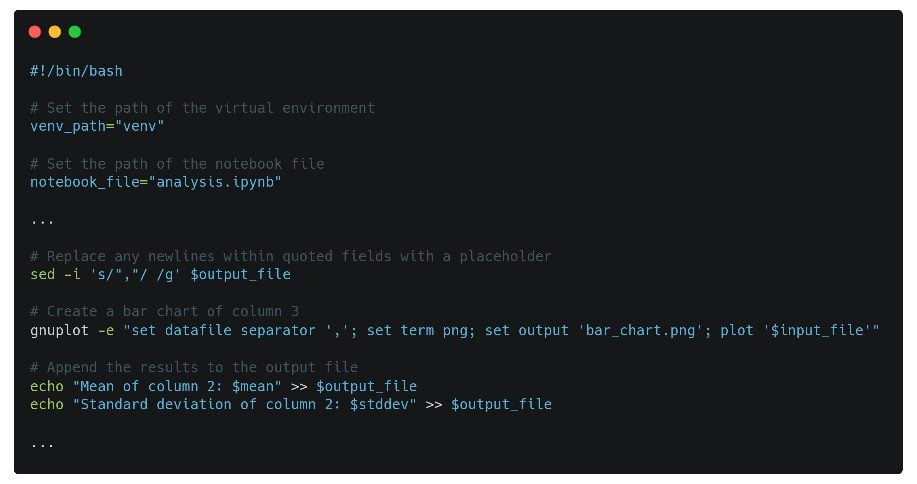

Automate data visualization

Here is an example Bash script to automate data visualization tasks:

#!/bin/bash

# Set the input file path

input_file="data.csv"

# Create a line chart of column 1 vs column 2

gnuplot -e "set datafile separator ','; set term png; set output 'line_chart.png'; plot '$input_file' using 1:2 with lines"

# Create a bar chart of column 3

gnuplot -e "set datafile separator ','; set term png; set output 'bar_chart.png'; plot '$input_file' using 3:xtic(1) with boxes"

# Create a scatter plot of column 4 vs column 5

gnuplot -e "set datafile separator ','; set term png; set output 'scatter_plot.png'; plot '$input_file' using 4:5 with points"

echo "Data visualization complete. Output files: line_chart.png, bar_chart.png, scatter_plot.png"

The above script:

- assumes your data is in a CSV file named data.csv

- uses the gnuplot command to create three different types of charts:

  - a line chart of column 1 vs. column 2

  - a bar chart of column 3, labeled with the values in column 1

  - a scatter plot of column 4 vs. column 5

- generates the charts in PNG format and saves them as line_chart.png, bar_chart.png, and scatter_plot.png, respectively

- prints a message indicating that the data visualization is complete, along with the names of the output files

Please note that for this script to work, you need to adjust the column numbers and chart types based on your data and requirements.
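One way to make those adjustments less error-prone is to build the gnuplot program from parameters instead of repeating the full command for every chart. The following is a sketch only: the helper names build_plot_cmd and render_chart are hypothetical, and the column specs in the example calls are placeholders.

```bash
#!/bin/bash

# Hypothetical helper: build a gnuplot program for one chart.
# Arguments: input CSV, gnuplot "using" spec, plot style, output PNG.
build_plot_cmd() {
    local input_file="$1" using_spec="$2" style="$3" output_file="$4"
    printf "set datafile separator ','; set term png; set output '%s'; plot '%s' using %s with %s" \
        "$output_file" "$input_file" "$using_spec" "$style"
}

# Render a chart only if gnuplot is installed and the input file exists.
render_chart() {
    if ! command -v gnuplot > /dev/null 2>&1; then
        echo "gnuplot not found; skipping $4" >&2
        return 1
    fi
    if [ ! -f "$1" ]; then
        echo "input file $1 not found; skipping $4" >&2
        return 1
    fi
    gnuplot -e "$(build_plot_cmd "$@")"
}

# Example calls (column numbers are placeholders to adjust for your data):
# render_chart data.csv 1:2 lines line_chart.png
# render_chart data.csv '3:xtic(1)' boxes bar_chart.png
```

Keeping the command construction in one function means a change to the terminal type or separator only has to be made once.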

Statistical analysis

Here is an example bash script to perform statistical analysis on a dataset:

#!/bin/bash

# Set the input file path

input_file="data.csv"

# Set the output file path

output_file="statistics.txt"

# Use awk to calculate the mean of column 1

mean=$(awk -F',' '{sum+=$1} END {print sum/NR}' $input_file)

# Use awk to calculate the standard deviation of column 1

stddev=$(awk -F',' '{sum+=$1; sumsq+=$1*$1} END {print sqrt(sumsq/NR - (sum/NR)^2)}' "$input_file")

# Append the results to the output file

echo "Mean of column 1: $mean" >> $output_file

echo "Standard deviation of column 1: $stddev" >> $output_file

# Use awk to calculate the mean of column 2

mean=$(awk -F',' '{sum+=$2} END {print sum/NR}' $input_file)

# Use awk to calculate the standard deviation of column 2

stddev=$(awk -F',' '{sum+=$2; sumsq+=$2*$2} END {print sqrt(sumsq/NR - (sum/NR)^2)}' "$input_file")

# Append the results to the output file

echo "Mean of column 2: $mean" >> $output_file

echo "Standard deviation of column 2: $stddev" >> $output_file

echo "Statistical analysis complete. Output file: $output_file"

This script:

- assumes your data is in a CSV file named data.csv

- uses the awk command to calculate the mean and standard deviation of two columns

- splits each line into fields on commas

- appends the results to a text file named statistics.txt

- prints a message indicating that the statistical analysis is complete, along with the location of the output file

Please note that you can add more awk commands to calculate other statistical values or for more columns.
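As a sketch of that idea, the function below computes the mean, standard deviation, minimum, and maximum of any one column in a single awk pass. The name column_stats is hypothetical. It assumes the file has no header row and that the chosen column is numeric, and it uses the same population standard deviation formula as the script above.

```bash
#!/bin/bash

# Hypothetical helper: summary statistics for one column of a CSV file.
# Arguments: CSV file path, 1-based column number.
column_stats() {
    local file="$1" col="$2"
    awk -F',' -v c="$col" '
        { v = $c + 0 }                  # force a numeric value
        NR == 1 { min = max = v }       # seed min and max from the first row
        {
            sum += v
            sumsq += v * v
            if (v < min) min = v
            if (v > max) max = v
        }
        END {
            if (NR == 0) exit 1         # empty file: nothing to report
            mean = sum / NR
            var = sumsq / NR - mean ^ 2
            if (var < 0) var = 0        # guard against rounding error
            printf "mean=%.2f stddev=%.2f min=%.2f max=%.2f\n", mean, sqrt(var), min, max
        }
    ' "$file"
}

# Example call (assumes data.csv exists in the current directory):
# column_stats data.csv 1
```

Calling the function once per column avoids copying the awk command for each new statistic or column.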

Manage Python package dependencies

Here is an example bash script to manage and update dependencies and packages needed for data science projects:

#!/bin/bash

# Set the path of the virtual environment

venv_path="venv"

# Activate the virtual environment

source $venv_path/bin/activate

# Update pip

pip install --upgrade pip

# Install required packages from requirements.txt

pip install -r requirements.txt

# Deactivate the virtual environment

deactivate

echo "Dependency and package management complete."

This script:

- assumes you have a virtual environment set up and a file named requirements.txt containing the names and versions of the packages you want to install

- uses the source command to activate the virtual environment at the path stored in venv_path

- uses pip to upgrade pip itself to the latest version

- installs the packages specified in the requirements.txt file

- uses the deactivate command to deactivate the virtual environment after installing the packages

- prints a message indicating that package and dependency management is complete

This script should be run every time you want to update your dependencies or install new packages for a data science project.
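The script assumes the virtual environment already exists. A small guard can create it on first use instead, as in this sketch. The function name ensure_venv is hypothetical, and it assumes python3 with the standard venv module is available on the PATH.

```bash
#!/bin/bash

# Hypothetical helper: create the virtual environment only if it is missing.
ensure_venv() {
    local venv_path="$1"
    if [ -f "$venv_path/bin/activate" ]; then
        echo "Using existing virtual environment: $venv_path"
    else
        echo "Creating virtual environment: $venv_path"
        python3 -m venv "$venv_path"
    fi
}

# Example usage (assumes requirements.txt exists in the current directory):
# ensure_venv venv && source venv/bin/activate && pip install -r requirements.txt
```

With this guard in place, the same script can serve both a fresh checkout and an existing project.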

Manage Jupyter Notebook execution

Here is an example bash script to automate the execution of Jupyter Notebook or other interactive data science environments:

#!/bin/bash

# Set the path of the notebook file

notebook_file="analysis.ipynb"

# Set the path of the virtual environment

venv_path="venv"

# Activate the virtual environment

source $venv_path/bin/activate

# Start Jupyter Notebook

jupyter-notebook $notebook_file

# Deactivate the virtual environment

deactivate

echo "Jupyter Notebook execution complete."

The above script:

- assumes you have a virtual environment set up and Jupyter Notebook installed in it

- uses the source command to activate the virtual environment at the path stored in venv_path

- uses the jupyter-notebook command to start Jupyter Notebook and open the file specified in notebook_file

- uses the deactivate command to deactivate the virtual environment after Jupyter Notebook exits

- prints a message indicating that the Jupyter Notebook execution is complete

This script should be run every time you want to run a Jupyter Notebook or another interactive data science environment.
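The script above opens the notebook in an interactive session. If the goal is instead to run every cell unattended, for example from a scheduled job, one alternative is the nbconvert tool that ships with Jupyter. This is a different technique from the one in the script above, shown here only as a sketch. The function name execute_notebook and the DRY_RUN switch are hypothetical, and it assumes nbconvert is installed in the active environment.

```bash
#!/bin/bash

# Hypothetical helper: run a notebook top to bottom without opening a browser.
# Arguments: notebook path, name for the executed copy.
# Set DRY_RUN=1 to print the command instead of running it.
execute_notebook() {
    local notebook_file="$1" output_name="$2"
    local cmd=(jupyter nbconvert --to notebook --execute "$notebook_file" --output "$output_name")
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "${cmd[*]}"
    else
        "${cmd[@]}"
    fi
}

# Example call (assumes analysis.ipynb exists in the current directory):
# execute_notebook analysis.ipynb analysis_executed
```

Writing the result to a separate file keeps the original notebook untouched, which makes repeated runs easier to compare.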

I hope these simple scripts are enough to show you the simplicity and power of Bash scripting. It may not be your preferred solution for every situation, but it certainly has its place. Best of luck with your scripting.

Matthew Mayo (@mattmayo13) is a data scientist and editor-in-chief of KDnuggets, the essential online resource for data science and machine learning. His interests lie in natural language processing, algorithm design and optimization, unsupervised learning, neural networks, and automated approaches to machine learning. Matthew has an MS in Computer Science and a Post Graduate Diploma in Data Mining. He can be reached at editor1 at kdnuggets[dot]com.