The YOLO (You Only Look Once) series has made real-time object detection practical. The latest version, YOLOv11, improves performance and efficiency. This article provides an in-depth analysis of the main advances in YOLOv11, comparisons with previous YOLO models, and practical uses. By understanding these developments, we can see why YOLOv11 is expected to become a key tool in real-time object detection.

Learning objectives

- Understand the basic principles and evolution of the YOLO object detection algorithm.

- Identify the key features and innovations introduced in YOLOv11.

- Compare the performance and architecture of YOLOv11 with previous versions of YOLO.

- Explore practical applications of YOLOv11 in various real-world scenarios.

- Learn how to implement and train a YOLOv11 model for custom object detection tasks.

This article was published as part of the Data Science Blogathon.

What is YOLO?

YOLO is a real-time object detection system, or more precisely a family of object detection algorithms. Unlike traditional methods, which require multiple passes over an image, YOLO detects objects and their locations in a single pass, which makes it efficient for tasks that must run at high speed without sacrificing much accuracy. Joseph Redmon introduced YOLO in 2016 and changed the field of object detection by processing entire images rather than separate regions, making detection much faster while maintaining decent accuracy.
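
To make the single-pass idea concrete: the original YOLO formulation divides the image into an S × S grid, and the cell that contains an object's center is responsible for predicting that object. The snippet below is a minimal sketch of that assignment only. The function name `responsible_cell` and the example coordinates are illustrative assumptions, not part of any YOLO library.

```python
def responsible_cell(cx, cy, img_w, img_h, grid_size=7):
    """Return (row, col) of the grid cell that contains the box center.

    cx, cy are the box center in pixels. grid_size=7 mirrors the S=7
    setting of the original YOLO paper; other values work the same way.
    """
    if not (0 <= cx < img_w and 0 <= cy < img_h):
        raise ValueError("box center must lie inside the image")
    col = int(cx / img_w * grid_size)
    row = int(cy / img_h * grid_size)
    return row, col


# A 448x448 image with an object centered at pixel (300, 100).
print(responsible_cell(300, 100, 448, 448))
```

Because every cell makes its predictions in the same forward pass, the whole image is handled at once rather than region by region.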

Evolution of YOLO models

YOLO has evolved through multiple iterations, each improving on the previous version. Here's a quick summary:

| YOLO version | Key features | Limitations |
|---|---|---|
| YOLOv1 (2016) | First real-time detection model | Struggles with small objects |
| YOLOv2 (2017) | Added anchor boxes and batch normalization | Still weak at small object detection |
| YOLOv3 (2018) | Multiscale detection | Higher computational cost |
| YOLOv4 (2020) | Improved speed and precision | Trade-offs in extreme cases |
| YOLOv5 | Easy-to-use PyTorch implementation | Not an official release |
| YOLOv6/YOLOv7 | Improved architecture | Incremental improvements |
| YOLOv8/YOLOv9 | Better handling of dense objects | Increasing complexity |
| YOLOv10 (2024) | Transformers introduced, training without NMS | Limited scalability for edge devices |
| YOLOv11 (2024) | Transformer-based dynamic head, training without NMS, PSA modules | Challenging scalability for highly constrained edge devices |

Each version of YOLO has brought improvements in speed, accuracy, and ability to detect smaller objects, with YOLOv11 being the most advanced yet.

Key innovations in YOLOv11

YOLOv11 introduces several innovative features that distinguish it from its predecessors:

- Transformer-based backbone network: Unlike traditional CNNs, YOLOv11 uses a transformer-based backbone network, which captures long-range dependencies and improves small object detection.

- Dynamic head design: This allows YOLOv11 to adapt based on image complexity, optimizing resource allocation for faster, more efficient processing.

- Training without NMS: YOLOv11 replaces non-maximum suppression (NMS) with a more efficient algorithm, reducing inference time while maintaining accuracy.

- Dual label assignment: Improves the detection of overlapping and densely packed objects by combining one-to-one and one-to-many label assignment.

- Large kernel convolutions: These allow better feature extraction with fewer computational resources, improving overall model performance.

- Partial self-attention (PSA): Selectively applies attention mechanisms to certain parts of the feature map, improving global representation learning without increasing computational costs.
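
For context on what NMS-free training removes, the following is a minimal sketch of classic greedy non-maximum suppression, the post-processing step that detectors traditionally run to discard duplicate boxes. It is the generic textbook procedure, not YOLOv11's internal code. The function names, the 0.5 threshold, and the example boxes are illustrative assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Return indices of the boxes kept by classic greedy NMS."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap a higher-scoring kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two near-duplicate boxes on one object, plus one separate object.
boxes = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 200, 300, 300)]
scores = [0.90, 0.75, 0.80]
print(greedy_nms(boxes, scores))
```

Each candidate is compared against every box already kept, so the cost grows with the number of detections. A model trained to emit one prediction per object can skip this step at inference time.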

YOLO Model Comparison

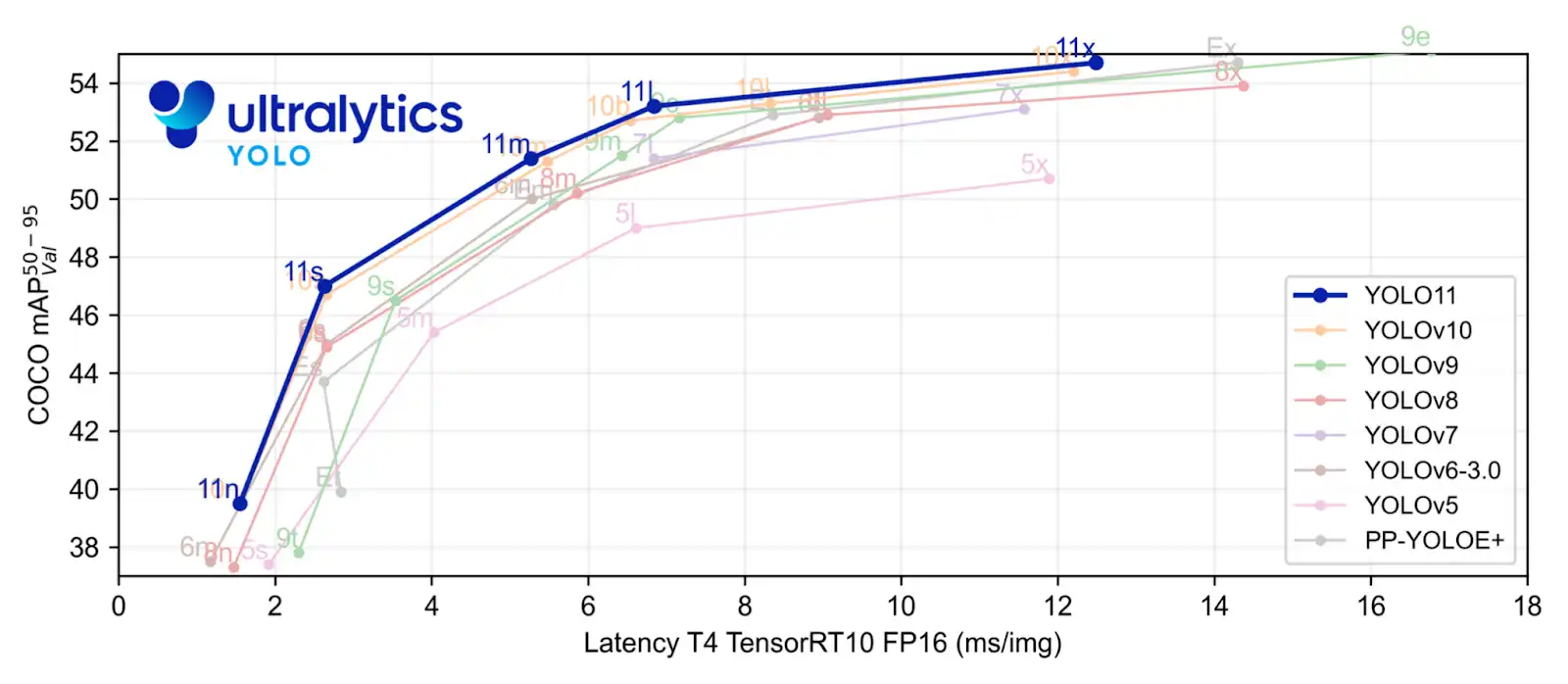

YOLOv11 outperforms previous versions of YOLO in terms of speed and accuracy, as shown in the following table:

| Model | Speed (FPS) | Accuracy (mAP) | Parameters | Use case |
|---|---|---|---|---|
| YOLOv3 | 30 | 53.0% | 62M | Balanced performance |
| YOLOv4 | 40 | 55.4% | 64M | Real-time detection |
| YOLOv5 | 45 | 56.8% | 44M | Lightweight model |
| YOLOv10 | 50 | 58.2% | 48M | Edge deployment |
| YOLOv11 | 60 | 61.5% | 40M | Faster and more accurate |

With fewer parameters, YOLOv11 manages to improve speed and accuracy, making it ideal for a variety of applications.

Performance benchmark

YOLOv11 demonstrates significant improvements in several performance metrics:

- Latency: 25-40% lower latency than YOLOv10, which suits real-time applications.

- Accuracy: 10-15% improvement in mAP with fewer parameters.

- Speed: Capable of processing 60 frames per second, making it one of the fastest object detection models.
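
A frame rate is easier to reason about as a time budget per frame. The short sketch below converts the FPS figures quoted in this article into milliseconds per frame. The helper name `frame_budget_ms` is an illustrative assumption, and a throughput-derived frame time is not the same thing as a measured end-to-end latency.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# FPS values taken from the model comparison table in this article.
for fps in (30, 40, 45, 50, 60):
    print(f"{fps} FPS -> {frame_budget_ms(fps):.2f} ms per frame")
```

At 60 FPS, preprocessing, inference, and any post-processing together must fit in under 17 ms per frame.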

YOLOv11 model architecture

The YOLOv11 architecture integrates the following innovations:

- Transformer Backbone: Improves the model's ability to capture global context.

- Dynamic head design: Adapts processing to the complexity of each image.

- PSA module: Strengthens global representation without adding much computational cost.

- Dual label assignment: Improves detection of multiple overlapping objects.

This architecture allows YOLOv11 to run efficiently on both high-end systems and edge devices such as mobile phones.
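
The difference between one-to-many and one-to-one label assignment can be illustrated with a toy example. The sketch below assumes that a matching score between each ground-truth object and each candidate prediction is already available. The function names and score values are made up for illustration, and the code does not reproduce YOLOv11's actual assignment logic.

```python
def one_to_many(match_scores, k=3):
    """For each ground-truth object, select the k best-matching predictions."""
    assignments = {}
    for gt_index, row in enumerate(match_scores):
        ranked = sorted(range(len(row)), key=lambda p: row[p], reverse=True)
        assignments[gt_index] = ranked[:k]
    return assignments


def one_to_one(match_scores):
    """For each ground-truth object, select only the single best prediction.

    Simplification: a full matcher would also ensure that no prediction is
    shared between two ground-truth objects.
    """
    top1 = one_to_many(match_scores, k=1)
    return {gt_index: picks[0] for gt_index, picks in top1.items()}


# Rows: 2 ground-truth objects. Columns: 5 candidate predictions.
# The values are made-up matching scores (higher means a better match).
match_scores = [
    [0.10, 0.80, 0.75, 0.05, 0.20],
    [0.00, 0.15, 0.30, 0.90, 0.85],
]

print(one_to_many(match_scores, k=2))
print(one_to_one(match_scores))
```

The one-to-many branch gives several predictions a training signal for each object, while the one-to-one branch yields a single prediction per object, which is what makes duplicate removal unnecessary.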

YOLOv11 sample usage

Step 1: Install YOLOv11 dependencies

First, install the necessary packages:

!pip install ultralytics

!pip install torch torchvision

Step 2: Load the YOLOv11 model

You can load the YOLOv11 pretrained model directly using the Ultralytics library.

from ultralytics import YOLO

# Load a COCO-pretrained YOLO11n model

model = YOLO('yolo11n.pt')

Step 3: Train the model on the data set

Train the model on your data set for an appropriate number of epochs:

# Train the model on the COCO8 example dataset for 100 epochs

results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

Step 4: Test the model

You can save the model and test it on unseen images as needed.

# Run inference on an image

results = model("path/to/your/image.png")

# Display results

results[0].show()

Original and output image

I ran the model on unseen images to verify its predictions, and it produced accurate results.
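
To train on your own data instead of the COCO8 example, the `data` argument needs to point at a data set configuration file. The sketch below writes a minimal one in the YAML layout that Ultralytics detection data sets use (`path`, `train`, `val`, `names`). The helper `write_dataset_yaml`, the file name, the folder layout, and the class names are all hypothetical and should be adapted to your own data.

```python
from pathlib import Path


def write_dataset_yaml(yaml_path, dataset_root, class_names):
    """Write a minimal detection data set config and return its text."""
    lines = [
        f"path: {dataset_root}",   # data set root directory
        "train: images/train",     # training images, relative to path
        "val: images/val",         # validation images, relative to path
        "names:",
    ]
    lines += [f"  {index}: {name}" for index, name in enumerate(class_names)]
    text = "\n".join(lines) + "\n"
    Path(yaml_path).write_text(text, encoding="utf-8")
    return text


# Hypothetical data set location and class names, for illustration only.
config_text = write_dataset_yaml(
    "custom_data.yaml", "datasets/custom", ["helmet", "vest"]
)
print(config_text)
```

The resulting file can then be passed as `data="custom_data.yaml"` in place of `coco8.yaml` in the `model.train(...)` call from Step 3.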

YOLOv11 Applications

YOLOv11's advancements make it suitable for various real-world applications:

- Autonomous vehicles: Improved detection of small and occluded objects improves safety and navigation.

- Health care: YOLOv11 helps in medical imaging tasks such as tumor detection, where accuracy is critical.

- Retail and inventory management: Track customer behavior, monitor inventory, and improve security in retail environments.

- Surveillance: Its speed and accuracy make it perfect for real-time surveillance and threat detection.

- Robotics: YOLOv11 enables robots to better navigate environments and interact with objects autonomously.

Conclusion

YOLOv11 sets a new standard for object detection, combining speed, accuracy, and flexibility. Its transformer-based architecture, dynamic head design, and dual label assignment allow it to excel in a variety of real-time applications, from autonomous vehicles to healthcare. YOLOv11 is poised to become an essential tool for developers and researchers, paving the way for future advances in object detection technology.

Key takeaways

- YOLOv11 features a transformer-based backbone and dynamic head design, which improves real-time object detection with higher speed and accuracy.

- It outperforms previous YOLO models by achieving 60 FPS and 61.5% mAP with fewer parameters, making it more efficient.

- Key innovations such as NMS-free training, dual-label assignment, and partial self-attention improve detection accuracy, especially for overlapping objects.

- Practical applications of YOLOv11 span autonomous vehicles, healthcare, retail, surveillance, and robotics, benefiting from its speed and accuracy.

- YOLOv11 reduces latency by 25% to 40% compared to YOLOv10, solidifying its position as the leading tool for real-time object detection tasks.

The media shown in this article is not the property of Analytics Vidhya and is used at the author's discretion.

Frequently asked questions

Q1. What is YOLO?

Answer. YOLO, or “You Only Look Once,” is a real-time object detection system that can identify objects in a single pass over an image, making it efficient and fast. It was introduced by Joseph Redmon in 2016 and revolutionized the field of object detection by processing images as a whole instead of analyzing regions separately.

Q2. What are the key features of YOLOv11?

Answer. YOLOv11 introduces several innovations, including a transformer-based backbone, dynamic head design, NMS-free training, dual label assignment, and partial self-attention (PSA). These features improve speed, accuracy, and efficiency, making it suitable for real-time applications.

Q3. How does YOLOv11 compare with previous versions?

Answer. YOLOv11 outperforms previous versions with a processing speed of 60 FPS and an mAP of 61.5%. It has fewer parameters (40M) than YOLOv10 (48M), offering faster and more accurate object detection while maintaining efficiency.

Q4. What are the practical applications of YOLOv11?

Answer. YOLOv11 can be used in autonomous vehicles, healthcare (e.g. medical imaging), retail and inventory management, real-time surveillance, and robotics. Its speed and accuracy make it well suited to scenarios that require fast and reliable object detection.

Q5. What makes YOLOv11 faster than YOLOv10?

Answer. The use of a transformer-based backbone, a dynamic head design that adapts to image complexity, and NMS-free training help YOLOv11 reduce latency by 25-40% compared to YOLOv10. These improvements allow it to process up to 60 frames per second, which suits real-time tasks.