Large language models (LLMs) have revolutionized the way machines process and generate human language, but getting them to reason reliably across diverse tasks remains a major challenge. AI researchers are working to enable these models to handle not only language understanding but also complex reasoning, such as problem solving in mathematics, logic, and general knowledge. The goal is to build systems that can carry out reasoning-based tasks autonomously and accurately across multiple domains.

A central problem facing AI researchers is that many current methods for improving LLM reasoning rely heavily on human intervention. These methods often require carefully hand-crafted reasoning examples or the use of stronger models, both of which are expensive and time-consuming. Furthermore, when LLMs are tested on tasks outside their original training domain, their accuracy drops, revealing that current systems are not yet truly generalist in their reasoning. This performance gap across tasks is a barrier to building adaptive, general-purpose AI systems.

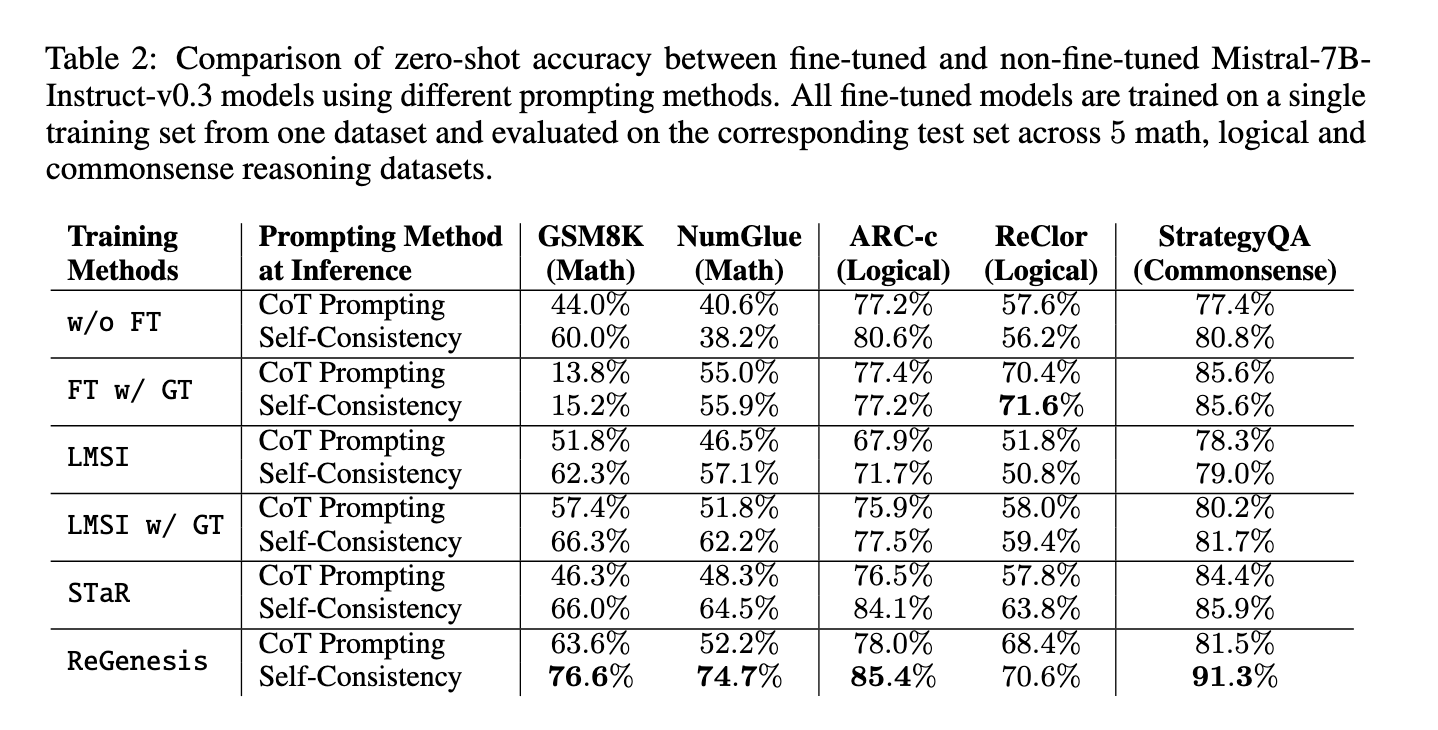

Several existing methods aim to address this problem. These approaches typically prompt LLMs to generate intermediate reasoning steps, known as chain-of-thought (CoT) reasoning, and then filter those steps based on whether they reach the correct outcome or agree with other sampled solutions (self-consistency). However, these methods, such as STaR and LMSI, have limitations. They rely on small, fixed sets of human-designed reasoning paths, which help models perform well on tasks similar to those they were trained on but do not carry over to out-of-domain (OOD) tasks, limiting their general utility. As a result, while these methods can improve reasoning in a controlled setting, they fail to generalize and deliver consistent performance on new challenges.
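To make the filtering step concrete, here is a minimal Python sketch of the two generic filters described above: outcome-based filtering (keep sampled reasoning paths whose final answer matches a known label) and self-consistency filtering (keep paths that agree with the majority answer). The `ReasoningPath` type, the `normalize` helper, and the function names are hypothetical illustrations, not code from STaR, LMSI, or the ReGenesis paper. The sketch also assumes final answers have already been extracted from the model output.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ReasoningPath:
    """Hypothetical container: one sampled chain of thought plus its final answer."""
    rationale: str
    answer: str


def normalize(answer: str) -> str:
    # Assumed normalization: compare answers case- and whitespace-insensitively.
    return " ".join(answer.strip().lower().split())


def filter_by_outcome(paths: list[ReasoningPath], gold: str) -> list[ReasoningPath]:
    """Keep only paths whose final answer matches the known correct answer."""
    target = normalize(gold)
    return [p for p in paths if normalize(p.answer) == target]


def filter_by_self_consistency(paths: list[ReasoningPath]) -> list[ReasoningPath]:
    """Without a label, keep the paths that agree with the most common answer."""
    if not paths:
        return []
    votes = Counter(normalize(p.answer) for p in paths)
    majority, _ = votes.most_common(1)[0]
    return [p for p in paths if normalize(p.answer) == majority]


if __name__ == "__main__":
    sampled = [
        ReasoningPath("3 apples + 4 apples = 7 apples", "7"),
        ReasoningPath("3 * 4 = 12", "12"),
        ReasoningPath("Count 3, then add 4 more: 7", " 7 "),
    ]
    print(len(filter_by_outcome(sampled, "7")))      # 2
    print(len(filter_by_self_consistency(sampled)))  # 2
```

Outcome filtering needs labeled data; self-consistency trades that requirement for the risk that the majority answer is itself wrong.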

In response to these limitations, researchers at Salesforce AI Research introduced a method called ReGenesis, which lets LLMs improve their reasoning skills without additional human-designed examples. ReGenesis has models synthesize their own reasoning paths as post-training data, helping them adapt to new tasks more effectively. By progressively refining reasoning from abstract guidelines to task-specific structures, the method addresses the shortcomings of existing approaches and fosters more generalizable reasoning.

The ReGenesis methodology is structured in three phases. First, it generates broad, task-agnostic reasoning guidelines: general principles that apply across many tasks. Because these guidelines are not tied to any particular problem, the model retains flexibility in how it reasons. Second, these abstract guidelines are adapted into task-specific reasoning structures, giving the model more focused strategies for particular problems. Third, the LLM follows these reasoning structures to produce detailed reasoning paths. The generated paths are then filtered against ground-truth answers or by majority voting to discard incorrect solutions. This process improves the model's reasoning without relying on predefined examples or extensive human input, making it scalable across a wide variety of tasks.
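The three phases plus filtering can be sketched as a small Python pipeline. This is a minimal illustration under stated assumptions, not the authors' implementation: the model is any function from prompt to completion (`LLM`), the guideline list, prompt wording, the `Answer: <answer>` output convention, and all function names are hypothetical, and `toy_llm` is a deterministic stand-in so the example runs without a real model.

```python
from collections import Counter
from typing import Callable, Optional

# Hypothetical interface: any function mapping a prompt string to a completion string.
LLM = Callable[[str], str]

# Phase 1 input: task-agnostic reasoning guidelines (illustrative, not the paper's list).
GENERAL_GUIDELINES = [
    "Break the problem into smaller sub-problems.",
    "Work backwards from what the question asks for.",
    "Check each intermediate result before moving on.",
]


def adapt_guideline(llm: LLM, guideline: str, question: str) -> str:
    """Phase 2: turn an abstract guideline into a task-specific reasoning structure."""
    prompt = (
        f"Guideline: {guideline}\nQuestion: {question}\n"
        "Rewrite the guideline as a concrete step-by-step plan for this question."
    )
    return llm(prompt)


def generate_path(llm: LLM, structure: str, question: str) -> str:
    """Phase 3: follow the structure to produce a full reasoning path."""
    prompt = (
        f"Plan:\n{structure}\nQuestion: {question}\n"
        "Solve the question by following the plan. End with 'Answer: <answer>'."
    )
    return llm(prompt)


def extract_answer(path: str) -> Optional[str]:
    """Assumes the path contains a line of the form 'Answer: <answer>'."""
    for line in reversed(path.strip().splitlines()):
        if line.startswith("Answer:"):
            return line[len("Answer:"):].strip()
    return None


def synthesize_paths(llm: LLM, question: str, gold: Optional[str] = None) -> list[str]:
    """Run phases 1-3 for one question, then filter the resulting paths."""
    paths = [
        generate_path(llm, adapt_guideline(llm, g, question), question)
        for g in GENERAL_GUIDELINES
    ]
    answers = [extract_answer(p) for p in paths]
    if gold is not None:
        target = gold  # filter against the ground-truth answer
    else:
        votes = Counter(a for a in answers if a is not None)
        if not votes:
            return []
        target = votes.most_common(1)[0][0]  # majority vote when no label exists
    return [p for p, a in zip(paths, answers) if a == target]


def toy_llm(prompt: str) -> str:
    """Stand-in model: returns a plan, or a path whose answer depends on the plan."""
    if prompt.startswith("Guideline:"):
        return "Plan derived from: " + prompt.splitlines()[0]
    if "backwards" in prompt:
        return "Reasoning (wrong turn).\nAnswer: 6"
    return "Reasoning step by step.\nAnswer: 7"


if __name__ == "__main__":
    print(len(synthesize_paths(toy_llm, "What is 3 + 4?", gold="7")))  # 2
    print(len(synthesize_paths(toy_llm, "What is 3 + 4?")))  # 2
```

The surviving paths would then serve as post-training data; the sketch deliberately leaves out sampling settings, the number of guidelines, and the fine-tuning step itself.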

The reported results are impressive. The researchers evaluated ReGenesis on in-domain and out-of-domain tasks and found that it consistently outperformed existing methods. Specifically, ReGenesis achieved a 6.1% improvement on OOD tasks, whereas the baseline methods showed an average performance drop of 4.6%. Across six OOD tasks, spanning areas such as mathematical reasoning and logic, ReGenesis maintained its performance while the other methods declined significantly after post-training. On in-domain tasks, meaning those whose training data was used for post-training, ReGenesis also performed best: it achieved results 7.1% to 18.9% better than the baselines on tasks including commonsense reasoning and mathematical problem solving.

ReGenesis's more detailed results further highlight its effectiveness. Across six OOD tasks, covering mathematics, logic, and natural language inference, ReGenesis consistently improved accuracy, yielding a 6.1% gain in OOD performance in contrast to the average 4.6% drop seen with the baseline methods. While existing methods such as STaR lost accuracy when applied to new tasks, ReGenesis avoided this decline and delivered tangible improvements, making it a more robust solution for reasoning generalization. In a separate evaluation on five in-domain tasks, ReGenesis outperformed five baseline methods by margins of 7.1% to 18.9%, further underscoring its ability to reason effectively across tasks.

In conclusion, ReGenesis from Salesforce AI Research addresses an important gap in LLM development. By letting models synthesize reasoning paths from general guidelines and tailor them to specific tasks, ReGenesis offers a scalable way to improve performance both within and outside the training domain. Its ability to improve reasoning without costly human supervision or human-written reasoning examples marks an important step toward AI systems that generalize across a wide range of tasks. The reported gains on in-domain and out-of-domain tasks make ReGenesis a promising tool for improving reasoning in AI.

Check out the Paper. All credit for this research goes to the researchers of this project.


Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and looking for opportunities to contribute.
