Omnimodal language models (OLMs) are a rapidly advancing area of ai that enables understanding and reasoning across multiple types of data, including text, audio, video, and images. These models aim to simulate human understanding by processing multiple inputs simultaneously, making them very useful in complex real-world applications. Research in this field seeks to create artificial intelligence systems that can seamlessly integrate these various types of data and generate accurate responses in different tasks. This represents a leap forward in the way ai systems interact with the world, bringing them more in line with human communication, where information is rarely limited to one modality.

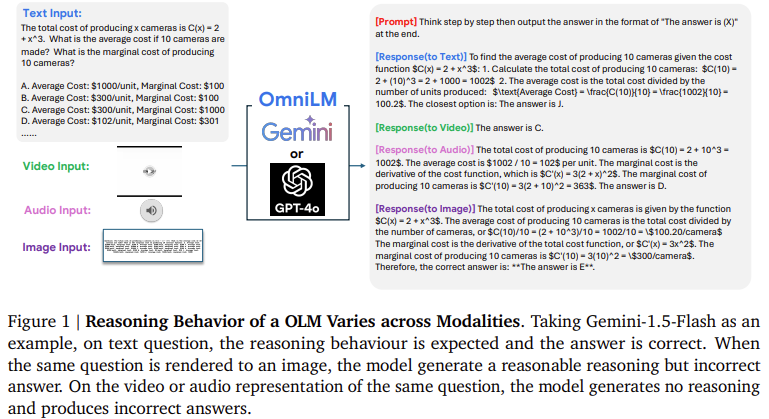

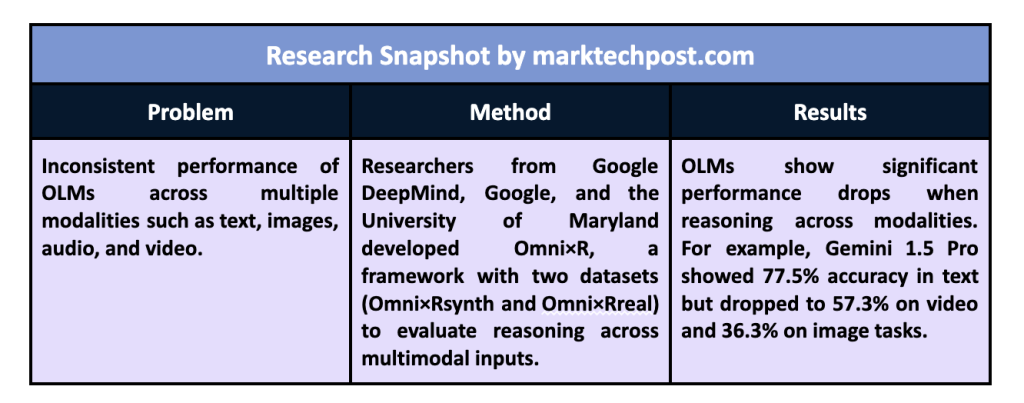

A persistent challenge in the development of OLMs is their inconsistent performance when faced with multimodal inputs. For example, a model may need to analyze data including text, images, and audio to complete a task in real-world situations. However, many current models need help to effectively combine these inputs. The main problem lies in the inability of these systems to fully reason between modalities, which generates discrepancies in their results. In many cases, models produce different responses when presented with the same information in various formats, such as a math problem displayed as an image or spoken aloud as audio.

Existing benchmarks for OLM are often limited to simple combinations of two modalities, such as text and images or video and text. These assessments should evaluate the full range of capabilities required for real-world applications, often involving more complex scenarios. For example, many current models perform well when handling dual-modality tasks. Still, they should improve significantly when asked to reason about combinations of three or more modalities, such as integrating video, text, and audio to arrive at a solution. This limitation creates a gap in evaluating how well these models actually understand and reason on multiple types of data.

Researchers from Google DeepMind, Google and the University of Maryland developed Omni×Ra new evaluation framework designed to rigorously test the reasoning capabilities of OLMs. This framework is distinguished by introducing more complex multimodal challenges. Omni×R evaluates models using scenarios in which they must integrate multiple forms of data, such as answering questions that require reasoning through text, images, and audio simultaneously. The framework includes two data sets:

- Omni×R synthesizer is a synthetic data set created by automatically converting text to other modalities.

- Omni×Rreal is a carefully curated real-world data set from sources like YouTube.

These data sets provide a more comprehensive and challenging testing environment than previous benchmarks.

Omni×Rsynth, the framework's synthetic component, is designed to push models to their limits by converting text into images, video, and audio. For example, the research team developed Omnify!, a tool for translating text in multiple modalities, creating a data set of 1,400 samples distributed across six categories, including mathematics, physics, chemistry, and computer science. Each category includes 100 examples for all six modalities: text, image, video, audio, video+audio, and image+audio, challenging models to handle complex input combinations. The researchers used this data set to test several OLMs, including Gemini 1.5 Pro and GPT-4o. The results of these tests revealed that the current models experience significant drops in performance when asked to integrate information from different modalities.

Omni×Rreal, the real-world dataset, includes 100 videos covering topics such as mathematics and science, where questions are presented in different modalities. For example, a video can visually display a math problem while answer options are said aloud, requiring the model to integrate visual and auditory information to solve the problem. The real-world scenarios further highlighted the models' difficulties in reasoning between modalities, as the results showed similar inconsistencies to those observed in the synthetic data set. In particular, models that performed well with text input experienced a sharp decline in accuracy when given video or audio inputs.

The research team conducted extensive experiments and found several key insights. For example, the Gemini 1.5 Pro model performed well in most modes, with a text reasoning accuracy of 77.5%. However, its performance dropped to 57.3% on video and 36.3% on image inputs. In contrast, GPT-4o demonstrated better results handling text and image tasks, but struggled with video, showing a 20% performance drop when given the task of integrating text and video data. These underscore the challenges of achieving consistent performance across multiple modalities, a crucial step toward advancing OLM capabilities.

The Omni×R benchmark results revealed several notable trends across different OLMs. One of the most critical observations was that even the most advanced models, such as Gemini and GPT-4o, significantly varied their reasoning capabilities across modalities. For example, the Gemini model achieved 65% accuracy when processing audio, but its performance dropped to 25.9% when combining video and audio data. Similarly, the GPT-4o-mini model, despite excelling at text-based tasks, struggled with video, showing a 41% performance gap compared to text-based tasks. These discrepancies highlight the need for more research and development to close the gap in cross-modal reasoning abilities.

The Omni×R benchmark findings point to several key conclusions that underscore the current limitations and future directions of OLM research:

- Models like Gemini and GPT-4o work well with text but struggle with multimodal reasoning.

- There is a significant performance gap between handling text-based input and complex multimodal tasks, especially when video or audio is involved.

- Larger models generally perform better in all modalities, but smaller models can sometimes outperform them on specific tasks, showing a trade-off between model size and flexibility.

- The synthetic data set (Omni×Rsynth) accurately simulates real-world challenges, making it a valuable tool for future model development.

In conclusion, the Omni×R framework presented by the research team offers a critical step forward in evaluating and improving the reasoning capabilities of OLMs. By rigorously testing models in various modalities, the study revealed important challenges that must be addressed to develop ai systems capable of human-like multimodal reasoning. The performance drops observed in tasks involving video and audio integration highlight the complexities of cross-modal reasoning and point to the need for more advanced training models and techniques to handle the complexities of real-world multimodal data.

look at the Paper. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on twitter.com/Marktechpost”>twitter and join our Telegram channel and LinkedIn Grabove. If you like our work, you will love our information sheet.. Don't forget to join our SubReddit over 50,000ml.

(Next live webinar: October 29, 2024) Best platform to deliver optimized models: Predibase inference engine (promoted)

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. Their most recent endeavor is the launch of an ai media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity among the public.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>