Detecting objects in an image requires precision, especially when the objects do not fit neatly into a box-like shape. Nevertheless, numerous models now offer state-of-the-art object detection.

Grounding DINO Base is one such model: it performs zero-shot object detection, so you can scan images with it out of the box. It extends a closed-set object detector with a text encoder, which enables open-set object detection.

This model is useful for tasks that rely on text queries to identify objects. An important feature is that it does not need labeled data for the objects you want to find. We will discuss everything you need to know about the Grounding DINO Base model and how it works.

Learning objectives

- Learn how zero-shot object detection is performed with Grounding DINO Base.

- Learn about the working principle and operation of this model.

- Study the use cases of the Grounding DINO model.

- Run inference on this model.

- Explore real-life applications of the Grounding DINO base.

This article was published as part of the Data Science Blogathon.

Zero-shot object detection use cases

The main attribute of this model is its ability to identify objects in an image from a text prompt. This helps users in several ways. Models with zero-shot object detection can power image search on smartphones and other devices: you can search for specific places, cities, animals, and other objects.

Zero-shot object detection models can also help count a specific object within a group of objects that appear in a single image. Another fascinating use case is tracking objects in videos.
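Counting follows directly from the detector's output: once every detection carries a label, a tally per label gives the count. The snippet below is a minimal sketch, assuming the detections have already been reduced to a list of label strings. The helper `count_objects` and the sample labels are hypothetical and are not part of any library.

```python
from collections import Counter


def count_objects(labels):
    """Return how many detections were reported for each label."""
    # Normalise whitespace and case so "Cat " and "cat" are counted together.
    return Counter(label.strip().lower() for label in labels)


# Illustrative labels only, not real model output.
detected_labels = ["cat", "cat", "remote control", "Cat "]
counts = count_objects(detected_labels)
print(counts["cat"])
print(counts["remote control"])
```

Tracking objects in video would need an extra step that links detections across frames, which this sketch does not attempt.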

How does Grounding DINO Base work?

Grounding DINO Base is not given labeled data for the target objects. Instead, it works from a text prompt and produces a probability score for how well each image region matches the text. The model first identifies the objects mentioned in the text. It then generates 'object proposals', using colors, shapes, and other features to locate candidate objects in the image.

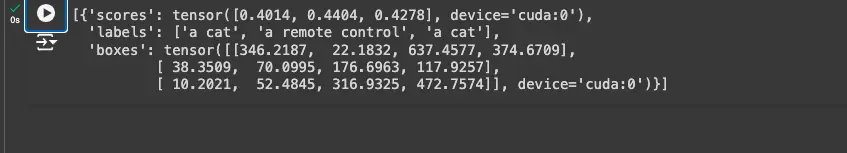

So, for each text prompt you pass to the model, Grounding DINO processes the image and identifies objects with a score. Each detected object gets a label and a probability score indicating how confident the model is that the object named in the text appears in the image. The accompanying image shows an example.

Grounding DINO Base model architecture

DINO (DETR with Improved deNoising anchOr boxes) is combined with GLIP-style grounded pre-training to form the basis of the model. The architecture combines these two systems for object detection and end-to-end optimization, bridging the gap between language and vision.

The Grounding DINO architecture uses a two-stream approach. Image features are extracted with a visual backbone such as Swin Transformer, and text features with a model such as BERT. These features are then fused into a unified representation space by a feature enhancer built from multiple attention layers.

In practice, the model starts from a text input and an image input. Because it uses two streams, it can encode the image and the text separately. Both sets of features are then fed into the feature enhancer in the next stage.

The feature enhancer is multi-layered and operates on both text and image features. Deformable self-attention enhances the image features, while regular self-attention enhances the text features.

The next stage, language-guided query selection, makes an important contribution. It uses the input text to guide detection by selecting the image features most relevant to the text. These selected features initialize the queries that the decoder uses to locate objects in the image and to assign labels based on the text description.

In the cross-modality decoder stage, image and text features are integrated. This happens through a series of attention layers and feed-forward networks. Here the relationship between visual and textual information is established, allowing the appropriate labels to be assigned.

After these steps, the model produces the final results: bounding box predictions, class-specific confidence filtering, and label assignment.
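To make query selection more concrete, here is a simplified pure-Python sketch of the idea: score each image token by its best dot-product similarity with any text token, then keep the top-scoring tokens as queries. The function name `select_queries` and the toy features are hypothetical. The real model works on batched tensors of learned features, so treat this as an illustration rather than the actual implementation.

```python
def select_queries(image_features, text_features, num_queries):
    """Return indices of the image tokens most similar to any text token."""
    scores = []
    for img_vec in image_features:
        # Similarity of this image token to every text token (dot product).
        sims = [sum(a * b for a, b in zip(img_vec, txt_vec))
                for txt_vec in text_features]
        # Keep the best match over the text tokens.
        scores.append(max(sims))
    # Rank image tokens by their best similarity and keep the top ones.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:num_queries]


# Toy 2-D features, made up for illustration.
image_features = [[1.0, 0.0], [0.0, 1.0], [0.25, 0.25], [-1.0, 0.0]]
text_features = [[1.0, 0.0], [0.0, 2.0]]
selected = select_queries(image_features, text_features, num_queries=2)
print(selected)
```

Image tokens that resemble none of the text tokens score low and are never handed to the decoder, which is how the text steers where the model looks.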
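The attention step that mixes the two modalities can be pictured as cross-attention, where tokens of one modality query the tokens of the other. Below is a minimal sketch, assuming single-head scaled dot-product attention with no learned projections. The names `cross_attention`, `image_tokens`, and `text_tokens` are hypothetical, and the actual model stacks several such layers with learned weights and feed-forward networks.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Single-head scaled dot-product attention without learned projections."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Score the query against every key, scaled by sqrt(dim).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
    return outputs


# Toy features, made up for illustration.
image_tokens = [[1.0, 0.0], [0.0, 1.0]]
text_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
fused = cross_attention(image_tokens, text_tokens, text_tokens)
print(fused)
```

Each output row is a blend of text features weighted by how well they match the corresponding image token.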
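The confidence filtering and label assignment can be pictured with two thresholds: one decides whether a candidate box is kept at all, the other decides which text tokens make up its label. The following is a simplified sketch under the assumption that each candidate box comes with one probability per text token. The function `filter_detections` and all numbers are hypothetical illustrations, not library code or real model output.

```python
def filter_detections(token_probs, tokens, box_threshold=0.4, text_threshold=0.3):
    """Keep confident boxes and build each label from its matching tokens."""
    detections = []
    for box_index, probs in enumerate(token_probs):
        score = max(probs)
        if score <= box_threshold:
            continue  # no text token matches this box strongly enough
        # The label is made of every token whose probability clears text_threshold.
        label = " ".join(tok for tok, p in zip(tokens, probs) if p > text_threshold)
        detections.append({"box_index": box_index, "score": score, "label": label})
    return detections


# Made-up probabilities for three candidate boxes over three text tokens.
tokens = ["cat", "remote", "control"]
token_probs = [
    [0.82, 0.05, 0.04],   # matches "cat"
    [0.03, 0.61, 0.47],   # matches "remote control"
    [0.20, 0.10, 0.15],   # below box_threshold, dropped
]
for det in filter_detections(token_probs, tokens):
    print(det)
```

Raising `box_threshold` trades recall for precision, while `text_threshold` controls how many words end up in each label.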

Running the Grounding DINO model

Although you can run this model with a pipeline helper, loading it through AutoProcessor is an effective way to run it.

Importing required libraries

import requests
import torch
from PIL import Image
from transformers import AutoProcessor, AutoModelForZeroShotObjectDetection

This code imports the libraries needed for zero-shot object detection: requests to download the image, PIL to open it, and the processor and model classes from transformers. With these, you can perform object detection without any task-specific training.

Preparing the environment

The next step is to define the model ID, which selects the pre-trained Grounding DINO Base checkpoint used for the task. The code also picks the device, using a GPU when one is available and the CPU otherwise, as shown in the following lines of code:

model_id = "IDEA-Research/grounding-dino-base"
device = "cuda" if torch.cuda.is_available() else "cpu"

Starting the model using the processor

processor = AutoProcessor.from_pretrained(model_id)
model = AutoModelForZeroShotObjectDetection.from_pretrained(model_id).to(device)

This code does two main things: it initializes the pre-trained processor and model, and it moves the model to the selected device for efficient execution of object detection.

Processing the image

image_url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(image_url, stream=True).raw)

# Check for cats and remote controls
# VERY important: text queries need to be lowercased + end with a dot
text = "a cat. a remote control."

This code downloads the image from the URL and opens it with the 'Image.open' function, which loads the raw image data. It also defines the text prompt, so the model looks for a cat and a remote control. Note that the text query must be lowercase and each phrase must end with a dot for accurate processing.
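Since the prompt format matters, a small helper can build the query string from a list of labels. This is a minimal sketch. The name `build_text_query` is hypothetical and not part of transformers; it only applies the two rules stated above, lowercase text and a dot after each phrase.

```python
def build_text_query(labels):
    """Join labels into one lowercase prompt where every phrase ends with a dot."""
    phrases = []
    for label in labels:
        # Trim whitespace, drop any trailing dot, then lowercase.
        cleaned = label.strip().rstrip(".").strip().lower()
        if cleaned:  # skip empty entries
            phrases.append(cleaned + ".")
    return " ".join(phrases)


query = build_text_query(["A cat", "a Remote Control."])
print(query)  # a cat. a remote control.
```

The result can be passed as the `text` argument in the same way as the hand-written string.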

Preparing the input

Here, you convert the image and text into PyTorch tensors, a format the model understands. The code also runs inference inside torch.no_grad(), which disables gradient tracking and so saves computation. Finally, the zero-shot object detection model generates predictions based on the text and image.

inputs = processor(images=image, text=text, return_tensors="pt").to(device)
with torch.no_grad():
    outputs = model(**inputs)

Result and output

results = processor.post_process_grounded_object_detection(
    outputs,
    inputs.input_ids,
    box_threshold=0.4,
    text_threshold=0.3,
    # PIL gives (width, height); target_sizes expects a list of (height, width)
    target_sizes=[image.size[::-1]]
)

This is where the raw model output is refined and converted into results that humans can read. The post-processing step also rescales the predicted boxes to the original image size and attaches the matching phrase from the text prompt to each prediction.

results

Input image:

The output of zero-shot object detection on the image: the model tests for the presence of a cat and a remote control.
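Part of this post-processing is a coordinate conversion. DETR-style detectors typically predict boxes as normalised centre coordinates plus width and height, and the image size is what turns them into pixel corners. The sketch below illustrates that arithmetic under this assumption. The helper `to_pixel_box` and the sample values are hypothetical, and the library performs the equivalent step internally.

```python
def to_pixel_box(box, image_width, image_height):
    """Convert a normalised (cx, cy, w, h) box to pixel (x0, y0, x1, y1)."""
    cx, cy, w, h = box
    x0 = (cx - w / 2) * image_width
    y0 = (cy - h / 2) * image_height
    x1 = (cx + w / 2) * image_width
    y1 = (cy + h / 2) * image_height
    return (x0, y0, x1, y1)


# PIL reports size as (width, height); reversing it gives (height, width).
pil_size = (640, 480)          # illustrative image size
target_size = pil_size[::-1]
height, width = target_size

# A made-up box centred in the image, half as wide and half as tall.
pixel_box = to_pixel_box((0.5, 0.5, 0.5, 0.5), width, height)
print(pixel_box)  # (160.0, 120.0, 480.0, 360.0)
```

This is also why the image size is reversed before being passed as the target size: the two libraries order width and height differently.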
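To read the detections more comfortably, you can format each one as a line of text. The sketch below assumes one result has been converted to plain Python lists under the keys "scores", "labels", and "boxes" (for tensors, calling `.tolist()` first would do this). The helper `describe_detections` is hypothetical, and the values are placeholders rather than actual model output.

```python
def describe_detections(result):
    """Turn one result dictionary into human-readable lines."""
    lines = []
    for score, label, box in zip(result["scores"], result["labels"], result["boxes"]):
        coords = ", ".join(f"{value:.1f}" for value in box)
        lines.append(f"{label}: score {score:.2f}, box [{coords}]")
    return lines


# Placeholder values for illustration only, not actual model output.
example_result = {
    "scores": [0.91, 0.55],
    "labels": ["a cat", "a remote control"],
    "boxes": [[10.0, 20.0, 110.0, 220.0], [300.0, 40.0, 380.0, 90.0]],
}
for line in describe_detections(example_result):
    print(line)
```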

Real-life applications of Grounding DINO

There are many ways to apply this model in real-life applications and industries. These include:

- Models like Grounding DINO Base can be effective in robotic assistants, since they can identify a wide range of objects when larger image datasets are available.

- Self-driving cars are another valuable use of this technology. Self-driving cars can use this model to detect cars, traffic lights, and other objects.

- This model can also be used as an image analysis tool to identify objects, people, and other things in an image.

Conclusion

The Grounding DINO base model provides an innovative approach to zero-shot object detection by effectively combining image and text inputs for accurate identification. Its ability to detect objects without the need for labeled data makes it versatile for various applications, from image search and object tracking to more complex scenarios such as autonomous driving.

This model achieves accurate detection and localization from text prompts by leveraging advanced components such as deformable self-attention and a cross-modality decoder. Grounding DINO shows the potential of language-guided object detection and opens new possibilities for real-life applications in AI-driven tasks.

Key takeaways

- The model architecture employs a system that helps integrate language and vision.

- Applications in robotics, autonomous vehicles and image analysis suggest that this model has promising potential and we could see greater use of it in the future.

- Grounding DINO Base performs object detection without labels for the target classes: it takes text prompts and returns detections with probability scores. This makes it adaptable to various applications.

Frequently asked questions

A. Zero-shot object detection with Grounding DINO Base allows the model to detect objects in images using text prompts without the need for pre-labeled data. It uses a combination of language and visual features to identify and locate objects in real time.

A. The model processes the input text query and identifies objects in the image by generating an “object proposal” based on color, shape, and other visual characteristics. The text with the highest probability score is considered the detected object.

A. The model has numerous real-world applications, such as image search, object tracking in videos, robotic assistants, and autonomous vehicles. It can detect objects without prior knowledge, making it versatile in various industries.

A. Grounding DINO Base can be used for real-time applications, such as autonomous driving or robotic vision, due to its ability to detect objects using text prompts in dynamic environments without the need for labeled data sets.

The media shown in this article is not the property of Analytics Vidhya and is used at the author's discretion.