Generative AI models, powered by large language models (LLMs) or diffusion techniques, are transforming creative fields such as art and entertainment. These models can generate diverse content, including text, images, video, and audio. However, improving the quality of their outputs requires additional inference-time methods, such as classifier-free guidance (CFG). While CFG improves prompt adherence, it presents two major challenges: higher computational cost and lower output diversity. This trade-off between quality and diversity is a critical issue in generative AI: focusing on quality tends to reduce diversity, while increasing diversity can reduce quality, so balancing the two is crucial when building AI systems.
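To make the cost argument concrete, here is a minimal sketch of how CFG is commonly applied at decoding time. It assumes the usual formulation, in which the model is run twice per step (once with the prompt, once without) and the two sets of logits are extrapolated. The function name `cfg_logits` and the toy numbers are illustrative assumptions, not taken from the paper.

```python
def cfg_logits(cond_logits, uncond_logits, guidance_scale):
    """Combine prompt-conditioned and unconditioned logits.

    Common CFG formulation (an assumption here, not quoted from the paper):
        guided = uncond + guidance_scale * (cond - uncond)
    A scale of 1.0 returns the conditional logits unchanged; larger
    scales push the distribution further toward the prompt.
    """
    return [
        u + guidance_scale * (c - u)
        for c, u in zip(cond_logits, uncond_logits)
    ]


# Toy logits over a three-token vocabulary (illustrative values only).
cond = [2.0, 0.5, -1.0]    # forward pass with the prompt
uncond = [1.0, 0.5, 0.0]   # forward pass without the prompt

print(cfg_logits(cond, uncond, guidance_scale=3.0))  # [4.0, 0.5, -3.0]
```

Because both `cond` and `uncond` come from separate forward passes, every decoding step costs roughly twice as much as unguided sampling, which is the overhead that distillation aims to remove.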

Existing methods such as classifier-free guidance (CFG) have been widely applied in domains such as image, video, and audio generation. However, CFG's negative impact on diversity limits its usefulness in exploratory tasks. Another method, knowledge distillation, has emerged as a powerful technique for training next-generation models, with some researchers proposing offline methods for distilling CFG-augmented models. The quality-diversity trade-offs of different inference-time strategies, such as temperature sampling, top-k sampling, and nucleus sampling, have been compared, with nucleus sampling performing best when quality is prioritized. Other related work, such as model merging for Pareto optimality and music generation, is also discussed in the paper.
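The three inference-time sampling strategies compared above can be sketched in a few lines of plain Python. This is a generic illustration of temperature scaling, top-k filtering, and nucleus (top-p) filtering, not the setup used in any of the cited comparisons; all function names are hypothetical.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn logits into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def renormalize(probs, keep):
    """Zero out every index not in `keep` and rescale the rest to sum to 1."""
    kept = set(keep)
    total = sum(probs[i] for i in kept)
    return [p / total if i in kept else 0.0 for i, p in enumerate(probs)]


def top_k_filter(probs, k):
    """Keep only the k most probable tokens."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return renormalize(probs, order[:k])


def nucleus_filter(probs, top_p):
    """Keep the smallest set of top tokens whose total mass reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = [], 0.0
    for i in order:
        keep.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    return renormalize(probs, keep)


def sample(probs, rng):
    """Draw one token index from a probability list."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


probs = softmax([2.0, 1.0, 0.0, -1.0], temperature=0.8)
token = sample(nucleus_filter(probs, top_p=0.9), random.Random(0))
```

All three knobs trade diversity for quality in the same direction: a lower temperature, a smaller `k`, or a smaller `top_p` concentrates probability on the most likely tokens.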

Researchers at Google DeepMind have proposed a novel fine-tuning procedure called diversity-rewarded CFG distillation to address the limitations of classifier-free guidance (CFG) while preserving its strengths. This approach combines two training objectives: a distillation objective that encourages the model to follow CFG-augmented predictions, and a reinforcement learning (RL) objective with a diversity reward that promotes varied outputs for a given prompt. Additionally, the method allows weight-based model merging strategies to control the trade-off between quality and diversity at deployment time. The method is applied to the MusicLM text-to-music generative model, where it achieves a better quality-diversity Pareto front than standard CFG.
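One way to picture a diversity reward is as a dissimilarity score between two generations produced for the same prompt. The sketch below assumes each generation has already been mapped to an embedding vector and defines the reward as one minus cosine similarity. The embedding vectors, function names, and this exact formula are assumptions for illustration; the paper should be consulted for the reward actually used.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / (norm_a * norm_b)


def diversity_reward(embedding_a, embedding_b):
    """Hypothetical reward: higher when two generations are less alike."""
    return 1.0 - cosine_similarity(embedding_a, embedding_b)


# Toy embeddings of two generations for the same prompt (made-up values).
same_style = diversity_reward([1.0, 2.0], [2.0, 4.0])       # close to 0
different_style = diversity_reward([1.0, 0.0], [0.0, 1.0])  # orthogonal vectors
print(same_style, different_style)
```

In an RL setup of this kind, a coefficient such as β would scale this reward relative to the distillation term, so β = 0 would reduce to plain CFG distillation.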

The experiments were conducted to address three key questions:

- The efficiency of CFG distillation.

- The impact of diversity rewards on reinforcement learning.

- The potential of model merging to create an adjustable quality-diversity front.

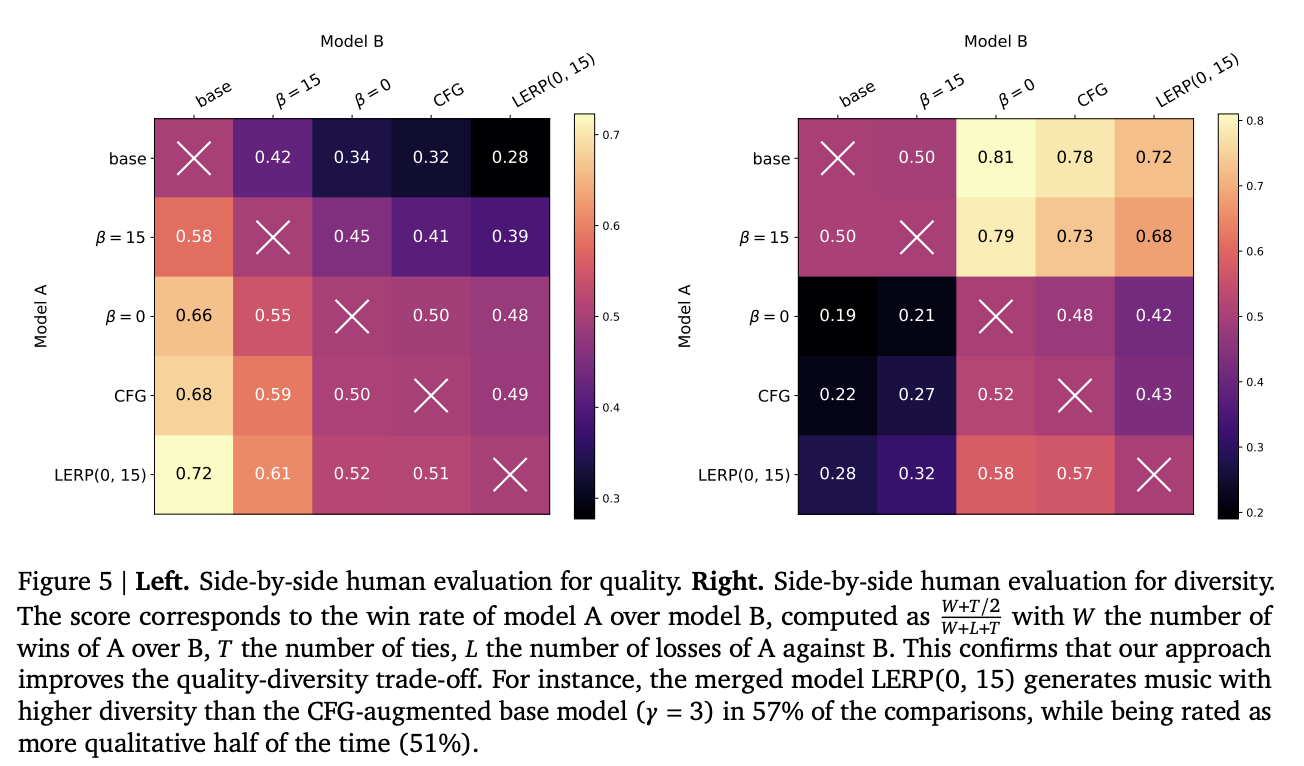

Quality is assessed by human raters, who score acoustic quality, text adherence, and musicality on a scale of 1 to 5, using 100 prompts with three raters per prompt. Diversity is assessed similarly, with raters comparing pairs of generations from 50 prompts. Evaluation metrics include the MuLan score for text adherence and a user preference score based on pairwise preferences. The study combines human evaluations of quality, diversity, and the quality-diversity trade-off with qualitative analysis to give a detailed picture of how the proposed method performs in music generation.

Human evaluations show that the CFG-distilled model performs comparably to the CFG-augmented base model in terms of quality, and both outperform the original base model. In terms of diversity, the CFG-distilled model with diversity reward (β = 15) significantly outperforms both the CFG-augmented and CFG-distilled (β = 0) models. Qualitative analysis of generic prompts such as “rock song” confirms that CFG improves quality but reduces diversity, while the β = 15 model generates a wider range of rhythms with improved quality. For specific prompts like “opera singer,” the quality-focused model (β = 0) produces conventional results, while the diverse model (β = 15) produces less conventional, more creative results. The merged model balances these qualities, generating music that is both high-quality and varied.
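The idea of combining a quality-focused and a diversity-focused model can be illustrated with linear interpolation of their weights, one common weight-based merging strategy. The sketch below assumes both checkpoints share identical parameter names and shapes, and it represents each parameter as a flat list of floats. The function name, the `alpha` parameter, and the toy checkpoints are hypothetical.

```python
def merge_weights(quality_weights, diversity_weights, alpha):
    """Linearly interpolate two checkpoints with matching parameters.

    alpha = 0.0 returns the quality-focused weights, alpha = 1.0 the
    diversity-focused ones, and values in between blend the two.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if quality_weights.keys() != diversity_weights.keys():
        raise ValueError("checkpoints must have identical parameter names")
    merged = {}
    for name, q_values in quality_weights.items():
        d_values = diversity_weights[name]
        merged[name] = [
            (1.0 - alpha) * q + alpha * d
            for q, d in zip(q_values, d_values)
        ]
    return merged


# Toy checkpoints with a single two-element parameter (made-up values).
quality_model = {"layer.w": [1.0, 2.0]}     # e.g. trained with β = 0
diversity_model = {"layer.w": [3.0, 0.0]}   # e.g. trained with β = 15

print(merge_weights(quality_model, diversity_model, alpha=0.5))
# {'layer.w': [2.0, 1.0]}
```

Since `alpha` is chosen after training, a single pair of checkpoints can serve many operating points along the quality-diversity curve without retraining.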

In conclusion, researchers at Google DeepMind have introduced a fine-tuning procedure called diversity-rewarded CFG distillation to improve the quality-diversity trade-off in generative models. This technique combines three key elements: (a) online distillation of classifier-free guidance (CFG) to eliminate its computational overhead, (b) reinforcement learning with a diversity reward based on embedding similarity, and (c) model merging for dynamic control of the quality-diversity balance at deployment time. Extensive experiments in text-to-music generation validate the effectiveness of this strategy, and human evaluations confirm the superior performance of the fine-tuned and then merged model. This approach has great potential for applications where creativity and alignment with user intent are important.

Check out the Paper. All credit for this research goes to the researchers of this project.


Sajjad Ansari is a final-year student at IIT Kharagpur. As a technology enthusiast, he delves into the practical applications of AI, with a focus on understanding the impact of AI technologies and their real-world implications. His goal is to articulate complex AI concepts in a clear and accessible way.
