This post is co-written with Ike Bennion of Visier.

Visier's mission is based on the belief that people are the most valuable asset of every organization and that optimizing their potential requires a nuanced understanding of workforce dynamics.

Paycor is one example of the many leading global enterprise people analytics companies that trust and use the Visier platform to process large volumes of data to generate informative analytics and actionable predictive insights.

Visier's predictive analytics has helped organizations like Providence Healthcare retain critical employees within their workforce and save an estimated $6 million by identifying and preventing employee burnout using a framework built on Visier's exit risk predictions.

Trusted sources such as Intelligent Knowledge Group, Gartner, G2, TrustRadius, and RedThread Research have recognized Visier for its inventiveness, excellent user experience, and vendor and customer satisfaction. Today, more than 50,000 organizations in 75 countries use the Visier platform as an engine to shape business strategies and drive better business results.

Unlock growth potential by overcoming the technology stack barrier

Visier's predictive and analytical power is what makes its people analytics solution so valuable. Users without experience in data science or analytics can generate rigorous, data-backed predictions to answer big questions like time to fill important positions or risk of crucial employee resignation.

It was an executive priority at Visier to continue innovating its analytical and predictive capabilities because they are one of the cornerstones of what its users love about its product.

The challenge for Visier was that its data science technology stack prevented it from innovating at the pace it wanted. It was expensive and time-consuming to experiment with and implement new analytical and predictive capabilities because:

- The data science technology stack was tightly coupled to the development of the entire platform. The data science team was unable to independently deploy changes to production, which limited the team to fewer and slower iteration cycles.

- The data science technology stack was a collection of solutions from multiple vendors, resulting in additional management and support overhead for the data science team.

Optimizing model management and deployment with SageMaker

Amazon SageMaker is a managed machine learning platform that provides data scientists and engineers with familiar concepts and tools to build, train, deploy, govern, and manage the infrastructure necessary for highly available, scalable model inference endpoints. Amazon SageMaker Inference Recommender is an example of a tool that can help data scientists and engineers become more autonomous and less dependent on external teams by providing guidance on how to properly size inference instances.

The existing data science technology stack was one of the many services that make up the Visier application platform. Using the SageMaker platform, Visier created an API-based microservices architecture for analytics and predictive services that was decoupled from the application platform. This gave the data science team the desired autonomy to implement changes independently and release new updates more frequently.
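
As a rough illustration of what calling such a decoupled prediction service could look like, the sketch below sends a JSON record to a SageMaker real-time endpoint through the `invoke_endpoint` operation of the SageMaker Runtime API. The endpoint name, the `tenure_years` feature, and the `exit_risk` response field are hypothetical and are not taken from Visier's implementation. The client is passed in as an argument, so the function can be exercised offline with a stand-in.

```python
import io
import json


def predict_exit_risk(runtime_client, endpoint_name, features):
    """Send one JSON record to a SageMaker endpoint and return the parsed reply.

    runtime_client is expected to behave like boto3.client("sagemaker-runtime").
    The endpoint name and the feature schema are assumptions for illustration.
    """
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=json.dumps(features).encode("utf-8"),
    )
    # The response body is a stream; read it fully and decode the JSON document.
    return json.loads(response["Body"].read().decode("utf-8"))


class FakeRuntimeClient:
    """Stand-in for the real client so the sketch runs without AWS credentials."""

    def invoke_endpoint(self, EndpointName, ContentType, Accept, Body):
        record = json.loads(Body)
        # Toy rule, not a real model: longer tenure lowers the score.
        score = round(1.0 / (1.0 + record["tenure_years"]), 3)
        payload = json.dumps({"exit_risk": score}).encode("utf-8")
        return {"Body": io.BytesIO(payload)}


if __name__ == "__main__":
    print(predict_exit_risk(FakeRuntimeClient(), "exit-risk-endpoint", {"tenure_years": 3}))
```

Because the caller depends only on the endpoint contract, the model behind the endpoint can be retrained and redeployed without a change to the application platform, which is the kind of autonomy described above.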

The results

The first improvement Visier saw after migrating its analytics and prediction services to SageMaker was that the data science team could spend more time on innovations, such as creating a prediction model validation process, instead of on implementation details and the integration of vendor tools.

Validation of the prediction model.

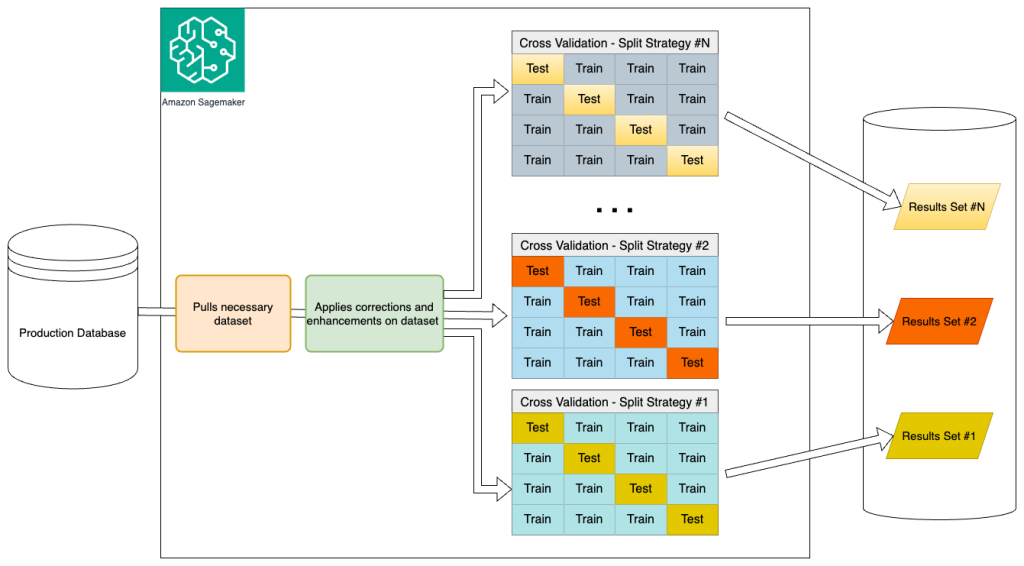

The following figure shows the validation process of the prediction model.

Using SageMaker, Visier created a prediction model validation process that:

- Extracts the training data set from the production databases.

- Gathers additional validation measures that describe the data set, along with specific corrections and improvements to the data set.

- Performs multiple cross-validation measurements using different splitting strategies.

- Stores validation results along with metadata about the execution in a permanent data store.

The validation process enabled the team to deliver a series of model advancements that improved prediction performance by 30% across their entire customer base.
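
The cross-validation and result-recording steps can be sketched in plain Python as follows. This is a minimal illustration, not Visier's process: the two splitting strategies (shuffled k-fold and time-ordered expanding window), the majority-class placeholder model, and the layout of the result record are all assumptions. Extracting the data and writing the record to a permanent store are left out.

```python
import json
import random
import statistics
from datetime import datetime, timezone


def kfold_splits(n_rows, k, seed=0):
    """Yield (train_idx, test_idx) pairs from a shuffled k-fold split."""
    idx = list(range(n_rows))
    random.Random(seed).shuffle(idx)
    for fold in range(k):
        test = idx[fold::k]
        held_out = set(test)
        yield [i for i in idx if i not in held_out], test


def time_ordered_splits(n_rows, k):
    """Yield expanding-window splits: train on the past, test on the next block."""
    block = n_rows // (k + 1)
    for fold in range(1, k + 1):
        yield list(range(fold * block)), list(range(fold * block, (fold + 1) * block))


def majority_baseline_accuracy(labels, train_idx, test_idx):
    """Placeholder model: predict the most common training label."""
    train_labels = [labels[i] for i in train_idx]
    guess = max(set(train_labels), key=train_labels.count)
    return sum(labels[i] == guess for i in test_idx) / len(test_idx)


def validate(labels, k=4):
    """Run each splitting strategy and return a record ready to be persisted."""
    strategies = {
        "kfold": kfold_splits(len(labels), k),
        "time_ordered": time_ordered_splits(len(labels), k),
    }
    results = {}
    for name, splits in strategies.items():
        scores = [majority_baseline_accuracy(labels, tr, te) for tr, te in splits]
        results[name] = {"mean_accuracy": statistics.mean(scores), "folds": len(scores)}
    return {
        # Execution metadata stored alongside the validation results.
        "run_at": datetime.now(timezone.utc).isoformat(),
        "dataset": {"rows": len(labels), "positive_rate": sum(labels) / len(labels)},
        "results": results,
    }


if __name__ == "__main__":
    toy_labels = [0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0]
    print(json.dumps(validate(toy_labels), indent=2))
```

Comparing a shuffled split with a time-ordered one is useful for workforce data, where a model that looks accurate on shuffled rows may still perform poorly when it has to predict a period it has not seen.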

Train customer-specific predictive models at scale

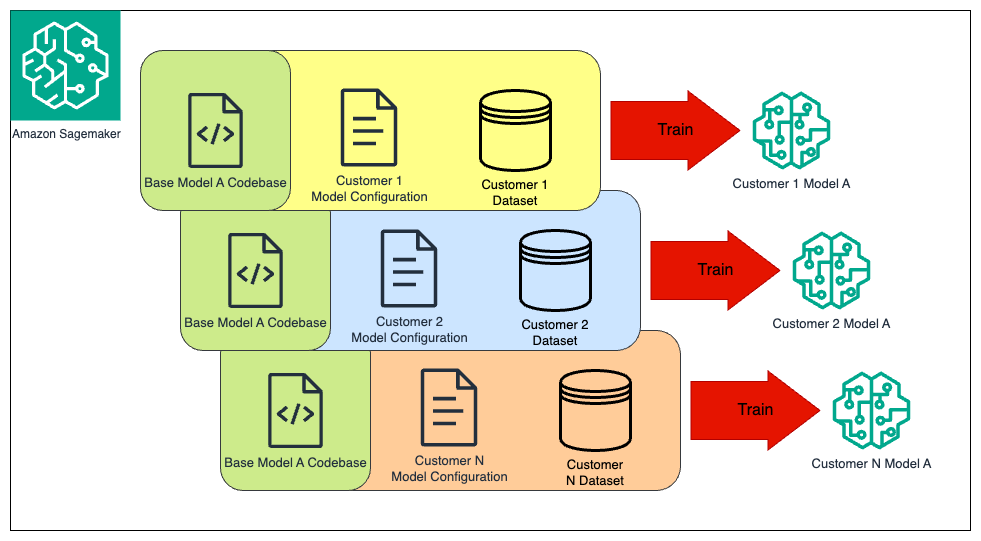

Visier develops and manages thousands of predictive models specific to its enterprise clients. The second workflow improvement the data science team made was to develop a highly scalable method for generating all customer-specific predictive models. This allowed the team to deliver ten times as many models with the same amount of resources.

As shown in the figure above, the team developed a model training process where model changes are made to a central prediction codebase. This codebase is run separately for each Visier client to train a sequence of custom models (for different time points) that are sensitive to the specialized configuration of each client and its data. Visier uses this pattern to scalably drive innovation from a single model design to thousands of custom models across its customer base. To make training of large models efficient, SageMaker provides libraries that support model parallel training (SageMaker Model Parallel Library) and distributed data parallel training (SageMaker Distributed Data Parallelism Library). To learn more about these libraries, see Distributed Training and Efficient Scaling with the Amazon SageMaker Model Parallel and Data Parallel Libraries.
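
The pattern of one central codebase fanned out over many clients and time points can be sketched as below. Everything named here is hypothetical: `ClientConfig`, the feature names, and the stub `train_model` stand in for whatever the real codebase does, and a real run would launch training jobs rather than loop in a single process.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClientConfig:
    """Per-client settings; all fields are hypothetical."""
    client_id: str
    time_points: tuple
    excluded_features: frozenset = frozenset()


# Assumed feature names shared by the central model design.
BASE_FEATURES = ("tenure", "role_level", "manager_changes")


def train_model(client_id, time_point, features):
    """Central training routine shared by every client.

    A real implementation would fit a model; this stub only records
    what would be trained.
    """
    return {"client": client_id, "as_of": time_point, "features": list(features)}


def train_all(configs):
    """Run the shared codebase once per client and per time point."""
    models = []
    for cfg in configs:
        # Respect each client's configuration when selecting features.
        features = [f for f in BASE_FEATURES if f not in cfg.excluded_features]
        for time_point in cfg.time_points:
            models.append(train_model(cfg.client_id, time_point, features))
    return models


if __name__ == "__main__":
    configs = [
        ClientConfig("client-a", ("2023-01", "2023-07")),
        ClientConfig("client-b", ("2023-01",), frozenset({"role_level"})),
    ]
    for model in train_all(configs):
        print(model)
```

A change to `train_model` or `BASE_FEATURES` reaches every client's models on the next run, which is the property the paragraph above describes.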

Using the model validation workload shown above, changes made to a predictive model can be validated in as little as three hours.

Process unstructured data

Iterative improvements, a scalable implementation, and consolidation of data science technology were a great start, but when Visier adopted SageMaker, the goal was to enable innovation that was completely outside the scope of the previous technology stack.

A unique advantage Visier has is the ability to learn from the collective behaviors of employees across its customer base. Tedious data engineering tasks, such as extracting data into the environment, and database infrastructure costs were eliminated by securely storing its large number of customer-related data sets in Amazon Simple Storage Service (Amazon S3) and using Amazon Athena to query the data directly with SQL. Visier used these AWS services to combine relevant data sets and feed them directly into SageMaker, resulting in the creation and launch of a new prediction product called Community Predictions. Community Predictions gives smaller organizations the power to create predictions based on the entire community's data, rather than just their own. That gives a 100-person organization access to the kind of predictions that would otherwise be reserved for companies with thousands of employees.
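
Querying data in Amazon S3 through Athena can be sketched with the `start_query_execution` operation of the Athena API, as below. The table `employee_snapshots`, its columns, the database name, and the output location are hypothetical and do not describe Visier's schema. The client is passed in as an argument so the function runs offline with a stand-in.

```python
def start_community_query(athena_client, database, output_location):
    """Start an Athena query over data stored in S3 and return its execution ID.

    athena_client is expected to behave like boto3.client("athena").
    The table and column names are assumptions; `resigned` is taken to be
    a 0/1 integer column so that AVG yields a rate.
    """
    query = (
        "SELECT industry, COUNT(*) AS employees, AVG(resigned) AS resignation_rate "
        "FROM employee_snapshots GROUP BY industry"
    )
    response = athena_client.start_query_execution(
        QueryString=query,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    return response["QueryExecutionId"]


class FakeAthenaClient:
    """Stand-in that records the request instead of calling AWS."""

    def start_query_execution(self, **kwargs):
        self.last_request = kwargs
        return {"QueryExecutionId": "example-execution-id"}


if __name__ == "__main__":
    client = FakeAthenaClient()
    print(start_community_query(client, "community_db", "s3://example-bucket/athena-results/"))
```

Athena writes the query results to the S3 output location, from which they can be read as input for a training job.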

To learn how you can manage and process your own unstructured data, see Managing and Governing Unstructured Data Using AWS AI/ML and Analytics Services.

Use Visier data in Amazon SageMaker

With the transformative success Visier had internally, it wanted to ensure its end customers could also benefit from the Amazon SageMaker platform to develop their own AI and machine learning (AI/ML) models.

Visier has written a complete tutorial on how to use Visier data in Amazon SageMaker and has also made a Python connector available in its GitHub repository. The Python connector allows customers to funnel Visier data into their own AI/ML projects to better understand the impact of their people on finances, operations, customers, and partners. These results are often imported back into the Visier platform to distribute the insights and drive derived analytics that further improve outcomes throughout the employee lifecycle.

Conclusion

Visier's success with Amazon SageMaker demonstrates the power and flexibility of this managed machine learning platform. By using SageMaker's capabilities, Visier increased its model throughput by 10x, accelerated innovation cycles, and unlocked new opportunities, such as processing unstructured data for its Community Predictions product.

If you're looking to streamline your machine learning workflows, scale your model deployments, and unlock valuable insights from your data, explore the possibilities with SageMaker and built-in capabilities like Amazon SageMaker Pipelines.

Get started today: create an AWS account, go to the Amazon SageMaker console, and contact your AWS account team to set up an Experience-Based Acceleration engagement. This can help you unlock the full potential of your data and create custom generative AI and machine learning models that drive actionable insights and business impact.

About the authors

Kinman Lam is a solutions architect at AWS. He is responsible for the health and growth of some of the largest ISV/DNB companies in Western Canada. He is also a member of the AWS Canada Generative AI vTeam and has helped a growing number of Canadian companies successfully launch advanced generative AI use cases.

Ike Bennion is vice president of platform and platform marketing at Visier and a recognized thought leader at the intersection of people, work, and technology. With a rich history in implementation, product development, product strategy, and marketing, he specializes in market intelligence, business strategy, and innovative technologies, including artificial intelligence and blockchain. Ike is passionate about using data to drive equitable and intelligent decision making. Outside of work, he enjoys dogs, hip hop, and weightlifting.
