False memories, recollections of events that never occurred or that deviate significantly from what actually happened, pose a significant challenge in psychology and have far-reaching consequences. They can compromise legal proceedings, lead to erroneous decisions, and distort testimony. Studying false memories is crucial because of their potential impact on many aspects of human life and society. Researchers face several challenges when investigating this phenomenon, including the reconstructive nature of memory, which is shaped by individual attitudes, expectations, and cultural contexts. The malleability of memory and its susceptibility to linguistic influence further complicate the study of false memories. Furthermore, the neural signals of true and false memories are so similar that the two are hard to tell apart, which makes it difficult to develop practical methods for detecting false memories in real-world settings.

Previous research has explored various aspects of false memory formation and its relationship to emerging technologies. Studies of deepfakes and misleading information have revealed how susceptible human memory is to external influences. Social robots have also been shown to influence recognition memory: in one study, 77% of the inaccurate, emotionally neutral information provided by a robot was incorporated into participants' memories as errors, an effect comparable to human-induced memory distortions. Neuroimaging techniques, such as functional magnetic resonance imaging (fMRI) and event-related potentials (ERPs), have been used to examine the neural correlates of true and false memories. These studies have identified distinct patterns of brain activation associated with true and false recognition, particularly in early visual processing regions and the medial temporal lobe. However, these methods remain difficult to apply in real-world settings because of their high cost, complex infrastructure requirements, and time demands. Despite these advances, there is a significant research gap in understanding how conversational AI, particularly large language models (LLMs), influences the formation of false memories.

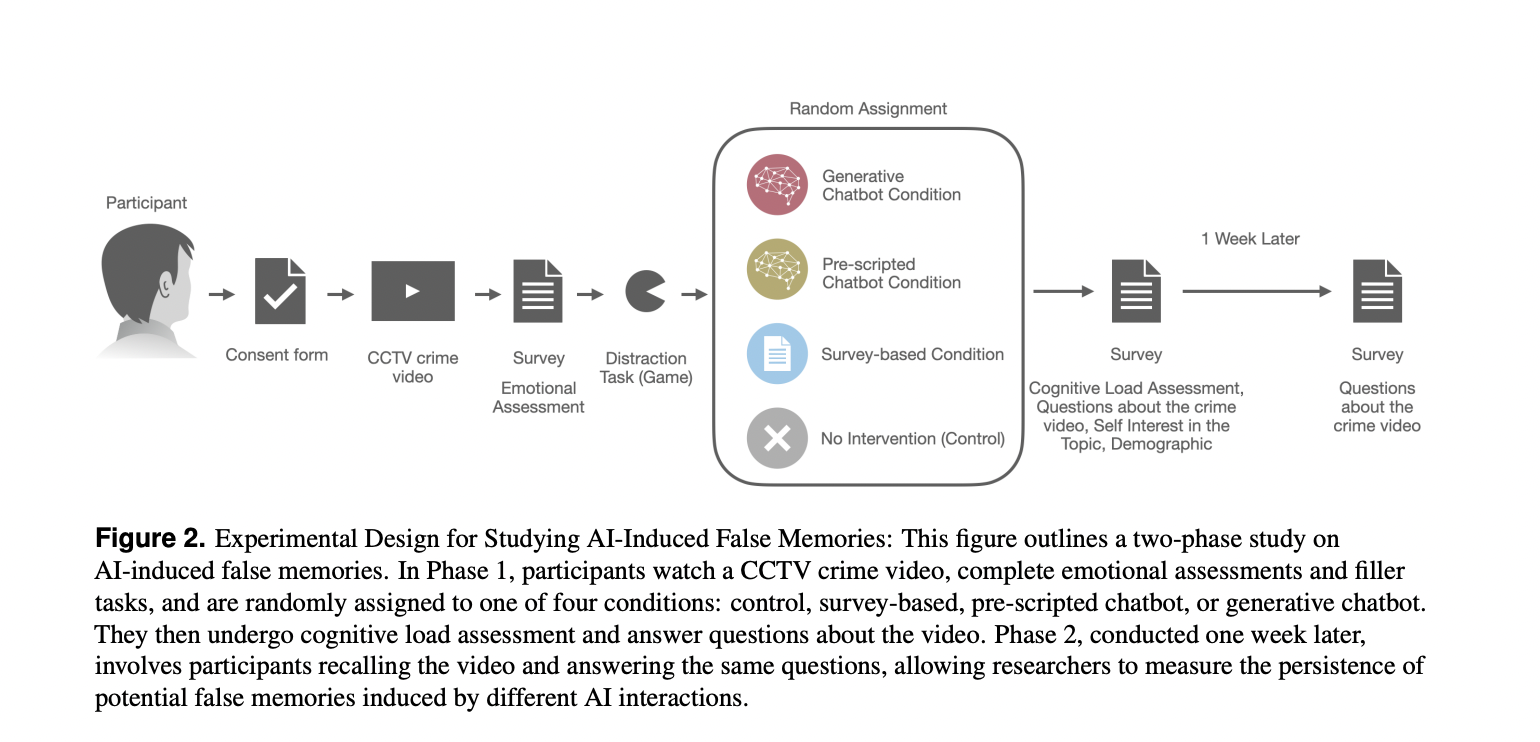

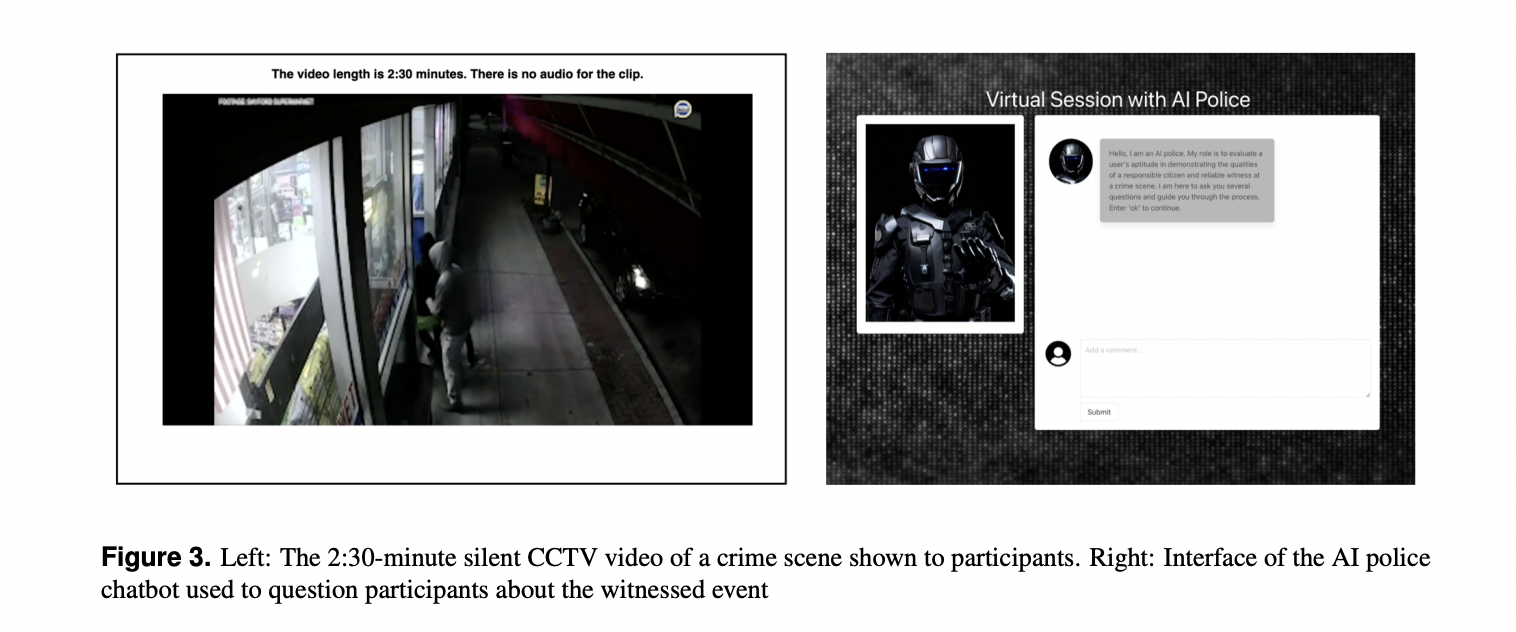

Researchers from the MIT Media Lab and the University of California conducted a comprehensive study of how LLM-powered conversational AI affects the formation of false memories, simulating a witness scenario in which AI systems acted as interrogators. The two-phase experiment involved 200 participants, each randomly assigned to one of four conditions. A cover story concealed the study's true purpose: participants were told that it assessed reactions to video coverage of a crime. In Phase 1, participants watched a two-and-a-half-minute silent CCTV video of an armed robbery at a store, which they could not pause, simulating the experience of a witness. They then completed the interaction for their assigned condition. The four conditions were designed to systematically compare different mechanisms that can influence memory, from traditional survey methods to AI-powered interactions: a control condition, a survey-based condition, a prewritten chatbot condition, and a generative chatbot condition. This design allows a thorough analysis of how different interrogation techniques might affect memory formation and recall in witness scenarios.
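The article says only that participants were "randomly assigned" to the four conditions. As a minimal sketch, assuming equal group sizes (which the source does not state), balanced random assignment of 200 participants could look like the following; the function name `assign_conditions`, the condition labels, and the participant IDs are hypothetical and not taken from the study.

```python
import random
from collections import Counter

# Hypothetical labels for the four conditions described in the article.
CONDITIONS = ["control", "survey", "prewritten_chatbot", "generative_chatbot"]


def assign_conditions(participant_ids, conditions=CONDITIONS, seed=None):
    """Balanced random assignment (assumption: equal group sizes).

    Builds a list of condition slots that cycles through the conditions,
    so each appears equally often (any remainder goes to the first
    conditions), shuffles it, and pairs each participant with one slot.
    """
    rng = random.Random(seed)
    n, k = len(participant_ids), len(conditions)
    slots = [conditions[i % k] for i in range(n)]
    rng.shuffle(slots)
    return dict(zip(participant_ids, slots))


if __name__ == "__main__":
    # Hypothetical participant IDs P001..P200.
    participants = [f"P{i:03d}" for i in range(1, 201)]
    assignment = assign_conditions(participants, seed=42)
    print(Counter(assignment.values()))
```

Shuffling a list that contains each condition equally often guarantees balanced groups (50 per condition for 200 participants), whereas drawing a condition independently for each participant would let group sizes drift.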

The study used this two-phase design to test how different AI interaction methods affect the formation of false memories. In Phase 1, after watching the CCTV video of the armed robbery, participants interacted with one of the four conditions: control, survey-based, prewritten chatbot, or generative chatbot. The survey-based condition used Google Forms with 25 yes/no questions, five of which were misleading. The prewritten chatbot asked the same questions as the survey, while the generative chatbot used an LLM to provide feedback on participants' answers, potentially reinforcing false memories. After the interaction, participants answered 25 follow-up questions designed to measure their recall of the video content.

Phase 2, conducted one week later, assessed whether the induced false memories persisted. This design made it possible to evaluate both the immediate and the long-term effects of each interaction method on false memories. The study asked how various AI interaction methods influence the formation of false memories, testing three pre-registered hypotheses that compared the conditions and explored moderating factors. Additional research questions examined participants' confidence in immediate and delayed false memories, as well as how false memory counts changed over time.

The results showed that short-term interactions (10 to 20 minutes) with generative chatbots can induce significantly more false memories, and increase users' confidence in them, compared with the other interventions. The generative chatbot condition produced a large misinformation effect: 36.4% of users were misled through the interaction, compared with 21.6% in the survey-based condition. Statistical analysis showed that the generative chatbot induced significantly more immediate false memories than both the survey-based intervention and the prewritten chatbot.

All intervention conditions significantly increased users' confidence in their immediate false memories relative to the control condition, with the generative chatbot condition producing confidence levels approximately twice those of the control. Interestingly, the number of false memories induced by the generative chatbot remained constant after one week, whereas the control and survey conditions showed significant increases in false memories over that period.

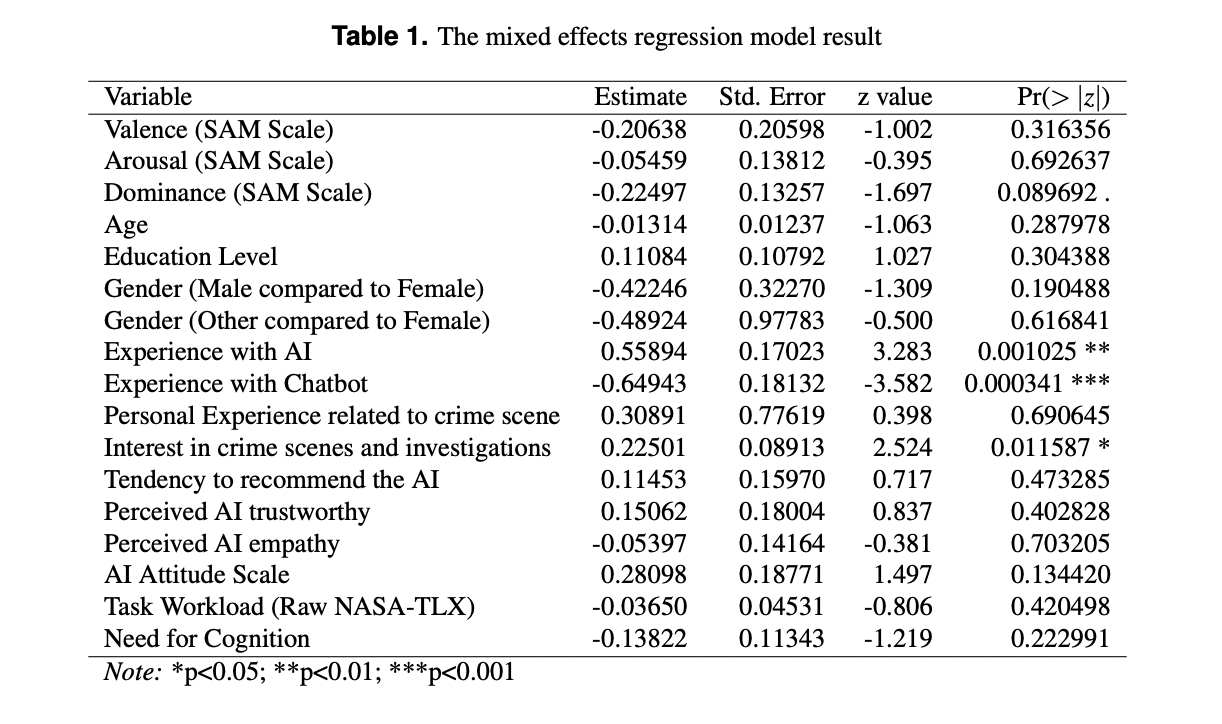

The study also identified several moderating factors in AI-induced false memories. Participants who were less familiar with chatbots, those more familiar with AI technology, and those more interested in crime investigations were more susceptible to forming false memories. These findings highlight the complex interplay between user characteristics and AI-induced false memories, and they call for careful consideration of these factors when deploying AI systems in sensitive contexts such as eyewitness testimony.

This study provides compelling evidence that AI, particularly generative chatbots, can significantly influence the formation of false memories in humans. The research underscores the urgent need for careful consideration and ethical guidelines as AI systems become more sophisticated and more deeply integrated into sensitive contexts. The findings highlight the risks of human-AI interaction, especially in areas such as eyewitness testimony and legal proceedings. As AI technology advances, it is crucial to balance its benefits with safeguards that protect the integrity of human memory and decision-making. Further research is needed to fully understand and mitigate these effects.

Check out the Paper. All credit for this research goes to the researchers of this project.

Asjad is an intern consultant at Marktechpost. He is pursuing a B.Tech in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching applications of machine learning in healthcare.