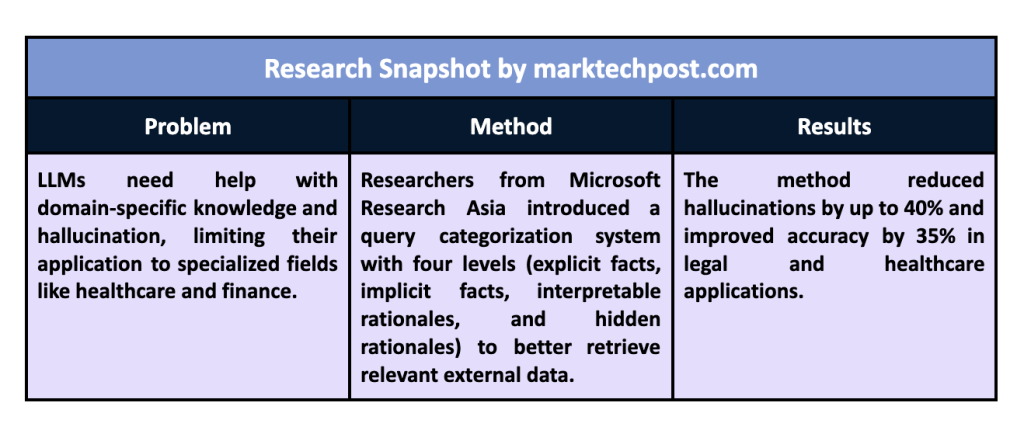

Large language models (LLMs) have revolutionized the field of ai with their ability to generate human-like text and perform complex reasoning. However, despite their capabilities, LLMs need help with tasks that require specific domain knowledge, especially in healthcare, law, and finance. When trained on large data sets, these models often miss critical information from specialized domains, leading to hallucinations or inaccurate responses. Improving LLMs with external data has been proposed as a solution to these limitations. By integrating relevant information, models become more accurate and effective, significantly improving their performance. The retrieval-augmented generation (RAG) technique is an excellent example of this approach, allowing LLMs to recover the data needed during the generation process to provide more accurate and timely responses.

One of the most important problems in the implementation of LLM is its inability to handle queries that require specific and up-to-date information. While LLMs are very capable when it comes to general knowledge, they fail when assigned specialized or urgent queries. This deficit occurs because most models are trained with static data, so they can only update their knowledge with external input. For example, in healthcare, a model that requires access to current medical guidelines will struggle to provide accurate advice, potentially putting lives at risk. Similarly, legal and financial systems require constant updates to keep up with changing regulations and market conditions. Therefore, the challenge lies in developing a model that can dynamically extract relevant data to meet the specific needs of these domains.

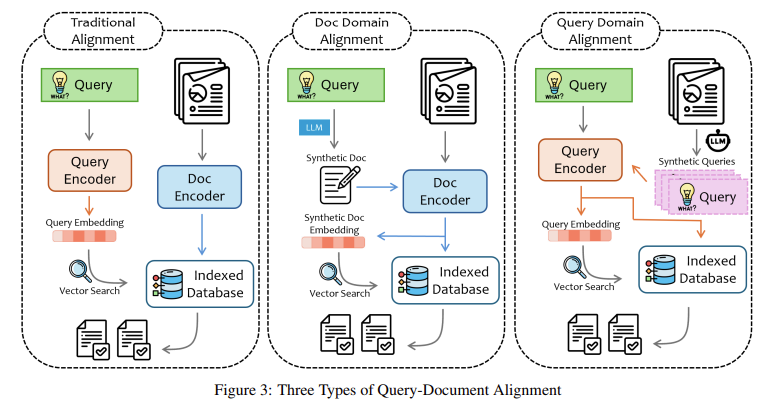

Current solutions, such as fine-tuning and RAG, have made progress in addressing these challenges. Fine-tuning allows a model to be retrained with domain-specific data, tailoring it for particular tasks. However, this approach is time-consuming and requires a large amount of training data, which is only sometimes available. Additionally, fine tuning often results in overfitting, where the model becomes too specialized and needs help with general queries. On the other hand, RAG offers a more flexible approach. Instead of relying solely on pre-trained knowledge, RAG allows models to retrieve external data in real time, improving their accuracy and relevance. Despite its advantages, RAG still faces several challenges, such as the difficulty of processing unstructured data, which can come in various forms, such as text, images, and tables.

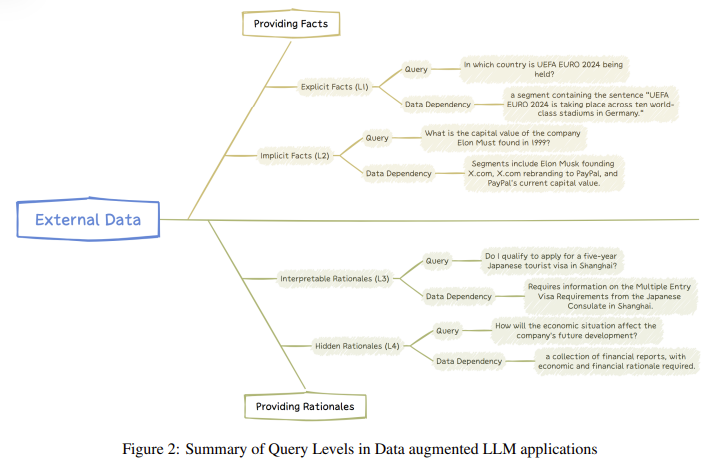

Researchers at Microsoft Research Asia introduced a novel method that classifies user queries into four different levels based on the complexity and type of external data required. These levels are explicit facts, implicit facts, interpretable foundations, and hidden foundations. Categorization helps adapt the model's approach to retrieving and processing data, ensuring that it selects the most relevant information for a given task. For example, explicit fact queries involve simple questions, such as “What is the capital of France?” where the response can be retrieved from external data. Implicit fact queries require more reasoning, such as combining multiple pieces of data to infer a conclusion. Interpretable foundation queries involve domain-specific guidelines, while hidden foundation queries require deep reasoning and often deal with abstract concepts.

The method proposed by Microsoft Research allows LLMs to differentiate between these types of queries and apply the appropriate level of reasoning. For example, in the case of hidden foundation queries, where there is no clear answer, the model could infer patterns and use domain-specific reasoning methods to generate an answer. By dividing queries into these categories, the model becomes more efficient in retrieving the necessary information and providing accurate answers based on context. This categorization also helps reduce the computational load on the model, as it can now focus on retrieving only the data relevant to the query type instead of scanning large amounts of unrelated information.

The study also highlights the impressive results of this approach. The system significantly improved performance in specialized domains such as healthcare and legal analysis. For example, in healthcare applications, the model reduced the rate of hallucinations by up to 40%, providing more informed and reliable answers. The model's accuracy in processing complex documents and providing detailed analysis increased by 35% in legal systems. Overall, the proposed method allowed for more accurate retrieval of relevant data, leading to better decision making and more reliable results. The study found that RAG-based systems reduced incidents of hallucinations by basing model responses on verifiable data, improving accuracy in critical applications such as medical diagnosis and legal document processing.

In conclusion, this research provides a crucial solution to one of the fundamental problems in implementing LLM in specialized domains. By introducing a system that classifies queries based on complexity and type, Microsoft Research researchers have developed a method that improves the accuracy and interpretability of LLM results. This framework allows LLMs to retrieve the most relevant external data and effectively apply it to domain-specific queries, reducing hallucinations and improving overall performance. The study showed that using structured query categorization can improve results by up to 40%, making it an important step forward in ai-powered systems. By addressing both the problem of data retrieval and the integration of external knowledge, this research paves the way for more reliable and robust LLM applications in various industries.

look at the Paper. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on twitter.com/Marktechpost”>twitter and join our Telegram channel and LinkedIn Grabove. If you like our work, you will love our information sheet..

Don't forget to join our SubReddit over 50,000ml

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. Their most recent endeavor is the launch of an ai media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity among the public.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>