Introduction

The AI revolution has ushered in a new era of multimodal models that can read images as well as text. Pixtral 12B and Qwen2-VL-72B are two prominent vision-language models driving this shift: both accept images alongside text prompts and respond in text, which lets them describe scenes, extract data from documents, and turn diagrams into code. Built on transformer architectures and trained on large datasets, they are finding uses that range from creative work to commercial applications, changing how many industries work with visual content.

In this blog, we conduct an in-depth, hands-on evaluation of Pixtral 12B and Qwen2-VL-72B, using Hugging Face Spaces as our testing ground.

Learning outcomes

- Understand the contrasting strengths of Pixtral 12B and Qwen2-VL-72B on image-understanding tasks.

- Evaluate how model size affects performance and output quality in AI-driven applications.

- Identify where Pixtral 12B suits real-time scenarios and where Qwen2-VL-72B's strengths suit high-end projects.

- Recognize the importance of efficiency and accuracy when selecting AI models for different use cases.

- Analyze practical performance results to choose the best model for specific image-based tasks.

This article was published as part of the Data Science Blogathon.

Comparison between Pixtral 12B and Qwen2-VL-72B

Now let's compare Pixtral 12B and Qwen2-VL-72B in the following table:

| Feature | Pixtral 12B | Qwen2-VL-72B |
|---|---|---|
| Parameters | 12 billion | 72 billion |
| Main focus | Speed and efficiency | Detail and contextual understanding |
| Ideal use cases | Marketing, mobile applications, web platforms | Entertainment, advertising, film production |
| Performance | Fast, low-latency responses | High quality, intricate detail |
| Hardware requirements | Consumer GPUs, edge devices | High-end GPUs, cloud infrastructure |
| Output quality | Accurate, scales well | Extremely detailed |
| Architecture | Multimodal transformer, optimized for general-purpose tasks | Multimodal transformer |
| Target users | Developers, artists, designers | High-end creative professionals |
| Trade-offs | Less complexity, less hardware-intensive | Requires powerful hardware and more complex prompt handling |
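The parameter counts in the table translate fairly directly into memory requirements. As a rough sketch, assuming the nominal counts above and ignoring activations, the KV cache, and image tokens, the weights alone need about 2 bytes per parameter at bf16, 1 byte at int8, and 0.5 bytes at 4-bit quantization:

```python
# Rough, weights-only memory estimate for dense models at common precisions.
# Assumptions: nominal parameter counts from the comparison table (12B and 72B);
# activations, KV cache, and image tokens are ignored.

BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}

MODELS = {"Pixtral 12B": 12e9, "Qwen2-VL-72B": 72e9}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate size of the model weights in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in MODELS.items():
        sizes = ", ".join(
            f"{p}: {weight_memory_gb(params, p):.0f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{name}: {sizes}")
```

By this estimate, Qwen2-VL-72B's bf16 weights (about 144 GB) are larger than the memory of any single consumer GPU, while Pixtral 12B (about 24 GB at bf16) becomes comfortable on a consumer card mainly once quantized to 8 or 4 bits. Real deployments need headroom beyond these figures.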

Architectural differences between Pixtral 12B and Qwen2-VL-72B

| Feature | Pixtral 12B | Qwen2-VL-72B |
|---|---|---|
| Model size | 12 billion parameters | 72 billion parameters |
| Focus | Efficiency and speed in image understanding | Analysis of highly complex and detailed images |
| Architecture | Transformer-based, optimized for real-time use | Multimodal transformer with deep contextual learning |
| Training data | Dataset optimized for speed and performance | Large dataset focused on capturing high-quality visual detail |
| Handling visual details | Focuses on general tasks with decent quality | Excels at intricate, detailed, and complex images |
| Inference speed | Faster, with minimal latency | Slower due to model size and depth of analysis |
| Fine-tuning flexibility | Easier to adapt for smaller projects | Requires more resources to fine-tune a large model |
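The inference-speed row can be made concrete with two common rules of thumb for dense transformers: a forward pass costs roughly 2 FLOPs per parameter per generated token, and at batch size 1 decoding is usually limited by how fast the weights can be read from memory. The sketch below applies both; the 1,000 GB/s aggregate bandwidth is a hypothetical figure chosen for illustration, not a measurement from this evaluation.

```python
# Back-of-the-envelope decoding cost per generated token for a dense transformer.
# Assumptions (rules of thumb, not measurements from this article):
#   * forward-pass compute is roughly 2 FLOPs per parameter per token;
#   * at batch size 1, every weight is read once per token, so
#     time per token >= weight_bytes / memory_bandwidth.
# The bandwidth value used below is hypothetical.


def flops_per_token(num_params: float) -> float:
    """Approximate forward-pass FLOPs for one generated token."""
    return 2.0 * num_params


def min_ms_per_token(num_params: float, bytes_per_param: float, bandwidth_gb_s: float) -> float:
    """Lower bound on milliseconds per token when decoding is memory-bandwidth bound."""
    weight_bytes = num_params * bytes_per_param
    return weight_bytes / (bandwidth_gb_s * 1e9) * 1e3


if __name__ == "__main__":
    for name, params in {"Pixtral 12B": 12e9, "Qwen2-VL-72B": 72e9}.items():
        print(
            f"{name}: {flops_per_token(params):.2e} FLOPs/token, "
            f">= {min_ms_per_token(params, 2.0, 1000.0):.0f} ms/token at bf16"
        )
```

Under these assumptions the 72B model does about six times the work per token, which is consistent with the table's point that it is slower; actual latency also depends on batching, quantization, kernels, and the number of image tokens in the prompt.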

Together, these tables show how the two models differ architecturally and how those differences affect their performance and use cases.

Pixtral 12B and Qwen2-VL-72B performance analysis

Both models are available on Hugging Face Spaces, which lets anyone explore their capabilities first-hand. You can access them via the links below:

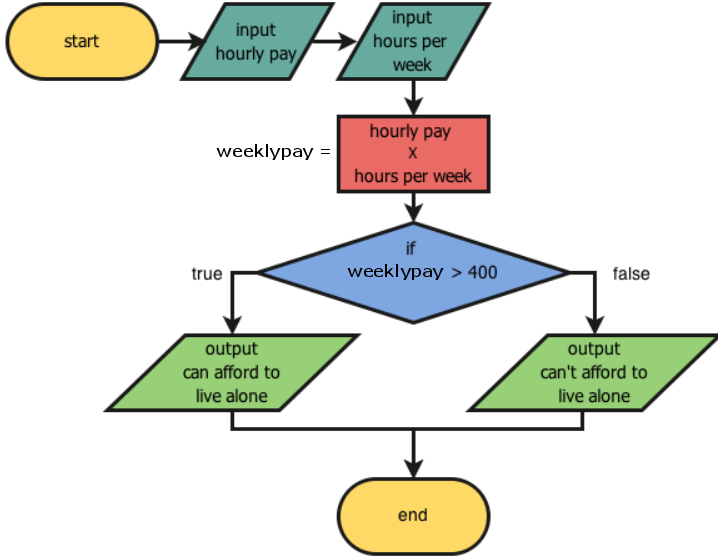

Task 1: Provide the Python code for the following flowchart

Pixtral 12B

```python
# Function to determine if one can afford to live alone
def can_afford_to_live_alone(hourly_pay, hours_per_week):
    # Calculate weekly pay
    weekly_pay = hourly_pay * hours_per_week

    # Determine if weekly pay is greater than 400
    if weekly_pay > 400:
        return "can afford to live alone"
    else:
        return "can't afford to live alone"


# Main function to execute the flowchart
def main():
    # Input hourly pay and hours per week
    hourly_pay = float(input("Enter hourly pay: "))
    hours_per_week = float(input("Enter hours per week: "))

    # Determine if one can afford to live alone
    result = can_afford_to_live_alone(hourly_pay, hours_per_week)

    # Output the result
    print(result)


# Execute the main function
if __name__ == "__main__":
    main()
```

Qwen2-VL-72B

```python
# Start
hourly_pay = float(input("Enter your hourly pay: "))
hours_per_week = float(input("Enter the number of hours you work per week: "))

# Calculate weekly pay
weekly_pay = hourly_pay * hours_per_week

# Check if weekly pay is greater than 400
if weekly_pay > 400:
    print("You can afford to live alone.")
else:
    print("You can't afford to live alone.")

# End
```

Winner: Pixtral 12B

The Pixtral 12B snippet is better because it follows good programming practice: it uses functions to separate the logic, which improves readability and maintainability. This modular approach makes the code easier to extend, test, and debug. For simple scripts the Qwen2-VL-72B snippet may be sufficient, but for more complex scenarios or larger projects, the Pixtral 12B structure is preferable.
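To illustrate the testability point, here is a minimal sketch that reuses Pixtral 12B's decision function (with type hints added) and checks it directly, without going through `input()`. The test function name is our own; the file runs as a plain script, and the same function could be collected by pytest if placed in a test module.

```python
def can_afford_to_live_alone(hourly_pay: float, hours_per_week: float) -> str:
    # Same logic as the Pixtral 12B snippet: weekly pay must exceed 400.
    weekly_pay = hourly_pay * hours_per_week
    if weekly_pay > 400:
        return "can afford to live alone"
    return "can't afford to live alone"


def test_can_afford_to_live_alone() -> None:
    # The decision logic is checked without any interactive input.
    assert can_afford_to_live_alone(20, 40) == "can afford to live alone"    # 800
    assert can_afford_to_live_alone(10, 30) == "can't afford to live alone"  # 300
    assert can_afford_to_live_alone(10, 40) == "can't afford to live alone"  # exactly 400


if __name__ == "__main__":
    test_can_afford_to_live_alone()
    print("all checks passed")
```

The third assertion pins down the boundary: because both snippets use a strict `>`, a weekly pay of exactly 400 is reported as not affordable.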

Task 2: Convert the image to CSV format

Pixtral 12B

Qwen2-VL-72B

Winner: Qwen2-VL-72B

Qwen2-VL-72B produced the better result. It formatted the CSV correctly without additional headers, so each data row lines up with its columns, which makes the file easier to load and analyze directly.
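One quick way to check the property described above, that every data row lines up with the columns, is to parse the model's CSV output and compare field counts. This is a minimal sketch using Python's standard `csv` module; the function name and sample strings are hypothetical, since the models' actual outputs are not reproduced here.

```python
import csv
import io


def rows_align_with_header(csv_text: str) -> bool:
    """Return True if every non-blank row has as many fields as the first (header) row."""
    rows = [row for row in csv.reader(io.StringIO(csv_text)) if row]  # skip blank lines
    if not rows:
        return False  # nothing to check
    width = len(rows[0])
    return all(len(row) == width for row in rows[1:])


# Hypothetical model outputs, for illustration only.
GOOD_CSV = "Item,Quantity,Price\nApple,3,1.20\nBanana,5,0.50\n"
BAD_CSV = "Item,Quantity,Price\nApple,3\nBanana,5,0.50,extra\n"

if __name__ == "__main__":
    print(rows_align_with_header(GOOD_CSV))  # True
    print(rows_align_with_header(BAD_CSV))   # False
```

A check like this only catches structural problems; whether the cell values match the source image still has to be verified by eye.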

Task 3: Tell me the input fields in this image

Pixtral 12B

Qwen2-VL-72B

Winner: Pixtral 12B

Both models identified the input fields, but Pixtral 12B came out ahead by giving a more detailed and comprehensive description of the image along with the input fields.

Task 4: Explain this image

Pixtral 12B

Qwen2-VL-72B

Winner: Pixtral 12B

Both models recognized that the image shows a running cat, but Pixtral 12B gave a more fitting explanation with more specific, identifiable details.

Performance rating

In terms of performance, Pixtral 12B won 3 of the 4 tasks, showing strong accuracy and detail despite being much smaller (12B parameters) than Qwen2-VL-72B. The overall results can be summarized as follows:

- Pixtral 12B: Provided detailed, accurate, and context-aware descriptions, outperforming Qwen2-VL-72B on most tasks despite its smaller size. Its consistent accuracy earns it the higher rating in this comparison.

- Qwen2-VL-72B: Despite its larger size, it was less accurate on key tasks. It gave good overviews but lacked Pixtral 12B's depth and precision.
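The 3-out-of-4 figure is a simple tally of the task winners reported above. The sketch below recomputes it; note that with only four tasks, each task shifts a model's win rate by 25 percentage points, and the 4.5/5 and 3.5/5 ratings that follow are the author's overall judgment rather than something derived from this tally.

```python
from collections import Counter
from typing import Dict

# Task winners as reported in this article's evaluation.
TASK_WINNERS = {
    "Task 1: flowchart to Python": "Pixtral 12B",
    "Task 2: image to CSV": "Qwen2-VL-72B",
    "Task 3: input fields": "Pixtral 12B",
    "Task 4: explain the image": "Pixtral 12B",
}


def win_rates(winners: Dict[str, str]) -> Dict[str, float]:
    """Return each model's share of the tasks it won."""
    counts = Counter(winners.values())
    total = len(winners)
    return {model: count / total for model, count in counts.items()}


if __name__ == "__main__":
    for model, rate in win_rates(TASK_WINNERS).items():
        print(f"{model}: {rate:.0%} of tasks won")
```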

Overall rating

- Pixtral 12B: 4.5/5

- Qwen2-VL-72B: 3.5/5

Pixtral’s ability to outperform a much larger model indicates its efficiency and focus on delivering accurate results.

Conclusion

In the rapidly evolving landscape of multimodal AI, Pixtral 12B and Qwen2-VL-72B represent two distinct approaches to vision-language tasks, each with its own strengths. This hands-on evaluation shows that Pixtral 12B, despite being the smaller model, consistently delivers accurate and detailed results and is particularly well suited to tasks that prioritize speed and accuracy. That balance of efficiency and output quality makes it a strong choice for real-time applications. Qwen2-VL-72B, meanwhile, is powerful and capable of handling more complex and nuanced tasks, but it fell short in some areas of this evaluation, and its larger size demands more advanced hardware.

The comparison between the two models highlights that bigger is not always better. Pixtral 12B demonstrates that smaller, well-optimized models can outperform larger ones in certain contexts, especially when speed and accessibility are critical.

Key points

- Pixtral 12B shines in speed and accuracy, making it suitable for real-time applications and general tasks where fast and efficient results are essential.

- Qwen2-VL-72B is best suited for complex, high-end creative tasks, but its size and resource demands may limit accessibility for everyday users.

- Pixtral outperformed Qwen2 on 3 out of 4 tasks, showing that model size is not the only factor determining performance.

- Real-world use cases such as marketing, mobile apps, and design might benefit most from Pixtral's efficiency, while large-scale projects that need intricate detail may favor Qwen2.

Frequently Asked Questions

A. Pixtral 12B is designed for speed and efficiency in real-time image understanding, making it ideal for applications such as marketing and mobile apps.

A. Qwen2-VL-72B focuses on analyzing complex, highly detailed images, making it suitable for creative industries that work with intricate visuals.

A. Pixtral 12B can run on consumer-grade GPUs, while Qwen2-VL-72B requires high-end GPUs or cloud infrastructure.

A. Pixtral 12B outperformed Qwen2-VL-72B in 3 out of 4 tasks, demonstrating its accuracy and detail despite being smaller.

A. Although Pixtral 12B is primarily optimized for speed, it handles general tasks effectively, but it may not match Qwen2-VL-72B on highly detailed projects.

The media displayed in this article is not the property of Analytics Vidhya and is used at the discretion of the author.