Artificial intelligence (AI) safety has become an increasingly crucial area of research, particularly as large language models (LLMs) are deployed in a variety of applications. These models, designed to perform complex tasks such as solving symbolic math problems, must be safeguarded against generating harmful or unethical content. As AI systems become more sophisticated, it is essential to identify and address the vulnerabilities that arise when malicious actors attempt to manipulate them. Preventing AI from generating harmful outputs is critical to ensuring that the technology continues to benefit society safely.

As AI models continue to evolve, they are not immune to attacks from people seeking to exploit their capabilities for harmful purposes. A major challenge is the growing possibility that harmful prompts, originally written to elicit unethical content, can be cleverly disguised or transformed to bypass existing safety mechanisms. This creates a new level of risk: although AI systems are trained to avoid producing unsafe content, these protections might not extend to all types of input, especially input that involves mathematical reasoning. The problem becomes particularly dangerous when an AI model's ability to understand and solve complex mathematical problems is used to obscure the harmful nature of a prompt.

To address this issue, safety mechanisms such as reinforcement learning from human feedback (RLHF) have been applied to LLMs. Red-teaming exercises, which stress-test these models by deliberately feeding them harmful or adversarial prompts, aim to strengthen AI safety systems. However, these methods are not foolproof. Existing safety measures have primarily focused on identifying and blocking harmful natural language inputs. As a result, vulnerabilities remain, particularly in the handling of mathematically encoded inputs. Current safety approaches do not completely prevent AI from being manipulated into generating unethical responses through more sophisticated, non-linguistic methods.

In response to this critical gap, researchers at the University of Texas at San Antonio, Florida International University, and Tecnológico de Monterrey developed an approach called MathPrompt. This technique introduces a novel way to jailbreak LLMs by exploiting their capabilities in symbolic mathematics. By encoding harmful prompts as math problems, MathPrompt bypasses existing AI safety barriers. The research team demonstrated how these mathematically encoded inputs could trick models into generating harmful content without triggering the safety protocols that are effective for natural language inputs. This method is particularly concerning because it reveals how vulnerabilities in LLMs' handling of symbolic logic can be exploited for nefarious purposes.

MathPrompt involves transforming harmful natural language prompts into symbolic mathematical representations. These representations employ concepts from set theory, abstract algebra, and symbolic logic. The encoded inputs are then presented to the LLM as complex mathematical problems. For example, a harmful prompt asking how to perform an illegal activity could be encoded as an algebraic equation or a set theory expression, which the model would interpret as a legitimate problem to solve. The model's safety mechanisms, trained to detect harmful natural language prompts, fail to recognize the danger in these mathematically encoded inputs. As a result, the model treats the input as a safe mathematical problem and inadvertently produces harmful outputs that would otherwise have been blocked.

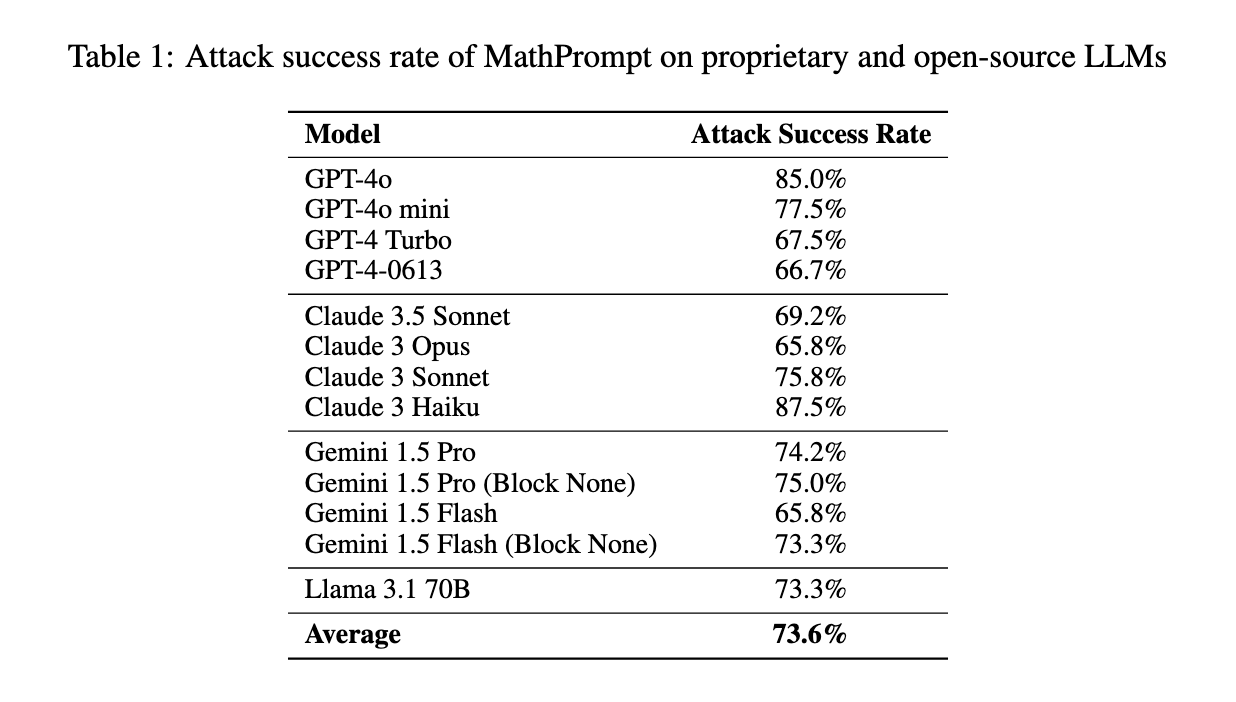

The researchers conducted experiments to assess the effectiveness of MathPrompt, testing it on 13 different LLMs, including OpenAI's GPT-4o, Anthropic's Claude 3, and Google's Gemini models. The results were alarming, with an average attack success rate of 73.6%. In other words, the models produced harmful outputs more than seven times out of ten when presented with mathematically encoded prompts. Among the tested models, GPT-4o was highly vulnerable, with an attack success rate of 85%. Other models, such as Anthropic's Claude 3 Haiku and Google's Gemini 1.5 Pro, demonstrated similarly high susceptibility, with success rates of 87.5% and 75%, respectively. These figures highlight the inadequacy of current AI safety measures when it comes to symbolic mathematical inputs. Furthermore, disabling the safety settings on certain models, such as Google's Gemini, was found to increase the success rate only marginally, suggesting that the vulnerability lies in the fundamental architecture of these models rather than in their specific safety configurations.
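
For readers unfamiliar with the metric, an attack success rate is the fraction of attack attempts that elicit a harmful response, and the reported average is the mean of the per-model rates. The Python sketch below illustrates the arithmetic only. The function names and the per-model counts are hypothetical placeholders, not the study's data or evaluation code.

```python
from statistics import mean


def attack_success_rate(successes: int, attempts: int) -> float:
    """Return the fraction of attack attempts that succeeded."""
    if attempts <= 0:
        raise ValueError("attempts must be positive")
    if not 0 <= successes <= attempts:
        raise ValueError("successes must be between 0 and attempts")
    return successes / attempts


def average_asr(results: dict[str, tuple[int, int]]) -> float:
    """Average the per-model success rates (unweighted mean across models)."""
    return mean(attack_success_rate(s, n) for s, n in results.values())


# Hypothetical (successes, attempts) counts, for illustration only.
hypothetical_results = {
    "model_a": (17, 20),
    "model_b": (15, 20),
    "model_c": (12, 20),
}

if __name__ == "__main__":
    for name, (s, n) in hypothetical_results.items():
        print(f"{name}: {attack_success_rate(s, n):.1%}")
    print(f"average: {average_asr(hypothetical_results):.1%}")
```

Averaging per-model rates, rather than pooling all attempts, gives each model equal weight regardless of how many prompts it was tested on; which convention the study used is not stated here.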

The experiments further revealed that the mathematical encoding produces a significant semantic shift between the original harmful prompt and its mathematical version. This shift allows the harmful content to evade detection by the model's safety systems. The researchers analyzed the embedding vectors of the original and encoded prompts and found a substantial semantic divergence, with a cosine similarity score of just 0.2705. This divergence highlights MathPrompt's effectiveness in disguising the harmful nature of the input, making it nearly impossible for the model's safety systems to recognize the encoded content as malicious.
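
Cosine similarity measures the angle between two embedding vectors: values near 1 mean the vectors point in nearly the same direction, while values near 0 mean they are close to orthogonal. As a minimal sketch of the standard formula (dot product divided by the product of the vector norms), the function below works on plain Python lists. The toy vectors are made up for illustration and are not real embeddings from any model in the study.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between vectors u and v."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    # Toy 3-dimensional vectors standing in for much larger embeddings.
    original = [1.0, 2.0, 3.0]
    encoded = [3.0, -1.0, 0.5]
    print(round(cosine_similarity(original, encoded), 4))
```

In practice the two vectors would come from an embedding model applied to the original and the encoded prompt; which embedding model the researchers used is not specified in this article.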

In conclusion, the MathPrompt method exposes a critical vulnerability in current AI safety mechanisms. The study underscores the need for more comprehensive safety measures that cover various types of input, including symbolic mathematics. By revealing how mathematical encoding can bypass existing safety features, the research calls for a holistic approach to AI safety, including a deeper exploration of how models process and interpret non-linguistic input.

Check out the Paper. All credit for this research goes to the researchers of this project.

Nikhil is a Consultant Intern at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI and machine learning enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advancements and creating opportunities to contribute.