Introduction

Gender detection from facial images is one of the many fascinating applications of computer vision. In this project, we combine OpenCV for face detection with the Roboflow API for gender classification, building a pipeline that locates faces, crops them, and predicts the gender of each one. We write and run the code in Python, specifically in Google Colab. This guide walks through the code step by step so that you can understand it and apply it in your own projects.

Learning Objectives

- Understand how to implement face detection using OpenCV's Haar Cascade.

- Learn how to integrate the Roboflow API for gender classification.

- Explore methods for processing and manipulating images in Python.

- Visualize detection results using Matplotlib.

- Develop practical skills in combining AI and computer vision for real-world applications.

This article was published as part of the Data Science Blogathon.

How to detect gender using OpenCV and Roboflow in Python?

Let’s learn how to use OpenCV and Roboflow in Python for gender detection:

Step 1: Import libraries and load images

The first step is to import the necessary libraries. We use OpenCV for image processing, NumPy to handle arrays, and Matplotlib to visualize the results. We also upload an image containing the faces we want to analyze.

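# In Colab, install the Roboflow client first: !pip install inference-sdk
from google.colab import files
import cv2
import numpy as np
from matplotlib import pyplot as plt
from inference_sdk import InferenceHTTPClient

# Upload image
uploaded = files.upload()

# Load the image (if several files are uploaded, the last one is kept)
for filename in uploaded.keys():
    img_path = filename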

In Google Colab, the files.upload() function lets users upload files, such as images, from their local machine to the Colab environment. After the upload, the files are stored in a dictionary called uploaded, where the keys are the file names. A for loop then extracts the file name, which serves as the image path for further processing (if several files are uploaded, the last one is used). For the image processing itself, OpenCV detects faces and draws bounding boxes around them, while Matplotlib visualizes the results, including the annotated image and the cropped faces.
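To make the upload step more concrete, here is a minimal sketch of how a file name could be picked from the dictionary that files.upload() returns. The helper name `pick_image_path` and the list of extensions are illustrative assumptions, not part of the original code, and a plain dictionary stands in for the Colab upload so the snippet also runs outside Colab.

```python
from pathlib import Path

# Assumption for this sketch: the extensions we are willing to treat as images.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def pick_image_path(uploaded):
    """Return the first uploaded file name that looks like an image, or None."""
    for filename in uploaded.keys():
        if Path(filename).suffix.lower() in IMAGE_EXTENSIONS:
            return filename
    return None


# Stand-in for the dict returned by files.upload(): {file name: file bytes}.
fake_upload = {"notes.txt": b"hello", "family.JPG": b"\xff\xd8\xff"}
print(pick_image_path(fake_upload))
```

Filtering by extension is only one possible choice; it simply avoids passing a non-image file to cv2.imread() by accident.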

Step 2: Loading the Haar cascade model for face detection

Next, we load OpenCV’s Haar Cascade model, which is pre-trained to detect faces. This model scans the image for patterns that resemble human faces and returns their coordinates.

# Load the Haar Cascade model for face detection

face_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')

Haar cascades are a classic and widely used approach to object detection. The classifier looks for edges, textures, and patterns associated with the object (in this case, faces). OpenCV provides a pre-trained face detection model, which is loaded using `CascadeClassifier`.
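The pattern matching behind a Haar cascade rests on simple rectangular features evaluated over an integral image. The following NumPy sketch illustrates one two-rectangle feature. It is a simplified illustration of the idea, not OpenCV's internal implementation, and the function names are made up for this example.

```python
import numpy as np


def integral_image(gray):
    """Cumulative sums with a zero border so any rectangle sum needs four lookups."""
    ii = np.zeros((gray.shape[0] + 1, gray.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = gray.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the pixels in the rectangle with top-left (x, y), width w, height h."""
    return ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x]


def two_rect_feature(ii, x, y, w, h):
    """Left half minus right half: a large value suggests a vertical edge."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


# Tiny 4x4 "image": bright left half, dark right half.
patch = np.array([[200, 200, 10, 10]] * 4, dtype=np.uint8)
ii = integral_image(patch)
print(two_rect_feature(ii, 0, 0, 4, 4))
```

A trained cascade combines many such features in stages, which is what lets it reject most non-face regions quickly.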

Step 3: Face detection in the image

We load the uploaded image and convert it to grayscale, since the Haar cascade works on intensity values and a single channel simplifies detection. We then use the face detector to find faces in the image.

# Load the image and convert to grayscale
img = cv2.imread(img_path)
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# Detect faces in the image
faces = face_cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5, minSize=(30, 30))

- Loading and converting images:

  - Use cv2.imread() to load the uploaded image.

  - Convert the image to grayscale with cv2.cvtColor() to reduce complexity and improve detection.

- Face Detection:

  - Use detectMultiScale() to find faces in the grayscale image.

  - The function rescales the image and checks different regions for facial patterns.

  - Parameters such as scaleFactor and minNeighbors adjust the sensitivity and accuracy of detection.
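To give a feel for what scaleFactor controls, the sketch below lists the image sizes that a multi-scale search would visit when the image is shrunk by a fixed factor at each step. This is a rough illustration under stated assumptions: the function `pyramid_sizes` is hypothetical, the 24-pixel detector window is assumed for this example, and OpenCV's internal rounding and stopping rules may differ.

```python
def pyramid_sizes(width, height, scale_factor=1.1, window=24):
    """List the (width, height) of each shrunken image until it is smaller than the window."""
    if scale_factor <= 1.0:
        raise ValueError("scale_factor must be greater than 1")
    sizes = []
    scale = 1.0
    while True:
        w, h = int(width / scale), int(height / scale)
        if w < window or h < window:
            break
        sizes.append((w, h))
        scale *= scale_factor
    return sizes


# A smaller scale factor means more levels to scan: slower, but finer-grained.
print(len(pyramid_sizes(640, 480, scale_factor=1.1)))
print(len(pyramid_sizes(640, 480, scale_factor=1.3)))
```

In practice, a value close to 1 (such as 1.05) tends to find more faces at the cost of speed, while a larger value is faster but may skip faces whose size falls between two levels.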

Step 4: Setting up the Gender Detection API

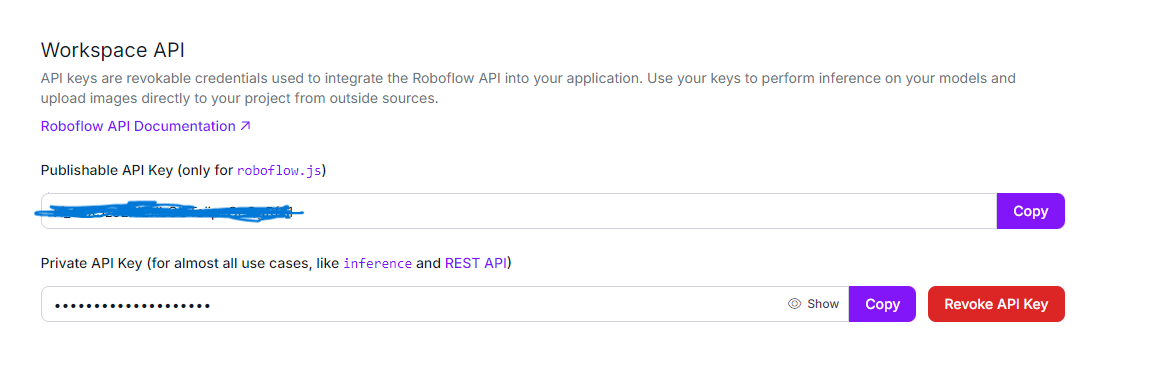

Now that we have detected the faces, we initialize the Roboflow API using InferenceHTTPClient to predict the gender of each detected face.

# Initialize InferenceHTTPClient for gender detection
CLIENT = InferenceHTTPClient(
    api_url="https://detect.roboflow.com",
    api_key="USE_YOUR_API"  # replace with your own Roboflow API key
)

InferenceHTTPClient simplifies interaction with Roboflow pre-trained models by configuring a client with the Roboflow URL and API key. This setup allows requests to be sent to the gender detection model hosted on Roboflow. The API key serves as a unique identifier for authentication, allowing secure access to and use of the Roboflow API.
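Because the API key authenticates your account, you may prefer not to hard-code it in a shared notebook. One common pattern, sketched below, is to read it from an environment variable. The variable name `ROBOFLOW_API_KEY` and the helper `load_api_key` are assumptions made for this example, not names required by Roboflow.

```python
import os


def load_api_key(env_var="ROBOFLOW_API_KEY"):
    """Read the API key from an environment variable and fail early if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before running.")
    return key


# Demo only: a placeholder value so the sketch runs; set the real key outside your code.
os.environ.setdefault("ROBOFLOW_API_KEY", "demo-key-not-real")
api_key = load_api_key()
# The value could then be passed on, e.g. InferenceHTTPClient(api_url=..., api_key=api_key).
```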

Step 5: Processing each detected face

We loop through each detected face, draw a rectangle around it, and crop the face image for further processing. Each cropped face image is temporarily saved and sent to the Roboflow API, where the gender-detection-qiyyg/2 model is used to predict the gender.

The gender-detection-qiyyg/2 model is a pre-trained deep learning model optimized to classify gender as male or female based on facial features. It provides predictions with a confidence score, which indicates how confident the model is about the classification. The model is trained on a robust dataset, allowing it to make accurate predictions on a wide range of facial images. The API returns these predictions, and we use them to label each face with the predicted gender and confidence level.

# Initialize face count
face_count = 0

# List to store cropped face images with labels
cropped_faces = []

# Process each detected face
for (x, y, w, h) in faces:
    face_count += 1

    # Draw rectangles around the detected faces
    cv2.rectangle(img, (x, y), (x + w, y + h), (255, 0, 0), 2)

    # Extract the face region
    face_img = img[y:y+h, x:x+w]

    # Save the face image temporarily
    face_img_path = "temp_face.jpg"
    cv2.imwrite(face_img_path, face_img)

    # Detect gender using the InferenceHTTPClient
    result = CLIENT.infer(face_img_path, model_id="gender-detection-qiyyg/2")

    if 'predictions' in result and result['predictions']:
        prediction = result['predictions'][0]
        gender = prediction['class']
        confidence = prediction['confidence']

        # Label the rectangle with the gender and confidence
        label = f'{gender} ({confidence:.2f})'
        cv2.putText(img, label, (x, y - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.8, (255, 0, 0), 2)

        # Add the cropped face with label to the list
        cropped_faces.append((face_img, label))

For each detected face, the code draws a bounding box using cv2.rectangle() to visually highlight the face in the image. It then crops the face region using array slicing (face_img = img[y:y+h, x:x+w]), isolating it for further processing. After temporarily saving the cropped face, the code sends it to the Roboflow model via CLIENT.infer(), which returns the gender prediction along with a confidence score. Finally, it adds these results as text labels above each face using cv2.putText(), providing a clear and informative overlay.
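The response handling can also be isolated in a small helper, which makes it easier to deal with empty or low-confidence results. The sketch below assumes the response is a dictionary with a 'predictions' list whose items carry 'class' and 'confidence', as in the code above. The helper names and the `min_confidence` threshold are hypothetical additions for illustration.

```python
def top_prediction(result, min_confidence=0.5):
    """Return (label, confidence) for the most confident prediction, or None."""
    predictions = result.get("predictions") or []
    if not predictions:
        return None
    best = max(predictions, key=lambda p: p["confidence"])
    if best["confidence"] < min_confidence:
        return None
    return best["class"], best["confidence"]


def describe(result, min_confidence=0.5):
    """Format the top prediction the same way as the label drawn on the image."""
    top = top_prediction(result, min_confidence)
    return "unknown" if top is None else f"{top[0]} ({top[1]:.2f})"


# Hand-written sample in the assumed response shape (not real API output).
sample = {"predictions": [{"class": "female", "confidence": 0.91},
                          {"class": "male", "confidence": 0.09}]}
print(describe(sample))
```

Returning "unknown" below the threshold is a design choice for this sketch; depending on the application, it may be preferable to skip such faces entirely.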

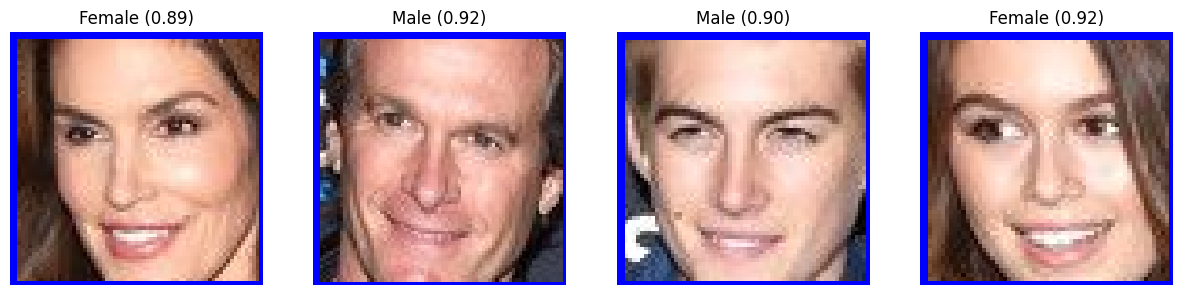

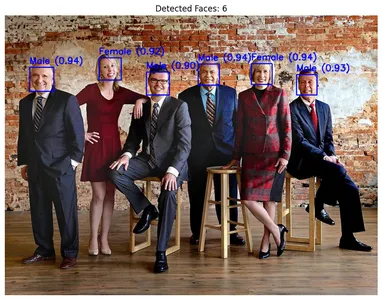

Step 6: Viewing the results

Finally, we visualize the result. We first convert the image from BGR to RGB (since OpenCV uses BGR by default), then we display the detected faces and gender predictions. After that, we display the individual cropped faces with their respective labels.

# Convert image from BGR to RGB for display
img_rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

# Display the image with detected faces and gender labels
plt.figure(figsize=(10, 10))
plt.imshow(img_rgb)
plt.axis('off')
plt.title(f"Detected Faces: {face_count}")
plt.show()

# Display each cropped face with its label horizontally
if cropped_faces:
    # squeeze=False keeps axes two-dimensional even when only one face was found
    fig, axes = plt.subplots(1, len(cropped_faces), figsize=(15, 5), squeeze=False)
    for i, (face_img, label) in enumerate(cropped_faces):
        face_rgb = cv2.cvtColor(face_img, cv2.COLOR_BGR2RGB)
        axes[0, i].imshow(face_rgb)
        axes[0, i].axis('off')
        axes[0, i].set_title(label)
    plt.show()

- Image conversion: Since OpenCV uses BGR format by default, we convert the image to RGB using cv2.cvtColor() for correct color visualization in Matplotlib.

- Showing results:

  - We use Matplotlib to display the image with the detected faces and gender labels on top of them.

  - We also show each cropped face image and the predicted gender label in a separate subplot.
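To see what the BGR-to-RGB conversion actually does, the sketch below reverses the channel axis with plain NumPy slicing, which for a three-channel image has the same effect as cv2.cvtColor() with cv2.COLOR_BGR2RGB. The helper name `bgr_to_rgb` is made up for this example.

```python
import numpy as np


def bgr_to_rgb(image):
    """Reverse the last (channel) axis: B, G, R becomes R, G, B."""
    return image[..., ::-1]


# One pure-blue pixel in OpenCV's BGR order.
pixel_bgr = np.array([[[255, 0, 0]]], dtype=np.uint8)
pixel_rgb = bgr_to_rgb(pixel_bgr)
print(pixel_rgb[0, 0].tolist())
```

This also explains why the color (255, 0, 0) used for the rectangles and labels appears blue: OpenCV reads the tuple in BGR order.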


Conclusion

In this guide, we built a gender detection system using OpenCV and Roboflow in Python. With OpenCV handling face detection and Roboflow handling gender prediction, the system can locate faces in an image and classify the gender of each one. Matplotlib rounds out the project by providing clear visualizations of the results. The project shows how these technologies can be combined and demonstrates their practical use for gender detection tasks.

Key points

- The project demonstrates an effective method for detecting and classifying gender from images using a pre-trained AI model. The model classifies gender with high confidence scores, which points to its reliability.

- By combining tools like Roboflow for AI inference, OpenCV for image processing, and Matplotlib for visualization, the project brings different technologies together to achieve its goal.

- The system's ability to distinguish and classify the gender of different people in a single image highlights its strength and flexibility, making it suitable for various applications.

- Using a pre-trained model supports high prediction accuracy, as reflected in the confidence scores in the results. This accuracy is crucial for applications requiring reliable gender classification.

- The project uses visualization techniques to annotate images with detected faces and predicted genders. This makes the results more interpretable and valuable for further analysis.


Frequently Asked Questions

Q1. What is the purpose of this project?

A. The project aims to detect and classify gender from images using artificial intelligence. It leverages pre-trained models to identify and label the gender of people in photographs.

Q2. Which tools and technologies were used?

A. The project used a Roboflow gender detection model for AI inference, OpenCV for image processing, and Matplotlib for visualization. It also used Python for scripting and data handling.

Q3. How does the model detect gender?

A. The pipeline analyzes images to detect faces, and the model then classifies each detected face as male or female. It returns a confidence score for each prediction.

Q4. How accurate is the gender detection?

A. The model returns high confidence scores, which suggests reliable predictions. For example, the confidence scores on the results shown were above 80%.

The media displayed in this article is not the property of Analytics Vidhya and is used at the discretion of the author.