Editor's image

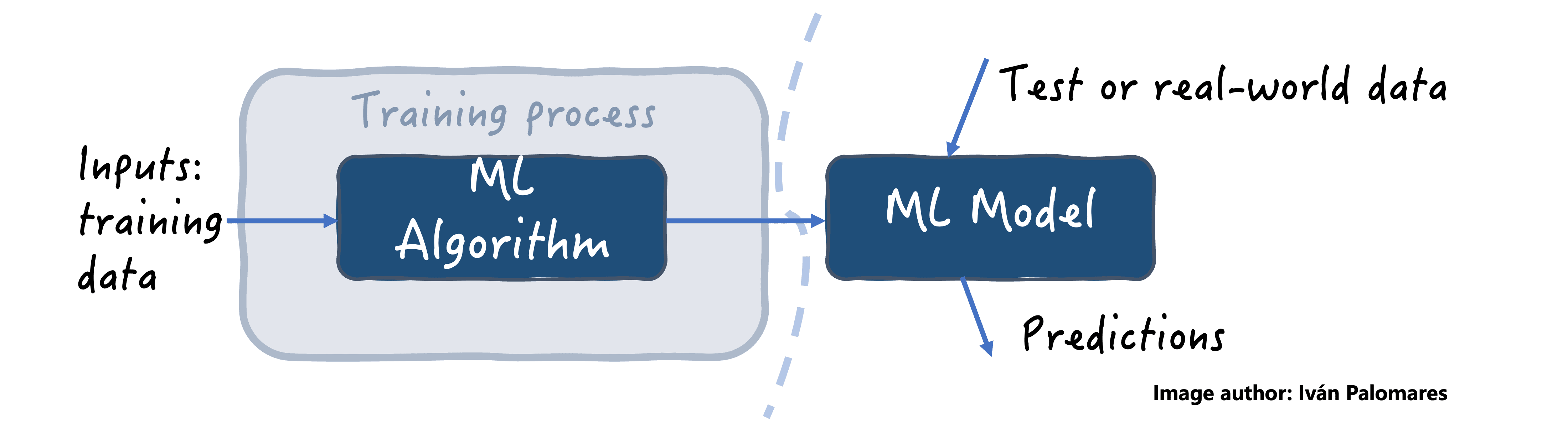

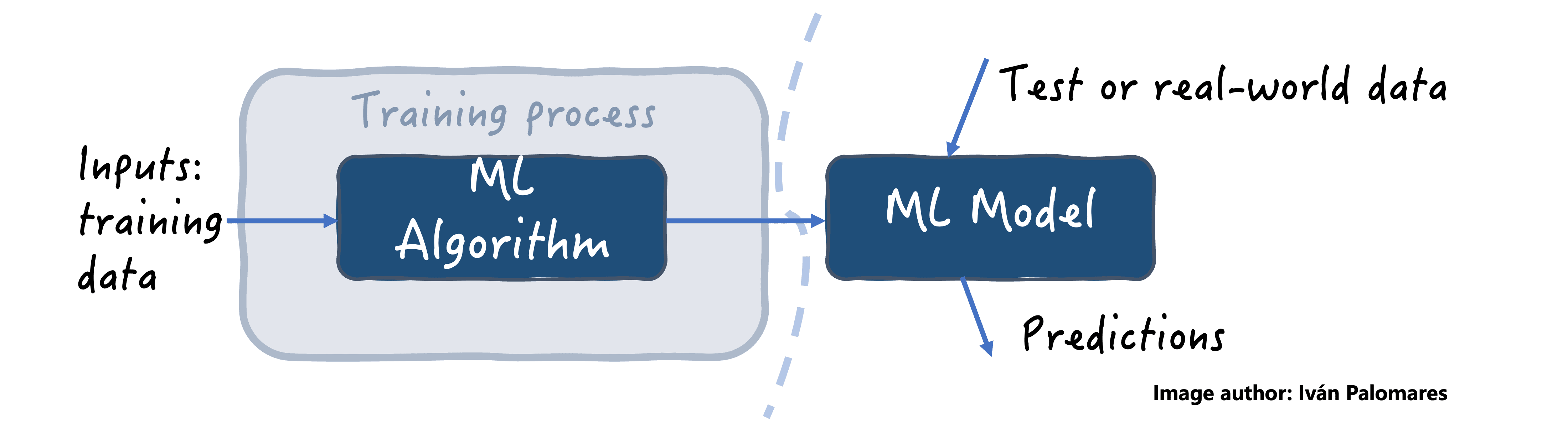

Machine learning (ML) algorithms are essential for creating intelligent models that learn from data to solve a particular task, such as making predictions, classifications, detecting anomalies, and more. Optimizing ML models involves fine-tuning the data and the algorithms that lead to the creation of such models to achieve more accurate and efficient results, and improve their performance in the face of new or unexpected situations.

The following list summarizes the five key tips for optimizing the performance of ML algorithms, more specifically, optimizing the accuracy or predictive power of the resulting ML models. Let’s take a look.

1. Preparing and selecting the right data

Before training an ML model, it is very important to preprocess the data used to train it: cleaning the data, removing outliers, dealing with missing values, and scaling numerical variables when necessary. These steps often help improve the quality of the data, and high-quality data is often synonymous with high-quality ML models trained on it.

Furthermore, not all features of the data may be relevant to the model being created. Feature selection techniques help to identify the most relevant attributes that will influence the model results. Using only relevant features can help not only reduce the complexity of the model but also improve its performance.

2. Hyperparameter tuning

Unlike ML model parameters that are learned during the training process, hyperparameters are settings that we select before training the model, like buttons or gears on a dashboard that can be manually adjusted. Properly tuning hyperparameters by finding a setting that maximizes model performance on test data can significantly impact model performance — try experimenting with different combinations to find an optimal setting.

3. Cross validation

Implementing cross-validation is a smart way to increase the robustness of ML models and their ability to generalize to new, unseen data once they are deployed for real-world use. Cross-validation involves splitting the data into multiple subsets or sets and using different training/testing combinations on those sets to test the model under different circumstances and consequently get a more reliable picture of its performance. It also reduces the risks of overfitting, a common problem in ML whereby the model has “memorized” the training data instead of learning from it, thus having a hard time generalizing when exposed to new data that looks even slightly different than the instances it memorized.

4. Regularization techniques

The problem of overfitting is sometimes caused by creating an overly complex ML model. Decision tree models are a clear example where this phenomenon is easy to detect: an overly large decision tree with dozens of levels of depth may be more prone to overfitting than a simpler tree with a smaller depth.

Regularization is a very common strategy to overcome the problem of overfitting and thus make ML models more generalizable to any real data. It adapts the training algorithm itself by adjusting the loss function used to learn from errors during training, so that “simpler paths” to the final trained model are encouraged and “more sophisticated” ones are penalized.

5. Ensemble methods

Strength in numbers: This age-old motto is the principle behind ensemble techniques, which consist of combining multiple ML models through strategies such as bagging, boosting or stacking, capable of significantly increasing the performance of their solutions compared to that of a single model. Random Forests and XGBoost are common ensemble-based techniques that are known to perform comparably to deep learning models for many predictive problems. By leveraging the strengths of individual models, ensembles can be the key to building a more accurate and robust predictive system.

Conclusion

Optimizing machine learning algorithms is perhaps the most important step in creating accurate and efficient models. By focusing on data preparation, hyperparameter tuning, cross-validation, regularization, and ensemble methods, data scientists can significantly improve the performance and generalization of their models. Try these techniques to not only improve predictive power, but also help create more robust solutions capable of tackling real-world challenges.

Ivan Palomares Carrascosa is a leader, writer, speaker, and advisor on ai, machine learning, deep learning, and law. He trains and guides others to leverage ai in the real world.